You run on a server platform, which has had fast buses even in the past. I use desktop-class PCs, so there can be a big difference in bus speed.

i was a bit surprised when i was checking up on the speeds… i mean 400gbit bus speed… ofc there are two cpus so that already seems to double up the numbers.

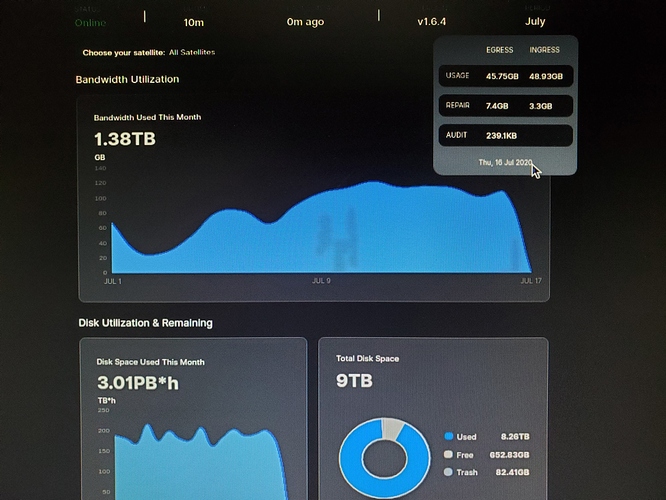

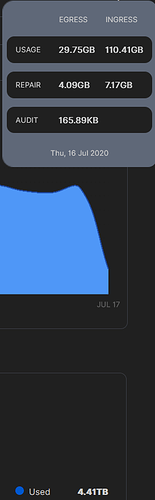

16Jul2020:

Note: Lost power unexpectedly at home around 5:45 pm (2145 GMT) for ~3 hours. And that’s why you have a UPS!

Node 1:

Node 2:

if the past is any indication then i should expect maybe 8 hours of downtime over the next 60 years… not including the ones i created

i’m 95% of the risk to the system really lol

My egress went down.

Egress reverting to average here.


Like a surgeon cutting veins:

When you prepare to add disks…

- prepare the position, unwrap all disks, straighten cables, prepare screws…

- place them in proper order of use, check disks, cables, screws, … screwdrivers too

- shut down the node.

- quickly screw in new drives, connect cables,

- power up

- check the bios

- boot

- start the system,

- start dockers

- check elapsed time… 10 minutes tops

7h 50m left for accidental power loss
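The budget arithmetic above can be sketched in a few lines. This is only a rough illustration: the 8 hours, 60 years and 10 minutes come straight from the posts, while the function names and the 365.25-day year are assumptions made for the example.

```python
from datetime import timedelta

# Figures taken from the posts above; rough guesses, not measurements.
BUDGET = timedelta(hours=8)          # expected downtime over the whole period
PERIOD_YEARS = 60                    # period the budget is spread over
MAINTENANCE = timedelta(minutes=10)  # planned shutdown to add disks


def remaining_budget(budget: timedelta, used: timedelta) -> timedelta:
    """Downtime left after subtracting what has already been spent."""
    return budget - used


def availability(downtime: timedelta, years: float) -> float:
    """Fraction of the period the node is up, assuming 365.25-day years."""
    period = timedelta(days=365.25 * years)
    return 1 - downtime / period


left = remaining_budget(BUDGET, MAINTENANCE)
print(left)  # 7:50:00
print(f"{availability(BUDGET, PERIOD_YEARS):.4%}")
```

Seen this way, a ten-minute planned shutdown barely dents the budget; it is the unplanned outages that would eat it.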

heheh clearly you haven’t tried booting my server… takes a good 10-15 minutes for a reboot and if you want to go past the bios you can expect it to take at least 20-25 minutes

and that’s with quick boot… something to do with memory and hdds… tho supposedly if i flash my HBAs to IT mode then i can bypass the individual hdds’ boot sequence and allow that to happen either in parallel or while the system is booting… tho there are usually many good reasons for such enterprise features and thus i’ve not turned it off…

i’m told when one gets into the 40-80 hdd servers with like 288GB ram then a reboot can take the better part of an hour… and i don’t really doubt it at this point… but that’s how it is with big systems… lots of things to keep track of… ofc this also means that the system can handle tons of parallel tasks…

also i was referring to @dragonhogan’s power outage… and why it seems silly for me to get a UPS… but who knows… not easy to predict the future

Maybe that’s the place where hot-swap shines? … I would be too afraid to hot-plug any disk into my desktop, but server hardware can probably handle it with ease…

Wow @striker43, 36$/month? Nice change, though some of that is repair traffic, so 10$ rather than 20$ per TB.
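To see how much the repair share matters, here is a minimal sketch. The 20$ and 10$ per TB rates are the ones quoted in the post; the traffic volumes and the function name are made up purely for illustration.

```python
# Rates per TB as quoted in the post above; the traffic volumes below are
# made-up placeholders, not anyone's real numbers.
EGRESS_RATE_USD_PER_TB = 20.0
REPAIR_RATE_USD_PER_TB = 10.0


def bandwidth_payout(egress_tb: float, repair_tb: float) -> float:
    """Payout in USD for outgoing traffic, split into normal egress and repair."""
    return egress_tb * EGRESS_RATE_USD_PER_TB + repair_tb * REPAIR_RATE_USD_PER_TB


# Same total traffic (2 TB), different mix:
print(bandwidth_payout(2.0, 0.0))  # 40.0
print(bandwidth_payout(1.5, 0.5))  # 35.0
```

So the same amount of outgoing traffic pays less the larger the repair share is.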

actually most sata controllers handle it just fine… i do that on my desktop all the time… tho you can run into motherboards / controllers that don’t like it… or where it’s turned off in the bios…

just remember not to begin throwing the hdd around before it’s had some 20-30sec to spin down… not sure exactly how long it takes… but because it’s a spinning metal disk they do have quite the angular momentum… so movement in the direction of the plane of the drive isn’t too bad… or up and down even… it’s the twisting that the angular momentum of the spindles will try to counter and thus slightly warp or put excessive strain on the mechanics…

but really disconnecting and reconnecting drives… well i’ve done that hundreds of times with no real issues i can attribute to it…

really making sure your computer is well placed, stable and isn’t exposed to bumps or vibrations is imo way more important… most operating systems also work fine with hotswapping drives… ofc sometimes one can run into some minor oddities… like say a drive connected in place of one you’ve just taken out can sometimes show the folders of the wrong drive… but it’s kinda rare and mostly happens if they’re the exact same model.

yeah hotswap is a very nice and useful feature… not too fond of it on the server as sometimes the bios might offset the boot drive… but that’s generally a configuration issue… but it really sucks if one has replaced a drive… and the boot drive is offset… then weeks may pass and then all of a sudden the server crashes and tries to reboot only to stall due to the boot drive not being configured right…

which is why i generally prefer multiple boot configurations on a mirror boot drive setup…

that way if one fails for whatever reason there is redundancy…

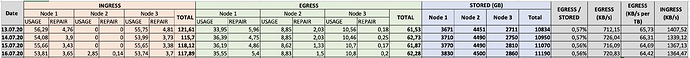

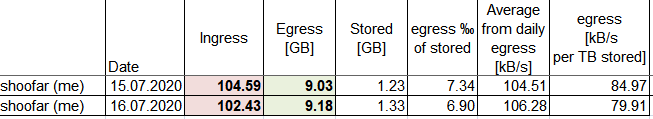

So far all nodes combined show a monthly value of 21.13$

It’s my best month so far and it’s only half over…

So staying in the network long term seems to be becoming very profitable.

Do not forget that most of the current traffic is dummy data sent by test satellites to fill our HDDs.

It looks like it, since 95% of my traffic is on europe-north and that’s a test satellite.

But don’t they have a few projects with other entities running on that satellite as well?

I don’t understand why people continue to make this statement…all traffic and stored data pays the same regardless if test or customer…

Of course it is paid, but talking about long-term profitability based on test data income is unrealistic.

I invite you to visit Storj’s github to see latest commits.

short term, the test vs customer distinction is irrelevant… but long term, test data isn’t sustainable for storj if they don’t get enough customers…

but really with this network on hand… it’s difficult to imagine a path that doesn’t make them money, so long as they are a bit picky and bide their time and develop the network into something most if not all want to use, even if they cannot afford it…

just like i want a 100tb ssd… i just cannot afford it… lol

but yeah i think the test vs customer debate is fairly useless… but i can see how it at least in theory would be unsustainable forever… if storj didn’t have income…

but that would basically make them the worst business people ever lol

but who knows… maybe they have a trump card…

For me it is simple: Storj has to be profitable for a majority of SNOs otherwise SNOs will leave. Therefore they will keep a certain test traffic available so the SNOs stay on board. On the other hand, the more SNOs leave the network, the more data is available for the remaining SNOs and therefore more egress, making STORJ more profitable for the remaining SNOs. It will create a balance that STORJlabs can influence with the amount of test traffic.
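A toy sketch of that balance, under a strong and purely illustrative assumption: total egress (customer plus test) is spread evenly over all nodes. The traffic volumes, node counts and function name are hypothetical and are not Storj figures.

```python
def egress_per_node(customer_tb: float, test_tb: float, nodes: int) -> float:
    """Monthly egress per node if total traffic is spread evenly across nodes."""
    if nodes <= 0:
        raise ValueError("nodes must be positive")
    return (customer_tb + test_tb) / nodes


# Hypothetical numbers: 1000 TB of customer egress, 4000 TB of test egress.
for nodes in (10_000, 8_000, 5_000):
    print(nodes, egress_per_node(1000, 4000, nodes))
```

In this simplified picture, fewer nodes means more egress for each remaining node, and the test traffic term is the knob that can be turned up or down.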

Of course it has to be profitable for STORJlabs too in the long run but if it isn’t and the test traffic drops too much, too many SNOs could leave the network and then their whole business ends anyway.

So while we cannot completely count on current earnings staying this way, I am confident that the earnings will stay acceptable for most SNOs.

(Of course I can be a bit more relaxed because I only invested money that I earned from being a SNO. So even if the network suddenly shuts down, I made profit and still have my HDDs additionally.)