This is the current implementation, yes. We want to reduce the number of I/O operations, so we are trying to put all items under a folder named after a date.

This lets us skip checking every single item and simply purge all folders whose date names have expired.
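As a rough illustration of why day folders make the purge cheap, here is a minimal sketch. It assumes the day folders are named YYYY-MM-DD directly under the directory being scanned; the function name `expired_day_dirs` and the demo paths are hypothetical, and this is not the storagenode implementation.

```shell
#!/bin/sh
# Sketch only: list day folders whose name sorts before a cutoff date.
# Assumes folders are named YYYY-MM-DD; the function name is hypothetical.
expired_day_dirs() {
    trash_dir=$1
    cutoff=$2                      # e.g. 2024-04-06
    for dir in "$trash_dir"/????-??-??; do
        [ -d "$dir" ] || continue  # also skips the unexpanded pattern when nothing matches
        name=${dir##*/}
        # ISO dates sort lexicographically, so a string comparison is enough;
        # no piece inside the folder has to be inspected.
        if expr "$name" \< "$cutoff" >/dev/null; then
            printf '%s\n' "$dir"
        fi
    done
}

# Demo on a throwaway directory.
demo=$(mktemp -d)
mkdir "$demo/2024-04-05" "$demo/2024-04-06" "$demo/2024-04-13"
expired_day_dirs "$demo" 2024-04-06    # prints only the 2024-04-05 folder
rm -r "$demo"
```

With GNU date the cutoff could be computed as `date -d '7 days ago' +%F`; the `-d` option is GNU-specific.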

But it seems it did not go well…

There’s a .trash-uses-day-dirs-indicator in the trash directory; unfortunately my logging is set to FATAL.

I guess I could just delete the first folder (2024-04-13) and a restart will recreate the structure without nesting?

I get the reason why it needs to be separated by date, that’s not my point.

My point is why go into every satellite’s directory and then separate it by date?

The most optimal IO way would be to go into every date’s directory and then separate by satellite.

Your way: delete process needs to change into the trash directory for every satellite (4 changes), scan the date directories (8 reads), delete the oldest (1 delete).

My way: delete process needs to change into the trash directory (1 change), scan the date directory (8 reads), delete the oldest (1 delete).

See the difference?

This is a negligible difference.

With all due respect: how is 4 times doing the same thing negligible?

Because on top of those ~dozen operations you still need to recursively delete pieces, which is one file system operation per piece.
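To put the two positions side by side, the sketch below redoes the counts exactly as stated in the posts above (4 satellites, 8 day folders) and compares the difference with the per-piece removals. The function names are hypothetical, and the piece count is borrowed from a retain log line later in this thread purely for scale.

```shell
#!/bin/sh
# Directory-level operations as counted in the posts above (illustrative only).
ops_satellite_first() {   # $1 = satellites, $2 = day folders scanned
    echo $(( $1 + $2 + 1 ))   # N directory changes + reads + 1 delete
}
ops_date_first() {        # $1 = day folders scanned
    echo $(( 1 + $1 + 1 ))    # 1 directory change + reads + 1 delete
}

a=$(ops_satellite_first 4 8)   # 13
b=$(ops_date_first 8)          # 10
pieces=2890475                 # assumed: one removal per trashed piece

# Share of the total work that the layout choice accounts for.
awk -v a="$a" -v b="$b" -v p="$pieces" 'BEGIN {
    printf "extra operations: %d (%.4f%% of %d total)\n", a - b, 100 * (a - b) / (a + p), a + p
}'
```

Under these assumptions the layout choice changes 3 operations out of roughly 2.9 million.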

The system will handle that; we don’t have to bother with any logic of the kind "for every satellite (who are these satellites? do we check a database or scan a folder for subfolders? which is better?), go into its subdirectory and delete the directories older than 7 days".

Simpler, and how it should be done: go into the trash folder and delete the folders within it that are older than 7 days. Done; it scales up to dozens of satellites (well, if the rest of the system can keep up). Literally a code-once, never-deal-with-it-again sort of scenario (look up UFS on FreeBSD).

POSIX only guarantees that you can remove an empty directory. There is no syscall that does “remove recursively”. Besides, even if there was one, it would still need to iterate over all files internally.

find /my/path/ -type d -ctime +7 -exec rm -r {} \;

comes to mind

Run it under strace and see how many file system operations it actually performs.
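Where strace is not at hand, a rough lower bound can be had by counting directory entries, since each file needs its own unlink and each directory its own rmdir. The sketch below does that on a throwaway tree; `count_removals` and the file names are hypothetical.

```shell
#!/bin/sh
# Sketch: every entry under a tree (files, subdirectories, and the root itself)
# costs one removal call, so counting entries approximates the work of rm -r.
count_removals() {
    find "$1" | wc -l | tr -d ' '
}

# Throwaway tree: 1 day folder, 3 prefix folders, 2 pieces in each.
demo=$(mktemp -d)
for prefix in aa bb cc; do
    mkdir -p "$demo/2024-04-13/$prefix"
    : > "$demo/2024-04-13/$prefix/piece1"
    : > "$demo/2024-04-13/$prefix/piece2"
done

count_removals "$demo/2024-04-13"   # 1 + 3 + 6 = 10
rm -r "$demo"
```

On Linux with strace installed, something like `strace -f -c rm -r <dir>` prints a per-syscall summary for comparison.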

Not possible. Satellites are independent and operate separately from each other. Sure, currently the satellites on the trusted list are all managed by Storj Labs and run similarly, but the node software is written to support satellites from different independent owners as well. You can’t mix logic across satellites as a result. And @Toyoo is right: compared to the actual removal of files, these few extra operations are negligible.

On another note, many of my nodes are working hard on GC for US1 now. Including the one with the big discrepancy. Thanks @littleskunk for following up with the team on this for us. And thanks @elek for letting us know they were all sent today as well. Hope you all have a nice weekend.

And just for fun… an AI’s interpretation of what’s going on on my nodes right now.

Also seeing retain requests now, but it looks like my nodes might be too big to get any pieces deleted, since no pieces have been moved to the trash folders, and free space hasn’t gone up on any nodes.

So looking forward to bigger bloom filters.

root@server030:/disk102/storj/logs/storagenode# grep "Prepared to run a Retain request\|Moved pieces to trash during retain" server*.log

server003-v1.100.3-30003.log:2024-04-13T09:10:26+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server003-v1.100.3-30003.log:2024-04-13T20:39:44+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5785733, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38495932, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "11h29m17.416296485s", "Retain Status": "debug"}

server006-v1.100.3-30006.log:2024-04-13T08:38:28+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server006-v1.100.3-30006.log:2024-04-13T19:48:16+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5589354, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38909156, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "11h9m48.560654394s", "Retain Status": "debug"}

server007-v1.100.3-30007.log:2024-04-13T06:59:08+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server007-v1.100.3-30007.log:2024-04-13T18:21:04+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5681552, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38664946, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "11h21m56.25389628s", "Retain Status": "debug"}

server011-v1.100.3-30011.log:2024-04-13T06:38:09+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server011-v1.100.3-30011.log:2024-04-13T19:00:44+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5863651, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38399013, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "12h22m35.011207564s", "Retain Status": "debug"}

server015-v1.100.3-30015.log:2024-04-13T05:19:35+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server015-v1.100.3-30015.log:2024-04-13T16:33:54+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5739902, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38555163, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "11h14m19.792494959s", "Retain Status": "debug"}

server016-v1.100.3-30016.log:2024-04-13T09:14:53+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server016-v1.100.3-30016.log:2024-04-13T21:06:53+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5944690, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38298453, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "11h51m59.415735343s", "Retain Status": "debug"}

server020-v1.100.3-30020.log:2024-04-13T06:17:20+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server020-v1.100.3-30020.log:2024-04-13T18:33:11+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 6076704, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38231911, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "12h15m51.108527916s", "Retain Status": "debug"}

server025-v1.100.3-30025.log:2024-04-13T07:02:32+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server025-v1.100.3-30025.log:2024-04-13T20:22:32+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 6096240, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38170150, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "13h20m0.429476217s", "Retain Status": "debug"}

server026-v1.100.3-30026.log:2024-04-13T05:46:11+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server026-v1.100.3-30026.log:2024-04-13T17:21:40+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5936505, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38485878, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "11h35m29.111550649s", "Retain Status": "debug"}

server028-v1.100.3-30028.log:2024-04-13T05:47:16+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server028-v1.100.3-30028.log:2024-04-13T12:55:33+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 2329549, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 52877013, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "7h8m16.719432698s", "Retain Status": "debug"}

server029-v1.100.3-30029.log:2024-04-13T09:30:54+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server029-v1.100.3-30029.log:2024-04-13T17:15:14+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 2341448, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 52836997, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "7h44m20.250079733s", "Retain Status": "debug"}

server030-v1.100.3-30030.log:2024-04-13T06:55:15+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server030-v1.100.3-30030.log:2024-04-13T14:42:11+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 2319364, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 52909828, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "7h46m55.416101838s", "Retain Status": "debug"}

server031-v1.100.3-30031.log:2024-04-13T05:01:26+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server031-v1.100.3-30031.log:2024-04-13T15:15:24+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 3859353, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 45884675, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "10h13m58.027449589s", "Retain Status": "debug"}

server034-v1.100.3-30034.log:2024-04-13T06:27:41+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server034-v1.100.3-30034.log:2024-04-13T13:59:20+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 2463919, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 53518845, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "7h31m39.077858724s", "Retain Status": "debug"}

server035-v1.100.3-30035.log:2024-04-13T05:53:52+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server035-v1.100.3-30035.log:2024-04-13T13:13:32+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 2449021, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 53550953, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "7h19m39.850701072s", "Retain Status": "debug"}

server042-v1.100.3-30042.log:2024-04-13T08:26:31+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server042-v1.100.3-30042.log:2024-04-13T22:37:28+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5069263, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 35310431, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "14h10m56.690760105s", "Retain Status": "debug"}

server044-v1.100.3-30044.log:2024-04-13T05:34:21+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server044-v1.100.3-30044.log:2024-04-13T19:02:41+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 4886256, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 35649615, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "13h28m19.479088602s", "Retain Status": "debug"}

server050-v1.100.3-30050.log:2024-04-13T06:11:13+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server050-v1.100.3-30050.log:2024-04-13T19:50:35+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 4688683, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 40909869, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "13h39m22.372970217s", "Retain Status": "debug"}

server053-v1.100.3-30053.log:2024-04-13T07:28:58+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server053-v1.100.3-30053.log:2024-04-13T23:19:28+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 4988389, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 40250404, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "15h50m30.1451746s", "Retain Status": "debug"}

server054-v1.100.3-30054.log:2024-04-13T09:18:12+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server054-v1.100.3-30054.log:2024-04-14T01:08:52+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 4760727, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 40850918, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "15h50m39.921216882s", "Retain Status": "debug"}

server055-v1.100.3-30055.log:2024-04-13T07:04:02+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server055-v1.100.3-30055.log:2024-04-13T22:35:42+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 4746093, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 40786944, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "15h31m39.571108113s", "Retain Status": "debug"}

server056-v1.100.3-30056.log:2024-04-13T08:14:41+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server057-v1.100.3-30057.log:2024-04-13T08:20:34+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server058-v1.100.3-30058.log:2024-04-13T08:06:30+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server060-v1.100.3-30060.log:2024-04-13T05:58:18+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server060-v1.100.3-30060.log:2024-04-14T00:20:16+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 4994973, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 40212157, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "18h21m57.509402101s", "Retain Status": "debug"}

server064-v1.100.3-30064.log:2024-04-13T04:33:42+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server064-v1.100.3-30064.log:2024-04-13T12:43:25+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 4334298, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 41363659, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "8h9m42.897086502s", "Retain Status": "debug"}

server066-v1.100.3-30066.log:2024-04-13T05:36:36+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server066-v1.100.3-30066.log:2024-04-14T02:22:52+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 9705866, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 25603224, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "20h46m15.881106876s", "Retain Status": "debug"}

server067-v1.100.3-30067.log:2024-04-13T08:28:48+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server070-v1.100.3-30070.log:2024-04-13T05:37:42+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server070-v1.100.3-30070.log:2024-04-14T00:16:36+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 8355908, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 29267469, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "18h38m53.452148334s", "Retain Status": "debug"}

server072-v1.100.3-30072.log:2024-04-13T09:32:01+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server074-v1.100.3-30074.log:2024-04-13T05:19:35+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server074-v1.100.3-30074.log:2024-04-13T22:31:44+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 8681975, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 30752883, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "17h12m9.54635148s", "Retain Status": "debug"}

server075-v1.100.3-30075.log:2024-04-13T08:44:19+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server075-v1.100.3-30075.log:2024-04-13T22:52:53+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10994724, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 24512160, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "14h8m33.896156196s", "Retain Status": "debug"}

server077-v1.100.3-30077.log:2024-04-13T07:50:29+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server077-v1.100.3-30077.log:2024-04-13T21:59:21+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10459271, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22594190, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "14h8m51.55373842s", "Retain Status": "debug"}

server080-v1.100.3-30080.log:2024-04-13T07:39:54+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server080-v1.100.3-30080.log:2024-04-14T00:31:48+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10497989, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 23113328, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "16h51m54.735643118s", "Retain Status": "debug"}

server083-v1.100.3-30083.log:2024-04-13T07:43:11+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server083-v1.100.3-30083.log:2024-04-13T22:53:19+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10462107, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22963911, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "15h10m8.197717168s", "Retain Status": "debug"}

server084-v1.100.3-30084.log:2024-04-13T05:06:57+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server084-v1.100.3-30084.log:2024-04-13T08:33:19+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10409944, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22924555, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "3h26m22.099287107s", "Retain Status": "debug"}

server086-v1.100.3-30086.log:2024-04-13T06:49:51+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server086-v1.100.3-30086.log:2024-04-13T22:59:06+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10496484, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22897944, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "16h9m14.661107497s", "Retain Status": "debug"}

server088-v1.100.3-30088.log:2024-04-13T05:37:45+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server088-v1.100.3-30088.log:2024-04-13T20:17:46+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10530422, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22734531, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "14h40m0.514380627s", "Retain Status": "debug"}

server089-v1.100.3-30089.log:2024-04-13T09:53:09+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server089-v1.100.3-30089.log:2024-04-13T23:02:24+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10458621, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22886477, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "13h9m14.918130409s", "Retain Status": "debug"}

server091-v1.100.3-30091.log:2024-04-13T05:05:51+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server091-v1.100.3-30091.log:2024-04-13T05:25:15+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10463808, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22857653, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "19m24.084718482s", "Retain Status": "debug"}

server094-v1.100.3-30094.log:2024-04-13T05:26:28+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server094-v1.100.3-30094.log:2024-04-14T00:15:59+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10405851, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22482561, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "18h49m31.359374676s", "Retain Status": "debug"}

server098-v1.100.3-30098.log:2024-04-13T09:04:49+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server098-v1.100.3-30098.log:2024-04-13T19:45:58+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10466958, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22434535, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "10h41m8.682806317s", "Retain Status": "debug"}

server099-v1.100.3-30099.log:2024-04-13T08:28:49+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server099-v1.100.3-30099.log:2024-04-13T21:17:07+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10436668, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22406777, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "12h48m17.952908235s", "Retain Status": "debug"}

server101-v1.100.3-30101.log:2024-04-13T09:45:04+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server101-v1.100.3-30101.log:2024-04-13T21:52:13+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10421395, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22527574, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "12h7m9.193360179s", "Retain Status": "debug"}

server102-v1.100.3-30102.log:2024-04-13T06:10:07+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server102-v1.100.3-30102.log:2024-04-13T16:33:56+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10328711, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22241420, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "10h23m48.397729699s", "Retain Status": "debug"}

server106-v1.100.3-30106.log:2024-04-13T05:52:46+02:00 INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

server106-v1.100.3-30106.log:2024-04-13T14:37:14+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 10405724, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 22435655, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "8h44m27.77890821s", "Retain Status": "debug"}

Updated 06-05-2024 : Retain not moving files to trash · Issue #6945 · storj/storj · GitHub

Th3Van.dk
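For output like the above, a small filter can turn each completion line into a ratio that is easier to compare across nodes. This is a sketch: the field names are taken from the log lines above, while the function name `retain_summary` is hypothetical.

```shell
#!/bin/sh
# Sketch: summarise "Moved pieces to trash during retain" lines read from stdin.
# Field names come from the log lines above; the function name is hypothetical.
retain_summary() {
    grep 'Moved pieces to trash during retain' |
    sed -n 's/.*"Deleted pieces": \([0-9]*\).*"Pieces count": \([0-9]*\).*"Duration": "\([^"]*\)".*/\1 \2 \3/p' |
    awk '$2 > 0 { printf "%d of %d pieces (%.1f%%) in %s\n", $1, $2, 100 * $1 / $2, $3 }'
}

# One of the lines above as sample input.
retain_summary <<'EOF'
server003-v1.100.3-30003.log:2024-04-13T20:39:44+02:00 INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 5785733, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 38495932, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "11h29m17.416296485s", "Retain Status": "debug"}
EOF
```

In practice the input would be the same `grep` over `server*.log` shown above, piped into `retain_summary`.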

As I understand it, the fix has not been deployed in 1.99.3 yet.

d62e81b storagenode/retain: store bloomfilter on disk

So until v1.101 storagenode updates can interrupt the deletes by bloomfilter and if that happens they will not resume?

See the commit below. Recent improvements make it possible to:

- save bloom filter locally (and start from the beginning if it’s not finished).

- resume deletion process

Will this be deployed with version 1.101?

Does this include log output indicating that these processes have been resumed? That would be helpful for tracking that it is working.

What happens if the GC didn’t finish yet and the node receives a new bloom filter?

Does it cancel the previous GC run and start the new one? Or does it start a second instance of GC?

My guess is it will restart then and the processing of the bloom filter will not complete.

From the changes, it should survive restarts/reboots.

Sorry, it seems I missed the whole point. Could you please explain it to me?

This is how it works now, but it requires scanning the trash for every single piece.

So the idea is to place all old pieces under a nested folder representing the expiry date, and then remove everything under that folder regardless of scan results. This should speed up the process.

This is a very interesting question.

From the code, my guess is that the current BF will be overwritten. However, the new one should still include everything that should be kept on your node.

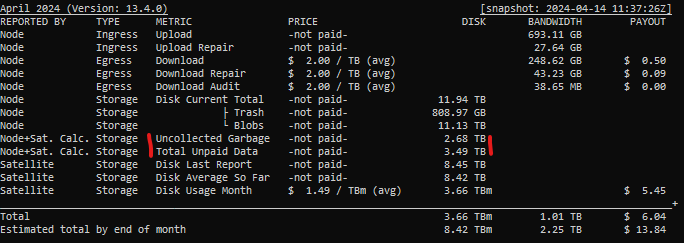

It looks like the GC runs are finished on my nodes, but I’m not impressed with the results unfortunately. Of course I was aware that it wouldn’t be able to achieve the intended 90% clean up of garbage, since it was limited by the bloom filter size limit, but it seems it only cleaned up less than 25% of garbage. That’s a lot worse than I was expecting (or maybe hoping).

As a result, this node still has almost 3TB of uncollected garbage.

@littleskunk is this to be expected when hitting the max BF size? If so, I think more urgency is required to implement the new way of sending larger BF’s.
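For a sense of scale, the textbook Bloom filter approximations can be applied to the numbers in this post. This is a sketch under loud assumptions: the hash count `k` and the piece count the satellite actually used are not stated anywhere in this thread, so `k = 1` and the node's own "Pieces count" are stand-ins, and the function names are hypothetical.

```shell
#!/bin/sh
# Standard Bloom filter approximations (textbook formulas, not storagenode code).
# bloom_fpr BYTES ELEMENTS HASHES -> approximate false-positive rate
bloom_fpr() {
    awk -v bytes="$1" -v n="$2" -v k="$3" 'BEGIN {
        m = bytes * 8                               # filter size in bits
        printf "%.2f\n", (1 - exp(-k * n / m)) ^ k
    }'
}
# bloom_bytes_needed ELEMENTS RATE -> bytes for that rate with an optimal hash count
bloom_bytes_needed() {
    awk -v n="$1" -v p="$2" 'BEGIN {
        printf "%.0f\n", -n * log(p) / (log(2) ^ 2) / 8
    }'
}

# Filter size and piece count from the log lines in this post; k = 1 is an assumption.
bloom_fpr 4100003 39879329 1        # prints 0.70
bloom_bytes_needed 39879329 0.1     # bytes needed for a 10% false-positive rate
```

A false-positive rate of p means roughly a fraction p of garbage pieces wrongly match the filter and stay on disk for that run. The result is very sensitive to the hash count, so the first figure should be read as an order-of-magnitude hint only.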

For context and to not rely on only my own tools, here is the dashboard for this node.

Edit: Just to make sure the process was actually done and didn’t crash partway through, I looked up the log lines.

2024-04-13T02:24:41Z INFO retain Prepared to run a Retain request. {"Process": "storagenode", "Created Before": "2024-04-03T17:59:59Z", "Filter Size": 4100003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2024-04-13T05:18:24Z INFO retain Moved pieces to trash during retain {"Process": "storagenode", "Deleted pieces": 2890475, "Failed to delete": 172, "Pieces failed to read": 0, "Pieces count": 39879329, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "2h53m42.837156035s", "Retain Status": "enabled"}

It failed to delete some pieces. Not sure why, but it’s a minimal amount that obviously wouldn’t lead to so much left behind.

Edit2: I’ve added 2 more metrics to my Earnings Calculator in a test version (release soon), to provide more insight.

This node currently stores 3.49TB of unpaid data vs 8.45TB paid. Not a great look.

Mine didn’t.

I had 1.96.6, and on 13.04.2024 at ~08:00 the node was terminated for being below the minimum version.

It was running a retain (with a filter size of 4100003) that started on 13.04.2024 at ~04:00 for a satellite whose ID ends in “S3” (is that US1 or EU1?).

Just before that version FATAL at ~08:00, the retain was canceled. A few hours later I manually installed version 1.99.3, and the node is back in operation but doing nothing, as it is full. I would like to be able to start that retain again somehow now.

(Windows GUI)

Is it possible to request the bloom filter again with some command?

Or do I have to wait another week to get another bloom filter?