I forgot they said there was a tool to rebuild hashtables from the extents/logs. I got used to running a filesystem check and maybe just tossing a couple bad .sj1 files and moving on with life…

I managed to fix some, but others give me an error, and the tool doesn’t throw out the bad file.

Processing 31/log-0000000000000031-00004fa2...

Processing 32/log-0000000000000032-00004fa4...

Processing 33/log-0000000000000033-00004fac...

Processing 34/log-0000000000000034-00000000...

Processing 35/log-0000000000000035-00000000...

unexpected fault address 0x7f7e7046c000

fatal error: fault

[signal SIGBUS: bus error code=0x2 addr=0x7f7e7046c000 pc=0x475cf3]

goroutine 1 gp=0xc000002380 m=2 mp=0xc000064808 [running]:

runtime.throw({0x6cdb73?, 0x11d0fa80?})

/usr/local/go/src/runtime/panic.go:1101 +0x48 fp=0xc0000e5690 sp=0xc0000e5660 pc=0x46dc68

runtime.sigpanic()

/usr/local/go/src/runtime/signal_unix.go:922 +0x10a fp=0xc0000e56f0 sp=0xc0000e5690 pc=0x46f3ca

runtime.memmove()

/usr/local/go/src/runtime/memmove_amd64.s:440 +0x513 fp=0xc0000e56f8 sp=0xc0000e56f0 pc=0x475cf3

At the end:

Counting 3a/log-000000000000003a-00004fa8...

Counting 3b/log-000000000000003b-00004faa...

Counting 3c/log-000000000000003c-00004fad...

Counting 3d/log-000000000000003d-00000000...

Counting 3e/log-000000000000003e-00000000...

platform: invalid argument

storj.io/storj/storagenode/hashstore/platform.mmap:30

storj.io/storj/storagenode/hashstore/platform.Mmap:16

main.openFile:37

main.(*cmdRoot).iterateRecords:148

main.(*cmdRoot).countRecords:212

main.(*cmdRoot).Execute:79

github.com/zeebo/clingy.(*Environment).dispatchDesc:129

github.com/zeebo/clingy.Environment.Run:41

main.main:29

runtime.main:283

When I restart the process, it rushes through and gives the same error.

Despite this error, some files worked earlier, but now it won’t give me any.

How do I fix this?

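Check the directory for zero-byte log files and delete them. The tool can’t handle such files and crashes. (The `platform: invalid argument` comes from the tool’s mmap call, and on Linux mapping a zero-length file fails with EINVAL, which is consistent with an empty log file.)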

How do I check for this under Linux? Is there any tool? I don’t want to look through each folder on several nodes. It would be great if Storj itself fixed this kind of issue in their tool, but I think hashstore is still very new.

For example, in bash:

`find /mnt/storagenode/storage/hashstore -type f -size 0`

The node should be stopped though. If you use docker, then also remove the container, because docker may revert changes made outside of the container while it was only stopped.
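To avoid looking into each folder on several nodes, the `find` command can be wrapped in a small loop. This is a minimal bash sketch, assuming each node’s hashstore directory is passed as an argument; the script name and mount paths in the usage line are hypothetical. It only lists zero-byte files unless `DELETE=1` is set, in which case GNU find’s `-delete` removes them. Stop the nodes first, as described above.

```bash
#!/usr/bin/env bash
# List (and optionally delete) zero-byte files under each hashstore directory
# given as an argument. Default is a dry run; set DELETE=1 to remove them.
find_empty_logs() {
  local dir
  for dir in "$@"; do
    if [ ! -d "$dir" ]; then
      echo "skipping $dir: not a directory" >&2
      continue
    fi
    if [ "${DELETE:-0}" = 1 ]; then
      # -print before -delete so every removed file is still reported
      find "$dir" -type f -size 0 -print -delete
    else
      find "$dir" -type f -size 0
    fi
  done
}

find_empty_logs "$@"
```

Usage, saved as a hypothetical `find-empty-logs.sh`: run `./find-empty-logs.sh /mnt/node1/storage/hashstore /mnt/node2/storage/hashstore` first to review the list, then repeat with `DELETE=1` in front once the output looks right.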

I’m sure it will get some updates. Storj developers may not have had much need to recover files internally… but with almost 30k nodes now, somebody will probably be trying to recover their hashstore tables every day. That tool is going to get used a lot!

I guess they run most, if not all, of the select nodes, but the hardware there seems to be much more reliable.

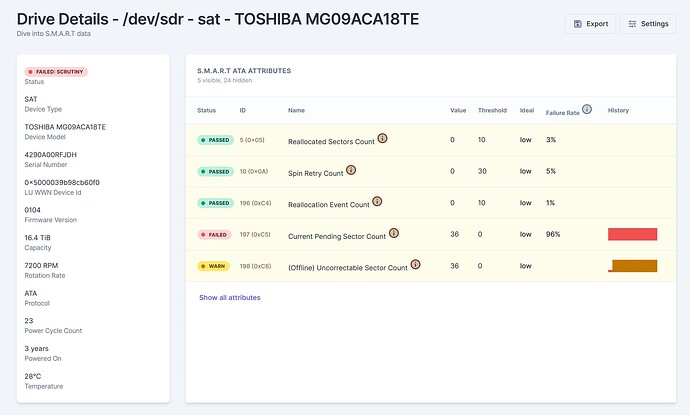

I have used the tool 4 or 5 times so far. The problems were caused by bad HDD sectors (all on Toshiba 18TB drives). I just copied all files to a new location with rsync. If a hashtable was damaged, I used the tool to write a new one. For damaged log files I tried ddrescue, but it did not recover anything that rsync had not already copied. With ZFS and no redundancy, any bad sector results in a whole block of missing data. However, all nodes survived, even with some of those 128K holes in the log files.

18TB drives should be pretty new, 4 years old or less. Disappointing to see Toshiba failing so badly. Do you use a UPS? Were the drives bought on the second-hand market?

I have 5-year-old Seagates that don’t show any problems.

All bought new over a period of about 2 years, so RMA is no problem. Sometimes they even replaced a bad 18TB with a 20TB.

I am using a lot of HDDs under the same conditions in 4U server cases with a UPS: Seagate, WD, and Toshiba, sizes from 12TB to 28TB. Only the MG09 18TB drives are dying like flies.

Right now I have another 3 on my desk ready for RMA.

The 18TB was the first one using MAMR technology, btw.

What are the symptoms of dying? I only had one with bad sectors, which I RMA’d, the other ones are running very well.

It is always an increasing number of bad sectors.
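One way to watch for a growing bad-sector count is to log the counters smartmontools reports and compare them over time. A minimal sketch, assuming `smartctl` is installed and the drive exposes the common ATA attributes 5 (Reallocated_Sector_Ct), 197 (Current_Pending_Sector) and 198 (Offline_Uncorrectable) in the standard table layout, where the raw value is the 10th column. The device names in the usage line are placeholders.

```bash
#!/usr/bin/env bash
# Print the raw bad-sector counters from `smartctl -A` output read on stdin.
# Assumes the standard ATA attribute table, where RAW_VALUE is the 10th column.
bad_sector_counts() {
  awk '$2 == "Reallocated_Sector_Ct" || $2 == "Current_Pending_Sector" || $2 == "Offline_Uncorrectable" { print $2, $10 }'
}

# Check every device passed as an argument (smartctl usually needs root).
for dev in "$@"; do
  echo "== $dev"
  smartctl -A "$dev" | bad_sector_counts
done
```

For example, `sudo ./bad-sectors.sh /dev/sda /dev/sdb` (hypothetical script name) run from a daily cron job makes it easy to see whether the numbers keep climbing on a particular drive.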

This one is still in use but will be replaced soon.

BTW: all my old MG09s have firmware 0104, while the latest replacements have 0107. Is there a way to flash new firmware to Toshiba drives?
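To compare firmware revisions across many drives (like the 0104 vs 0107 difference mentioned here), the identity section of `smartctl -i` can be summarised per device. This does not flash anything; it is a read-only sketch, assuming smartctl’s usual `Device Model:`, `Serial Number:` and `Firmware Version:` lines. The device names are placeholders.

```bash
#!/usr/bin/env bash
# Summarise model, serial and firmware from `smartctl -i` output read on stdin,
# printed as tab-separated fields.
drive_info() {
  awk -F': *' '
    /^Device Model:/     { model = $2 }
    /^Serial Number:/    { serial = $2 }
    /^Firmware Version:/ { fw = $2 }
    END { printf "%s\t%s\t%s\n", model, serial, fw }'
}

# One line per device passed as an argument (smartctl usually needs root).
for dev in "$@"; do
  printf '%s\t' "$dev"
  smartctl -i "$dev" | drive_info
done
```

Running it as `sudo ./drive-fw.sh /dev/sd?` (hypothetical script name) gives one line per drive, which can be sorted by the last column to spot which drives still run the older firmware.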

For the “normal” end user, I don’t think so. I also don’t know what benefit it would bring; bad sectors etc. are more likely a hardware failure.

I would like to update the firmware before errors occur. In my opinion there is something wrong with MAMR, and they might have fixed this by changing parameters in later firmware versions.

You jinxed it: my second MG09 has bad sectors too. Time to swap it out, do the next RMA, and get 5 years of warranty again.

All my MG10s have 0104 as well, and when I got two through RMA, they had 0105 and 0107.

I’ve not found any user-reachable tool that I could get to work.

HDDguru has some files: “HDD Firmware Downloads. PC-3000 Support Downloads. Data recovery and HDD repair tools”

And Dell, of all companies, has some as well: https://www.dell.com/support/home/da-dk/drivers/driversdetails?driverid=kk671&msockid=397e729ffc5d625236bb6411fde7635b

As far as I know, replacement drives only get the remaining warranty of the old drive. So you can get more than one replacement, but not forever.

Don’t you basically get a new one with a new S/N? My replacement was manufactured in 2025, so it’s pretty new.

New S/N, yes, but warranty is just a database entry, so they can simply transfer the remaining days. Although all my replacements look like new, I don’t think they are; refurbished is more likely.

Where are you based? In Europe, the entire warranty is reset once the RMA is finished. This is the law for all products.

I’ve heard of minimum-term seller warranties in Europe… but not that RMAs keep resetting it. Perhaps that’s just in certain countries (like Germany maybe)?

I haven’t heard of it in North America: replacement products just get however many days were left from the initial warranty.