Hello Community,

I see a lot of information on how to migrate to hashstore.

Is there a single document that covers all the options, settings and so on?

When do I need to restart the node? What do the environment variables do?

I will try to collect everything here and keep this thread updated:

For migration (passive or active), files need to be created or modified in your storage location:

/<storagelocation>/storage/hashstore/meta

Also note that the permissions of the `.migrate` and `.migrate_chore` files may be very restrictive. You might have to change the permissions on these files to allow writing, or make the changes as the file's owner.

Enable passive migration (requires version v1.119)

Info:

- WriteToNew sends all incoming uploads to the hashstore.

- TTLToNew sends only uploads with a TTL to the hashstore.

- ReadNewFirst checks the hashstore first for every download request, then the piecestore. Set it to true once you start using the hashstore.

- PassiveMigrate migrates any piece that is hit by a download request.

Steps:

1) cd /<storagelocation>/storage/hashstore/meta

2) Choose the satellites to migrate (one file per satellite; use one to four of the commands below).

3) Run the commands:

echo '{"PassiveMigrate":true,"WriteToNew":true,"ReadNewFirst":true,"TTLToNew":true}' > 121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6.migrate

echo '{"PassiveMigrate":true,"WriteToNew":true,"ReadNewFirst":true,"TTLToNew":true}' > 12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S.migrate

echo '{"PassiveMigrate":true,"WriteToNew":true,"ReadNewFirst":true,"TTLToNew":true}' > 12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs.migrate

echo '{"PassiveMigrate":true,"WriteToNew":true,"ReadNewFirst":true,"TTLToNew":true}' > 1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE.migrate
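The four commands above can also be run as one loop. This is a minimal sketch in POSIX sh; the function name `enable_passive_migration` is hypothetical, while the satellite IDs and the JSON settings are copied unchanged from the commands above.

```sh
# Hypothetical helper: writes the passive-migration settings (same JSON as the
# commands above) into one <satellite-id>.migrate file per satellite.
enable_passive_migration() {
  meta_dir="${1:-.}"   # defaults to the current directory
  settings='{"PassiveMigrate":true,"WriteToNew":true,"ReadNewFirst":true,"TTLToNew":true}'
  for sat in \
    121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6 \
    12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S \
    12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs \
    1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE
  do
    # echo adds a trailing newline, exactly like the single commands above
    echo "$settings" > "$meta_dir/$sat.migrate" || return 1
  done
}
```

For example: `enable_passive_migration /<storagelocation>/storage/hashstore/meta`. Remove satellite IDs from the list if you only want to migrate some of them.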

Enable active migration (requires version v1.120)

Info:

- Takes multiple days and uses a lot of CPU time.

- You can enable it for all satellites at the same time.

- The migration will then run through them one by one.

- Open questions: is only a single migration per satellite possible between node upgrades? Does it need a powerful CPU?

Steps:

1) cd /<storagelocation>/storage/hashstore/meta

2) Choose the satellites to migrate (one file per satellite; use one to four of the commands below).

3) Run the commands:

echo -n true > 121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6.migrate_chore

echo -n true > 12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S.migrate_chore

echo -n true > 12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs.migrate_chore

echo -n true > 1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE.migrate_chore
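As with passive migration, the four commands can be run as one loop. This is a minimal sketch in POSIX sh; the function name `enable_active_migration` is hypothetical and the satellite IDs are copied from the commands above.

```sh
# Hypothetical helper: writes "true" without a trailing newline (like echo -n)
# into one <satellite-id>.migrate_chore file per satellite.
enable_active_migration() {
  meta_dir="${1:-.}"   # defaults to the current directory
  for sat in \
    121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6 \
    12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S \
    12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs \
    1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE
  do
    # printf instead of echo -n: some sh implementations print "-n" literally
    printf '%s' true > "$meta_dir/$sat.migrate_chore" || return 1
  done
}
```

`printf '%s' true` writes the same four bytes as `echo -n true`, but behaves the same in every POSIX shell.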

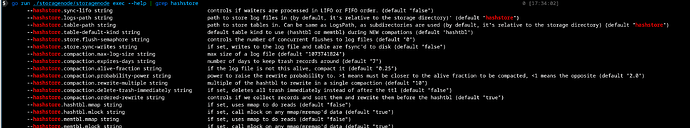

Config options as environment variables:

(Note: these will be changing to Docker command-line options in a future release after 135.5.)

- STORJ_HASHSTORE_COMPACTION_PROBABILITY_POWER=2 (default)

- STORJ_HASHSTORE_COMPACTION_REWRITE_MULTIPLE=10 (default)

- STORJ_HASHSTORE_MEMTABLE_MAX_SIZE=128MiB (default not confirmed)

- STORJ_HASHSTORE_TABLE_DEFAULT_KIND=hashtbl (default)

- STORJ_HASHSTORE_MEMTBL_MMAP=false (default)
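If you run the node with Docker, one way to pass these variables is an env file used with `docker run --env-file`. This is a minimal sketch, assuming the default values listed above as a starting point; the function name `write_hashstore_env` and the file name `hashstore.env` are hypothetical, and `STORJ_HASHSTORE_MEMTABLE_MAX_SIZE` is left out because its default is not confirmed.

```sh
# Hypothetical helper: writes the hashstore variables listed above, with the
# default values given there, into a Docker env file (one VAR=value per line).
write_hashstore_env() {
  env_file="${1:-hashstore.env}"
  cat > "$env_file" <<'EOF'
STORJ_HASHSTORE_COMPACTION_PROBABILITY_POWER=2
STORJ_HASHSTORE_COMPACTION_REWRITE_MULTIPLE=10
STORJ_HASHSTORE_TABLE_DEFAULT_KIND=hashtbl
STORJ_HASHSTORE_MEMTBL_MMAP=false
EOF
}
```

Edit the values you want to change, then add `--env-file hashstore.env` to your existing `docker run` command (before the image name).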

Config options as command-line flags (Docker):

- --hashstore.table-path: if you have an SSD, put the tables on it, but monitor its wear.

- --hashstore.table-default-kind: switch to memtbl only if you have at least 1.3 GB of RAM per TB of stored data.

- --hashstore.hashtbl.mmap: if you know what mmap is and have neither enough memory nor an SSD, but still want to check whether it makes hashtbl faster.

- --hashstore.compaction.rewrite-multiple: if you have enough space, one compaction can rewrite more logs. You need * free space in addition to the used space.

- --hashstore.compaction.alive-fraction: if you are fine with heavier IO in exchange for less stored garbage (I use 0.65 on some nodes).

Compaction

Compaction is the process of removing dead bytes from the log files. It runs once per day for each DB.

Most of the time you don’t need to touch the configuration of the compaction.

But if you are really interested in tuning it manually, the most important options are:

--hashstore.compaction.alive-fraction float

--hashstore.compaction.probability-power float

--hashstore.compaction.rewrite-multiple float

They are explained here:

hashtbl vs memtbl

- Info: memtbl needs at least 1.3 GB of RAM per TB of stored data.

- --hashstore.table-default-kind memtbl

Use an SSD

- Create a directory on the SSD: mkdir //table

- Point --hashstore.table-path at that directory.
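The memory rule of thumb is simple arithmetic: required RAM in GB = stored TB × 1.3. This is a minimal sketch of that calculation in POSIX sh with awk; the function name `memtbl_min_ram_gb` is hypothetical and the factor 1.3 is the one given in this thread.

```sh
# Hypothetical helper: applies the rule of thumb from this thread
# (memtbl needs at least 1.3 GB of RAM per TB stored) and prints
# the estimate in GB with one decimal.
memtbl_min_ram_gb() {
  awk -v tb="$1" 'BEGIN { printf "%.1f\n", tb * 1.3 }'
}
```

For example, `memtbl_min_ram_gb 10` prints `13.0`, so a node storing 10 TB would need at least about 13 GB of RAM for memtbl.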

How to check the log files

docker logs storagenode 2>&1 | grep "piecemigrate:chore"
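To watch the migration live instead of dumping the whole log, the same filter can be combined with `docker logs --follow`. This is a minimal sketch; the function name `follow_migration_log` is hypothetical, the container name `storagenode` is taken from the command above, and starting from the last 100 lines is an arbitrary choice.

```sh
# Hypothetical helper: follows the container log (starting with the last 100
# lines) and shows only the piece-migration chore entries, like the grep above.
follow_migration_log() {
  container="${1:-storagenode}"   # assumed container name
  docker logs --follow --tail 100 "$container" 2>&1 | grep "piecemigrate:chore"
}
```

Run `follow_migration_log` and stop it with Ctrl+C.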

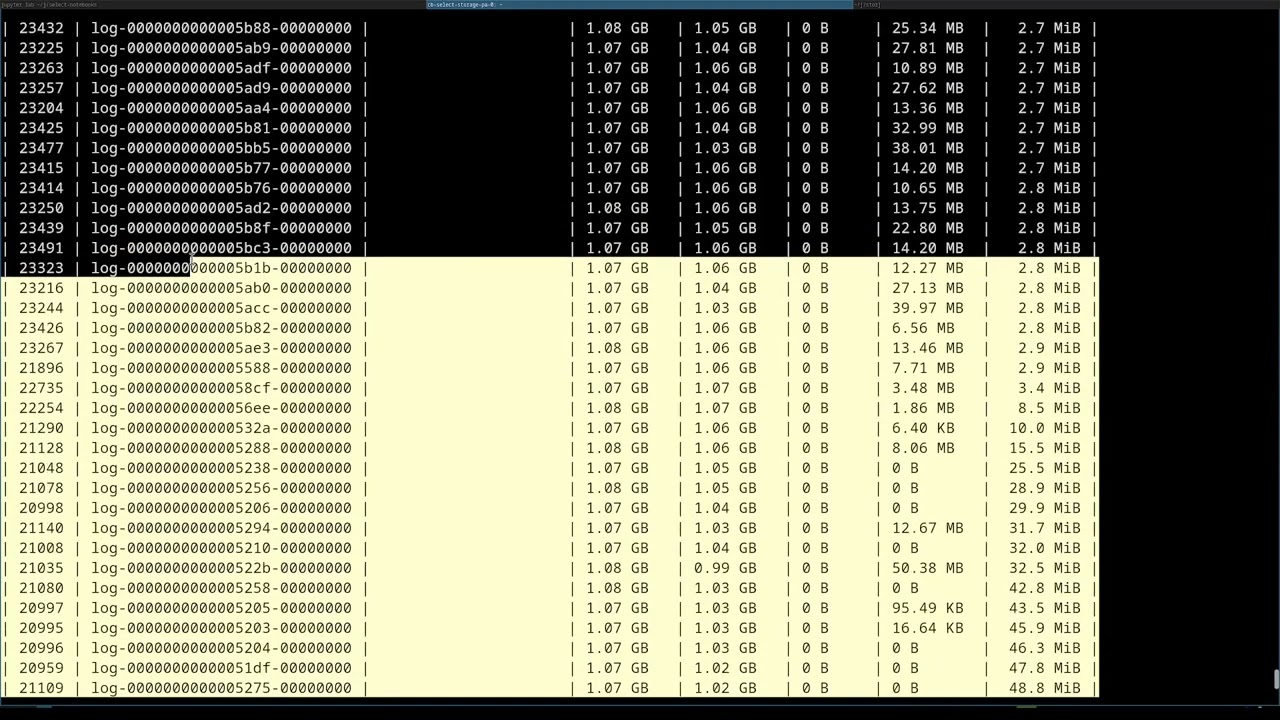

How to check the progress

How to check the progress with strace

sudo strace -p your_storagenode_pid -f -e trace=file -tt 2>&1 | grep blob

To get your storagenode PID, run:

sudo docker top storagenode

Find the process whose command is `storagenode run` and use its PID.
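The PID lookup can also be scripted. This is a minimal sketch, assuming the default `docker top` output, where the PID is the second column and the command line is at the end of each row; the function name `find_storagenode_pid` is hypothetical.

```sh
# Hypothetical helper: reads `docker top` output on stdin and prints the PID
# (2nd column) of the first process whose command line contains "storagenode run".
find_storagenode_pid() {
  awk 'NR > 1 && /storagenode run/ { print $2; exit }'
}
```

Usage: `pid=$(sudo docker top storagenode | find_storagenode_pid)`, then `sudo strace -p "$pid" -f -e trace=file -tt 2>&1 | grep blob`. The pattern does not match `storagenode-updater run`, because of the hyphen.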

How to check the progress with fatrace

cd /path/to/storj/node-data

fatrace -ct

Migration finished? Clean up your blobs

- Delete the files inside //storage/blobs/, but don't delete the blobs directory itself.

bash

To find any remaining non-empty files:

find /mnt/storagenode/blobs -type f -size +0

To remove everything under /mnt/storagenode/blobs:

rm -rf /mnt/storagenode/blobs/*
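The check and the removal can be combined so that nothing is deleted while non-empty files remain. This is a minimal sketch in POSIX sh; the function name `cleanup_blobs` is hypothetical, and it assumes that a remaining non-empty file means the migration is not finished, as in the check above.

```sh
# Hypothetical helper: empties a blobs directory after migration, but only if no
# non-empty files remain in it. The blobs directory itself is kept.
cleanup_blobs() {
  blobs_dir="$1"
  [ -d "$blobs_dir" ] || { echo "not a directory: $blobs_dir" >&2; return 1; }
  # Same check as above: any regular file with size > 0 means "not done yet".
  remaining=$(find "$blobs_dir" -type f -size +0 | head -n 1)
  if [ -n "$remaining" ]; then
    echo "non-empty file still present: $remaining" >&2
    return 1
  fi
  # Delete everything inside (including hidden entries), but not the directory itself.
  find "$blobs_dir" -mindepth 1 -delete
}
```

For example: `cleanup_blobs /mnt/storagenode/blobs`. Unlike `rm -rf .../blobs/*`, `find -mindepth 1 -delete` also removes entries whose names start with a dot.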

zsh

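To check for remaining non-empty files (the glob qualifier L+0 matches regular files larger than 0 bytes):

ls -l /mnt/storagenode/blobs/**/*(.L+0)

or, with BSD/macOS stat (on Linux with GNU stat, use stat -c '%s %n' instead):

stat -f '%z %N' /mnt/storagenode/blobs/**/*(.L+0)

To remove: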

rm -rf /mnt/storagenode/blobs/*

PowerShell

To preview which empty directories would be removed (dry run with -WhatIf):

Get-ChildItem -Path "X:\storagenode\storage\blobs" -Recurse -Directory | Where-Object { (Get-ChildItem -LiteralPath $_.FullName).Count -eq 0 } | Remove-Item -WhatIf

To remove only the empty directories:

Get-ChildItem -Path "X:\storagenode\storage\blobs" -Recurse -Directory | Where-Object { (Get-ChildItem -LiteralPath $_.FullName).Count -eq 0 } | Remove-Item

Error handling

- Dashboard shows incorrect used space

  → stop the node, delete storage/used_space_per_prefix.db, restart the node
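The deletion step of this fix can be wrapped so it fails loudly if the path is wrong. This is a minimal sketch in POSIX sh; the function name `reset_used_space_db` is hypothetical, and it only removes the file, so stopping and restarting the node stays a manual step.

```sh
# Hypothetical helper for the fix above: removes used_space_per_prefix.db from
# the storage directory. Stop the node BEFORE calling it and restart it afterwards.
reset_used_space_db() {
  storage_dir="$1"   # e.g. /<storagelocation>/storage
  db="$storage_dir/used_space_per_prefix.db"
  if [ ! -f "$db" ]; then
    echo "not found: $db" >&2
    return 1
  fi
  rm -- "$db"
}
```

Usage with Docker: `docker stop -t 300 storagenode`, then `reset_used_space_db /<storagelocation>/storage`, then `docker start storagenode`.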

Example Docker-String

coming soon

Example Windows-String

coming soon

Greetings Michael