Dear fellow Storjers, I wanted to report on some experimentation I’ve done over the last few days.

TL;DR: NFS and SMB mounted storage are not supported by Storj! [1] NFS doesn’t work at all with the new hashstore. But if you are network mounting your storage anyway, despite the advice, you’ll need to either switch to SMB for everything, or move the hash table (and only the hash table) to local storage while keeping the bulk on NFS.

Intro

Against the advice and documentation for running a storj node, I’ve been running my nodes with the data drives mounted via NFS.

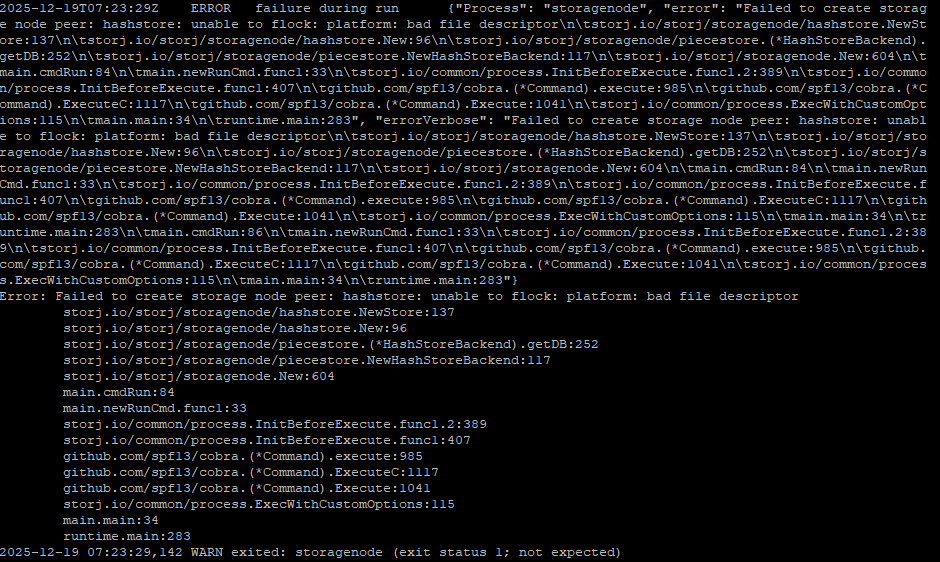

Starting with version 1.120.4, storj would fail and throw the error “Failed to create storage node peer: hashstore: unable to flock: hashstore: bad file descriptor”.

Important prerequisite: my databases have, since forever, been stored on a local SSD. Databases don’t work on network storage.

Various Options

0) Doesn’t work at all: experimenting with NFS client lock options

The NFS v4 client lets you mount an NFS share with options like lock, nolock, or local_lock. No matter which combination I used, I still got the error. My internet searching implied that the Go language just doesn’t allow a flock over NFS, but I’m not a real coder.

Oh, and what else doesn’t matter? Using “memtbl” or “hashtbl” for your hashstore setup. Same error either way.
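For anyone who wants to check whether a given mount accepts flock before pointing a node at it, here is a minimal Python sketch (not Storj code). It creates a scratch file in the directory you pass in and tries a non-blocking exclusive flock on it twice: once through a read-write descriptor and once through a read-only one. The function name `probe_flock` is made up for illustration. The read-only case is there because the Linux flock(2) man page says NFS clients emulate flock with byte-range locks, so an exclusive lock needs a descriptor opened for writing. Whether that is what actually trips up the hashstore is only a guess.

```python
import errno
import fcntl
import os
import tempfile


def probe_flock(directory):
    """Try an exclusive flock on a scratch file inside `directory`.

    Returns a dict mapping "read-write" and "read-only" to None if the
    lock was granted, or to the errno name (e.g. "EBADF") if it failed.
    """
    results = {}
    # Create the scratch file, then reopen it in each mode below.
    fd, path = tempfile.mkstemp(dir=directory, prefix="flock-probe-")
    os.close(fd)
    try:
        for label, flags in (("read-write", os.O_RDWR), ("read-only", os.O_RDONLY)):
            fd = os.open(path, flags)
            try:
                # LOCK_NB makes the call fail immediately instead of blocking.
                fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
                fcntl.flock(fd, fcntl.LOCK_UN)
                results[label] = None
            except OSError as exc:
                results[label] = errno.errorcode.get(exc.errno, str(exc.errno))
            finally:
                os.close(fd)
    finally:
        os.unlink(path)
    return results
```

Call it with the host path of the mount, for example `probe_flock("/mnt/nfs/storj")` (a hypothetical path). On a local filesystem both entries should come back as None; any errno name in the result means that kind of lock was refused on that mount.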

1) Kind of works but not really: switch to NFS v3

You can switch to mounting the drives with the v3 NFS protocol. Apparently NFSv4 made big changes in file locking, and a side effect is that old v3 can run storj without throwing the “unable to flock” error. HOWEVER, very quickly the storagenode software starts returning “Stale NFS file handle” errors and fails to open various files, including important things like hash tables. So this doesn’t really work.

2) Short-term workaround: use a docker volume mount to trick the entire hashstore into a different location

In my docker compose file I tried these lines:

- /<nfsmount>/storj:/app/config #(the normal data location)

- /<localdrive>/storj/hashstore:/app/config/storage/hashstore #(a local drive to fake hashstore)

This effectively tricks storj into using a local drive for the hashstore and only the hashstore, while the old piecestore (blobs) stays on the NFS mount. The problem is that when storj migrates your data to hashstore, ALL of it ends up on the local drive. That’s fine, but you’re no longer using your NFS mount, which is the whole point of me writing this.

3) Longer term workaround: store only the hash table (cache) on a local drive

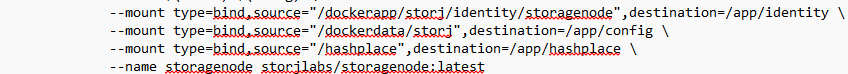

Add these lines to your docker setup (I use compose, so it looks like this):

volumes:

- /<localdrive>/hashplace:/app/hashplace

…

command: --hashstore.table-path=/app/hashplace

This will store the actual hash “table”, which is fairly small, on the local drive only, while the bulk of the data (the logs) stays in its old location. Some other folks have talked about this for performance reasons (hash table on an SSD), but here the point is just to keep the bulk of the files on an NFS store.

A downside here is that the storage setup now relies on TWO different drives, and a failure of either one will render the entire Storj node unreadable. So it roughly doubles your chance of failure.
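Since this option only works if the hash table path really is local while the bulk stays on NFS, it can be worth checking which filesystem backs each host path before starting the node. The sketch below is one way to do that in Python by reading a `/proc/mounts`-style listing. The function names and the set of filesystem types counted as "network" are assumptions for illustration, and none of this is part of Storj.

```python
import os

# Filesystem types treated as network mounts (an assumed list; extend as needed).
NETWORK_FS_TYPES = {"nfs", "nfs4", "cifs", "smb3"}


def parse_mounts(text):
    """Return (mount_point, fs_type) pairs from /proc/mounts-style text."""
    mounts = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 3:
            # /proc/mounts escapes spaces in mount points as \040.
            mounts.append((fields[1].replace("\\040", " "), fields[2]))
    return mounts


def fs_type_for(path, mounts):
    """Return the fs type of the longest mount point containing `path`.

    Symlinks are not resolved here; pass os.path.realpath(path) if needed.
    """
    path = os.path.abspath(path)
    best_point, best_type = "", None
    for mount_point, fs_type in mounts:
        prefix = mount_point.rstrip("/") + "/"
        # ">=" lets a later mount on the same mount point override an earlier one.
        if (path + "/").startswith(prefix) and len(mount_point) >= len(best_point):
            best_point, best_type = mount_point, fs_type
    return best_type


def is_network_path(path, mounts):
    """True if `path` lives on one of the NETWORK_FS_TYPES mounts."""
    return fs_type_for(path, mounts) in NETWORK_FS_TYPES
```

To use it, read the real mount table with `parse_mounts(open("/proc/mounts").read())` and then call `is_network_path` on the host side of each volume line: the directory mapped to `/app/hashplace` should come back False, and the bulk storage directory should come back True.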

4) “I don’t want to do what I’m told” workaround: switch to a SMB share

I’ve tried mounting my storage drive as an SMB share and… it seems to work okay? Note, importantly, that my databases have ALWAYS been stored on a local drive instead of a network share. DBs on a network share are probably bad news.

So my docker compose volume setup looks like this:

volumes:

- /<mysmbnetworkmount>/<storjfolder>:/app/config

- /home/<me>/.local/share/<storjcontainername>/identity/storagenode:/app/identity

- /<localdrive>/<storjfolder>:/app/dbs

(which is a pretty vanilla setup)

Test Results

I’ve got 3 nodes with option #3 (hash table on local and bulk storage on NFS), in a partially migrated state (data in both piecestore and hashstore), and they’ve been running okay for a couple of days.

I’ve got 3 other nodes using SMB for the network storage for everything. Two are tiny and fully migrated to hashstore; one is partially migrated. They also seem okay for the last few days.

[1] Yes, I can feel the brooding majesty of Alexey’s disapproval as I’m writing this.