That assumption is correct. As described in the newer of the two announcements, we are preparing the network for onboarding a new use case. Unfortunately, cleaning up data that was uploaded without a TTL takes 2-3 weeks because of the way garbage collection works. So first your usage will go down a lot with the current and the following garbage collection runs. I am sorry there is no way to make this a smooth transition. We are freeing up the space for a reason. So in the following weeks/months that space might or might not get quickly replaced with customer data. But let's be honest here. Currently all you have from our side is a promise and no way to verify it. You see the decrease right away and all that's left is hope that our plan works out in the end.

These are two different kinds of data, as far as I know.

Possible, but it sounds a bit extreme. Is that trash mostly on the SLC satellite? There was a lot of old test data we deleted from SLC. I was told it finished yesterday evening or this morning. That would be a very quick bloom filter creation, but it would explain your trash situation. I have to double check.
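For readers unfamiliar with the bloom filter step mentioned above, here is a minimal sketch of the general idea, not Storj's actual implementation: a filter is built from the pieces that should be kept, and a node moves anything that does not match the filter to trash. The class `BloomFilter`, the function `retain`, the filter size, and the piece IDs are all hypothetical names and values chosen for illustration.

```python
import hashlib


class BloomFilter:
    """Tiny Bloom filter: may give false positives, never false negatives."""

    def __init__(self, size_bits: int = 1024, num_hashes: int = 3) -> None:
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits // 8 + 1)

    def _positions(self, item: str):
        # Derive num_hashes bit positions by salting SHA-256 with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: str) -> bool:
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )


def retain(stored_pieces, keep_filter):
    """Split stored pieces into (kept, trash) using the filter of pieces to keep."""
    kept = [p for p in stored_pieces if p in keep_filter]
    trash = [p for p in stored_pieces if p not in keep_filter]
    return kept, trash


# Hypothetical piece IDs, for illustration only.
live = ["piece-a", "piece-b"]
stored = ["piece-a", "piece-b", "old-test-1", "old-test-2"]

keep_filter = BloomFilter()
for piece in live:
    keep_filter.add(piece)

kept, trash = retain(stored, keep_filter)
print("kept:", kept)
print("trash:", trash)
```

Because a Bloom filter can produce false positives, some deleted pieces may survive one run and only be caught by a later one, which is one reason a cleanup can span more than a single garbage collection run.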

I am 100% perfectly OK with that. I don’t need any promises, or predictions, or roadmaps. As long as I receive some coins near the start of every month… Storj can do whatever they want!

Nodes pretty much run themselves anyways: I only need to take a look if I receive a “Your Node has Gone Offline” email… which is rare.

avg disk space used this month for saltlake

Other, bigger nodes also had 3-4TB of data deleted last night, but for them that is a lower % of total data. On small nodes the wheel chart is more surprising.

Things are getting interesting. At least this month we will see what the payout looks like without dummy data artificially filling the storage nodes.

We know exactly what the payouts will look like…

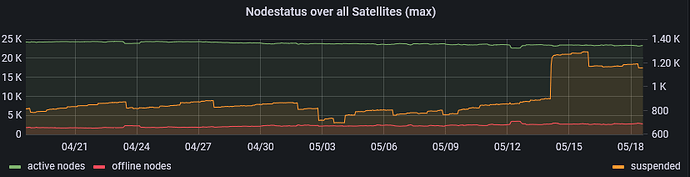

Sadly yes, we know. The number of suspended nodes has increased in the last week… not sure if it has something to do with the new storagenode release.

I guess - nothing. The usual thing - some “potatoes” didn’t make it.

Dude! You’ve got more trash than most SNOs have data!

Good Lord, that is brutal!

A 20% increase in suspended nodes is normal? A lot of potatoes decided to explode at the same time.

I am shutting down watchtower on 50% of my fleet to avoid a storagenode upgrade. We will see.

Does the new storagenode release come with the fsync option disabled by default?

Clearly a lot of synthetic, test & free tier data is being purged, which sadly will affect old nodes the most, but this was something that had to be done eventually. All we can hope for is that new data will soon follow.

If you check out the March town hall, they show that there was about 12PB of actual consumer data, therefore a lot of the data currently in the network will eventually be purged. Given the recent focus on performance, the trend of customer data increase that was shared in the town hall, and the recent testing taking place, I do think more data will come. How much and when? I've got no clue.

It never hurts to be careful about updates, but I don't believe that this recent increase in suspended nodes is related to the update.

Usually a node takes about 2 weeks to be suspended after being shut down (I'm recalling from memory and might be off, but it takes a while).

The update started rolling out 5 days ago. The sudden rise in suspended nodes was 4 days ago. Therefore, it looks to me like the rise in suspended nodes was not caused by the 1.104.5 update, but rather by something else.

Sync writes (fsync) should be disabled by default in the new update. They can be enabled manually if you are worried about it or have an unstable system.

Watchtower updates only the base image, which contains only a downloader. All docker nodes have storagenode and storagenode-updater. The latter updates nodes to the latest version according to their NodeID. There is no way to disable it.

In short - disabling the watchtower will not stop the rollout.

Looks like changing the retain log level from debug to enable did the trick for my nodes.

| Date | Available HDD space | Used HDD space | Free HDD space |
|---|---|---|---|
| 2024-05-14 | 1.986.332.595.376.128 | 1.508.894.220.869.632 (75.9638 %) | 477.438.374.506.496 |
| 2024-05-18 | 1.986.332.595.376.128 | 1.283.717.187.338.240 (64.6275 %) | 702.615.408.037.888 |

Sum of 117 BF files that are currently being processed by the nodes: 783004 KB

Th3Van.dk

Is that saying you’re down about 225TB in the last 4 days?!? Holy crap!
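The 225TB figure can be checked directly against the two "Used hdd space" values posted above. This is a quick sketch that assumes the values are in bytes and that the dots are thousands separators; the helper `parse_dotted` and the variable names are made up for illustration.

```python
def parse_dotted(value: str) -> int:
    """Parse an integer written with '.' as the thousands separator."""
    return int(value.replace(".", ""))


# Values copied from the posted table (assumed to be bytes).
used_may_14 = parse_dotted("1.508.894.220.869.632")
used_may_18 = parse_dotted("1.283.717.187.338.240")

drop_bytes = used_may_14 - used_may_18
drop_tb = drop_bytes / 10**12   # decimal terabytes
drop_tib = drop_bytes / 2**40   # binary tebibytes

print(f"Drop: {drop_bytes} bytes = {drop_tb:.2f} TB = {drop_tib:.2f} TiB")
```

Under those assumptions the difference comes out at roughly 225 TB in decimal units, which matches the number in the question.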

If nodes auto-upgrade every couple weeks… did your 95TB node even have time to complete a used-space-filewalker run? You must have hundreds of millions of files!