Sorry, I wasn’t clear. I was asking whether someone could make the change and build an exe for me to test. It would take 5 minutes for someone with the dev environment set up, but probably several hours for me.

I doubt moving the database really does much anyway… as long as it’s below a certain size, most of it will be in cache somewhere… An easy fix for you might be to set up an extended cache on your SSD… then it will end up holding the most-used data, i.e. the database… and you also speed up anything else using the drive.

Having a large cache also lets the drive turn more of its work into sequential operations, which in most cases should improve performance considerably.
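To illustrate why a cache ends up holding the most-used data, here is a toy least-recently-used (LRU) model in Go. It is only an assumption about how such a cache behaves; real SSD caching tools may use other eviction policies, and the block IDs `db` and `blobN` are made up for the example.

```go
package main

import (
	"container/list"
	"fmt"
)

// lruCache is a toy least-recently-used cache keyed by block ID.
// It only models which blocks would be served from the fast tier
// (the "SSD"); it does not store any data itself.
type lruCache struct {
	capacity int
	order    *list.List // front = most recently used
	entries  map[string]*list.Element
	hits     int
	misses   int
}

func newLRUCache(capacity int) *lruCache {
	return &lruCache{
		capacity: capacity,
		order:    list.New(),
		entries:  make(map[string]*list.Element),
	}
}

// access records a read of block id and reports whether it was a cache hit.
func (c *lruCache) access(id string) bool {
	if el, ok := c.entries[id]; ok {
		c.order.MoveToFront(el)
		c.hits++
		return true
	}
	c.misses++
	c.entries[id] = c.order.PushFront(id)
	if c.order.Len() > c.capacity {
		// Evict the least recently used block.
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.entries, oldest.Value.(string))
	}
	return false
}

func main() {
	c := newLRUCache(2)
	// "db" is touched between every blob read, so it stays hot,
	// while each one-off blob is evicted soon after it is read.
	for _, id := range []string{"db", "blob1", "db", "blob2", "db", "blob3", "db"} {
		c.access(id)
	}
	fmt.Printf("hits=%d misses=%d\n", c.hits, c.misses)
}
```

With room for only two blocks, every repeat access to `db` is a hit (3 hits, 4 misses in total), while each blob read once is pushed out.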

In that case, this should definitely be tested. It appears that some users think moving the DB would make a difference and others don’t. It’s worth knowing whether having the DB and blob store on the same SMR drive causes issues.

What does this default to? Or is it unlimited/dynamically adjusted depending on hardware/performance?

I’m going to try running 24-hour tests. The metric I will use to analyse performance is the percentage of successful uploads. I’ve tried changing max-concurrent-requests to a few different values to see what happens; I currently have it set to 10. I’ve experienced a couple of brief freezes while using the machine, but they were infrequent and lasted no more than 2 seconds, which is better than before. After running for an hour, approximately 10% of uploads are successful. I will report results back in this thread.

SMR drives are notoriously bad. I’ve read about datacenters trying them out and getting 175 KB/s write speeds on them… yes, kilobytes per second.

They are good at one thing… cheap storage for data that’s mostly read, not written… for that they’re quite good given their price and capacity…

But writes… the problem is something like this: if you write to an SMR drive where there is already data next to the data you’re writing… it has to read the existing data in the adjacent track and then rewrite both tracks, i.e. the new data and the old data next to it.
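A rough way to see why in-place writes hurt is the simplified model below. It is an assumption, not a description of any specific drive: tracks in a shingled band each overlap the next one, so modifying track `i` forces every later track in the band to be rewritten, and the two-track case above is the second-to-last track. The band size of 20 tracks is hypothetical, and the drive's own cache zone is ignored.

```go
package main

import "fmt"

// tracksRewritten returns how many tracks must be written when track
// index i (0-based) of a shingled band with bandTracks tracks is modified
// in place. Simplified model: each track partially overlaps the next one,
// so rewriting track i clobbers i+1, which must then be rewritten, and so
// on to the end of the band. Drive-managed caching is ignored.
func tracksRewritten(bandTracks, i int) int {
	if i < 0 || i >= bandTracks {
		return 0
	}
	return bandTracks - i
}

func main() {
	const band = 20 // hypothetical band size in tracks
	for _, i := range []int{0, 10, 18, 19} {
		fmt.Printf("modify track %2d -> rewrite %2d tracks\n", i, tracksRewritten(band, i))
	}
}
```

In this model a purely sequential write that appends at the end of a band costs a single track, which is why sequential workloads are fine while scattered small writes multiply the work.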

So it can get overloaded very easily… but sequential writes are about bandwidth, not random IO.

So that’s where it excels.

Well, you don’t want to overload the drive either; it will reduce its expected lifetime.

When the computer stalls, it’s because it’s waiting for the disk to respond…

You might be able to work around that somehow, though… maybe by putting it in a USB external HDD enclosure…

Disk IO waits are system killers.

I can try to test that later… I’m pretty sure the system shouldn’t stall if it’s on USB; that’s one of the nice things about running it through the CPU… it will cost some CPU time, though.

Assuming the logged uploads are me uploading rather than me being uploaded to, it looks like reads are far more frequent than writes (which I would expect). I don’t know the details of how the database is used by Storj, but is the database updated when a blob is read? Tracking statistics/usage etc.? If there are database writes when a blob is read, then I’d assume moving the database to a different drive will make a difference. If not, there would be less reason to do so.

Writing to databases is likely to mean rewriting areas of the drive already in use, which as I understand it is the worst-case scenario for an SMR drive.

Other way around… uploads are downloads and downloads are uploads xD

They say it’s because it’s seen from the customer’s perspective… but yeah, it’s just weird xD

Maybe the satellites and storagenodes share log syntax… who knows.

In that case it’s no wonder the drive is being killed! I’d still be interested to try running with a relocated DB for 24 hours to see what happens, if anything. Any volunteers for sending me a build and telling me what files I need to move?

It defaults to unlimited, which is where it should probably stay for most users. I highly recommend keeping this line commented out and fixing your system bottleneck instead.

The problem with an SMR drive at 100% utilisation is that even if there is a write cache, there’s never any idle time to clear that cache, no matter how big it is. There’s no easy answer that I can see for fixing this bottleneck.
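The point about the cache never clearing can be put as simple arithmetic: if data arrives faster than the drive can move it from its cache to the shingled area, the cache fills at the difference of the two rates, and a bigger cache only postpones the moment it is full. The Go sketch below assumes constant rates; the 20 GB cache, 5 MB/s ingress and 1 MB/s destaging figures are hypothetical.

```go
package main

import "fmt"

// secondsUntilFull returns how long a write cache of cacheBytes takes to
// fill when data arrives at ingressBps and the drive destages it at
// drainBps (both in bytes per second). ok is false when the cache never
// fills because the drive keeps up with the incoming data.
func secondsUntilFull(cacheBytes, ingressBps, drainBps float64) (secs float64, ok bool) {
	net := ingressBps - drainBps
	if net <= 0 {
		return 0, false
	}
	return cacheBytes / net, true
}

func main() {
	// Hypothetical figures: 20 GB cache, 5 MB/s ingress, 1 MB/s destaging.
	if secs, ok := secondsUntilFull(20e9, 5e6, 1e6); ok {
		fmt.Printf("cache full after %.0f s (%.0f min)\n", secs, secs/60)
	}
}
```

With these numbers the cache lasts 5000 s (about 83 minutes); after that every write runs at the slow destaging rate until the load drops.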

Defaults to zero, meaning “unlimited”. Which, in my opinion, is a poor default, as any setup will eventually hit some kind of bottleneck.
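The “zero means unlimited” convention can be illustrated with a small concurrency limiter. This is a hypothetical Go sketch, not the storagenode’s actual implementation: it uses a buffered channel as a semaphore and rejects a request outright when all slots are taken, rather than queueing it.

```go
package main

import (
	"errors"
	"fmt"
)

// errTooManyRequests is returned when every slot is in use.
var errTooManyRequests = errors.New("too many concurrent requests")

// limiter caps the number of in-flight requests. A limit of 0 (or less)
// means unlimited. Illustrative sketch only.
type limiter struct {
	slots chan struct{} // nil when unlimited
}

func newLimiter(max int) *limiter {
	if max <= 0 {
		return &limiter{}
	}
	return &limiter{slots: make(chan struct{}, max)}
}

// tryAcquire reserves a slot without blocking; callers must call release
// once the request finishes. It fails fast instead of queueing, so an
// overloaded node turns work away rather than piling it onto the disk.
func (l *limiter) tryAcquire() error {
	if l.slots == nil {
		return nil
	}
	select {
	case l.slots <- struct{}{}:
		return nil
	default:
		return errTooManyRequests
	}
}

// release frees a slot previously reserved by tryAcquire.
func (l *limiter) release() {
	if l.slots != nil {
		<-l.slots
	}
}

func main() {
	l := newLimiter(2)
	for i := 1; i <= 3; i++ {
		fmt.Printf("request %d: %v\n", i, l.tryAcquire())
	}
	l.release()
	fmt.Printf("after release: %v\n", l.tryAcquire())
}
```

With a limit of 2 the third request is rejected until a slot is released; with a limit of 0 nothing is ever rejected, so a slow disk can be handed more parallel writes than it can service.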

Be careful. User usage patterns will change over time, so this metric might not be very useful.

Upload log entries are ingress, i.e. someone uploading to you.

That’s what I’m thinking too.

There needs to be an SMR drive option, where upload requests are refused for x seconds every minute to give the drive’s write cache a chance to clear. Apparently my drive has a ~20 GB cache, so it’d take a while to fill that.

Note that we’re still not sure that these freezes are due to the drive being SMR. However, if it turns out that way, you’re welcome to post a feature idea on https://ideas.storj.io/!

It is an SMR drive.

Link below, and benchmarks in the other link.

Seagate’s spec sheet doesn’t say that it’s an SMR drive… classic Seagate.

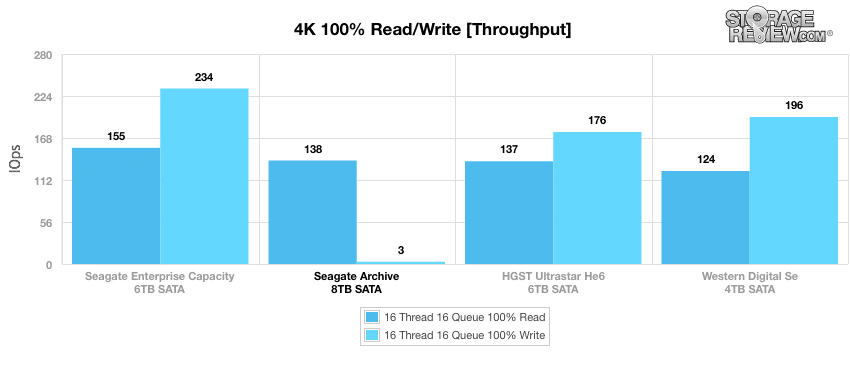

In our next few benchmarks, we will be measuring smaller 4K random transfers. In the first 4K profile test, which measures MB/s, the Seagate Archive recorded 0.30MB/s and 10.52MB/s for reads and writes respectively.

Grabbed from the Seagate Archive link.
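To put the quoted 4K figures into operations per second, divide the throughput by the transfer size. The Go sketch below assumes MB means 1,000,000 bytes and a 4K transfer is 4096 bytes; the review may define these slightly differently.

```go
package main

import "fmt"

// iops converts a throughput figure (MB/s) into operations per second for
// a fixed transfer size. Assumes MB = 1,000,000 bytes.
func iops(mbPerSec, blockBytes float64) float64 {
	return mbPerSec * 1e6 / blockBytes
}

func main() {
	fmt.Printf("4K read:  %.0f IOPS\n", iops(0.30, 4096))
	fmt.Printf("4K write: %.0f IOPS\n", iops(10.52, 4096))
}
```

Under those assumptions, 0.30 MB/s works out to roughly 73 random 4K reads per second and 10.52 MB/s to roughly 2,568 writes per second.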

https://www.reddit.com/r/DataHoarder/comments/86sf1z/psa_regular_barracudas_are_now_5425rpm_smr_drives/

@Toyoo yeah, I’m pretty certain it’s the drive technology’s fault…

Benchmarks on these drives are inconsistent as well. They can be fairly ok if you’re doing continuous writes or even random writes as long as you never delete or change any data on the disk. But when you start filling up the disk and deletes and writes are happening constantly they slow down to a crawl. I’m really not a fan of them for anything other than cold storage.

I think this chart from the above link probably indicates what we’re seeing here.

It looks like these drives can’t be run at 100% utilisation when they are being written to.

Is data written to the drive as it’s received? Or is the blob held in memory and written to the drive once the transfer is complete?

I think it depends on whether the disk has write caching enabled or disabled.

I meant in the Storj code rather than the disk technology.