AI starting to get better with hands… but still…

Nom Nom … Break noted as well. Very active on deletes & ingress these last 12 hours … 24hr TTL? Ingress here has upped a bit to about 8-9x normal. All the while disk space used, after deletes, is staying relatively the same. An interesting workout.

Edit: I may add that bandwidth is swerving all over the road, haha. That’s experimentally satisfying.

2 cents,

Julio

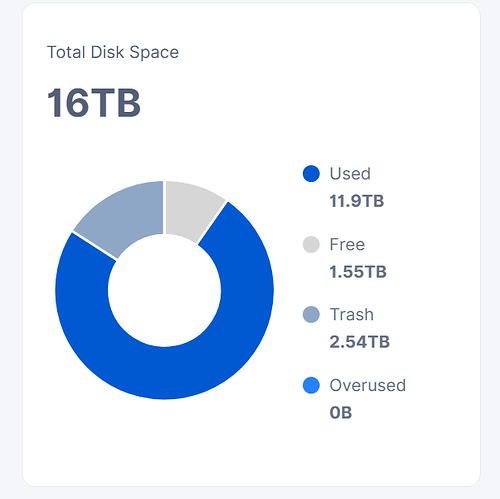

I can’t tell. Disk space calculation with dedicated disk is still not working in ver. 1.123.4. This node has around 5.8TB.

What’s being tested at this time? I don’t think it’s customer data.

Trash can’t be full. It doesn’t have limits.

That messed with my brain for a second. OS-level saw all that Mar-1-retrash data getting deleted (so more space)… but node said a firehose of uploads was coming in (so less space). What the?

Funny, the big ingress spikes started at 6:00 your local German time, and that’s 0:00 in Washington, USA. So a new day: Sunday on the whole East Coast, New York etc. Some schedule must be set up for some backups, I guess. Maybe some D.O.G.E. worker found us as a cheaper alternative for some government docs backups.

I’m showing the same, 100+ Mbps ingress… But interestingly the storjinfo site is reading a loss of about 6PB??

The graph includes data for Storj Select too.

I have to fiddle with the storjinfo reports, like trying different durations, because there are so many data dropouts.

For example, the drop Nodemansland just referenced is about the size of the EU satellite’s temporarily missing numbers (so if you switch to the 30-day duration report, the gap is smoothed over and we’re back to 28TB used).

Edit: Looks like the size just refreshed and fixed itself:

I wonder if this is sticky data or slooowly-sliiiding-then-kaboom-into-trash data?

The pacman closes its mouth more and more each day.

Very little data seems sticky these days.

I guess we’ll find out in a week or so.

It seems we are back to normal ingress.

That is intentional. Dedicated Disk tells the node to not worry about used space and stop tracking it. Instead, it just fills the entire disk until there is no free space.
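
To illustrate the idea only (this is not the storagenode code): a rough Python sketch of "ask the filesystem how much is free" instead of keeping a running used-space total. The names `has_room` and `can_accept_upload` and the `reserve_bytes` parameter are made up for this example.

```python
import shutil


def has_room(free_bytes: int, piece_size: int, reserve_bytes: int = 0) -> bool:
    """Hypothetical rule: accept a piece if it fits in the reported free space."""
    return free_bytes - reserve_bytes >= piece_size


def can_accept_upload(path: str, piece_size: int, reserve_bytes: int = 0) -> bool:
    """Check the filesystem holding `path`; no used-space bookkeeping is kept."""
    free_bytes = shutil.disk_usage(path).free  # free space as reported by the OS
    return has_room(free_bytes, piece_size, reserve_bytes)
```

With a rule like this there is no separate "used space" number to go stale, which would be consistent with the used-space figure on a dedicated-disk node not meaning much.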

Is it test data? And will we see the TTL thing all over again?