Try out hashstore. My nodes are not impacted by the TTL deletes at all.

I’m really looking forward to it! Can’t wait to adopt … as soon as it leaves beta :)

I must say I love it. I switched all my nodes to hashstore and my hard drives have never been this quiet.

I doubt that. With piecestore and badger, the drives work far less than with hashstore, and the scores are higher too. Hashstore needs more improvements; maybe it will become more performant with new tweaks. But right now, that compaction is awful.

OK, it could just be a placebo effect, because I was now paying more attention to the noise and it seemed to have calmed down.

I wonder: once hashstore ships for real… will node updates slow down?

I can see Storj continuously improving the satellites (they need them to be as efficient, durable, and maintenance-free as possible so they can be run cheaply). But for what nodes have to do… aren’t they kinda “done” already? Hashstore is a performance optimization… for higher loads that may never arrive.

Then Storj won’t survive anyway…

I was able to create 2 identities simultaneously while running 5 nodes at the same time. CPU usage was near 100% the whole time and the console lagged constantly for roughly 12 to 13 hours, but neither the nodes nor the Raspberry Pi ever crashed, so for the moment at least I feel quite safe.

In any case, I know that an RPi 4 Rev 1.1 cannot handle more than 2 disks under a high I/O load. However, we should first consider how long it may take to reach such a period of high stress (the disks and the setup may both be outdated and unusable 5 or 6 years from now). Plus, I heard that if anything like that happens, the issue could be fixed by buying a DC-powered USB hub to plug most of the disks into.

Let’s say another stress test right now wouldn’t be the worst thing: I could put my setup to the test and see whether it holds up.

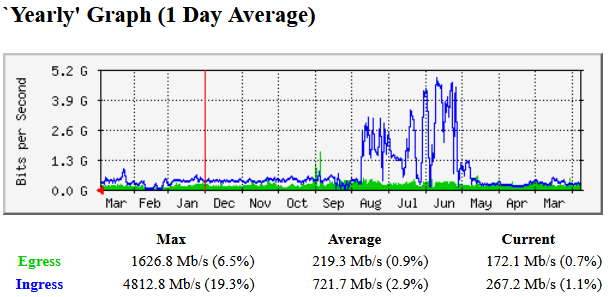

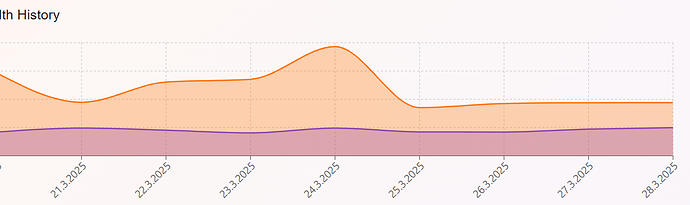

Right on time, like clockwork… here comes the traffic wave…

Maybe it’s a scheduled backup, as many guessed. However, if they also download, it might be a customer with a load similar to Vivint’s.

Today I got some info on what is going on. It is a paying customer that has uploaded a few more PB over the last 30 days or so. I was told they are migrating data. I don’t understand why it comes in bursts, but I didn’t ask for those details because privacy is more important to me. As long as they pay for it, I don’t mind some upload spikes on my nodes every now and then.

Try to talk them into setting a longer TTL:

“What if something happens right in the middle of uploading your data?

Do you have enough time left to download the latest backups before the TTL deletes them?”

Setting 14 days instead of 7 could give you that extra week to act, just in case!

Unfortunately, all this traffic doesn’t result in significant net growth. Is Storj still deleting old unpaid data, or is this because of the 7-day TTL?

I wanted to know where the traffic was coming from, so here’s my investigation:

See the video here.

On my side there’s more traffic, but nothing unbelievable. I’ve always had about 2.5 Mbps.

Besides that, my Internet connection is ultra-stable, and the server currently receives about 5 TB/month and sends about 2 TB/month, not just for Storj.
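To compare monthly volumes like these with a line rate such as 2.5 Mbps, it can help to convert TB/month into an average Mbit/s. This is a minimal sketch with a hypothetical helper name, assuming decimal units (1 TB = 10^12 bytes) and a 30-day month; real node traffic comes in bursts, so peaks will sit well above these averages.

```python
# Hypothetical helper: convert a monthly transfer volume into the average
# line rate it implies. Assumes decimal units (1 TB = 10**12 bytes) and a
# 30-day month.

SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds


def tb_per_month_to_mbps(tb_per_month: float) -> float:
    """Return the average throughput in Mbit/s for a TB/month volume."""
    bits = tb_per_month * 10**12 * 8  # TB -> bytes -> bits
    return bits / SECONDS_PER_MONTH / 10**6  # bits/s -> Mbit/s


for label, tb in (("received", 5), ("sent", 2)):
    print(f"{label}: {tb} TB/month ~ {tb_per_month_to_mbps(tb):.1f} Mbit/s")
```

Under these assumptions, 5 TB/month works out to roughly 15.4 Mbit/s on average and 2 TB/month to roughly 6.2 Mbit/s.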

I use `iostat -m` and `iftop`.

Do you have any other ideas on how to figure out why my traffic isn’t the same as other people’s?

I wonder whether one of my HDDs has a write-speed problem?

I only watched the start of your video, but if you’re comparing your setup to Th3Van’s… he has 130+ nodes, doesn’t he? (And a 10 Gbps Internet connection?)

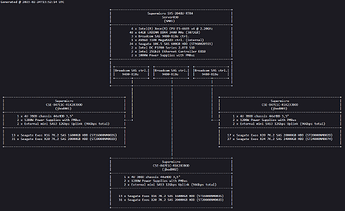

132 nodes to be exact (which are in the process of being migrated from EXT4 to XFS), running on Supermicro hardware.

The Supermicro server is hooked up to a 100 Gbit/s network, using a 25 Gbit/s NIC.

Th3van.dk

Of course we are deleting the expired accounts.

Could we get some info on how much is still left to be deleted, or on the ongoing deletions in general?

So, I checked some aggregate node I/O history. Indeed, the TTL has averaged 48 hours over the last two weeks.

This new client seems to have added about 1 PB to the network at most. It’s entertaining to watch them throttle bandwidth up and down. Here’s hoping they stick around past 30 days; I suppose we’ll know come April 1st.

2 cents,

Julio