No. I guess I have to explain that part in a bit more detail.

The hashtable needs 0 bytes of memory, but it writes 128 bytes of metadata per piece.

The memtable needs 18 bytes of memory per piece, but it cuts the metadata in half, to 64 bytes per piece. You can store that metadata on an HDD or an SSD. For the memtable, this translates into 1 GiB of memory per 10 TiB of space, regardless of where you store the 64 bytes of metadata.
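To make the per-piece figures easier to apply to a specific node, here is a small back-of-the-envelope calculator. The per-piece numbers (0 or 18 bytes of RAM, 128 or 64 bytes of on-disk metadata) come from the post; the average piece size is an assumption you have to supply for your own node, and the function names are purely illustrative.

```python
# Back-of-the-envelope memory/metadata estimate for the two index types.
# Per-piece figures come from the post; the average piece size is an
# ASSUMPTION that depends on your node's data mix.

GIB = 1024**3
TIB = 1024**4

RAM_BYTES_PER_PIECE = {"hashtable": 0, "memtable": 18}
DISK_METADATA_BYTES_PER_PIECE = {"hashtable": 128, "memtable": 64}


def piece_count(used_bytes: int, avg_piece_bytes: int) -> int:
    """Estimated number of pieces stored in used_bytes."""
    return used_bytes // avg_piece_bytes


def ram_bytes(kind: str, used_bytes: int, avg_piece_bytes: int) -> int:
    """Index RAM needed for the given index type."""
    return piece_count(used_bytes, avg_piece_bytes) * RAM_BYTES_PER_PIECE[kind]


def disk_metadata_bytes(kind: str, used_bytes: int, avg_piece_bytes: int) -> int:
    """On-disk metadata written for the given index type (HDD or SSD)."""
    return piece_count(used_bytes, avg_piece_bytes) * DISK_METADATA_BYTES_PER_PIECE[kind]


def implied_avg_piece_bytes(ram: int, used_bytes: int) -> float:
    """Average piece size implied by a 'RAM per stored space' rule for the memtable."""
    return used_bytes * RAM_BYTES_PER_PIECE["memtable"] / ram


if __name__ == "__main__":
    avg = implied_avg_piece_bytes(1 * GIB, 10 * TIB)
    print(f"implied average piece size: {avg / 1024:.0f} KiB")
    used = 10 * TIB
    for kind in ("hashtable", "memtable"):
        print(kind,
              f"RAM {ram_bytes(kind, used, int(avg)) / GIB:.2f} GiB,",
              f"metadata {disk_metadata_bytes(kind, used, int(avg)) / GIB:.2f} GiB")
```

Working backwards, the "1 GiB per 10 TiB" rule implies an average piece size of about 180 KiB; a node with smaller pieces on average would need proportionally more RAM.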

A 100% overhead? Or do you mean your used space has doubled?

The real space used on disk is OK, but the node shows more used space.

I have 8 hashstore nodes. Most of them are 6 TB and they are OK; they are smaller. The 2 bigger nodes have 12 TB and 16 TB HDDs, and both show double the real amount.

I guess the used-space scan on startup is disabled? After the migration has finished, you should be able to enable it again, and it should take only seconds to run.

Previously you didn’t recommend using an SSD for hashtables. When I tried an SSD, I also saw very intensive writes to the hashtables during compaction (I guess a full rewrite of the hashtable while compacting each log). Does anything change in this behavior when memtables are used?

I deleted all the databases after the migration and restarted the node. That solved it.

You could just restart the node first, wait 8 days, and restart it again to be sure the startup file walker has run. See if that solves it. If not, delete the databases.

Why 8 days? Because it seems that the startup file walker runs only once every 7 days.

Just delete `used_space_per_prefix.db`.

So, I assume you’re not storing the piece ID in memory at all, and instead verify it on disk?
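To make the assumption in this question concrete, here is a hypothetical sketch of an index that keeps only a short prefix of each piece ID plus a record offset in RAM, and confirms the full ID by reading it back from the stored record. This is not Storj's memtable; the 32-byte ID length, the 8-byte prefix, and the record layout are all assumptions chosen for illustration.

```python
from typing import Dict, List, Optional

ID_LEN = 32      # assumption: 32-byte piece IDs
PREFIX_LEN = 8   # hypothetical: bytes of the ID kept in RAM


class PrefixIndex:
    """Toy index that keeps only a short ID prefix plus an offset in RAM.
    The full piece ID is stored with the record and compared on lookup,
    so prefix collisions are resolved by reading the record."""

    def __init__(self) -> None:
        self.index: Dict[bytes, List[int]] = {}  # prefix -> record offsets
        self.disk = bytearray()                  # stands in for a log file

    def put(self, piece_id: bytes, data: bytes) -> None:
        assert len(piece_id) == ID_LEN
        offset = len(self.disk)
        # Record layout (hypothetical): full ID | 4-byte length | payload
        self.disk += piece_id + len(data).to_bytes(4, "big") + data
        self.index.setdefault(piece_id[:PREFIX_LEN], []).append(offset)

    def get(self, piece_id: bytes) -> Optional[bytes]:
        for offset in self.index.get(piece_id[:PREFIX_LEN], []):
            stored_id = bytes(self.disk[offset:offset + ID_LEN])
            if stored_id != piece_id:
                continue  # prefix collision: a different piece
            start = offset + ID_LEN
            size = int.from_bytes(self.disk[start:start + 4], "big")
            return bytes(self.disk[start + 4:start + 4 + size])
        return None
```

The trade-off is that RAM per piece stays small and fixed, at the cost of an occasional extra read when two pieces share a prefix.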

Is there a way to configure a trash threshold for the file rewrite, for example, rewrite a file only if more than 50% of it is trash?

Some of the migrated nodes spend more than half a day, almost every day, rewriting files, which makes the success rates drop, only to gain insignificant amounts of free space.
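The request above boils down to a selection policy: rewrite a log only when enough of it is garbage. A minimal sketch, assuming each log file's total and dead byte counts are known; `LogFile`, `select_for_rewrite`, and the 0.5 default are hypothetical names and values, not actual hashstore configuration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LogFile:
    name: str
    total_bytes: int
    dead_bytes: int  # bytes of trashed/deleted pieces still in the file

    @property
    def dead_fraction(self) -> float:
        return self.dead_bytes / self.total_bytes if self.total_bytes else 0.0

    def copy_bytes_per_freed_byte(self) -> float:
        """Live bytes that must be rewritten for each byte reclaimed."""
        if self.dead_bytes == 0:
            return float("inf")
        return (self.total_bytes - self.dead_bytes) / self.dead_bytes


def select_for_rewrite(logs: List[LogFile], threshold: float = 0.5) -> List[LogFile]:
    """Hypothetical policy: rewrite only logs whose dead fraction exceeds
    `threshold`, most garbage first."""
    chosen = [log for log in logs if log.dead_fraction > threshold]
    return sorted(chosen, key=lambda log: log.dead_fraction, reverse=True)
```

The cost ratio explains the complaint: a log that is 10% garbage requires rewriting 9 bytes of live data for every byte freed, while a log that is 50% garbage costs only 1 byte per byte freed.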

Does hashstore automatically mean that used space will be calculated from the real HDD size and usage? I mean, not the old way.

I got an answer from the team:

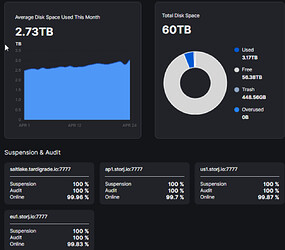

"I’ve been using Hashstore since December. I have 3.2 TB, and all folders are mirrored using Drivevpool. All databases are redirected to SSD 1 via a rule.

With Piecestore, that would be over 25,000,000 files, and the MFT would then be several hundred GB in size on each of the 5 hard drives. Access and fragmentation were always a nightmare here.

Compaction takes me around 20 to 60 minutes per day, with the pool showing up to 400 MB of reads and writes. Since the files are received via Piecestore, and this is also redirected via a rule to a second SSD (SSD 2, which achieves up to 7 GB/s), I still have no problems. There are only 34,000 files in total, and the MFTs are only 20 MB in size per hard drive. The migration runs every hour.

Every day, the hard drives and free space are automatically defragmented. There are usually only around 40 to 100 fragmented 1 GB files.

So, I will definitely not move away from Hashstore anymore. It brings an immense improvement in performance." And best of all, I have only one upload error and one download error per hour.

My current version is v1.127.0.rc.

The problem here is that hashstore helps with shit setups. It does nothing but add overhead to properly configured systems.

DrivePool? MFT? Come on! How do you manage to build a system that struggles with a 3 TB storage node?!

My nodes are all around 12 TB, not 3. I don’t have any performance issues, and I also don’t have to waste 20 to 60 minutes per day under high load compacting that nonsense.

I still don’t understand why Storj keeps wasting engineering effort on improving the performance of a couple of potatoes, reinventing file systems and databases. It’s a solution in search of a problem. It’s an unnecessary risk to customer data with no benefit in return. It was done before, and it did not end well. Those broken systems that hashstore makes less broken for a few hours a day are irrelevant. It’s nuts.

I suspect it’s because Storj Select nodes are deliberately set up to be potatoes to raise margins.

It sounds like you have all already forgotten the last stress test. Toward the end, the stress test was uploading artificial big files to reserve space without killing the nodes. By that point, most nodes had fallen several weeks behind on deleting TTL data. The lesson learned was that no matter how powerful your server is, the moment it has to deal with short-TTL data, it can only delete that data as fast as the hard drive allows. Hashstore is the solution for that. It’s just that this short-TTL data goes to the Storj Select network for now. The mistake you are making here is assuming that Storj Select has the same kind of load as your personal node.

Just imagine the situation we had during the load test, but with 20 to 30 times the IOPS. That is what Storj Select has to deal with every day.

If you recall @arrogantrabbit’s statements from that time, he kept saying that only “potato” nodes fail.

Besides, at this point I believe it was a confluence of multiple factors. For example, the huge number of pieces prevented the too-small bloom filter from operating correctly. It should be better now that this problem has been fixed.
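Why a too-small bloom filter hurts can be shown with the standard false-positive approximation p = (1 - e^(-kn/m))^k, where m is the number of bits, n the number of items, and k the number of hash functions. In garbage collection the filter describes the pieces a node should keep, so every false positive is a garbage piece that survives. The filter size and piece counts below are hypothetical, chosen only to show the trend.

```python
import math


def false_positive_rate(m_bits: int, n_items: int, k_hashes: int) -> float:
    """Standard Bloom filter approximation: p = (1 - e^(-k*n/m))^k."""
    return (1.0 - math.exp(-k_hashes * n_items / m_bits)) ** k_hashes


def optimal_k(m_bits: int, n_items: int) -> int:
    """Hash count that minimises p for a given bits-per-item ratio: k = (m/n) ln 2."""
    return max(1, round(m_bits / n_items * math.log(2)))


if __name__ == "__main__":
    m = 10_000_000               # hypothetical fixed filter size in bits
    k = optimal_k(m, 1_000_000)  # tuned for 1M pieces
    for n in (1_000_000, 2_000_000, 4_000_000):
        print(f"{n:>9} pieces: {false_positive_rate(m, n, k):.2%} of garbage kept")
```

With the filter size held fixed, quadrupling the piece count pushes the share of garbage that slips through from under 1% to well over half, which matches the "huge number of pieces" explanation.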

That’s not the lesson. Everything on a storage server is “as fast as the hard drive allows”.

The lesson I learned was the opposite: even under that high load, modern filesystems are perfectly capable of managing themselves. Note that my criterion is “node activity not noticeable”, not “server does not burst into flames”. Under that “heavy” load, my drives were still under 20 to 30 IOPS each. And I’m not an enterprise; I’m a home user with an old server put together as cheaply as humanly possible (that does not mean a cheap potato, more like a cheap, old, crusty steak).

Since you claim that most nodes had fallen several weeks behind, and mine did not: does that mean most of Storj runs on potatoes? Holy crap.

Deletions take time on any filesystem; it’s a tradeoff. You can’t avoid deletions; you can only defer them (and rename them “compaction”), but they still have to be done at some point.
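The "deferred deletion" argument can be illustrated with a toy append-only store: a delete only drops an index entry, and the real I/O cost is paid later when compaction copies the surviving records into a fresh log. This is a simplified sketch of the general log-structured technique, not hashstore's actual code; the class and method names are hypothetical.

```python
class AppendOnlyLog:
    """Toy log store: deletes only drop the index entry; space comes back at compaction."""

    def __init__(self):
        self.log = []    # list of (key, value) records, append-only
        self.index = {}  # key -> position in self.log

    def put(self, key, value):
        # Overwriting a key also leaves the old record behind as dead data.
        self.index[key] = len(self.log)
        self.log.append((key, value))

    def get(self, key):
        pos = self.index.get(key)
        return None if pos is None else self.log[pos][1]

    def delete(self, key):
        # Cheap: no record is touched, it simply becomes unreachable.
        self.index.pop(key, None)

    def dead_records(self):
        return len(self.log) - len(self.index)

    def compact(self):
        """Copy live records into a fresh log; this is where the deferred work is paid.
        Returns the number of records copied."""
        new_log, new_index = [], {}
        for key, pos in self.index.items():
            new_index[key] = len(new_log)
            new_log.append(self.log[pos])
        self.log, self.index = new_log, new_index
        return len(new_log)
```

Note that compaction work scales with the live data copied, not with the number of deletes, which is why both sides of this thread can be right depending on how much garbage a log holds when it is compacted.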

To simplify somewhat:

- The high IOPS are handled by an SSD (ZFS special device): writing many, many small files and their metadata. My PCIe SSD utilization was under 1%. Go ahead, increase traffic 100x. Then I’ll add another SSD.

- The throughput is handled by the HDDs: writing big chunks of data. Under the high load, I saw 30 to 40 IOPS hitting the disks, and my internet connection was almost saturated. So you can’t possibly increase that load, and there is plenty of capacity left: the disks can sustain over 200 IOPS.

Hashstore needs maintenance (compaction, deferred deletions, and whatnot). If Select servers are busy all the time, when will this happen?

Note that deletions don’t go to the disks. They go to the metadata device, which has massive performance headroom. Compaction, however, hits the HDDs. So how is it better?

I don’t think we forgot the load test. It just takes some effort to imagine how Select may be performing… when the public network is essentially idling. I can understand if Select, with its much smaller node count, is struggling with a customer like Vivant constantly uploading and expiring files. And I understand that Storj is concerned a similar customer may start using the public nodes and hit the same bottlenecks.

No, this only means we changed the load test in time to avoid killing the network. Again, Storj Select has to handle about 20 to 30 times the load we simulated on the public network.

Hashstore has no problems with TTL data. Deleting TTL data is almost free because the pieces are all grouped by expiration time into the same LOG file. For garbage collection, we simply tune some config values to run enough compaction to free up space, while at the same time making sure the nodes still have enough resources to handle the incoming load.
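Why grouping by expiration time makes TTL deletes cheap can be sketched as follows: if every piece with an expiration is appended to a log chosen by its expiry day, expiring a whole day means removing one log instead of one file per piece. The day-sized buckets and the `TTLBucketedStore` name are assumptions for illustration, not hashstore's real layout.

```python
from collections import defaultdict


class TTLBucketedStore:
    """Hypothetical store: pieces are appended to a log keyed by expiry day,
    so expiring a whole day is a single log removal."""

    SECONDS_PER_DAY = 86_400

    def __init__(self):
        self.logs = defaultdict(list)  # expiry day -> piece IDs in that log

    def put(self, piece_id, expires_at):
        day = expires_at // self.SECONDS_PER_DAY
        self.logs[day].append(piece_id)

    def expire(self, now):
        """Drop every log whose day has fully passed.
        Returns (logs removed, pieces removed)."""
        today = now // self.SECONDS_PER_DAY
        expired_days = [d for d in self.logs if d < today]
        pieces = sum(len(self.logs[d]) for d in expired_days)
        for d in expired_days:
            del self.logs[d]  # one log deletion instead of one per piece
        return len(expired_days), pieces
```

In this sketch, 1,000 pieces expiring on the same day cost one log removal, whereas a one-file-per-piece layout would need 1,000 separate deletes, each touching filesystem metadata.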

So you complain about hashstore consuming some extra space while talking about installing additional SSDs? Is this some kind of joke?