Yes, you can (sort of) migrate back to piecestore: just set all those lines to false instead of true. New pieces will stop going into hashstore and will be stored the classic way, and as data is deleted over time, the hashstore will gradually empty. That’s what I did with one of my nodes after migrating 2TB of data. Now it has 4TB stored, most of it in piecestore. It’s very quiet, with no compactions, and performs very well. It took in about 1TB per month.
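A minimal sketch of “set all those lines to false”, assuming each migration settings file referred to above is a single flat JSON object whose flags are booleans. The thread doesn’t give the exact file names, location or keys, so check your own node’s files first. The helper names are hypothetical.

```python
import json


def disable_hashstore_flags(text: str) -> str:
    """Return the settings JSON with every boolean flag set to False.

    Assumption: the file is a flat JSON object such as {"SomeFlag": true, ...}.
    Non-boolean values are left unchanged.
    """
    settings = json.loads(text)
    if not isinstance(settings, dict):
        raise ValueError("expected a JSON object")
    flipped = {
        key: (False if isinstance(value, bool) else value)
        for key, value in settings.items()
    }
    return json.dumps(flipped)


def rewrite_file(path: str) -> None:
    # Hypothetical helper: overwrites the given settings file in place.
    with open(path, encoding="utf-8") as f:
        new_text = disable_hashstore_flags(f.read())
    with open(path, "w", encoding="utf-8") as f:
        f.write(new_text)
```

For example, with made-up key names, `disable_hashstore_flags('{"FlagA": true, "FlagB": true}')` returns `'{"FlagA": false, "FlagB": false}'`.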

That’s just what I did too.

Is it possible that old nodes are losing storage because unpaid data is being deleted?

Old nodes with hashstore lost storage, but new nodes with piecestore gained storage.

I asked the team. Since this thread is flooded with piecestore/hashstore comparisons, they hadn’t looked into it (nor had I, unfortunately; I didn’t have time until now).

We now have an answer:

You are likely correct: the older nodes may still hold old unpaid data, which keeps getting deleted. So hashstore may not be to blame.

At least on Select, it showed significant improvements in performance and resource efficiency on “standard” servers without any special hardware requirements. (We don’t use brand-new hardware there; as far as we know, most of it was already in place. So yes, we eat our own dog food when it comes to using what already exists and is online.)

Set STORJ_HASHSTORE_TABLE_DEFAULT_KIND=mem and it will write out memtbls during compaction. That’s all.

Can I enable hashstore during the initial Storj node installation, or do I still have to edit these files manually?

My logic says you first run setup, then start the node so those files appear, and then change the settings to switch to hashstore. But maybe they are created at setup? I don’t really know…

You still need to edit these files manually.

What performance do you get with hashstore compared to the badger cache, in terms of GB stored?

I run hashstore on only 2 nodes; the others run the badger cache. The hashstore ones perform the worst, storing 3x less data in July than the others.

Do you see similar behavior? It’s not due to deleted data; I have nodes both older and younger than those 2.

Raw data:

- hashstore: +300GB
- badger: +870GB
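A quick check of the “3x less” figure from the July raw numbers above; it is only the ratio of the two growth values.

```python
hashstore_growth_gb = 300  # July growth reported for the hashstore nodes
badger_growth_gb = 870     # July growth reported for the badger-cache nodes

ratio = badger_growth_gb / hashstore_growth_gb
print(f"badger nodes grew {ratio:.1f}x as much as hashstore nodes")
# prints "badger nodes grew 2.9x as much as hashstore nodes"
```

If these figures are totals for groups of different sizes (2 hashstore nodes vs. the rest), a fair per-node comparison would also need the node counts, which aren’t given here.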

(I don’t know why these spaces between lines appear…?!)

Given this, and since enough work has gone into hashstore that it’s pretty much finished, maybe Storj could allocate some resources to keep improving the badger cache…? And its recovery after an abrupt shutdown? I believe many of us will stick with it.


Are you saying that your hashstore nodes grew by 300 GB while your badger nodes grew by more than 870 GB in July? If I recall correctly, hashstore was specifically designed for Select nodes, which handle significantly more ingress and pieces overall. Therefore, it shouldn’t perform worse than running a node with badger. Otherwise, what is the point of using hashstore?

Are the specs identical between those two nodes? Used space, available space, and so on?

Available space: plenty. There are differences between the systems, but they made no difference to ingress before switching to hashstore. They are all 2-bay Synologys; the hashstore rig has 8GB RAM, the others have 18GB. But even when I had only 1GB RAM installed, the ingress/stored data didn’t indicate an underperforming system (that was before switching to hashstore). I raised the alarm from the first month about hashstore causing a node to store less data, and some of you confirmed it.

I’ll keep asking… do you see the same thing? Are you running badger nodes and hashstore nodes in parallel?

Thanks for the feedback.

We continuously measured the performance of thousands of piecestore/hashstore nodes.

Hashstore was way better all the time.

Especially with normal/large storage nodes holding 20-80M pieces. The badger cache can help sometimes (it can make the walkers faster), but there are still IO operations (like writes) that have to touch the file system. ext4 directories are especially slow with millions of objects, and the badger cache can’t help when the file system has to be touched.
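To put “millions of objects” in perspective, here is some rough arithmetic. It assumes that the classic piecestore layout fans a satellite’s pieces out into subdirectories named by a two-character base32 prefix of the piece ID (32 × 32 = 1024 directories; treat this layout as an assumption and check your own blobs folder), and, for simplicity, that all pieces belong to one satellite. The function name is illustrative.

```python
PREFIX_ALPHABET = 32  # base32 alphabet size (assumed piece-ID encoding)
PREFIX_LENGTH = 2     # assumed length of the directory-name prefix


def files_per_directory(total_pieces: int) -> float:
    """Average piece files per prefix directory, assuming an even spread."""
    directories = PREFIX_ALPHABET ** PREFIX_LENGTH  # 1024
    return total_pieces / directories


for pieces in (20_000_000, 80_000_000):
    print(f"{pieces:,} pieces -> ~{files_per_directory(pieces):,.0f} files per directory")
# 20,000,000 pieces -> ~19,531 files per directory
# 80,000,000 pieces -> ~78,125 files per directory
```

Every write into directories of that size still goes through the file system, which is the cost the badger cache cannot remove.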

Hashstore, on the other hand, only requires scanning 2x 4-16GB files, which is much easier and cache-friendly. Performance can be improved further with an SSD or more memory, if required.
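As a rough illustration of why this avoids per-piece file-system work, here is a toy sketch of the general idea behind a hash-indexed, log-structured store. Pieces are appended to one log, and a fixed-size open-addressing table maps each key to an (offset, length) in that log, so a lookup is a hash plus a short probe instead of a directory lookup. This is not Storj’s on-disk format. The class name, SHA-256 slot hashing and linear probing are illustrative assumptions, and the real tables, logs and compaction are far more involved.

```python
import hashlib
import io
from typing import List, Optional, Tuple

Entry = Tuple[bytes, int, int]  # (key, offset in log, length)


class ToyHashStore:
    """Toy append-only log with an open-addressing index (not Storj's format)."""

    def __init__(self, slots: int = 8) -> None:
        self.log = io.BytesIO()  # stands in for one large log file
        self.table: List[Optional[Entry]] = [None] * slots

    def _probe(self, key: bytes) -> Optional[int]:
        """Return the slot holding `key`, else the first empty slot, else None."""
        digest = hashlib.sha256(key).digest()
        i = int.from_bytes(digest[:8], "big") % len(self.table)
        for _ in range(len(self.table)):  # linear probing
            entry = self.table[i]
            if entry is None or entry[0] == key:
                return i
            i = (i + 1) % len(self.table)
        return None

    def put(self, key: bytes, data: bytes) -> None:
        i = self._probe(key)
        if i is None:
            raise RuntimeError("index table is full")
        # Always append: an overwritten value leaves dead bytes in the log,
        # which is the kind of garbage a compaction pass would reclaim.
        offset = self.log.seek(0, io.SEEK_END)
        self.log.write(data)
        self.table[i] = (key, offset, len(data))

    def get(self, key: bytes) -> Optional[bytes]:
        i = self._probe(key)
        if i is None:
            return None
        entry = self.table[i]
        if entry is None:
            return None
        _, offset, length = entry
        self.log.seek(offset)
        return self.log.read(length)
```

Overwriting a key here grows the log without freeing the old bytes. Reclaiming that space is what compaction is for.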

Given all this, we believe hashstore is the right path forward, and we are considering rolling out hashstore to every node.

It’s hard to judge what the problem is with your nodes. There are many moving parts that can make a difference (selection algorithm, latency, success score, free space, …). It’s also not clear how you measure space usage (file system usage vs. satellite_usage?).

But if you are really interested in debugging the issue, I would be happy to organize a call / office hours to dig deeper into what’s going on with your nodes. (Sometimes, though, that requires historical recordings of metrics.)

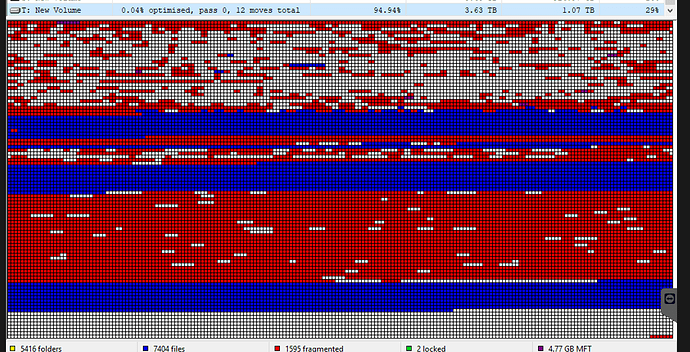

After node conversion, the hashstore is heavily fragmented, so it can perform a lot slower than one tested in the lab that was built from scratch. I think we will see the main performance benefits once nodes go over 6-10TB of used space. This is how it looks.

I just noted the average monthly storage reported by the satellites, which is what I get paid for. It matches the used space reported by the system.

I believe pushing hashstore to every node is a bad idea.

My nodes with 10TB used are working great with piecestore+badger, and they accumulate a lot of data. If you push hashstore to them and they start accumulating only a third as much data, I will be pretty upset.

Maybe if all nodes perform badly, data accumulation will flatten for everyone and I won’t see any change, but still…

If hashstore is everywhere, then all nodes will be on equal footing, so I don’t think anything will change.

Are the hashstore and piecestore nodes sharing the same IP? If they are in different locations or on different ISPs, that could also explain the difference. You also need to check how many deletes are happening; maybe one node deletes more than the other.
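As I understand Storj’s node selection, nodes in the same /24 IPv4 subnet are treated as one location for ingress, so nodes sharing a subnet split uploads between them. Here is a small sketch for checking whether two nodes’ public addresses fall in the same /24. The addresses are documentation examples, not real nodes.

```python
import ipaddress


def same_subnet(ip_a: str, ip_b: str, prefix: int = 24) -> bool:
    """True if both IPv4 addresses fall inside the same /prefix network."""
    net_a = ipaddress.ip_network(f"{ip_a}/{prefix}", strict=False)
    return ipaddress.ip_address(ip_b) in net_a


print(same_subnet("203.0.113.10", "203.0.113.200"))  # True
print(same_subnet("203.0.113.10", "198.51.100.7"))   # False
```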

If Storj thinks it’s the way forward, I’ll follow. But it would be nice to know a bit about how recovery works. Right now, disk/filesystem errors can result in a handful of .sj1 files being nuked, which is survivable. If the key hashstore tables get damaged, can they be rebuilt (even if it means reading all the data/log files)? Or is the node “just gone”?
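This thread doesn’t say whether Storj’s hashstore log files carry enough metadata to regenerate the tables. Purely to illustrate the idea in the question: if each log record were self-describing (a header with key length and data length, followed by the key and the data), an index could be rebuilt by reading the whole log sequentially. The record layout and function names below are hypothetical, not Storj’s format.

```python
import struct
from typing import Dict, Tuple

HEADER = struct.Struct(">HI")  # assumed header: key length (u16), data length (u32)


def append_record(log: bytearray, key: bytes, data: bytes) -> None:
    """Append a self-describing record: header, key, then data."""
    log.extend(HEADER.pack(len(key), len(data)) + key + data)


def rebuild_index(log: bytes) -> Dict[bytes, Tuple[int, int]]:
    """Scan the whole log and map each key to (data offset, data length).

    Later records for the same key win. A truncated trailing record (e.g. after
    an abrupt shutdown) is skipped instead of aborting the rebuild.
    """
    index: Dict[bytes, Tuple[int, int]] = {}
    pos = 0
    while pos + HEADER.size <= len(log):
        key_len, data_len = HEADER.unpack_from(log, pos)
        key_start = pos + HEADER.size
        data_start = key_start + key_len
        end = data_start + data_len
        if end > len(log):
            break  # incomplete record at the tail
        index[bytes(log[key_start:data_start])] = (data_start, data_len)
        pos = end
    return index
```

The cost is exactly the “even if it means reading all the data” trade-off raised above: every byte of every log is read once.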