i didn’t run hardware raid, i just mixed sas and sata in the same array… tho the sas and sata drives were very likely also on the same SFF-8087 port and on the same backplane…

so i’m not 100% sure what actually fixed the issue, but the guy who explained it basically said one shouldn’t mix them in arrays in general… may or may not apply to zfs pools… now that i think about it, i’m not even sure i actually had them mixed in pools, because i wanted the drives to all be the same so they didn’t have mechanical or software offsets that would put them out of sync and thus create extra latency and wear.

was a painful troubleshoot tho…

it’s not that it doesn’t work… it’s that most basic stuff ends up being so complicated, but that might also be down to me working on windows for a couple of decades… all the possible settings and stuff you can do in linux sort of make windows look like iOS by comparison…

so much stuff in linux you mostly never have to deal with in windows, because it all runs on auto…

but that’s what happens when something is used by that many people and so much money is pushed into the development.

windows as a hypervisor tho… that’s a joke, i spent weeks trying to pass through a usb port…

maybe i misunderstood how that spanning tree thing works then… i understood it as making a sort of table of the MAC addresses to avoid sending data back and forth over the same cable multiple times…
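
for what it’s worth, the table of MAC addresses is what a switch or linux bridge builds on its own (MAC learning), while spanning tree is a separate thing that blocks redundant links so frames can’t loop. a rough python sketch of just the learning part, assuming a toy 3-port switch… the class name, port numbers and MACs are made up for illustration, this is not how any real switch or vmbr is implemented:

```python
class LearningSwitch:
    """Toy model of MAC learning (hypothetical, for illustration only)."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def handle_frame(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame is sent out of."""
        # learn: the sender is reachable through the port the frame came in on
        self.mac_table[src_mac] = in_port

        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # unknown destination: flood to every port except the incoming one
            return sorted(self.ports - {in_port})
        if out_port == in_port:
            # destination lives on the same port, nothing to forward
            return []
        # known destination: send out of exactly one port
        return [out_port]


switch = LearningSwitch(ports=[1, 2, 3])
first = switch.handle_frame(1, "aa:aa", "bb:bb")  # bb:bb not learned yet, gets flooded
reply = switch.handle_frame(2, "bb:bb", "aa:aa")  # aa:aa was learned on port 1
again = switch.handle_frame(1, "aa:aa", "bb:bb")  # bb:bb was learned on port 2
print(first, reply, again)
```

the table only decides which port a frame goes out of, it does nothing about loops, which is the part STP is for.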

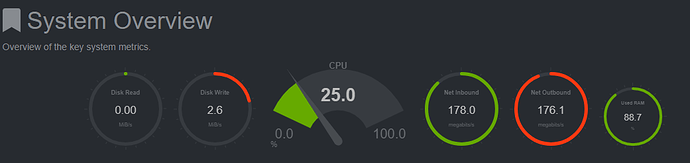

pretty sure my vlan setup is bouncing data back and forth at least two times between the switch and the vmbr in the server, so the 1gbit network connection ends up only being able to do 160-170mbit

which is really annoying…

STP sounded like it was meant to fix that… but i haven’t gotten it working yet, so maybe not… vlans get really complicated… i should’ve just pulled another cable lol

uhhhh, was checking up on L2ARC and it seems ZFS 2.0 has been released…

i’m so going to hack upgrade my proxmox zfs version

PERSISTENT L2ARC