Test data is still coming… Everybody asks to stop test data and remove it. I was just showing some stats about it. I think we already discussed the payouts inside and out… there is nothing more to it. Now we just debate and observe how things are moving forward, test data being one of them.

You seem to have ignored my reply though… Almost all of that will be repair. Not new data.

@BrightSilence

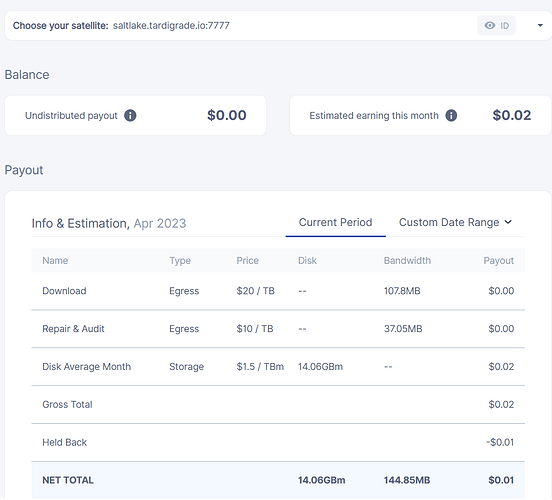

I read the reply, and that’s why I posted the graph, but it is my fault for posting incomplete data without explanations. I had a lot of things to do, sorry. In short, I don’t really care about test data, or what type of data is on my nodes, as long as I get paid. I am just trying to bring some numbers into this debate over test data. I don’t know how accurate the dashboard is, but from what I see on it, there seems to be more than just repair and audits:

-data stored on 1 April: 29.74 GB

-data stored on 12 April: 43.85 GB

-data accumulated in April: 14.11 GB

-repair and audit in April: 37.05 MB

-egress: 107.8 MB.
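For anyone who wants to check the arithmetic behind these figures, here is a minimal Python sketch. The variable names are illustrative, and it assumes the dashboard reports decimal units (1 GB = 1000 MB); the numbers are the ones listed above.

```python
# Figures copied from the list above; variable names are illustrative.
stored_apr_1_gb = 29.74
stored_apr_12_gb = 43.85
repair_audit_mb = 37.05
egress_mb = 107.8

# Net growth in stored data between 1 and 12 April.
accumulated_gb = round(stored_apr_12_gb - stored_apr_1_gb, 2)

# Assumption: decimal units, 1 GB = 1000 MB.
repair_audit_share = repair_audit_mb / (accumulated_gb * 1000)

print(f"accumulated: {accumulated_gb} GB")
print(f"repair + audit vs. accumulated: {repair_audit_share:.2%}")
```

Note that this only compares the repair and audit figure against net growth; it says nothing about how much of the ingress was itself repair traffic.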

@heunland

I think I missed that.

Just put your mouse over the graph and see how much of ingress is “usage” and how much is “repair”.

Of course there is egress repair and ingress repair… this is what I was missing. It all makes sense now. I never paid attention to those pop-ups. Yeah, you’re right; that is repair for the most part.

My income has been declining since the beginning of April. It seems that developers are lowering egress to reduce costs at the same rates.

It’s not possible to lower the customers’ egress from the Storj side - all data goes directly from your node to the customers.

But egress from the customers of test satellites has been low for a long time already, at least on my nodes

While the customers from the public network have much greater usage:

Just curious, who is a customer of a test satellite? Anybody external to Storj?

No. External customers don’t have access to test satellites now. We had some limited beta testing of some features on US2 in the past but this is no longer ongoing.

the issue with lower egress earnings, i think could be related to the elevated ingress.

my download success rate is near an all time low…

only getting 70% or so last i checked, can’t really blame Storj for our hardware being unable to keep up with the rather massive ingress we are seeing these days.

so if i could get 100% download rates i would earn like 40% more…

i know thats a 110% but to that i will only say… THATS NOT HOW % MATH WORKS!!!
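The percentage arithmetic here can be spelled out. This is a minimal sketch, assuming egress earnings scale linearly with the download success rate (a simplification, not something confirmed in this thread); the function name is hypothetical.

```python
def relative_gain(current_rate: float, target_rate: float = 1.0) -> float:
    """Fractional increase in earnings when moving from current_rate to target_rate.

    Assumption: earnings are directly proportional to the success rate.
    """
    if not 0 < current_rate <= 1 or not 0 < target_rate <= 1:
        raise ValueError("rates must be in (0, 1]")
    return target_rate / current_rate - 1


# 70% success rate today, 100% as the ideal case.
gain = relative_gain(0.70)
print(f"{gain:.1%} more")
```

The gain is measured relative to the current 70%, not to the full 100%, so it comes out at roughly 43% rather than 30%, which is in line with the "like 40% more" estimate.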

Do you also see massive deletes in the last 7 days? My trash is growing and growing.

One of my 3.2TB nodes has more than 800GB in trash now…

same got a couple of deletes lol

Yes, it is terrible!

My Bandwidth usage halved and around 5% (and growing) of used capacity is in trash atm

Think they are tidying up the abuse-accounts/abused storage.

LOL how much total storage do you have?

Enough for now lol.

| pool | capacity alloc | capacity free | operations read | operations write | bandwidth read | bandwidth write |
|---|---|---|---|---|---|---|
| `bitpool` | 275T | 71.4T | 4.15K | 3.93K | 58.0M | 62.7M |
| `raidz1-0` | 16.0T | 380G | 471 | 315 | 17.7M | 4.85M |
| `ata-ST33000650NS_Z2900Q1V` | - | - | 92 | 26 | 3.54M | 324K |
| `ata-ST33000650NS_Z2900QML` | - | - | 92 | 25 | 3.51M | 312K |
| `ata-ST33000650NS_Z2900PN0` | - | - | 97 | 26 | 3.58M | 324K |
| `ata-ST33000650NS_Z29004BT` | - | - | 2 | 268 | 32.2K | 4.74M |
| `ata-ST33000650NS_Z29004LA` | - | - | 94 | 26 | 3.56M | 324K |
| `ata-ST33000650NS_Z2900QPV` | - | - | 92 | 25 | 3.51M | 312K |
| `raidz1-1` | 84.5T | 13.8T | 287 | 229 | 5.51M | 6.12M |
| `ata-TOSHIBA_MG09ACA18TE_62P0A00NFJDH` | - | - | 48 | 39 | 946K | 1.02M |
| `ata-TOSHIBA_MG09ACA18TE_62P0A01PFJDH` | - | - | 47 | 42 | 936K | 1.01M |
| `ata-TOSHIBA_MG09ACA18TE_62X0A07QFJDH` | - | - | 48 | 43 | 945K | 1.02M |
| `ata-TOSHIBA_MG09ACA18TE_7220A013FJDH` | - | - | 47 | 38 | 934K | 1.01M |
| `ata-TOSHIBA_MG09ACA18TE_82V0A024FJDH` | - | - | 48 | 31 | 945K | 1.02M |
| `ata-TOSHIBA_MG09ACA18TE_7220A01GFJDH` | - | - | 47 | 34 | 936K | 1.01M |
| `raidz1-3` | 29.2T | 3.52T | 243 | 248 | 4.41M | 6.01M |
| `ata-HGST_HUS726060ALA640_AR31021EH1P62C` | - | - | 40 | 41 | 758K | 1.01M |
| `ata-HGST_HUS726060ALA640_AR11021EH1XAPB` | - | - | 40 | 41 | 749K | 1020K |
| `ata-HGST_HUS726060ALA640_AR31021EH1RNNC` | - | - | 40 | 41 | 758K | 1.01M |
| `ata-HGST_HUS726060ALA640_AR31021EH1TRKC` | - | - | 40 | 41 | 749K | 1020K |
| `ata-HGST_HUS726060ALA640_AR31051EJS7UEJ` | - | - | 40 | 41 | 758K | 1.01M |
| `ata-HGST_HUS726060ALA640_AR11021EH21JAB` | - | - | 40 | 41 | 749K | 1020K |
| `raidz1-4` | 72.4T | 25.8T | 365 | 226 | 7.79M | 7.06M |
| `ata-TOSHIBA_MG09ACA18TE_Z1F0A003FJDH` | - | - | 61 | 35 | 1.31M | 1.18M |
| `ata-TOSHIBA_MG09ACA18TE_9120A224FJDH` | - | - | 60 | 41 | 1.29M | 1.17M |
| `ata-TOSHIBA_MG09ACA18TE_9120A30KFJDH` | - | - | 61 | 38 | 1.31M | 1.18M |
| `ata-TOSHIBA_MG09ACA18TE_Z1A0A0F4FJDH` | - | - | 60 | 36 | 1.29M | 1.17M |
| `ata-TOSHIBA_MG09ACA18TE_Z1B0A0FGFJDH` | - | - | 61 | 39 | 1.30M | 1.18M |
| `ata-TOSHIBA_MG09ACA18TE_Z1B0A0NYFJDH` | - | - | 60 | 34 | 1.29M | 1.17M |
| `raidz1-8` | 70.4T | 27.8T | 330 | 311 | 8.28M | 10.7M |
| `ata-TOSHIBA_MG09ACA18TE_81X0A0A7FJDH` | - | - | 55 | 55 | 1.39M | 1.80M |
| `ata-TOSHIBA_MG09ACA18TE_9110A008FJDH` | - | - | 54 | 51 | 1.38M | 1.78M |
| `ata-TOSHIBA_MG09ACA18TE_9110A00MFJDH` | - | - | 55 | 48 | 1.39M | 1.80M |
| `ata-TOSHIBA_MG09ACA18TE_9110A00VFJDH` | - | - | 54 | 52 | 1.37M | 1.78M |
| `ata-TOSHIBA_MG09ACA18TE_9120A1ABFJDH` | - | - | 55 | 53 | 1.39M | 1.80M |
| `ata-TOSHIBA_MG09ACA18TE_9120A1H9FJDH` | - | - | 54 | 50 | 1.37M | 1.78M |
| `special` | - | - | - | - | - | - |
| `nvme-nvme.8086-43564654373031313030305a31503644474e-494e54454c205353445045444d443031365434-00000001-part1` | 1.44T | 15.3G | 1.36K | 1.01K | 7.52M | 5.43M |
| `fioa` | 1.27T | 188G | 1.13K | 1.62K | 6.78M | 22.6M |
| `logs` | - | - | - | - | - | - |
| `ata-INTEL_SSDSC2BA400G3_BTTV604607F0400HGN-part4` | 1.71M | 5.50G | 0 | 0 | 1 | 951 |
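To turn the alloc and free columns above into an actual answer to "how much total storage", one can add them up per row. This is a rough Python sketch, assuming the K/M/G/T suffixes are powers of 1024; the helper name `parse_size` is made up for illustration, and the values are copied from the pool and raidz rows of the listing.

```python
# Assumption: the K/M/G/T suffixes in the listing are binary (powers of 1024).
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text: str) -> float:
    """Convert a size such as '71.4T' or '380G' to bytes."""
    suffix = text[-1].upper()
    if suffix in UNITS:
        return float(text[:-1]) * UNITS[suffix]
    return float(text)


# (alloc, free) pairs copied from the pool and vdev rows of the listing.
rows = {
    "bitpool": ("275T", "71.4T"),
    "raidz1-0": ("16.0T", "380G"),
    "raidz1-1": ("84.5T", "13.8T"),
    "raidz1-3": ("29.2T", "3.52T"),
    "raidz1-4": ("72.4T", "25.8T"),
    "raidz1-8": ("70.4T", "27.8T"),
}

for name, (alloc, free) in rows.items():
    a, f = parse_size(alloc), parse_size(free)
    total_t = (a + f) / UNITS["T"]
    print(f"{name}: {total_t:.1f}T total, {a / (a + f):.1%} allocated")
```

For the pool row this is simply 275T + 71.4T, so about 346.4T in total as reported by the pool.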

Just noticing an abnormal amount of repair egress. Are we seeing a small exodus of nodes?

12GB today, normally repair egress doesn’t go above 2GB a day for me throughout the month.

Interesting, I see the same on my nodes. In the statistics you can definitely see some fluctuations in the number of active nodes at May 1st 00:00. The number of active nodes declined, but then recovered in the hours after that? And that repeated once again?

There seem to be only about 500 fewer nodes than a few days ago

That must be a stats glitch. I noticed some changes to node tally in this commit. satellite/accounting/nodetally: remove segments loop parts · storj/storj@6a55682 · GitHub

Perhaps that momentarily impacted some accounting on satellites.