The 10MB bloom filter was processed successfully. It definitely cleaned up more than before, but unfortunately it left far more than 10% behind.

2024-04-30T16:33:38Z INFO retain Prepared to run a Retain request. {"Process": "storagenode", "cachePath": "config/retain", "Created Before": "2024-04-23T17:59:59Z", "Filter Size": 10000003, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2024-04-30T21:45:51Z INFO retain Moved pieces to trash during retain {"Process": "storagenode", "cachePath": "config/retain", "Deleted pieces": 7775774, "Failed to delete": 0, "Pieces failed to read": 0, "Pieces count": 34133480, "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Duration": "5h12m12.637650404s", "Retain Status": "enabled"}

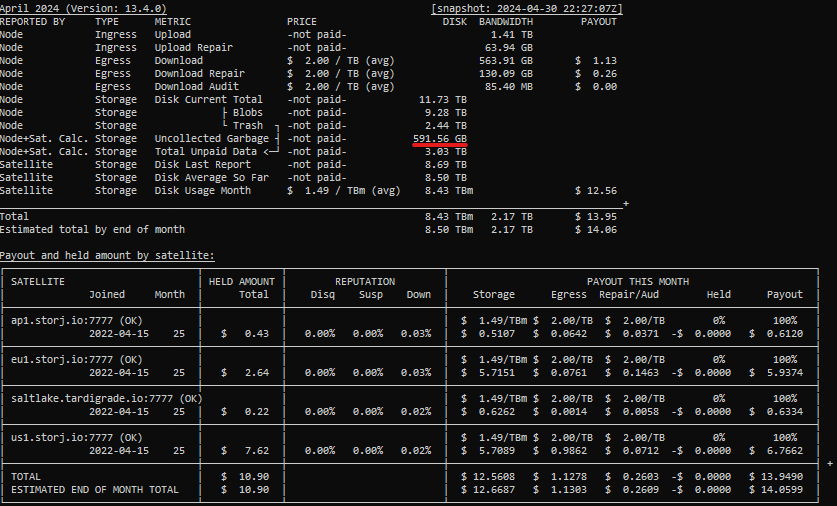

Prior to the run I had about 1.85TB of uncollected garbage, but the run left about 592GB of it behind.

In your PM you mentioned the satellite has 22,929,122 pieces for this node. My node saw 34,133,480, so ideally it would have deleted 11,204,358 pieces. It only removed 7,775,774, or about 69% (nice), leaving 31% behind.

Side note: 592GB/1.85TB = 32%, which suggests that the way I derive uncollected garbage in the earnings calculator is quite accurate.
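For anyone who wants to check the numbers, here is the arithmetic above as a quick Python sketch. The piece counts come straight from the retain log and the PM; the variable names are just mine, and the sizes are the rounded figures from this post in decimal units.

```python
# Figures copied from the retain log and the PM quoted in this post.
node_pieces = 34_133_480       # "Pieces count" seen by the node
satellite_pieces = 22_929_122  # piece count the satellite reported
deleted_pieces = 7_775_774     # "Deleted pieces" in the retain log

# Pieces the node holds that the satellite no longer knows about.
garbage_pieces = node_pieces - satellite_pieces
removed_fraction = deleted_pieces / garbage_pieces
left_fraction = 1 - removed_fraction

# Size-based estimate from the earnings calculator (rounded, in GB).
garbage_before_gb = 1850
garbage_after_gb = 592
left_fraction_by_size = garbage_after_gb / garbage_before_gb

print(f"garbage pieces: {garbage_pieces:,}")
print(f"removed: {removed_fraction:.1%}, left behind: {left_fraction:.1%}")
print(f"left behind by size: {left_fraction_by_size:.1%}")
```

The piece-based and size-based fractions are computed independently, which is why their agreement is a useful sanity check.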

Are sizes larger than 10MB planned? If we’re already bumping up against that limit, it seems insufficient long term.
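To put a rough number on that, here is a back-of-the-envelope sketch using the textbook bloom filter formula. It assumes an idealized filter with the optimal number of hash functions, which may not match what the satellite and storagenode actually implement, so treat the output as a ballpark only. The function names are hypothetical and not taken from the Storj codebase.

```python
import math

LN2_SQUARED = math.log(2) ** 2


def false_positive_rate(filter_bytes: int, pieces: int) -> float:
    """Idealized false positive rate, assuming an optimal hash count."""
    bits_per_piece = filter_bytes * 8 / pieces
    return math.exp(-bits_per_piece * LN2_SQUARED)


def bytes_for_rate(pieces: int, target_rate: float) -> int:
    """Filter size in bytes needed for target_rate under the same assumption."""
    bits = -pieces * math.log(target_rate) / LN2_SQUARED
    return math.ceil(bits / 8)


# Values from the retain log and the PM quoted in this post.
filter_bytes = 10_000_003
satellite_pieces = 22_929_122

print(f"estimated rate at 10MB: {false_positive_rate(filter_bytes, satellite_pieces):.1%}")
print(f"bytes needed for 10%: {bytes_for_rate(satellite_pieces, 0.10):,}")
```

Under these assumptions the false positive rate is the fraction of garbage that survives a run, so even the idealized model says a 10MB filter cannot reach 10% at this piece count. The gap between the model and the 31% I actually saw could come from implementation details or from the piece count changing between filter creation and the run.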