I’ve had this open in a tab ever since you posted it, but never got around to doing an in-depth read until now. I guess the upside is that there is now quite a bit of feedback from other Storj people on the page as well.

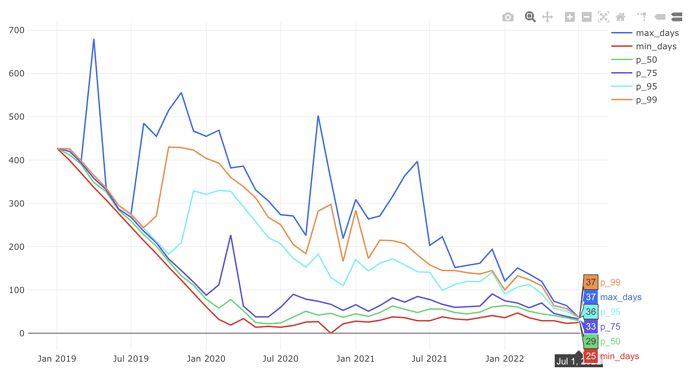

See this redash graph of how long it takes nodes to get vetted https://redash.datasci.storj.io/queries/2941/source#4683

Is this something you could share? The link isn’t public. From my own experience the three customer satellites take about a month and a half and the other three all take over 6 months currently. So I see the urgency on speeding up that process.

- If each item results in a successful response (not offline or timeout), continue. Else, skip until the next iteration of the chore?

This may give the node too much leeway in terms of getting away with missing data by timing out.

- If a pending audit was attempted and reverification count is increased, don’t try it again for x amount of time. Add field for last_attempted timestamp

Depending on the timeframe, this would spread out failures evenly. Currently the audit scores have a short memory and rely on the bad luck of consecutive failures to drop all the way below the 60% threshold. Spreading out failures is currently an excellent way to avoid disqualification, even if the node has suffered significant data loss. For context, currently even nodes with 15% loss would survive audits when the failures are randomly ordered. If the errors are spread out, it wouldn’t surprise me if that survivable percentage nearly doubles.
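To illustrate the short-memory effect, here is a minimal Python sketch. It assumes the score follows a beta-reputation style update with a forgetting factor of 0.95 and a weight of 1, and that the node starts from an established perfect history. The function name, the starting values and the failure patterns are my own assumptions for illustration, not the satellite's actual code.

```python
DQ_THRESHOLD = 0.6  # the 60% disqualification threshold mentioned above


def lowest_score(outcomes, lam=0.95, weight=1.0):
    """Return the lowest score reached while replaying audit outcomes.

    Assumed model: alpha and beta both decay by `lam` on every audit;
    a success adds `weight` to alpha, a failure adds `weight` to beta.
    """
    # Assumption: an established node with a perfect history so far.
    alpha, beta = weight / (1.0 - lam), 0.0
    lowest = 1.0
    for success in outcomes:
        alpha = lam * alpha + (weight if success else 0.0)
        beta = lam * beta + (0.0 if success else weight)
        lowest = min(lowest, alpha / (alpha + beta))
    return lowest


# The same 10 failures out of 100 audits, in two different orders.
clustered = [False] * 10 + [True] * 90
spread = [i % 10 != 0 for i in range(100)]

print(f"clustered: {lowest_score(clustered):.3f}")
print(f"spread:    {lowest_score(spread):.3f}")
```

Under these assumptions the clustered run dips just below the threshold, while the spread run stays above 0.87, even though both nodes failed exactly the same number of audits.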

I think the solution to this has already been discussed here: Tuning audit scoring - #72 by thepaul

The suggested changes we arrived at there put the threshold much closer to the percentage of failure that is actually acceptable and make the memory for the score longer. With that setup, the order of failures doesn’t really matter anymore, as the memory of the score is long enough for spread-out failures to still be remembered.
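A rough way to see why a longer memory makes the ordering irrelevant is sketched below in Python. It uses the same assumed beta-reputation style update and compares a forgetting factor of 0.95 with 0.999. The value 0.999 is only an example of a "longer memory"; I am not claiming it is the exact number from that thread, and the helper names are hypothetical.

```python
def lowest_score(outcomes, lam):
    """Lowest score reached while replaying audits (assumed update rule)."""
    # Assumption: start from an established node with a perfect history.
    alpha, beta = 1.0 / (1.0 - lam), 0.0
    lowest = 1.0
    for success in outcomes:
        alpha = lam * alpha + (1.0 if success else 0.0)
        beta = lam * beta + (0.0 if success else 1.0)
        lowest = min(lowest, alpha / (alpha + beta))
    return lowest


def ordering_gap(lam):
    """How much better a node fares by spreading 10 failures over 100 audits."""
    clustered = [False] * 10 + [True] * 90
    spread = [i % 10 != 0 for i in range(100)]
    return lowest_score(spread, lam) - lowest_score(clustered, lam)


for lam in (0.95, 0.999):
    print(f"lambda={lam}: gap between orderings = {ordering_gap(lam):.4f}")
```

With the short memory the two orderings differ by more than a quarter of the score range. With the longer memory the difference is well under 0.001, so there is little left to gain from spreading failures out.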

I guess what I’m saying is that implementing those changes is probably a requirement before implementing the pending audit refactor. Otherwise you open yourself up to node operators abusing this change by just timing out audits if they don’t have the file and using the spreading out to survive much worse data losses than otherwise possible.

That said, some spreading is required. If you collect stalled audits for a while and then try them all at once and fail all audits at the same time, you get artificial clustering, which would cause the opposite problem of clustering failures together and disqualifying the node too soon.

A possible solution is to not group the audits by node id at all and just tackle them randomly. Though this solution is still not ideal as the different processes would still cause artificial spreading or clustering depending on how much work there is in both processes and how fast regular audits and reverifies are performed in relation to each other. Implementing the audit score changes would help in any scenario.
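As a sketch of what "not grouping by node ID" could look like together with the proposed last_attempted field, here is a small Python example. The `PendingAudit` type, the `next_batch` function and the cooldown and batch size parameters are all hypothetical names I made up for illustration; the real reverify queue may look quite different.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class PendingAudit:
    node_id: str
    piece_id: str
    reverify_count: int = 0
    last_attempted: Optional[datetime] = None  # None means never attempted


def next_batch(pending, now, cooldown, batch_size, rng=random):
    """Pick pending audits in random order, ignoring which node they belong to.

    Items attempted less than `cooldown` ago are skipped for this round.
    """
    eligible = [
        p for p in pending
        if p.last_attempted is None or now - p.last_attempted >= cooldown
    ]
    rng.shuffle(eligible)  # random order instead of grouping by node ID
    return eligible[:batch_size]


now = datetime(2000, 1, 1, 12, 0)
pending = [
    PendingAudit("node-a", "piece-1"),
    PendingAudit("node-a", "piece-2", 1, now - timedelta(minutes=5)),
    PendingAudit("node-b", "piece-3", 2, now - timedelta(hours=3)),
]
batch = next_batch(pending, now, cooldown=timedelta(hours=1), batch_size=10)
print(sorted(p.piece_id for p in batch))
```

Here piece-2 is skipped because it was attempted five minutes ago, while the other two are eligible. This only removes the per-node grouping; it does not solve the timing interaction between regular audits and reverifies described above.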

paul cannon

Jul 29

I’m going to suggest that we add AND NOT contained to our default node selection query. With this new change, nodes should only be in containment for about as long as they are offline. It’s in everybody’s interest not to send new data to a node which we think is offline.

I think this is a good suggestion. It was my understanding that containment isn’t necessarily for offline nodes, but for unresponsive online nodes. These nodes will almost certainly also not respond to customers though. Not sending them new data doesn’t just prevent uplinks from encountering more failures, it also gives a stalled node a break to possibly recover from being overloaded.
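For anyone who wants to picture the effect of that extra condition, here is a toy Python example using an in-memory SQLite database. The `nodes` table, its `online` and `contained` columns and the `select_upload_candidates` function are hypothetical stand-ins; the real satellite schema and selection query are more involved.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical, heavily simplified schema for illustration only.
conn.execute(
    "CREATE TABLE nodes ("
    "id TEXT PRIMARY KEY, online INTEGER NOT NULL, contained INTEGER NOT NULL)"
)
conn.executemany(
    "INSERT INTO nodes VALUES (?, ?, ?)",
    [
        ("node-a", 1, 0),  # online and responsive
        ("node-b", 1, 1),  # online but stalled, currently in containment
        ("node-c", 0, 0),  # offline
    ],
)


def select_upload_candidates(conn):
    """Return node IDs eligible for new data, excluding contained nodes."""
    rows = conn.execute(
        "SELECT id FROM nodes WHERE online AND NOT contained ORDER BY id"
    ).fetchall()
    return [row[0] for row in rows]


print(select_upload_candidates(conn))
```

Only node-a is returned. The stalled node-b gets no new uploads until it leaves containment, which is the breathing room I mean above.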

If I remember correctly, auditing new unvetted nodes is prioritized by separate processes. Scaling of those should probably depend on the number of unvetted nodes and the average time in vetting. However, scaling for normal audits should depend on the number of nodes and the amount of data on them. This is probably out of scope for this blueprint. But I was curious what the ideas around that scaling were? Is this going to be a manual process or automated in some way? And will it differentiate between audits for unvetted nodes and normal audits?

Sorry for the slow response on this. But I hope this can still be helpful.