It's too full. That's the problem.

Do you have 4 folders in blobs, or 6-7?
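You can answer this by listing the subfolders of `blobs`, because each satellite the node has stored data for gets its own folder there. Below is a minimal Python sketch. The path in the usage comment is an assumption: inside the container, the logs further down refer to `config/storage/blobs`. `list_blob_folders` is a hypothetical helper name.

```python
import os


def list_blob_folders(blobs_dir: str) -> list[str]:
    """Return the sorted names of the subdirectories (one per satellite) in blobs_dir."""
    with os.scandir(blobs_dir) as entries:
        # Only real directories count; stray files or symlinks are ignored.
        return sorted(e.name for e in entries if e.is_dir(follow_symlinks=False))


# Usage (hypothetical path, adjust to where your node's storage lives):
# folders = list_blob_folders("/store/storage/blobs")
# print(len(folders), folders)
```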

If there are more than 4:

Try moving the databases to an ext4-formatted USB stick. Don't use the SD card, and don't leave the stick with its default formatting. The smaller the stick, the shorter it will live.

Just to be sure, check that the cluster (block) size is 4 KiB.
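On ext4, `tune2fs -l /dev/sda1 | grep "Block size"` reports the block size directly. The sketch below runs the same check from Python. It assumes a Unix-like system (`os.statvfs` is not available on Windows) and a mount point such as `/store`. The helper names are hypothetical.

```python
import os


def fs_block_size(path: str) -> int:
    """Return the filesystem block size in bytes, as reported by statvfs for path."""
    return os.statvfs(path).f_bsize


def is_4k(path: str) -> bool:
    # ext4 normally uses 4096-byte blocks; any other value is worth a closer look.
    return fs_block_size(path) == 4096


# Usage (hypothetical mount point):
# print(fs_block_size("/store"), is_4k("/store"))
```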

As soon as you have enough free space (~1 TB), at least check the fragmentation, and defragment if needed.
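The "get enough free space first" rule of thumb is easy to script. After that, you can run the actual fragmentation check on ext4 with `e4defrag -c` (from e2fsprogs) against the mount point. This sketch assumes the ~1 TB threshold from the post, counted in decimal TB. The helper name is hypothetical.

```python
import shutil

ONE_TB = 10**12  # decimal terabyte, the way drive vendors count


def enough_free_for_defrag(path: str, needed_bytes: int = ONE_TB) -> bool:
    """True if the filesystem holding path has at least needed_bytes available."""
    return shutil.disk_usage(path).free >= needed_bytes


# Usage (hypothetical mount point):
# if enough_free_for_defrag("/store"):
#     print("OK to run: e4defrag -c /store")
```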

Regarding decommissioned satellites, you are correct.

This is ext4, so it's unrelated and not applicable here.

With the drive this full, and with the way Storj uses it, there is a relatively small chance that even ext4 will fragment, depending on how long the drive has been in use. So why not check it? He can use every bit of I/O he can get.

Thanks, there were more than 4 blobs folders… it's deleting the data now:

```
root@raspberrypi:~# docker exec -it node2 ./storagenode forget-satellite --all-untrusted --config-dir /app/config --identity-dir /app/identity
2024-03-28T14:15:47Z INFO Configuration loaded {"process": "storagenode", "Location": "/app/config/config.yaml"}
2024-03-28T14:15:47Z INFO Anonymized tracing enabled {"process": "storagenode"}
2024-03-28T14:15:47Z INFO Identity loaded. {"process": "storagenode", "Node ID": "1zUu9TXz7Qq5VhCyCyA7aa7pZx9P2mn4ft47iNpCQw4m185khu"}
2024-03-28T14:15:47Z INFO Removing satellite from trust cache. {"process": "storagenode", "satelliteID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}
2024-03-28T14:15:47Z INFO Cleaning up satellite data. {"process": "storagenode", "satelliteID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}
```

It will take some time: after ~2 minutes it has deleted about 3 GB.
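For a rough sense of the deletion rate, ~3 GB in ~2 minutes is about 25 MB/s. The 1 TB figure in the sketch below is purely hypothetical. Deletion speed also depends far more on the number of files than on their total size, so treat the projection as a ballpark only.

```python
def rate_bytes_per_s(deleted_bytes: float, seconds: float) -> float:
    return deleted_bytes / seconds


def hours_to_delete(total_bytes: float, bytes_per_s: float) -> float:
    return total_bytes / bytes_per_s / 3600


rate = rate_bytes_per_s(3e9, 120)  # ~3 GB in ~2 minutes, from the post
print(f"{rate / 1e6:.0f} MB/s")  # prints "25 MB/s"
# Hypothetical: how long 1 TB of old-satellite data would take at a constant rate.
print(f"{hours_to_delete(1e12, rate):.1f} h")  # prints "11.1 h"
```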

```
/dev/sda1 9.1T 9.0T 36G 100% /store
```

Edit: it's done:

```
root@raspberrypi:~# docker exec -it node2 ./storagenode forget-satellite --all-untrusted --config-dir /app/config --identity-dir /app/identity
2024-03-28T14:15:47Z INFO Configuration loaded {"process": "storagenode", "Location": "/app/config/config.yaml"}
2024-03-28T14:15:47Z INFO Anonymized tracing enabled {"process": "storagenode"}
2024-03-28T14:15:47Z INFO Identity loaded. {"process": "storagenode", "Node ID": "1zUu9TXz7Qq5VhCyCyA7aa7pZx9P2mn4ft47iNpCQw4m185khu"}
2024-03-28T14:15:47Z INFO Removing satellite from trust cache. {"process": "storagenode", "satelliteID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}
2024-03-28T14:15:47Z INFO Cleaning up satellite data. {"process": "storagenode", "satelliteID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}
2024-03-28T14:25:49Z INFO Cleaning up the trash. {"process": "storagenode", "satelliteID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}
2024-03-28T14:26:28Z INFO Removing satellite info from reputation DB. {"process": "storagenode", "satelliteID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}
2024-03-28T14:26:28Z INFO Removing satellite v0 pieces if any. {"process": "storagenode", "satelliteID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}
2024-03-28T14:26:29Z INFO Removing satellite from satellites DB. {"process": "storagenode", "satelliteID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}
2024-03-28T14:26:29Z INFO Removing satellite from trust cache. {"process": "storagenode", "satelliteID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}
2024-03-28T14:26:29Z INFO Cleaning up satellite data. {"process": "storagenode", "satelliteID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}
2024-03-28T14:28:00Z INFO Cleaning up the trash. {"process": "storagenode", "satelliteID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}
2024-03-28T14:28:10Z INFO Removing satellite info from reputation DB. {"process": "storagenode", "satelliteID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}
2024-03-28T14:28:10Z INFO Removing satellite v0 pieces if any. {"process": "storagenode", "satelliteID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}
2024-03-28T14:28:10Z INFO Removing satellite from satellites DB. {"process": "storagenode", "satelliteID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}
```
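The timestamps in this output show how long each satellite's cleanup took. The sketch below parses lines in the format shown above: timestamp, level, message, then JSON fields including `"satelliteID"`. The function name is hypothetical. Each span only covers the time between the first and last log line that mentions a given satellite.

```python
import re
from datetime import datetime

# Timestamp, level, then anything up to the "satelliteID" field of the JSON part.
LINE_RE = re.compile(
    r'^(?P<ts>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}Z)\s+[A-Z]+\s+.*"satelliteID": "(?P<sat>[^"]+)"'
)


def cleanup_durations(lines):
    """Map satelliteID -> seconds between its first and last matching log line."""
    first, last = {}, {}
    for line in lines:
        m = LINE_RE.match(line)
        if not m:
            continue  # e.g. "Configuration loaded" has no satelliteID
        ts = datetime.strptime(m["ts"], "%Y-%m-%dT%H:%M:%SZ")
        first.setdefault(m["sat"], ts)
        last[m["sat"]] = ts
    return {sat: (last[sat] - first[sat]).total_seconds() for sat in first}


# Usage (hypothetical file holding the output above):
# with open("forget-satellite.log") as f:
#     for sat, secs in cleanup_durations(f).items():
#         print(sat, f"{secs / 60:.1f} min")
```

For the output above, this gives about 10.7 minutes for the first satellite and under 2 minutes for the second.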

But only a few GB were deleted:

```
/dev/sda1 9.1T 9.0T 43G 100% /store
```
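The two `df` readings can be compared directly. `df -h` uses powers of 1024, so going from `36G` to `43G` available is 7 GiB freed on a 9.1 TiB volume. Any other writes between the two readings also affect this number. The sketch assumes the default `df -h` column order (Filesystem, Size, Used, Avail, Use%, Mounted on). The helper names are hypothetical.

```python
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_df_size(text: str) -> float:
    """Convert a `df -h` size such as '36G' or '9.1T' to bytes (powers of 1024)."""
    unit = text[-1].upper()
    if unit in UNITS:
        return float(text[:-1]) * UNITS[unit]
    return float(text)  # plain number without a suffix


def avail_bytes(df_line: str) -> float:
    # Columns: Filesystem Size Used Avail Use% Mounted-on
    return parse_df_size(df_line.split()[3])


before = "/dev/sda1 9.1T 9.0T 36G 100% /store"
after = "/dev/sda1 9.1T 9.0T 43G 100% /store"
freed = avail_bytes(after) - avail_bytes(before)
print(f"freed ~{freed / 1024**3:.0f} GiB")  # prints "freed ~7 GiB"
```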

I restarted the node and will see if it frees more space. Any ideas are welcome.

Edit 2: I found some filewalker errors:

2024-03-28T14:30:39Z ERROR pieces failed to lazywalk space used by satellite {"process": "storagenode", "error": "lazyfilewalker: signal: killed", "errorVerbose": "lazyfilewalker: signal: killed\n\tstorj.io/storj/storagenode/pieces/lazyfi83\n\tstorj.io/storj/storagenode/pieces/lazyfilewalker.(*Supervisor).WalkAndComputeSpaceUsedBySatellite:105\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:717\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:57\n\lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}

2024-03-28T14:30:39Z INFO lazyfilewalker.used-space-filewalker starting subprocess {"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs"}

2024-03-28T14:30:39Z ERROR lazyfilewalker.used-space-filewalker failed to start subprocess {"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "error": "context canceled"}

2024-03-28T14:30:39Z ERROR pieces failed to lazywalk space used by satellite {"process": "storagenode", "error": "lazyfilewalker: context canceled", "errorVerbose": "lazyfilewalker: context canceled\n\tstorj.io/storj/storagenode/pieces/larun:71\n\tstorj.io/storj/storagenode/pieces/lazyfilewalker.(*Supervisor).WalkAndComputeSpaceUsedBySatellite:105\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:717\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:5ate/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs"}

2024-03-28T14:30:39Z INFO lazyfilewalker.used-space-filewalker starting subprocess {"process": "storagenode", "satelliteID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE"}

2024-03-28T14:30:39Z ERROR lazyfilewalker.used-space-filewalker failed to start subprocess {"process": "storagenode", "satelliteID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "error": "context canceled"}

2024-03-28T14:30:39Z ERROR pieces failed to lazywalk space used by satellite {"process": "storagenode", "error": "lazyfilewalker: context canceled", "errorVerbose": "lazyfilewalker: context canceled\n\tstorj.io/storj/storagenode/pieces/larun:71\n\tstorj.io/storj/storagenode/pieces/lazyfilewalker.(*Supervisor).WalkAndComputeSpaceUsedBySatellite:105\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:717\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:5ate/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE"}

2024-03-28T14:30:39Z INFO lazyfilewalker.used-space-filewalker starting subprocess {"process": "storagenode", "satelliteID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}

2024-03-28T14:30:39Z ERROR lazyfilewalker.used-space-filewalker failed to start subprocess {"process": "storagenode", "satelliteID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo", "error": "context canceled"}

2024-03-28T14:30:39Z ERROR pieces failed to lazywalk space used by satellite {"process": "storagenode", "error": "lazyfilewalker: context canceled", "errorVerbose": "lazyfilewalker: context canceled\n\tstorj.io/storj/storagenode/pieces/larun:71\n\tstorj.io/storj/storagenode/pieces/lazyfilewalker.(*Supervisor).WalkAndComputeSpaceUsedBySatellite:105\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:717\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:5ate/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75", "Satellite ID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}

2024-03-28T14:30:39Z ERROR pieces:trash emptying trash failed {"process": "storagenode", "error": "pieces error: filestore error: context canceled", "errorVerbose": "pieces error: filestore error: context canceled\n\tstorj.io/storj/storage.(*blobStore).EmptyTrash:176\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).EmptyTrash:316\n\tstorj.io/storj/storagenode/pieces.(*Store).EmptyTrash:416\n\tstorj.io/storj/storagenode/pieces.(*TrashChore).Run.func1.1:83\n\tstorj.io/common/synnc1:89"}

2024-03-28T14:30:39Z INFO lazyfilewalker.used-space-filewalker starting subprocess {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2024-03-28T14:30:39Z ERROR lazyfilewalker.used-space-filewalker failed to start subprocess {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "error": "context canceled"}

2024-03-28T14:30:39Z ERROR pieces failed to lazywalk space used by satellite {"process": "storagenode", "error": "lazyfilewalker: context canceled", "errorVerbose": "lazyfilewalker: context canceled\n\tstorj.io/storj/storagenode/pieces/larun:71\n\tstorj.io/storj/storagenode/pieces/lazyfilewalker.(*Supervisor).WalkAndComputeSpaceUsedBySatellite:105\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:717\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:5ate/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2024-03-28T14:30:39Z ERROR piecestore:cache error getting current used space: {"process": "storagenode", "error": "filewalker: readdirent config/storage/blobs/6r2fgwqz3manwt4aogq343bfkh2n5vvg4ohqqgggrrunaaaaaaaa/hr: no such file orcontext canceled; filewalker: context canceled; filewalker: context canceled; filewalker: v0pieceinfodb: context canceled; filewalker: context canceled", "errorVerbose": "group:\n--- filewalker: readdirent config/storage/blobs/6r2fgwqz3manwt4aogq343aaaaaa/hr: no such file or directory\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).Spae:726\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.: context canceled\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySastorj/storagenode/pieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75\n--- file\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:726\n\tstopieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75\n--- filewalker: context 
ca/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:726\n\tstorj.io/storj/storagice).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75\n--- filewalker: v0pieceinfodb: context canceoragenode/storagenodedb.(*v0PieceInfoDB).getAllPiecesOwnedBy:68\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).WalkSatelliteV0Pieces:97\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:66\n\tstorj.io/storj/storage).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:726\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75\n--- filewalker: context canceled\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:726\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "contact.external-address"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "storage.allocated-disk-space"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "console.address"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "operator.wallet"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "operator.email"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "healthcheck.enabled"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "operator.wallet-features"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "server.private-address"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "server.address"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "storage.allocated-bandwidth"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "healthcheck.details"}

2024-03-28T14:30:50Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "storage2.trust.exclusions"}

2024-03-28T14:30:50Z INFO Invalid configuration file value for key {"Process": "storagenode-updater", "Key": "log.development"}

2024-03-28T14:30:53Z INFO lazyfilewalker.used-space-filewalker starting subprocess {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}

2024-03-28T14:30:53Z INFO lazyfilewalker.used-space-filewalker subprocess started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}

2024-03-28T14:30:53Z INFO lazyfilewalker.used-space-filewalker.subprocess Database started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode"}

2024-03-28T14:30:53Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode"}

You must be joking?!

I did not say it's the only problem.

I'm just saying it has been the same for me for more than a month now.

I will let it run for one more week and then all my nodes will go offline. It is just a joke.

All the comments say it's your config, or that you have errors with the filewalkers.

No, I do not have any filewalker errors, and the config is as shipped…

I've been running v1.100 for over a week now, with no change.

The trash folders are empty, and there are no additional blobs folders for old satellites.

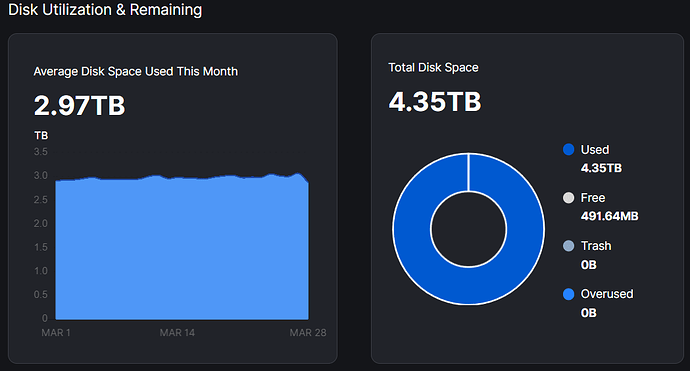

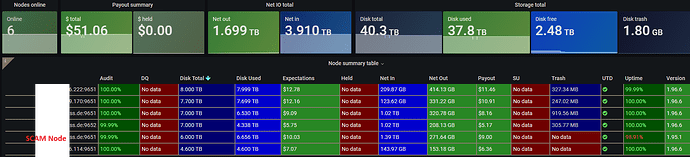

Like I said in an earlier post, it is a business, and one side does not care about its business partner. If you are using 4.35 TB of my space, you will have to pay for it and not for an imaginary value the satellite thinks it is using.

There is definitely a bug in the software that is just not getting fixed, because fixing it would cost money, and on top of that they would even have to pay me more for the correct space allocation.

I am missing more than 30% of my payouts now!

I feel everything you say and agree 100%.

I will wait about 1-2 weeks for a fix. If it's not fixed, this scam node gets deleted with all its data and I'll start a new node.

All my other nodes work nicely, but this scam node is really annoying.

Has anyone resolved this yet? I've read most of the comments, and it still doesn't seem to be resolved.

What I've seen and tried so far:

- No filewalker issues in a week.
- Only a couple of database issues on restart, but none after the first 1-2 minutes.
- I disabled the lazy filewalker.
- Removing the satellites only freed ~200 GB.
- Storj is still taking up around 4 TB more than it should.

> and not for an imaginary value the satellite thinks it is using.

Have you verified that the values on the left correspond to the satellite data?

As far as I can see, they are both based on the filewalker (see the Storagenode section of this wiki: Debugging space usage discrepancies). Also see this comment: storj/storagenode/pieces/store.go at 52881d2db3732c07e3975237ff987e9f7c01bdf2 · storj/storj · GitHub (this is an estimation!). If you are interested in the space usage calculated by the satellite, I would recommend querying the SQLite database (see the mentioned wiki page).
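Here is a minimal sketch of such a query using Python's built-in `sqlite3` module. The table and column names (`storage_usage`, `satellite_id`, `at_rest_total`, `interval_start`) are assumptions about the storagenode's `storage_usage.db`. Check them against the query on the wiki page, or with `.schema` in the `sqlite3` shell, before relying on the output. Run it against a copy of the database rather than the live file. The function name is hypothetical, and the values are returned raw, without unit conversion.

```python
import sqlite3

# ASSUMPTION: table/column names; verify against the wiki query or `.schema`.
QUERY = """
SELECT hex(satellite_id), at_rest_total, interval_start
FROM storage_usage
ORDER BY interval_start
"""


def latest_usage_per_satellite(db_path: str) -> dict[str, float]:
    """Return satellite id (hex) -> at_rest_total of its most recent interval."""
    # Open read-only so the script cannot modify the database.
    conn = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
    try:
        latest = {}
        for sat_hex, at_rest_total, _interval in conn.execute(QUERY):
            latest[sat_hex] = at_rest_total  # later intervals overwrite earlier ones
        return latest
    finally:
        conn.close()


# Usage (hypothetical path to a *copy* of the database):
# print(latest_usage_per_satellite("/tmp/storage_usage.db"))
```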

Because it seems that the node and the satellite each calculate these values on their own, and differently.

I don't know why the satellite values can't be shown in the dashboard as well. That would make comparing them much easier.

What's the error after the crash?

I never checked the error log, but it would be whatever error is associated with the file system being 100% full while the storage node is told, via the config file, that more storage is still available.

I would suggest checking your log to see why it crashed. It could be a readability check timeout and/or a writability check timeout. The node will crash to protect itself from disqualification if it is unable to read/write data from/to the disk within the configured timeout.
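To find out why the node crashed, look at the last ERROR or FATAL lines before it stopped. This minimal sketch assumes the log layout seen in this thread (timestamp, then level). The input could come from, for example, `docker logs storagenode 2>&1` saved to a file. `last_problems` is a hypothetical helper name.

```python
import re

# Matches the level field of lines like "2024-03-28T14:30:39Z ERROR ...".
LEVEL_RE = re.compile(r"^\S+\s+(FATAL|ERROR)\s")


def last_problems(lines, n=5):
    """Return the last n ERROR/FATAL lines, oldest first."""
    hits = [line.rstrip("\n") for line in lines if LEVEL_RE.match(line)]
    return hits[-n:]


# Usage (hypothetical file name):
# with open("node.log") as f:
#     for line in last_problems(f):
#         print(line)
```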

> context canceled

This is a timeout error: your disk is unable to keep up. I would suggest disabling the lazy mode for the filewalkers.

You may disable the lazy filewalker to raise its priority to normal and speed up the process:

```
pieces.enable-lazy-filewalker: false
```

Save the config and restart the node to see whether it helps avoid the timeout errors. Alternatively, you may pass it as a command-line option after the image name in your docker run command:

```
docker run -it -d ... \
    ...
    storjlabs/storagenode:latest \
    --pieces.enable-lazy-filewalker=false
```
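If you prefer to script the config change instead of editing `config.yaml` by hand, here is a minimal sketch. It assumes the flat `key: value` layout the storagenode config uses, as in `pieces.enable-lazy-filewalker: false`. It is not a full YAML parser, and `set_config_key` is a hypothetical helper. Restart the node afterwards for the change to take effect.

```python
import re


def set_config_key(config_text: str, key: str, value: str) -> str:
    """Set `key: value` in a flat config.yaml, replacing an existing
    (possibly commented-out) line or appending a new one."""
    pattern = re.compile(rf"^#?\s*{re.escape(key)}:.*$", re.MULTILINE)
    new_line = f"{key}: {value}"
    if pattern.search(config_text):
        # A function replacement avoids backslash interpretation in new_line.
        return pattern.sub(lambda _m: new_line, config_text, count=1)
    if config_text and not config_text.endswith("\n"):
        config_text += "\n"
    return config_text + new_line + "\n"


# Usage (hypothetical path):
# with open("config.yaml") as f:
#     text = f.read()
# with open("config.yaml", "w") as f:
#     f.write(set_config_key(text, "pieces.enable-lazy-filewalker", "false"))
```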

Do you use exFAT? Or what filesystem is it?

Is it a VM?

How is the disk connected?

Is it a network connected disk?

18 posts were merged into an existing topic: Average Disk Space Used This Month went to Zero

Thanks, I tried it, but almost immediately after restarting the node I got this:

2024-03-29T05:24:01Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "pieces.enable-lazy-filewalker"}

2024-03-29T05:25:23Z ERROR piecestore:cache error getting current used space: {"process": "storagenode", "error": "filewalker: context canceled; filewalker: context canceled; filewalker: context canceled; filewalker: context canceled", "errorVerbose": "group:\n--- filewalker: context canceled\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:726\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75\n--- filewalker: context canceled\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:726\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75\n--- filewalker: context canceled\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:726\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75\n--- filewalker: context 
canceled\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkSatellitePieces:69\n\tstorj.io/storj/storagenode/pieces.(*FileWalker).WalkAndComputeSpaceUsedBySatellite:74\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:726\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:57\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-03-29T06:08:53Z INFO Invalid configuration file key {"Process": "storagenode-updater", "Key": "pieces.enable-lazy-filewalker"}

Edit:

I changed the filewalker config back to the default because I couldn't see the filewalker process in iotop.

Is the filewalker supposed to check the used space and fix the error, or what is its goal?

I just saw two filewalkers running at the same time in iotop for the first time:

Damn slow: about 7 MB/s for both together. I'll wait some days/weeks now to see if they can fix it themselves.

> the filewalker should check for used space and fix the error or what is his goal?

It should calculate the used space and update the databases, but it will only do so if it doesn't fail.

You need to check that all filewalkers have finished successfully for all trusted satellites:

Logs should be at least at the info level. Then check each of these:

- Used-space filewalker: `docker logs storagenode -f 2>&1 | grep "used-space-filewalker" | grep -E "started|finished"`
- GC filewalker: `docker logs storagenode -f 2>&1 | grep "gc-filewalker" | grep -E "started|finished"`
- Retain: `docker logs storagenode -f 2>&1 | grep "retain"`
- Collector: `docker logs storagenode -f 2>&1 | grep "collector"`
- Trash: `docker logs storagenode -f 2>&1 | grep "pieces:trash"`
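The same started/finished check can be automated over a saved log, such as the output of `docker logs storagenode 2>&1`. This sketch assumes the log lines carry `"satelliteID": "<id>"`, as in the logs earlier in this thread. Some error lines use `"Satellite ID"` instead, and those are skipped. The function name is hypothetical. If a satellite is still listed after the walk should have ended, its walker either has not finished or failed.

```python
import re

SAT_RE = re.compile(r'"satelliteID": "([^"]+)"')


def unfinished_walkers(lines, marker="used-space-filewalker"):
    """Return satellite IDs whose `marker` walker logged 'started' but no later 'finished'."""
    running = set()
    for line in lines:
        if marker not in line:
            continue
        m = SAT_RE.search(line)
        if not m:
            continue
        if "finished" in line:
            running.discard(m.group(1))
        elif "started" in line:  # "starting subprocess" does not match
            running.add(m.group(1))
    return sorted(running)


# Usage (hypothetical file name):
# with open("node.log") as f:
#     print(unfinished_walkers(f, "gc-filewalker"))
```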

I can see the filewalker running:

At this speed of about 7 MB/s, it will take about 14 days to get through the 9 TB… I'll just wait 2 weeks from now, and if it's not fixed I'll delete the whole node and start a new one.
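The estimate is easy to check. This assumes the walker had to read all 9 TB (decimal) at a steady 7 MB/s:

```python
def days_to_read(total_bytes: float, bytes_per_s: float) -> float:
    """Days needed to read total_bytes at a constant bytes_per_s."""
    return total_bytes / bytes_per_s / 86400


print(f"{days_to_read(9e12, 7e6):.1f} days")  # prints "14.9 days"
```

That is roughly two weeks, which is consistent with the estimate, but only if every byte really has to be read.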

I believe the filewalker only iterates over file metadata, not the entire file content, so it should finish a lot sooner than 14 days. For about 10 TB, one of my nodes takes about 2 days with lazy mode on.
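To illustrate the point, a directory walk that only needs file sizes can get them from `stat()` metadata without opening any file. Its cost therefore scales with the number of files rather than the number of bytes stored. This is a generic Python sketch, not Storj's actual filewalker, and the function name is hypothetical.

```python
import os


def total_size_from_metadata(root: str) -> tuple[int, int]:
    """Walk root and return (file count, total bytes) using only stat() metadata.

    File contents are never opened or read, so the work done is directory
    listing and inode lookups, not data transfer.
    """
    files = total = 0
    stack = [root]
    while stack:
        with os.scandir(stack.pop()) as entries:
            for entry in entries:
                if entry.is_dir(follow_symlinks=False):
                    stack.append(entry.path)
                elif entry.is_file(follow_symlinks=False):
                    files += 1
                    total += entry.stat(follow_symlinks=False).st_size
    return files, total


# Usage (hypothetical path):
# print(total_size_from_metadata("/store/storage/blobs"))
```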