I know what you are saying. The network is trying to avoid storing copies of the data in the same physical location; if 80 copies are stored in one physical location, it’s nonsense.

This is the wrong policy: it is hard for the network to detect where a node is really located. The policy doesn’t actually work because it’s easy to avoid.

The data won’t be secured under this policy because it doesn’t actually work; as far as I know, many operators are running multiple nodes.

It would be much better to let operators report their real location and to update the upload rules: make a one-physical-location, one-copy policy instead of the /24 policy.

I think it’s way easier to report a wrong location than to get your hands on many different IP subnets when you are a lone node operator.

And if you try hard enough you can circumvent any rule. But the mass secures the network. So if anyone would just break the rules (and the ToS they agreed to) it would be a bigger problem.

And if the Storj team really wants to, they can implement other methods to make sure you are playing by the rules.

There’s no data to “farm”; please look at the actual data usage of the whole network before starting to invest.

Yes, I noticed that; it’s a long-term investment. Since Storj hasn’t gone mainstream yet, there is a lot of room to grow.

I’m not hopeful. I’m more interested in whether Storj is able to get SOC 2, cc @Alexey

I don’t think SOC 2 is so hard to get: just find a relevant consulting company and pay.

SOC 2 is not that important; only big companies care about it. Small companies and personal users have a lot of data too, and they care about price rather than SOC 2. The problem is that most of them don’t know about Storj yet.

The Storj community network lost a 10PB client because it doesn’t have SOC 2, so it is important. If it had it, then I think your 1PB today wouldn’t have a problem filling up.

I wouldn’t say it lost the client. I would rather say it went to the Storj Select network.

But yes, SOC 2 is very important. Even small companies often have SOC 2 requirements.

I wrote that the community network lost it. We are not participating in Select, so we don’t really care about it.

There are no copies.

Any policy and any restriction can be circumvented. The /24 is just a convenience shortcut. The rule is set by policy.
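To make the “/24 shortcut” concrete, here is a minimal sketch of how such a filter could work. It is an illustration only, not Storj’s actual code: the function names `subnet_24` and `pick_one_per_subnet` are made up for this example, and the real node selection takes more factors into account.

```python
import ipaddress
import random


def subnet_24(ip: str) -> str:
    """Return the /24 network an IPv4 address belongs to."""
    # strict=False lets us pass a host address instead of a network address.
    return str(ipaddress.ip_network(f"{ip}/24", strict=False))


def pick_one_per_subnet(node_ips, count, rng=random):
    """Pick up to `count` nodes so that no two share a /24 subnet (hypothetical helper)."""
    by_subnet = {}
    for ip in node_ips:
        by_subnet.setdefault(subnet_24(ip), []).append(ip)
    # Choose distinct subnets first, then one node inside each chosen subnet.
    subnets = rng.sample(sorted(by_subnet), min(count, len(by_subnet)))
    return [rng.choice(by_subnet[s]) for s in subnets]


nodes = ["203.0.113.7", "203.0.113.8", "198.51.100.1"]
print(pick_one_per_subnet(nodes, 3))  # at most one address per /24
```

The filter only sees IP addresses, which is why it is a shortcut: nodes behind one /24 are treated as one location, and nodes spread across subnets are trusted to really be in different places.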

The same way you don’t go stealing from the store just because the lock was easy to pick. “If they wanted me not to steal, they should have used a better lock and put products behind carbon steel cages.”

Yes. Many operators are running multiple nodes. I do too. But I do so while abiding by the policy.

All my nodes are physically in different locations, connected via different ISPs, powered by different grid segments. The only correlation between them is my email address, wallet, and me being the only support. Running multiple nodes is well within ToS.

Running multiple nodes in one location and pretending they are in different ones is not.

Precisely. Everyone shall play by the rules. If everyone breaks them, the network collapses and everyone loses. If someone thinks they are special and can break the rules because they are in the minority and won’t have a significant impact, they are right, and it’s also a shitty thing to do. There will always be a small number of cheaters in any system.

Why? Why waste time on this? Why is reading and abiding by policy so foreign a concept to some? Continuing the analogy: don’t steal from a supermarket just because it’s easy to do and the supermarket should have enforced better security. Same exact reasoning: it’s not worth the expense if only a small number of people steal. That does not mean it’s a free-for-all now. And definitely don’t build a business strategy rooted in abuse of the existing system.

Wrong again. This is not an investment. This is a small amount of income to offset the operating costs of already online but underutilized capacity.

If it was profitable to build new servers, Storj would not need you. If you plan to spin up more servers, it’s the opposite of what this project aims at. And if your goals are not aligned with the project goals, you will drop out sooner or later, either when it stops being profitable by crossing some arbitrary threshold for reasons beyond your control, or when you become large enough for Storj to care to stop your shenanigans. This is bad for the project and a waste of time for you.

Hence, I feel that I should not need to, but I’ll repeat again:

You are such a good guy: you came here to ask and at the same time you don’t listen to anyone. Be sure to launch with at least a petabyte.

I am sure that the whole community is very happy to have such participants in its ranks.

Also make everything beautiful and move on to the topic with photos, everyone will be very interested to see a high-quality assembled unit.

With the rollercoaster we’ve been on with used-space: I’d be surprised if any setup could gain more than about 400TB/year. Even our mascot has gone backwards several hundred TB lately.

Greetings former chia farmer. I assume chia because you use the word “farming”.

Storj data takes forever to fill. It’s like the opposite of chia.

Even if you circumvent the terms of service to spoof multiple IPs, each node (aka each disk) is still going to take years to fill up, plus the payouts are held back significantly for 18 months.

There is just no reason to dedicate a lot of capacity to it because that capacity can’t be utilized effectively.

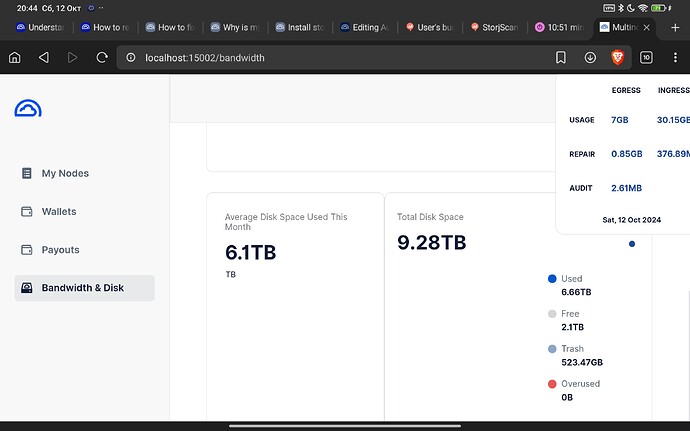

Interesting report. That guy is running multiple nodes with 2.4PB of capacity.

A cautionary tale for sure. He has been running for almost 5 years now… and today sits at just under 600TB filled. Keep him in mind before you throw 1PB at the project: expand s l o w l y as you actually fill drives.

We do not use replication, we use Reed-Solomon erasure codes, so there are no copies; each piece is unique. You do not need to hold all 80 pieces of a segment to make it unavailable. Holding 52 pieces would be enough to make the segment unavailable. Sometimes even fewer, because the repair will start when the minimum threshold is passed, so 65-28=37 pieces could be enough.
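The arithmetic above can be sketched in a few lines. This is only an illustration: the minimum of 29 pieces needed to reconstruct a segment is an assumption inferred from the quoted numbers (80 - 52 = 28 is one piece too few, and 65 - 28 = 37), and the function name `pieces_to_lose` is made up for this example.

```python
def pieces_to_lose(stored: int, minimum: int = 29) -> int:
    """Smallest number of lost pieces that leaves fewer than `minimum` available.

    `minimum` = 29 is an assumption inferred from the numbers in the post,
    not a value taken from any configuration.
    """
    if stored < minimum:
        return 0  # the segment is already unrecoverable
    return stored - minimum + 1


print(pieces_to_lose(80))  # 52
print(pieces_to_lose(65))  # 37
```

So the more pieces of one segment end up in a single location, the closer that location gets to being able to take the segment offline on its own.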

The numbers vary depending on the R-S settings used, which can now be dynamic. So, the numbers above are valid for the default schema:

Thank you for your explanation

This thread said running multiple nodes in one network is allowed

I have multiple power supplies and multiple network connections, I have 10G internal connections between all the local hosts, and I also maintain ZFS pools with backup hard disks; if there is a hard drive failure, the drive can be replaced online within 24 hours.

Actually this looks like my previous job, all about high availability.

My plan is to maintain 200-300 nodes, each about 2-4TB in size, which means all pieces of one file could possibly be stored in my location. In all normal situations I am confident I can maintain high availability with no data loss, but if there is an earthquake, flooding, or any other critical event, the service will still fail.

That’s why I’m suggesting that the platform update its strategy: allow more nodes to join, but simply don’t store all the pieces at one location, enforced at the system level.

Yes, it is. It is just not allowed to make nodes in the same physical location look like they are operated in another one (circumventing the /24 filter, which is not allowed).

Exactly. This is the main reason this filter is in place. I can believe that you have redundancy for everything, but any local disaster could still shut down your server(s).

Yes. And we are moving in that direction. We recently implemented the choice of n in the node selection.

It would prefer the nodes with a better success rate among otherwise equal nodes. So, basically, it would select nodes with greater latency less often, reducing the probability of selecting nodes in the same location to store pieces of the same segment when one or all of them circumvent the /24 filter but increase their latency by doing so.

It’s not a 100% solution, but it is better than only the /24 filter.
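A rough sketch of the “choice of n” idea described above: sample n candidates at random and keep the one with the best success rate. This is an illustration under assumptions, not the real selection code; the `success_rate` field and the function name `choice_of_n` are hypothetical.

```python
import random


def choice_of_n(nodes, n=2, rng=random):
    """Sample n candidate nodes uniformly and return the one with the best success rate.

    `nodes` is a list of dicts with a hypothetical "success_rate" key.
    """
    candidates = rng.sample(nodes, min(n, len(nodes)))
    return max(candidates, key=lambda node: node["success_rate"])


nodes = [
    {"id": "a", "success_rate": 0.95},
    {"id": "b", "success_rate": 0.80},
    {"id": "c", "success_rate": 0.40},  # e.g. extra latency from tunnelling
]
picks = [choice_of_n(nodes, n=2)["id"] for _ in range(1000)]
print({node_id: picks.count(node_id) for node_id in "abc"})
```

With n=2 the slowest node here never wins a comparison, and in general nodes with a lower success rate are picked less often without being excluded outright.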

There is a discussion of how we may improve things even further:

My plan is to maintain 200-300 nodes, each about 2-4TB in size,

In the last 30 days my best node made 1.5 TB in used space.

Here is the math:

1.5 TB / 300 = 5 GB per node per month

4 TB / 5 GB = 800 months ≈ 66.7 years until the nodes are full.

Have fun!
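The estimate above can be reproduced with a short script. It assumes, as the post does, that the whole location receives what a single well-performing node receives (1.5 TB per month) and that this is split evenly across all nodes; it also uses 1 TB = 1000 GB. The function name `years_to_fill` is made up for this example.

```python
def years_to_fill(node_size_tb, ingress_tb_per_month, node_count):
    """Estimate fill time when one location's ingress is split evenly across nodes."""
    per_node_gb = ingress_tb_per_month * 1000 / node_count  # GB per node per month
    months = node_size_tb * 1000 / per_node_gb
    return per_node_gb, months, months / 12


per_node_gb, months, years = years_to_fill(4, 1.5, 300)
print(f"{per_node_gb:.0f} GB per node per month, {months:.0f} months, {years:.1f} years")
# 5 GB per node per month, 800 months, 66.7 years
```

Changing `node_count` does not change how fast the location as a whole fills; it only changes how thinly the same ingress is spread.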