Hey everyone!

We are about to embark on perhaps the single biggest change for storage nodes in years. We are going to be rolling out the “hashstore” backend to the public network!

TL;DR: In the coming weeks, we’re going to start enabling a new storage backend for new uploads on all nodes. Following that rollout, we will then start migrating old data to the new backend as well. We think that, for the vast majority of cases, this is the right decision and will dramatically improve the overall performance of the network and your node. This is exciting and we’re excited!

This won’t be a surprise to all of you. Some of you have been following along with the 8-month-long thread ([Tech Preview] Hashstore backend for storage nodes), where many aspects of both the old “piecestore” backend and the new “hashstore” backend have been discussed. We’ve been reading that, too, and our rollout strategy has been informed by that thread. But I digress, let’s start from the top.

What problem are we even trying to solve?

When Storj stores an object, it first encrypts the object, breaks it up into many little pieces using Reed-Solomon encoding, then stores all of those little pieces on storage nodes. For the last 6 years, the way we have stored this data is… each piece is its own individual file! In our network, there are billions and billions of these pieces, and they are often smaller than a hard drive sector or block size.

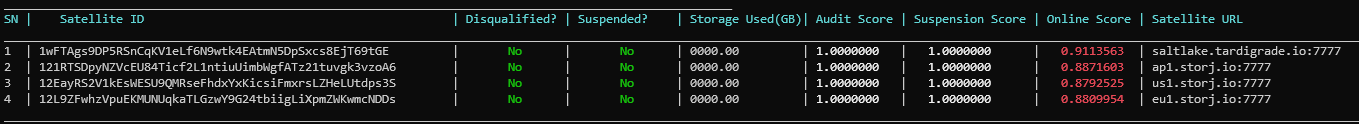

The storage node is responsible for doing a number of things - in addition to responding to GET and PUT requests, it has to keep track of how many bytes it is storing, how many pieces it is storing, and occasionally compare notes with the Satellite metadata service about which pieces it should even have and which pieces are garbage.

Storj now has a couple of different product offerings. One of our offerings, Storj Select, allows for datacenter operators with certain certifications to reach out to us and provide certified disk space of certain kinds, for customers who have regulatory requirements that the default global network doesn’t satisfy. In the case of Storj Select, Storj engineers currently directly help manage many of these nodes. We often run these configurations much closer to full capacity than the global network seems to get, and so resource use optimization is especially valuable.

When we looked closely at these storage nodes, we discovered that: (a) they were using 100% of their CPU and not 100% of their disk IOPS, and (b) the CPU use was operating system inode management. We were not getting the most throughput possible out of our disks! Bummer!

Truthfully, I’m shocked that the file-per-piece strategy even lasted 6 years. The fact that it has is a huge testament to how well the storage node community has found tricks and solutions to make it last (ZFS pools, etc). Good work everyone!

How do other providers do it?

As you know, Storj is attempting to dethrone Amazon S3 as the best object storage platform. But, S3 has a really big head start, so it’s worth at least reviewing what their current approach looks like.

That approach looks like ShardStore. ShardStore keeps object “shard” data concatenated directly in large contiguous ranges called “extents”, and then keeps track of the metadata (e.g. which “extent”, which offset a “shard” has) in a log-structured merge tree.

The benefit of this approach is that alignment overhead is kept to a minimum (shards don’t have to be padded to a multiple of a disk sector), and there no longer need to be any random writes: all writes are sequential. Every update in this model appends to an existing file and rarely has to seek around and update something elsewhere.
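To make the alignment point concrete, here is a rough back-of-the-envelope sketch. The 4096-byte block size and the piece sizes are made-up illustrative values, not measurements from S3 or Storj, and the sketch ignores per-file metadata such as inodes.

```python
def padded_size(size: int, block: int = 4096) -> int:
    """Round size up to the next multiple of the block size."""
    return -(-size // block) * block

# Hypothetical piece sizes in bytes, chosen only for illustration.
pieces = [1200, 300, 5000, 64]

# One file per piece: every piece is padded to a whole number of blocks.
per_file = sum(padded_size(s) for s in pieces)

# Concatenated: only the end of the combined range needs padding.
concatenated = padded_size(sum(pieces))

print(per_file, concatenated)
```

With these example numbers the per-file layout occupies 20480 bytes on disk, while the concatenated layout occupies 8192 bytes for the same 6564 bytes of data.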

Even though SSDs and NVMe reduce the performance penalty of random writes, there is still a penalty relative to sequential writes. But perhaps more importantly, there are still a lot of spinning metal HDDs in S3 (and in Storj!), where random writes have a huge penalty relative to sequential writes. Reducing or eliminating random writes in favor of sequential writes is an extremely consequential design goal.

What is the hashstore?

Hashstore also has the goal of improving alignment overhead and minimizing random writes. We noticed after designing hashstore that, I suppose due to convergent evolution given the same set of real-world constraints, hashstore and ShardStore have a lot in common.

Hashstore stores all of its pieces concatenated in contiguous large files called “logs”. For a write-heavy workload, this all but eliminates random writes and operating system filesystem bookkeeping. This change alone moved our workload from CPU-limited to disk IOPS-limited.

But once you do this, you do need to keep track of where the pieces are, and thus you need to store some metadata. We observed that Storj pieces have cryptographically random IDs, so keys are evenly distributed in the keyspace and do not collide. We decided to use a hash table to store the metadata indicating which log each piece belongs to and its offset within that log.
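As a minimal sketch of the idea (not the actual hashstore code), the following toy store appends every piece to a single log and keeps a hash table from piece ID to offset and length. The class name `LogStore` and its methods are hypothetical, and an in-memory buffer stands in for the on-disk log file.

```python
import io
import os

class LogStore:
    """Toy append-only log plus a hash table: piece_id -> (offset, length)."""

    def __init__(self):
        self.log = io.BytesIO()  # stands in for one large on-disk log file
        self.index = {}          # the metadata hash table

    def put(self, piece_id: bytes, data: bytes) -> None:
        offset = self.log.seek(0, io.SEEK_END)  # writes only ever append
        self.log.write(data)
        self.index[piece_id] = (offset, len(data))

    def get(self, piece_id: bytes) -> bytes:
        offset, length = self.index[piece_id]
        self.log.seek(offset)
        return self.log.read(length)

store = LogStore()
ids = [os.urandom(32) for _ in range(3)]  # random IDs, like piece IDs
for i, pid in enumerate(ids):
    store.put(pid, b"piece-%d" % i)
```

Reading a piece back is one hash table lookup followed by one read at a known offset, with no filesystem directory walk involved.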

Hashstore actually supports two forms of hash table data structures - the “fast one”, called “memtbl”, where the hash table is stored in memory and changes to it are written to another log file, or the “still-fast-but-uses-much-less-RAM” one, called “hashtbl”, where the hashtable itself is mapped to disk via mmap.

The “hashtbl” mmap approach is straightforward, but does result in a small amount of random writes during updates. The hash table itself is extremely small and compact, storing only 64 bytes per entry. Reads use the hash table data structure on disk. This system is fast and efficient, and the hashtable usually resides entirely in the operating system’s disk cache. The “hashtbl” approach is the default approach as it doesn’t use much RAM. It is ideal when you can place the hash table on an SSD, even if all of the logs are on HDD. It’s still quite good on just HDD, certainly better in all of our testing than the old piecestore on Ext4 filesystems.
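Here is a rough sketch of what a memory-mapped table with fixed 64-byte entries could look like. Only the 64-byte entry size and the use of mmap come from the description above. The entry layout, the slot count, and the linear probing scheme are assumptions made for illustration, and an anonymous mapping stands in for a mapped file.

```python
import mmap
import struct

# Hypothetical layout: 32-byte piece id, log id, offset, length, 16 pad bytes.
ENTRY = struct.Struct("<32sIQI16x")  # 64 bytes in total
SLOTS = 1024
EMPTY = bytes(32)

# Anonymous mapping for the sketch; a real table would map a file on disk.
table = mmap.mmap(-1, SLOTS * ENTRY.size)

def _slot(piece_id: bytes, probe: int) -> int:
    # IDs are already random, so their leading bytes can serve as the hash.
    return (int.from_bytes(piece_id[:8], "big") + probe) % SLOTS

def put(piece_id: bytes, log_id: int, offset: int, length: int) -> None:
    for probe in range(SLOTS):
        pos = _slot(piece_id, probe) * ENTRY.size
        key = table[pos:pos + 32]
        if key == EMPTY or key == piece_id:
            ENTRY.pack_into(table, pos, piece_id, log_id, offset, length)
            return
    raise RuntimeError("table full")

def get(piece_id: bytes):
    for probe in range(SLOTS):
        pos = _slot(piece_id, probe) * ENTRY.size
        key, log_id, offset, length = ENTRY.unpack_from(table, pos)
        if key == piece_id:
            return log_id, offset, length
        if key == EMPTY:
            return None  # reached an empty slot: the piece is not present
    return None
```

Each update touches a single 64-byte slot, which is the small random write mentioned above.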

The “memtbl” approach takes an additional step of eliminating this last random write, at the cost of explicitly storing an even smaller amount of metadata entirely in RAM. The “memtbl” doesn’t even store the full piece id to reduce RAM-based hash table entry size further. This approach requires an additional on-disk data structure that is an append-only event log, but all writes to it are sequential. This approach is better if you have more RAM, and does not need an SSD for the best performance.
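The append-only event log idea can be sketched as follows. The record layout and the function names are hypothetical, and this sketch keeps the full piece ID as the key for simplicity, unlike the real “memtbl”. The point is only that every change is a sequential append, and the in-memory table can be reconstructed by replaying the log from the start.

```python
import io
import struct

# Hypothetical record: 32-byte piece id, log id, offset, length, operation.
RECORD = struct.Struct("<32sIQIB")
OP_PUT, OP_DELETE = 1, 2

def append_event(event_log, op, piece_id, log_id=0, offset=0, length=0):
    """Record a change by appending to the end of the event log."""
    event_log.seek(0, io.SEEK_END)
    event_log.write(RECORD.pack(piece_id, log_id, offset, length, op))

def replay(event_log):
    """Rebuild the in-memory table by reading the event log front to back."""
    table = {}
    event_log.seek(0)
    while True:
        raw = event_log.read(RECORD.size)
        if len(raw) < RECORD.size:
            break  # end of log, or a torn final record that is ignored
        piece_id, log_id, offset, length, op = RECORD.unpack(raw)
        if op == OP_PUT:
            table[piece_id] = (log_id, offset, length)
        elif op == OP_DELETE:
            table.pop(piece_id, None)
    return table

events = io.BytesIO()  # stands in for the on-disk event log
first, second = bytes([1]) * 32, bytes([2]) * 32
append_event(events, OP_PUT, first, 1, 0, 10)
append_event(events, OP_PUT, second, 1, 10, 20)
append_event(events, OP_DELETE, first)
table = replay(events)
```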

The practical upshot of all of this is that our Storj Select storage nodes, which have all been on one or the other form of hashstore for the better part of a year now, practically absorb all load thrown at them.

Wait, really? What about deleted files?

There are two primary data-removal mechanisms in our system: TTL-based storage (Time-To-Live, storage with an expiration date), and garbage collection, where data that is no longer needed gets cleaned up.

TTL-based storage is actually surprisingly straightforward on hashstore. When a piece comes in with an expiration date, hashstore chooses a log file where all data in that log file expires on the same day. This means that cleanup of TTL-based data is fast and efficient; only these few log files need to be removed once the time elapses!
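A small sketch of that grouping, with hypothetical names and a list standing in for each log file: pieces are bucketed by the UTC day they expire, so cleanup drops whole buckets rather than hunting for individual pieces. How the real hashstore picks day boundaries is not stated here, so UTC is an assumption.

```python
from collections import defaultdict
from datetime import date, datetime, timezone

class TTLLogs:
    """Toy model: one log per expiration day."""

    def __init__(self):
        self.logs = defaultdict(list)  # expiry day -> pieces in that day's log

    def put(self, piece_id: bytes, expires_at: datetime) -> None:
        day = expires_at.astimezone(timezone.utc).date()
        self.logs[day].append(piece_id)

    def expire(self, today: date) -> int:
        """Drop every log whose day has fully passed; return pieces removed."""
        expired_days = [d for d in self.logs if d < today]
        return sum(len(self.logs.pop(d)) for d in expired_days)

ttl = TTLLogs()
ttl.put(b"a", datetime(2025, 1, 1, 9, tzinfo=timezone.utc))
ttl.put(b"b", datetime(2025, 1, 1, 23, tzinfo=timezone.utc))
ttl.put(b"c", datetime(2025, 1, 3, 12, tzinfo=timezone.utc))
removed = ttl.expire(date(2025, 1, 2))
```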

Garbage collection is a separate process, where the Satellite metadata service sends out what’s called a “bloom filter”, which tells the storage node what it absolutely must keep. The storage node is then responsible for looping through all of the pieces it has and comparing them against the bloom filter, removing anything it doesn’t need to keep. This is an extremely time consuming process on the old piecestore backend, as it has to walk the filesystem.

On hashstore, this step is extremely fast! All that needs to happen is the hash table data structure is flipped through once (no trawling through inodes in the filesystem, all of the metadata fits in a couple of pages of RAM) and a new hash table is generated with the unneeded data removed.
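To illustrate the single pass, here is a sketch with a toy bloom filter. The `Bloom` class, its parameters, and `collect_garbage` are hypothetical and are not the Satellite's actual filter format. A bloom filter can report false positives but never false negatives, so some garbage may survive a pass, while nothing that must be kept is ever dropped.

```python
import hashlib

class Bloom:
    """Tiny bloom filter used only for this illustration."""

    def __init__(self, size_bits: int = 1024, hashes: int = 3):
        self.size = size_bits
        self.hashes = hashes
        self.bits = bytearray(size_bits // 8)

    def _positions(self, key: bytes):
        for i in range(self.hashes):
            digest = hashlib.sha256(bytes([i]) + key).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, key: bytes) -> None:
        for p in self._positions(key):
            self.bits[p // 8] |= 1 << (p % 8)

    def __contains__(self, key: bytes) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8))
                   for p in self._positions(key))

def collect_garbage(table: dict, bloom: Bloom) -> dict:
    """One pass over the metadata: keep only entries the filter matches."""
    return {pid: loc for pid, loc in table.items() if pid in bloom}

table = {bytes([i]) * 32: ("log-1", i * 100) for i in range(5)}
keep = list(table)[:3]
bloom = Bloom()
for pid in keep:
    bloom.add(pid)
new_table = collect_garbage(table, bloom)
```

Note that only the hash table is touched in this pass. The log files themselves are untouched until compaction decides to rewrite them.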

Because the hash table points to log files and knows what size the log files are, the hashstore can very quickly estimate what percentage of data is “dead” in each remaining log file. Dead data happens when a piece is deleted from the hash table; it is not immediately deleted from the log file that stores it. If the fraction of data still alive in a log file falls below a configurable percentage, hashstore rewrites the log file without the dead data, with a configurable probability. Note that these values are tunable so you can choose the right tradeoffs for your system. This operation also happens to be entirely sequential, which means the throughput of the disk can be orders of magnitude higher than with random I/O.
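The rewrite decision can be sketched roughly like this. The function names, the parameter names, and the default values are hypothetical and are not the real hashstore configuration keys or defaults.

```python
import random
from collections import defaultdict

def live_bytes_per_log(table: dict) -> dict:
    """Sum the lengths of live pieces per log, using only the hash table."""
    live = defaultdict(int)
    for log_id, _offset, length in table.values():
        live[log_id] += length
    return dict(live)

def should_rewrite(log_size: int, live_bytes: int,
                   alive_threshold: float = 0.25,
                   rewrite_probability: float = 1.0,
                   rng=random.random) -> bool:
    """Decide whether a log should be rewritten without its dead data."""
    if log_size == 0:
        return False  # nothing to rewrite
    alive = live_bytes / log_size
    if alive >= alive_threshold:
        return False  # still mostly live data; leave the log alone
    return rng() < rewrite_probability

# piece id -> (log id, offset, length); values invented for the example
table = {b"a": (1, 0, 100), b"b": (1, 100, 50), b"c": (2, 0, 10)}
live = live_bytes_per_log(table)
```

The probability knob spreads rewrites out over time: with a value below 1.0, a log that qualifies is only rewritten on some compaction passes rather than on every one.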

All of these steps together are called “compaction”, and compaction is a process that typically takes less than a few minutes total, even on extremely full and extremely active nodes. Believe us, we are running this on fleets of nodes ingesting 125k pieces/second!

How stable is this?

Very stable. Hashstore has been running in production for the better part of a year, and already handles more traffic than piecestore, across all product lines.

But more importantly, hashstore has been designed with stability and durability in mind. A problem with Amazon’s ShardStore, or really any log-structured merge-tree based design, is that a bit flip or small corruption in just the right place could lead to cascading effects, where whole key ranges are lost. One of the reasons we used a hash table design for metadata instead is that even if an entry is corrupted, the rest of the entries will continue to work just fine, both before and after the corrupted entry. Hashstore is aggressively checksummed and is designed from the beginning to fail gracefully and keep everything else working even if corruption is detected.

Perhaps you’re worried about the loss of the hashtable itself. The log files contain enough information to entirely rebuild the hashtable. We’ve never had to use it, but we do have a tool for you that will rebuild the hashtable from log files.
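To show why a rebuild is possible in principle, here is a sketch in which each record in a log carries its own piece ID and length. This record format is an assumption made for illustration. It is not the real hashstore log format, and this is not the actual rebuild tool.

```python
import io
import struct

# Hypothetical per-record header: 32-byte piece id followed by data length.
HEADER = struct.Struct("<32sI")

def append_piece(log, piece_id: bytes, data: bytes) -> None:
    log.seek(0, io.SEEK_END)
    log.write(HEADER.pack(piece_id, len(data)))
    log.write(data)

def rebuild_index(log) -> dict:
    """Scan the log once, front to back, and recover piece_id -> (offset, length)."""
    index = {}
    log.seek(0)
    while True:
        start = log.tell()
        header = log.read(HEADER.size)
        if len(header) < HEADER.size:
            break  # reached the end of the log
        piece_id, length = HEADER.unpack(header)
        index[piece_id] = (start + HEADER.size, length)
        log.seek(length, io.SEEK_CUR)  # skip over the piece data
    return index

log = io.BytesIO()  # stands in for an on-disk log file
a, b = bytes([1]) * 32, bytes([2]) * 32
append_piece(log, a, b"hello")
append_piece(log, b, b"abc")
index = rebuild_index(log)
```

The scan is a single sequential read of each log, which fits the same access pattern as the rest of the design.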

But my current node setup is already optimal!

It is truly impressive the work some of our storage node operators have gone through to make our one-file-per-piece system last as long as it has. Some node operators have switched everything to ZFS pools, so ZFS can essentially make the same or similar optimizations we did (focusing on sequential writes, keeping metadata together in RAM, and so forth). This is impressive!

Over 6 years, a smart and savvy userbase has definitely learned the ropes on our old storage format, and some of you have gotten quite good at operating it. No question! But not all storage nodes benefit from these optimizations, and we’re excited to bring them to everyone. We’re excited to see you all learn the ropes on this new system, and help us improve and optimize it!

Hashstore is already our only storage backend for thousands of nodes we operate, and over time we intend to migrate all nodes to hashstore and eventually delete the piecestore code. However, you can buy yourself some time with the --storage2migration.suppress-central-migration=true flag, or the STORJ_STORAGE2MIGRATION_SUPPRESS_CENTRAL_MIGRATION=true environment variable. Either setting will tell your node to ignore central migration rollout commands, though these settings will not be respected indefinitely.

Note that in our experience, in the vast majority of cases, piecestore is much, much slower than either hashstore configuration. A risk to a node that remains on piecestore is that it will start to lose races relative to similar nodes on hashstore.

So what’s next?

As of the v1.135.x storage node branch, the storage node knows how to concurrently run both piecestore and hashstore. It can be toggled to start using hashstore by individual Satellite metadata servers for certain circumstances.

We will be starting by flipping the switch to hashstore for new uploads only on growing numbers of nodes in the network, and will be monitoring performance metrics and your feedback here on the forum. We will be doing this by Satellite, too, so we can watch the effects with different use patterns and limit the impact. Then after that, we will start another similar process to begin migrating already uploaded data.

We’re excited about this new, much more performant future, and are excited to see the aggregate benefits on the network. We’re especially excited to see the experience and wisdom of our SNO community lend their hand to optimizing this new system! Let us know what you find!