You and I definitely love our long posts. But it’s good to share a lot of information. So, here’s another long post.

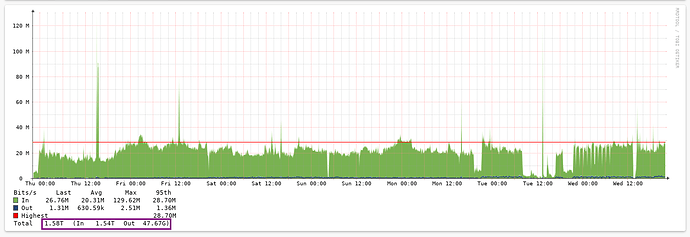

In terms of Node profitability, you are right that the actual amount of customer data on the network and the type of use case (low vs. high bandwidth, long-term vs. short-term storage) have a huge impact on what Storage Nodes earn.

In terms of expectation management, the estimator assumes a mature level of network utilization. Any calculator we publish has to assume some reference level of usage on the network. The assumptions baked into the current estimator are based on a future state where most Storage Nodes across the network are getting about 50% utilization. We chose that as the baseline (as opposed to the early network state) because we are looking for Nodes that will join and help grow the network for the long term. That also aligns the interests of Storj, SNOs and customers.
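To make the effect of that utilization assumption concrete, here is a rough sketch of how an estimate might scale with utilization. This is not the actual estimator's formula, which isn't spelled out here; the function name, the `egress_ratio` parameter, and every rate in the example are hypothetical placeholders, not Storj payout rates.

```python
def estimate_monthly_earnings(capacity_tb, utilization,
                              storage_rate_per_tb, egress_ratio,
                              egress_rate_per_tb):
    """Rough monthly estimate under a given utilization assumption.

    All parameters are illustrative assumptions:
    - utilization: fraction of the node's capacity holding data (0.0 to 1.0)
    - egress_ratio: fraction of stored data downloaded per month
    - *_rate_per_tb: payout per TB, in whatever unit you choose
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    stored_tb = capacity_tb * utilization
    egress_tb = stored_tb * egress_ratio
    return stored_tb * storage_rate_per_tb + egress_tb * egress_rate_per_tb


# Same hypothetical node, two utilization assumptions (made-up rates).
mature = estimate_monthly_earnings(8, 0.50, 1.0, 0.25, 10.0)
early = estimate_monthly_earnings(8, 0.05, 1.0, 0.25, 10.0)
print(f"mature network: {mature:.2f}, early network: {early:.2f}")
```

With everything else held constant, the estimate in this sketch scales linearly with utilization, which is why a calculator built around a 50% baseline will overstate what a node sees in the early network.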

We just launched the production service on March 19. It will take time to acquire new customers, onboard developers, build out the partner ecosystem, and bring large amounts of data onto the network. Any new storage platform faces the same set of challenges, but we have the added challenge that the majority of our infrastructure is operated by people outside the company.

We use tactics like surge pricing and test data to bridge the gap between launching the service and the point where the amount of customer data stored and bandwidth used creates a balanced network, one where it’s profitable to be a Storage Node without any subsidy from Storj.

We’re not there yet, and we don’t expect to be for some time. The amount of STORJ a SNO can reasonably expect to earn a month into general availability is very different from what they can expect once thousands of people are storing PB or even EB of data, and especially once the platform supports a wider range of use cases with higher bandwidth utilization.

What are we doing to get there? Our strategy is fairly straightforward.

- We launched the service with a specific set of launch qualification gates, and based on our performance, durability, availability and other metrics, the current network supports a range of initial use cases.

- The use cases we’re pursuing with developers, partners and customers are based on what the network can successfully handle and the types of use cases those early adopters are willing to try on the network.

- Our roadmap is geared toward differentiating our service within existing use cases and expanding into adjacent use cases where there is customer demand.

- In addition to product work, we are actively building our partner network and continuing our marketing and PR efforts to grow our customer base.

What can you do to help? Obviously, by providing reliable storage and bandwidth to the network you make the network possible. We’re in this for the long haul and we need you with us. If you have the opportunity to help drive usage and adoption of the platform - even better. Whether you recommend us to a partner or for a project, blog, tweet or post about the platform, everything helps to grow the network.