Alright. Can you help me with the other issue, the wrong “total disk space” shown in the MND? I removed the Storj node from the MND and added it again with a newly generated API key, but the issue persists. I don’t know where it is coming from; I have this problem on 2 nodes, both showing a smaller “total disk space” in the MND than in the “original” dashboard.

Please show an example.

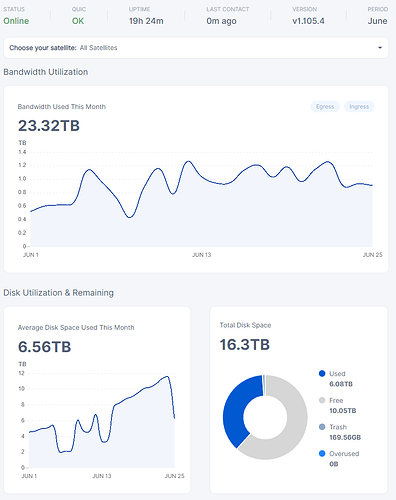

This one, for example. It’s a 16.3TB drive, shown correctly in the “original” dashboard but shown as an 11.54TB drive in the MND. Same with a 10.8TB (12TB) drive, which is shown as 5.2TB in the MND.

Ah, I see. That’s the difference in what is provided via the API: the MND uses the node’s API.

That’s the amount the node has detected as available, based on the data in its databases and the physically available space on the disk. It’s adjusted so that the node does not try to go beyond those boundaries.

You may search your logs for the message “less than requested”; unfortunately, this seems to be checked only on restart.
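As a rough illustration, a small Python helper can pull the safe amount out of those warnings. It assumes the log line looks like the one quoted later in this thread (the message followed by a JSON object with a `"bytes"` field) and that you can read the log as a text file; the function name is made up for this example.

```python
import json
import re

# Matches the warning quoted in this thread, for example:
#   piecestore:monitor Disk space is less than requested. Allocated space is {"bytes": 8189413012499}
# Assumption: the structured fields follow the message as one JSON object with
# straight quotes (forum posts may display curly quotes instead).
LESS_THAN_REQUESTED = re.compile(r"less than requested\. Allocated space is\s*(\{.*\})")


def find_safe_allocations(lines):
    """Return the "bytes" value of every "less than requested" warning, in order."""
    values = []
    for line in lines:
        match = LESS_THAN_REQUESTED.search(line)
        if match:
            fields = json.loads(match.group(1))  # may contain other fields too
            values.append(fields["bytes"])
    return values
```

Feed it the lines of your node’s log file (on Docker, save the output of `docker logs` to a file first). Divide the result by 10**12 for decimal TB: 8189413012499 bytes is about 8.19 TB.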

Help me, my node is shrinking again lol. How can I fix this issue? I restarted the MND and the node itself; the drive really is 18TB (16.3TB), the partition fills the whole drive, and I created a new API key and re-added the node to the MND. I also upgraded one of my 6TB nodes to 16TB and wondered why my MND shows almost the same overall size, even though that’s around 10TB more than before. It seems my 18TB node has shrunk again according to the MND.

There is nothing to help with here. The node detects the available space automatically and selects the minimum of the allocated space and what it believes is available (used + free).

So, as soon as you fix the used-space discrepancy on your nodes, this will resolve itself.

Today I upgraded my 12TB drive to 16TB. According to the system, the drive was filled to the brim (100%), but when I cloned it with gparted (I know that’s not recommended due to the downtime), it showed only around 30% full. The SND showed about 10TB (as reported by the satellite). Why is there such a discrepancy between my Debian system and gparted? Given the small file sizes on Storj, how many inodes are recommended? Did I not have enough of them?

I don’t know about discrepancies between GParted and the OS.

I only know why there could be a discrepancy between the OS and the dashboard: databases that are not being updated. So you need to fix the issues that could prevent the databases from being updated, such as failed filewalkers or locked/corrupted databases.
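A minimal sketch for checking whether these problems show up in your logs. It only counts lines containing a few substrings: two groups are copied from errors quoted in this thread, while `database disk image is malformed` is assumed to be how SQLite corruption appears in the log. The names `SYMPTOMS` and `count_symptoms` are hypothetical.

```python
from collections import Counter

# Log substrings for each symptom. The filewalker and lock strings come from
# error lines quoted in this thread; the corruption string is SQLite's standard
# message and is an assumption about what the node logs verbatim.
SYMPTOMS = {
    "failed filewalker": ("failed to lazywalk", "used-space-filewalker failed"),
    "locked database": ("database is locked",),
    "corrupted database": ("database disk image is malformed",),
}


def count_symptoms(lines):
    """Count how many log lines match each symptom (a line counts once per symptom)."""
    counts = Counter()
    for line in lines:
        for symptom, needles in SYMPTOMS.items():
            if any(needle in line for needle in needles):
                counts[symptom] += 1
    return counts
```

A non-zero count for any symptom points at one of the causes above; the substrings are easy to extend if your logs phrase things differently.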

Please do not modify the default ext4 settings if you are not familiar with how that would affect your setup. The defaults work pretty well.
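Before touching the ext4 settings, it may help to check whether inodes are actually running out. This sketch reads the same counters as `df -i` via Python’s `os.statvfs` (Unix only); the mount path you pass in is up to you, and the function name is made up for this example.

```python
import os


def inode_usage(path):
    """Return (used, total, fraction_used) inodes for the filesystem containing path.

    Uses os.statvfs (Unix only). Some filesystems, such as btrfs, allocate
    inodes dynamically and report f_files == 0; the fraction is then 0.0.
    """
    st = os.statvfs(path)
    total = st.f_files          # total inodes on the filesystem
    used = total - st.f_ffree   # inodes currently in use
    fraction = used / total if total else 0.0
    return used, total, fraction
```

For example, `inode_usage("/mnt/storj")` (a placeholder path); if the fraction is nowhere near 1.0, inodes are not what is limiting the node.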

This weekend I upgraded my 6TB nodes to 16TB. I’m using docker compose and entered 16.1TB by mistake because I had the 18TB drives in mind. When checking the SND, I saw that it reported a disk size of about 14.95TB, so I know what you mean: when you enter too big a number, it reports the maximum the drive can handle. So I adjusted it to around 14.4TB.

With this in mind, I cannot explain why the 18TB node gets reported smaller and smaller, since it is a Toshiba enterprise CMR drive. I don’t know how “high end” the drives need to be. It is now around 6TB instead of the original 16.3TB. The satellites report around 13.33TB, though (the DB seems stuck at around 6.01TB, right-side chart). Maybe it gets smaller and smaller because the DB says “6TB” while the drive fills up, so the free space shrinks and the node gets “smaller” in the MND?

When cloning with gparted, the program runs an optimization process regarding tree size etc. This time it also corrected wrongly counted inodes, so the “real used inodes” and “recorded inodes” were off a bit. I don’t know why.

The API reports the minimum of the allocated space, (used + free in the allocation) and (used + free on the disk).

So, if the “used” is incorrect, the resulting allocated will be incorrect too.
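To make the rule above concrete, here is a minimal sketch in Python. It is not Storj’s actual code, just the three-way minimum as described in this reply, with made-up byte values showing how a stale “used” value in the databases makes the reported size shrink as the disk fills.

```python
TB = 10**12  # decimal terabyte, only to keep the example numbers readable


def effective_allocation(allocated, used, free_on_disk):
    """Smallest of: the allocation, used + free inside the allocation,
    and used + free on the disk (all in bytes).

    `used` is whatever the node's databases say, so a stale value
    directly lowers the result.
    """
    free_in_allocation = max(0, allocated - used)
    return min(allocated, used + free_in_allocation, used + free_on_disk)


# Illustrative numbers only (not taken from the nodes in this thread):
# 15 TB allocated, 13 TB really stored, 3 TB still free on the disk.
print(effective_allocation(15 * TB, 13 * TB, 3 * TB) / TB)  # 15.0
# Same disk, but the database still believes only 6 TB is used.
print(effective_allocation(15 * TB, 6 * TB, 3 * TB) / TB)   # 9.0
# More data arrives (1 TB free left) while the database stays stuck at 6 TB.
print(effective_allocation(15 * TB, 6 * TB, 1 * TB) / TB)   # 7.0
```

Once the used-space figure in the databases matches what is really on the disk, the last term climbs back and the allocation is limited only by what you configured.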

The SND still shows what you have specified as allocated on the pie chart, but the MND uses the allocated value provided by the node’s API. You should also have a “less than requested” line in the logs with the safe amount to allocate.

So, once you fix the problem with the databases not being updated, both dashboards should show the same on the pie chart.

So, with reference to my post in the other thread, I have the same error:

```
piecestore:monitor Disk space is less than requested. Allocated space is {"bytes": 8189413012499}
```

and a bunch of others:

```
2024-07-03T12:55:16+12:00 ERROR pieces failed to lazywalk space used by satellite {"error": "lazyfilewalker: context canceled", "errorVerbose": "lazyfilewalker: context canceled\n\tstorj.io/storj/storagenode/pieces/lazyfilewalker.(*process).run:73\n\tstorj.io/storj/storagenode/pieces/lazyfilewalker.(*Supervisor).WalkAndComputeSpaceUsedBySatellite:130\n\tstorj.io/storj/storagenode/pieces.(*Store).SpaceUsedTotalAndBySatellite:707\n\tstorj.io/storj/storagenode/pieces.(*CacheService).Run:58\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:51\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:78", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}
2024-07-03T12:55:16+12:00 ERROR lazyfilewalker.used-space-filewalker failed to start subprocess {"satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "error": "context canceled"}
```

and also a bunch of “database is locked” errors:

```
2024-07-03T15:56:33+12:00 ERROR orders failed to add bandwidth usage {"satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "action": "GET_AUDIT", "amount": 10240, "error": "bandwidthdb: database is locked", "errorVerbose": "bandwidthdb: database is locked\n\tstorj.io/storj/storagenode/storagenodedb.(*bandwidthDB).Add:76\n\tstorj.io/storj/storagenode/orders.(*Service).SendOrders.func2:254\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:78"}
```

How do I proceed to “fix the problem with not updated databases” and rectify this issue? Is this a “move the DBs to an SSD” kind of issue?


Adding to this, the node in question does seem to have an old version of the config.yaml. Items I can see on my other nodes that are missing here:

```yaml
# directory to store databases. if empty, uses data path
storage2.database-dir: C:\STORJ_DB_CACHE\

# size of the piece delete queue
# storage2.delete-queue-size: 10000

# how many piece delete workers
# storage2.delete-workers: 1

# how many workers to use to check if satellite pieces exists
# storage2.exists-check-workers: 5
```

as well as the “total allocated bandwidth in bytes” setting still having a value instead of being marked as (deprecated).

Is it OK to just copy the config from another node and update the values? It seems odd that it hasn’t been updated.

Unfortunately, that means the used-space-filewalker has failed and will not be restarted.

That can be solved either by optimizing the filesystem or by disabling the lazy mode and restarting the node.
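For the second option, a minimal sketch that rewrites `config.yaml` text so the lazy mode is off. It assumes the option is called `pieces.enable-lazy-filewalker` (it is not named in this thread, so verify it against the `setup --help` output before relying on it) and that settings are simple one-line `key: value` entries. The function name is hypothetical.

```python
import re

# Assumed option name for the lazy (low-priority subprocess) filewalker.
OPTION = "pieces.enable-lazy-filewalker"
# Matches the option whether it is active or commented out, e.g.
#   "# pieces.enable-lazy-filewalker: true" or "pieces.enable-lazy-filewalker: true"
OPTION_LINE = re.compile(r"\s*#?\s*" + re.escape(OPTION) + r"\s*:")


def disable_lazy_filewalker(config_text):
    """Return config text with the lazy filewalker turned off.

    The first line setting the option (commented or not) becomes an active
    "false" setting; further occurrences are dropped so the key is never
    duplicated. If the option is missing, it is appended at the end.
    """
    out, replaced = [], False
    for line in config_text.splitlines():
        if OPTION_LINE.match(line):
            if not replaced:
                out.append(f"{OPTION}: false")
                replaced = True
            continue  # skip any further copies of the same key
        out.append(line)
    if not replaced:
        out.append(f"{OPTION}: false")
    return "\n".join(out) + "\n"
```

Stop the node, keep a copy of the original `config.yaml`, write the returned text back, and start the node again.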

The “database is locked” errors can be solved either by optimizing the filesystem and enabling a write cache on the disk (warning: you need backup power for your setup so it can shut down gracefully in case of a power cut) or by moving the databases to a less loaded disk/SSD.
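If you go the SSD route, the copy step might look like the sketch below. It assumes the node is stopped (so any SQLite `-wal`/`-shm` side files should already have been folded back in), that the `*.db` files sit directly in `storage_dir`, and that you afterwards point `storage2.database-dir` at `database_dir`; on Docker that folder must also be mounted into the container. The function name is hypothetical, and the files are copied rather than moved so the originals remain as a fallback.

```python
import shutil
from pathlib import Path


def copy_databases(storage_dir, database_dir):
    """Copy every *.db file from storage_dir into database_dir.

    Run only while the node is stopped. Creates database_dir if needed
    and returns the names of the copied files in sorted order.
    """
    src, dst = Path(storage_dir), Path(database_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for db in sorted(src.glob("*.db")):
        shutil.copy2(db, dst / db.name)  # copy2 also preserves timestamps
        copied.append(db.name)
    return copied
```

After setting `storage2.database-dir` and starting the node, check the logs for database errors before deleting the old copies.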

This is OK. You can see all available settings and their default values by running this command (PowerShell):

```powershell
& "$env:ProgramFiles\Storj\Storage Node\storagenode.exe" setup --help
```

This one in particular shows that you moved the databases of this node to your system disk (I guess an SSD).

No, you only need to change the options your setup requires. If you copy-paste values from the other node, it could crash the current one because of duplicated keys, wrong paths, etc. I wouldn’t recommend doing so.
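Instead of copying the file, you can compare the two configs and carry over only what you actually need. A minimal sketch, assuming one-line `key: value` settings as in the snippets above (it is not a full YAML parser): `diff_settings` lists active settings that differ, and `duplicate_keys` flags keys that appear more than once, one of the crash causes mentioned above. All names here are hypothetical.

```python
from collections import Counter


def _active_pairs(config_text):
    """Return (key, value) for every uncommented `key: value` line.

    Only the first colon separates key from value, so values containing
    colons, such as Windows drive letters, stay intact.
    """
    pairs = []
    for line in config_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#") or ":" not in stripped:
            continue
        key, _, value = stripped.partition(":")
        pairs.append((key.strip(), value.strip()))
    return pairs


def duplicate_keys(config_text):
    """Sorted list of active keys that are set more than once."""
    counts = Counter(key for key, _ in _active_pairs(config_text))
    return sorted(key for key, n in counts.items() if n > 1)


def diff_settings(old_text, new_text):
    """Map each differing active key to (old_value, new_value); None if absent."""
    # If a key repeats, the last value wins here; use duplicate_keys() to spot that.
    old = dict(_active_pairs(old_text))
    new = dict(_active_pairs(new_text))
    return {
        key: (old.get(key), new.get(key))
        for key in sorted(old.keys() | new.keys())
        if old.get(key) != new.get(key)
    }
```

For example, `diff_settings(open("nodeA/config.yaml").read(), open("nodeB/config.yaml").read())`, where both paths are placeholders; entries such as identity paths are expected to differ and should not be copied across.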