yes i have logs at least previous and current.

it looks like I jumped to conclusions. Pieces really was uploaded to my node

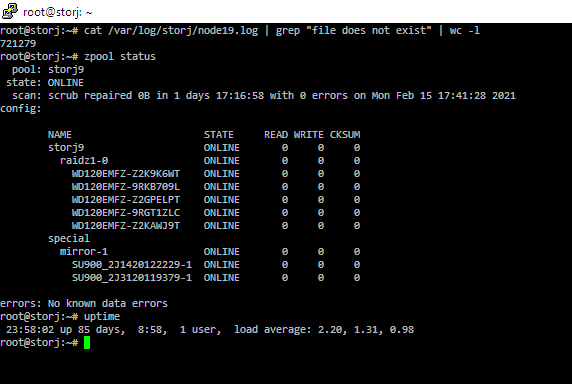

greps

root@nas:/mnt/log/storj/v3/20210903/storj# tail node19.log

2021-09-03T12:56:58.587+0300 INFO piecedeleter delete piece sent to trash {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "2VWSZNCZHLRSBY3VVDVVPU6XG2A7RTESJNAQJQ3Z26Q3BJBCRQNA"}

2021-09-03T12:56:58.736+0300 INFO piecestore upload started {"Piece ID": "QMZBADDCZRI5XKCAALBYDLOEUCHH6OBCIZ4LJMIIUVWCBUNTCBGA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Available Space": 1250275885056}

2021-09-03T12:56:58.836+0300 INFO piecestore uploaded {"Piece ID": "QMZBADDCZRI5XKCAALBYDLOEUCHH6OBCIZ4LJMIIUVWCBUNTCBGA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Size": 1280}

2021-09-03T12:56:58.934+0300 INFO piecestore uploaded {"Piece ID": "IJGA2JI55HTABZL3YDJILJAZVK4M6QYCQFHRL6PNJHIEPKQSKETA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "Size": 181504}

2021-09-03T12:56:59.027+0300 INFO Got a signal from the OS: "terminated"

2021-09-03T12:56:59.028+0300 INFO piecestore downloaded {"Piece ID": "CP7N6W262AHPVOJ6JOAWBS2ADOTJS4LX2LO4W7TGV626EWIK7Q6Q", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET"}

2021-09-03T12:56:59.028+0300 INFO piecestore upload canceled {"Piece ID": "H5LRE6JXPKTGCQFWPRCAX7AM4HZ5QKHUDA4J43HH4EA57HJ5GBQA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Size": 0}

2021-09-03T12:56:59.028+0300 INFO piecestore upload canceled {"Piece ID": "LRRS7QVE2W4H5TXAQDEH4NCQRTONWDV5KEDXDBVFPPST33OAJJDA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Size": 0}

2021-09-03T12:56:59.028+0300 INFO piecestore downloaded {"Piece ID": "FATFDRA67H2PAKW5UQ5FAGHH5EQ6I24OFUL7ZVNVXXDVVQOZFXAA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET"}

2021-09-03T12:56:59.028+0300 INFO piecestore downloaded {"Piece ID": "GO3VINNXCWBMAFCNRZG6ESZRE4ULUUNMJPF5576NHURHNKVJRKHA", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "Action": "GET"}

START OF CURRENT LOG

root@storj:~# head -n 40 /var/log/storj/node19.log

2021-09-03T14:02:32.845+0300 INFO Configuration loaded {"Location": "/mnt/storj/node19/config.yaml"}

2021-09-03T14:02:32.859+0300 DEBUG Anonymized tracing disabled

2021-09-03T14:02:32.872+0300 INFO Operator email {"Address": "krey@irinium.ru"}

2021-09-03T14:02:32.872+0300 INFO Operator wallet {"Address": "0x7b9c2d38dfc07692e955476f907541a7ca186573"}

2021-09-03T14:02:33.118+0300 DEBUG Version info {"Version": "1.36.1", "Commit Hash": "e1410f6a55ee452e7b57f37744ea185d1643f4d9", "Build Timestamp": "2021-08-09 16:56:43 +0300 MSK", "Release Build": true}

2021-09-03T14:02:33.739+0300 DEBUG version Allowed minimum version from control server. {"Minimum Version": "1.24.0"}

2021-09-03T14:02:33.739+0300 DEBUG version Running on allowed version. {"Version": "1.36.1"}

2021-09-03T14:02:33.740+0300 INFO Telemetry enabled {"instance ID": "1aGsGmNPwGzwQvr2RMGDoeedsdGrC9wKnY1ZqEvxo1s36Lxbd4"}

2021-09-03T14:02:33.761+0300 INFO db.migration Database Version {"version": 53}

2021-09-03T14:02:33.763+0300 DEBUG db Database version is up to date {"version": 53}

2021-09-03T14:02:33.936+0300 DEBUG trust Fetched URLs from source; updating cache {"source": "https://www.storj.io/dcs-satellites", "count": 6}

2021-09-03T14:02:33.937+0300 DEBUG trust Satellite is trusted {"id": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2021-09-03T14:02:33.937+0300 DEBUG trust Satellite is trusted {"id": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs"}

2021-09-03T14:02:33.937+0300 DEBUG trust Satellite is trusted {"id": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}

2021-09-03T14:02:33.937+0300 DEBUG trust Satellite is trusted {"id": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE"}

2021-09-03T14:02:33.937+0300 DEBUG trust Satellite is trusted {"id": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}

2021-09-03T14:02:33.937+0300 DEBUG trust Satellite is trusted {"id": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}

2021-09-03T14:02:33.937+0300 INFO preflight:localtime start checking local system clock with trusted satellites' system clock.

2021-09-03T14:02:34.824+0300 INFO preflight:localtime local system clock is in sync with trusted satellites' system clock.

2021-09-03T14:02:34.824+0300 DEBUG servers started {"items": ["debug", "server"]}

2021-09-03T14:02:34.824+0300 DEBUG services started {"items": ["version", "trust", "contact:chore", "PieceDeleter", "pieces:trash", "piecestore:cache", "piecestore:monitor", "retain", "orders", "nodestats:cache", "console:endpoint", "gracefulexit:blobscleaner", "gracefulexit:chore", "collector", "bandwidth"]}

2021-09-03T14:02:34.824+0300 INFO Node 1aGsGmNPwGzwQvr2RMGDoeedsdGrC9wKnY1ZqEvxo1s36Lxbd4 started

2021-09-03T14:02:34.824+0300 INFO Public server started on 192.168.156.219:4319

2021-09-03T14:02:34.824+0300 INFO bandwidth Performing bandwidth usage rollups

2021-09-03T14:02:34.824+0300 INFO Private server started on 127.0.0.1:4219

2021-09-03T14:02:34.824+0300 INFO trust Scheduling next refresh {"after": "6h5m40.040805768s"}

2021-09-03T14:02:34.824+0300 DEBUG pieces:trash starting to empty trash

2021-09-03T14:02:34.824+0300 WARN piecestore:monitor Disk space is less than requested. Allocated space is {"bytes": 12386991667723}

2021-09-03T14:02:34.824+0300 INFO failed to sufficiently increase receive buffer size (was: 208 kiB, wanted: 2048 kiB, got: 416 kiB). See https://github.com/lucas-clemente/quic-go/wiki/UDP-Receive-Buffer-Size for details.

2021-09-03T14:02:34.825+0300 DEBUG contact:chore Starting cycle {"Satellite ID": "12tRQrMTWUWwzwGh18i7Fqs67kmdhH9t6aToeiwbo5mfS2rUmo"}

2021-09-03T14:02:34.825+0300 DEBUG contact:chore Starting cycle {"Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE"}

2021-09-03T14:02:34.825+0300 DEBUG contact:chore Starting cycle {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}

2021-09-03T14:02:34.825+0300 DEBUG contact:chore Starting cycle {"Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2021-09-03T14:02:34.825+0300 DEBUG contact:chore Starting cycle {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs"}

2021-09-03T14:02:34.825+0300 DEBUG contact:chore Starting cycle {"Satellite ID": "12rfG3sh9NCWiX3ivPjq2HtdLmbqCrvHVEzJubnzFzosMuawymB"}

2021-09-03T14:02:34.837+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "CI65EGSF3QPRVVL3EKEBZJGZOA5XYN5QJBBYT4235QPGWGUP32ZQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T14:02:34.837+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "J3J2T45OMGZJFJDTEPKHQPCME2KM6LREPSUBIKK4GDZ2Z6XU6UIA", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T14:02:34.837+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "2MLEFHZXHZEJNCDRWPYP3RX7XL4LSVCXEUSVPW5KV3QUVPZP4AUA", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T14:02:34.837+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "EWUW5M22HHYCFPEARWD4HSMMET6BOM3FBDITQTWKTRJ47WUF2BAA", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T14:02:34.838+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "LR4ALUGVAKA7NJTYKVUJWOQ4ZTKOF6Q2VGTBIKB6O6PSBZ75VSPA", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

ARCHIEVED LOG

root@nas:/mnt/log/storj/v3/20210903/storj# cat node19.log | grep Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ

2021-08-19T06:51:54.349+0300 INFO piecestore upload started {"Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "Available Space": 1788539305984}

2021-08-19T06:52:22.915+0300 INFO piecestore uploaded {"Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "Size": 2319360}

2021-08-19T17:18:29.442+0300 INFO piecestore download started {"Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET"}

2021-08-19T17:18:35.268+0300 INFO piecestore downloaded {"Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET"}

2021-08-20T00:54:30.748+0300 INFO piecestore download started {"Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET"}

2021-08-20T00:54:34.787+0300 INFO piecestore downloaded {"Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET"}

CURRENT LOG

root@storj:~# cat /var/log/storj/node19.log | grep Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ | head

2021-09-03T14:02:35.117+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T14:45:11.246+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T15:45:11.214+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:152\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T16:45:11.195+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:152\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T17:45:11.208+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:152\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T18:45:11.199+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:152\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T19:45:11.198+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:152\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T20:45:11.241+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:152\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T21:45:11.206+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:152\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T22:45:11.246+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "Q7ZL3U66CTGO2PMC5KCA56EXZWZMUYL7UFISDTUFFTIW7LH7ZFIQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:152\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

ARCHIEVED LOG

root@nas:/mnt/log/storj/v3/20210903/storj# cat node19.log | grep -i I7S3INA7F3K5NUK5SCCJACLK4AHUDLHBQ5S3WC3DTNL3QMMN27LQ

2021-08-19T07:30:02.316+0300 INFO piecestore upload started {"Piece ID": "I7S3INA7F3K5NUK5SCCJACLK4AHUDLHBQ5S3WC3DTNL3QMMN27LQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "Available Space": 1787339735040}

2021-08-19T07:30:08.538+0300 INFO piecestore uploaded {"Piece ID": "I7S3INA7F3K5NUK5SCCJACLK4AHUDLHBQ5S3WC3DTNL3QMMN27LQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "Size": 2319360}

CURRENT LOG

root@storj:~# cat /var/log/storj/node19.log | grep I7S3INA7F3K5NUK5SCCJACLK4AHUDLHBQ5S3WC3DTNL3QMMN27LQ | head

2021-09-03T14:02:35.118+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "I7S3INA7F3K5NUK5SCCJACLK4AHUDLHBQ5S3WC3DTNL3QMMN27LQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-09-03T14:45:11.248+0300 ERROR collector unable to delete piece {"Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Piece ID": "I7S3INA7F3K5NUK5SCCJACLK4AHUDLHBQ5S3WC3DTNL3QMMN27LQ", "error": "pieces error: filestore error: file does not exist", "errorVerbose": "pieces error: filestore error: file does not exist\n\tstorj.io/storj/storage/filestore.(*blobStore).Stat:103\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).pieceSizes:239\n\tstorj.io/storj/storagenode/pieces.(*BlobsUsageCache).Delete:220\n\tstorj.io/storj/storagenode/pieces.(*Store).Delete:299\n\tstorj.io/storj/storagenode/collector.(*Service).Collect:97\n\tstorj.io/storj/storagenode/collector.(*Service).Run.func1:57\n\tstorj.io/common/sync2.(*Cycle).Run:92\n\tstorj.io/storj/storagenode/collector.(*Service).Run:53\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2.1:87\n\truntime/pprof.Do:40\n\tstorj.io/storj/private/lifecycle.(*Group).Run.func2:86\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}