When I stop two of my nodes (version 1.35.3, not the latest, but still within the valid range between 1.24.0 and 1.37.1),

they do not stop but instead start writing to the logs at high speed, something like this:

The logs reach the following sizes quite quickly:

-rw-r--r-- 1 root root 4.2G Sep 17 02:05 node02.log

-rw-r--r-- 1 root root 4.3G Sep 17 02:04 node03.log

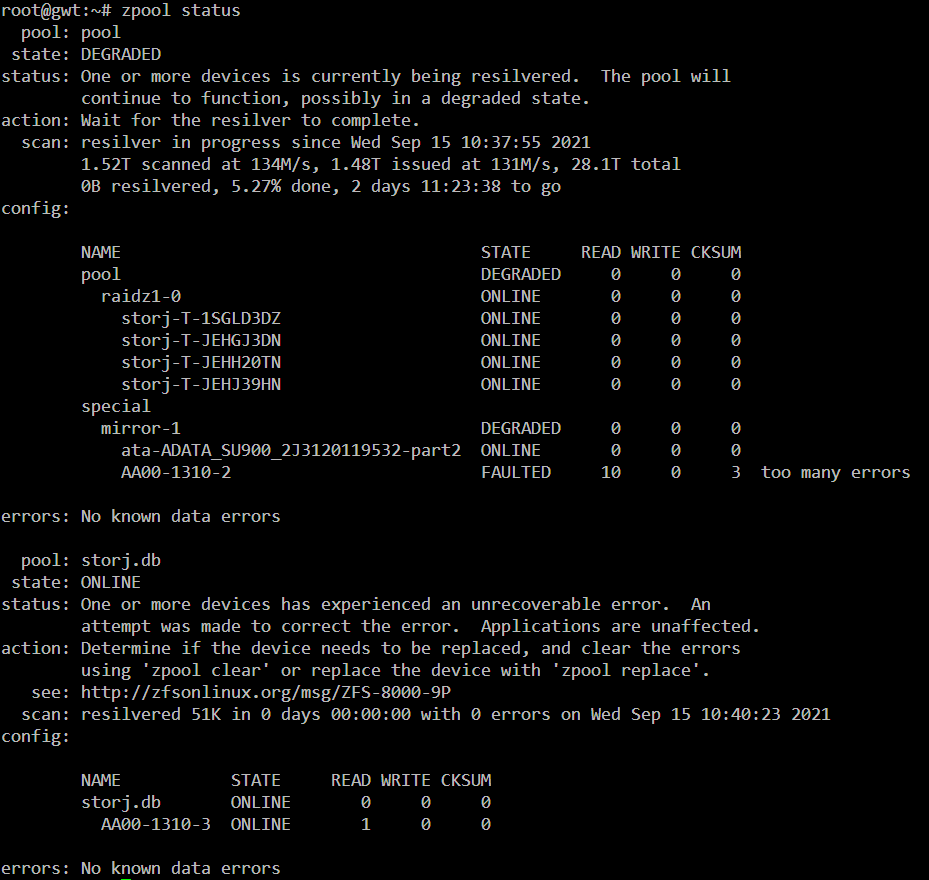

I had reason to believe that the problem could be caused by corrupted databases.

I ran PRAGMA integrity_check and VACUUM on them. Everything completed without problems. I launched the nodes, the log looked fine, I waited, then stopped them, and the problem was reproduced.
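For anyone who wants to repeat the database check described above, here is a minimal sketch using Python's standard `sqlite3` module. The function names `check_and_vacuum` and `check_directory` and the directory layout (a folder of `*.db` files) are my own assumptions for illustration, not part of the storagenode tooling. It runs `PRAGMA integrity_check` on each file and runs `VACUUM` only when the check returns `ok`.

```python
import sqlite3
from pathlib import Path


def check_and_vacuum(db_path):
    """Run PRAGMA integrity_check on one SQLite file; VACUUM it if the check passes.

    Returns the list of messages from integrity_check (["ok"] for a healthy file).
    """
    # isolation_level=None puts the connection in autocommit mode,
    # which VACUUM requires (it cannot run inside a transaction).
    conn = sqlite3.connect(str(db_path), isolation_level=None)
    try:
        messages = [row[0] for row in conn.execute("PRAGMA integrity_check")]
        if messages == ["ok"]:
            conn.execute("VACUUM")
        return messages
    finally:
        conn.close()


def check_directory(db_dir):
    """Check every *.db file in db_dir (hypothetical layout) and collect the results."""
    return {
        path.name: check_and_vacuum(path)
        for path in sorted(Path(db_dir).glob("*.db"))
    }
```

Calling `check_directory` with the path to the node's database folder returns a dictionary mapping each file name to its integrity_check messages. The node should presumably be stopped first so that nothing else has the files open while they are being checked.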

I erased all the databases and started the nodes without them. The databases were created successfully and the log looked fine, but when I stopped the nodes, the problem was reproduced.

I am writing this text during a break while the remote machine is being soft rebooted and is trying to stop the node processes.

…Next, I updated the storagenode binary and erased the DBs; the problem was reproduced again.

I am writing this post because it seems to me that the problem may be potentially serious for the entire network. I do not see any mistakes of my own that could lead to such serious consequences.

Please just pay attention to it.

If the problem is on my side and I lose these nodes (my very first and largest ones), no problem; I am not asking to restore them.

Affected nodes:

node02 - 1M1zL3YVVAsdLtvcTbpxhTahmp9jrhiw3GsQwAmukSsnLmWv7U

node03 - 1boQ78NbzEmNq59UwABfYJH1TzjBG7TvnVqjz5ho95FcdchAww

This is the short version.

In the long version, the timings are: