What is a drive’s main purpose if not I/O?

I’d be sharing that I/O. Perfect example: I have a 6TB drive in use for my Proxmox backups (one set; there are two). If I were to put a Storj node on the same disk, the Proxmox backups would complete more slowly. Note that the hypervisor is connected to the NAS by 10 Gbit Ethernet, so the network is not the bottleneck here.

So? Storj has very little bandwidth, so it doesn’t impact the drive performance a lot. Obviously, if you want to use the drive for high-performance I/O, don’t put another service on it or, even better, get an SSD.

I’m perfectly happy with the performance of my current backups - that don’t share any disk with Storj. So I do not understand your answer whatsoever.

It might have very little bandwidth, but most of the I/O operations are very small and very random. Spinning drives are extremely bad at handling workloads like this, causing other services running from the same drive to slow down significantly.
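A rough sketch of why a handful of random operations can hurt a sequential job on the same spindle. This is a simplified model, not a measurement: it assumes every random operation keeps the head busy for a fixed time and that the backup only streams during whatever is left of each second. The function name and all numbers (200 MB/s streaming rate, 12 ms per random operation) are hypothetical.

```python
def backup_throughput_mb_s(seq_mb_s, random_iops, ms_per_random_io):
    """Sequential throughput left over when random I/O shares one spindle.

    Simplified model: each random operation occupies the head for
    ms_per_random_io milliseconds, and only the remaining fraction of
    every second is available for streaming the backup.
    """
    busy_fraction = min(1.0, random_iops * ms_per_random_io / 1000.0)
    return seq_mb_s * (1.0 - busy_fraction)


# Hypothetical numbers, not measurements: 200 MB/s streaming rate and
# 12 ms per random operation (seek plus rotational latency).
for iops in (0, 10, 25, 50):
    remaining = backup_throughput_mb_s(200, iops, 12)
    print(f"{iops:>3} random IOPS -> {remaining:.0f} MB/s left for the backup")
```

In this model the bandwidth the random workload moves is irrelevant; only the number of operations matters, which is why a low-bandwidth service can still slow a backup noticeably. The model ignores caching, queue reordering, and the extra seek back to the backup stream, so treat it as an order-of-magnitude illustration only.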

Yes, but it does not mean you have to put storagenode on a separate drive. If your storage system cannot handle that level of I/O, it’s not suitable for storagenode. Don’t run the node.

Note that most of the I/O that storagenode generates today is database sync writes. Disks can only handle 200 IOPS on a good day. You have to have some sort of solution (like a ZFS special device) to get any decent performance from your server even without storagenode. And if you do, additional I/O from storagenode becomes a drop in the ocean.
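For reference, a back-of-the-envelope sketch of where a figure in that range comes from. It assumes queue depth 1 and that each random operation costs one average seek plus half a revolution of rotational latency; the seek times used are assumed illustrative values, not numbers from this thread or from any datasheet.

```python
def random_iops(rpm, avg_seek_ms):
    """Rough random IOPS ceiling for one spinning disk at queue depth 1."""
    # On average the platter has to turn half a revolution to reach the sector.
    rotational_latency_ms = 0.5 * 60_000 / rpm
    return 1000.0 / (avg_seek_ms + rotational_latency_ms)


# Assumed seek times: 8.5 ms for full-stroke random access,
# 1.0 ms for accesses confined to a small region of the disk.
for label, seek_ms in (("random across the disk", 8.5), ("short seeks only", 1.0)):
    print(f"7200 rpm, {label}: ~{random_iops(7200, seek_ms):.0f} IOPS")
```

With these assumptions a 7200 rpm drive only approaches 200 IOPS when seeks are short; fully random access lands well below that, and sync writes cannot be batched away by the drive’s cache.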

I don’t see the problem. Don’t run a storage node on a Raspberry Pi with a USB SMR drive from Costco and you will be fine.

This would be weird, as there are known TBW warranty limits for some drives. Can you find these posts?

Correct! If I/O doesn’t affect a drive’s life, how do bad sectors appear? This would suggest that after 10 years, a drive with no data on it, or never accessed even once (0 I/O), would have the same number of bad sectors (or any bad sectors at all) as a drive used for storagenodes or whatever, with daily high I/O. I don’t think bad sectors appear out of thin air.

Just stumbled across this, about windmill discussion… if it’s ok to link twitter here:

https://twitter.com/goodfoodgal/status/1682676336815636480

Everything affects everything technically but then any discussion loses meaning.

Some things affect more than others.

I would not worry about temperatures or I/O. I bought drives to use them, not to throw them away in the end in pristine condition.

I would worry about not kicking the drive while it’s spinning, if your drive is not in an unwieldy 20kg enclosure.

It’s like buying a car and then avoiding driving it so as not to “wear it out”.

It’s madness. The sole reason disks exist is to serve data. And if the disk fails from exhaustion in a few years, I got my money’s worth out of it. If it is in pristine condition, I overpaid. Should have bought a shittier disk.

But then disks are commodities today and brands don’t matter. I’m already buying the cheapest I can find, used.

There are better things in life to worry about than the amount of disk I/O. Do you also worry about the amount of water your plumbing handles daily and avoid washing?

Uhh… What?

(20 chars)

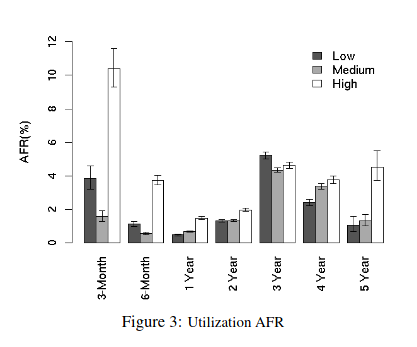

If I can prolong the life of a single 20 TB drive by one month, that’s 30 USD in additional Storj revenue. If by adding a fan I can reduce the temperature of 4 drives in a NAS, each 20 TB, and reduce their AFR by 1-2% this way, that’s way more than 1 month in MTTF.
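A minimal sketch of that arithmetic, under loudly stated assumptions: drive lifetimes follow a constant failure rate (exponential model), the baseline AFR is 3%, and the planning horizon is 5 years. Neither the baseline AFR nor the horizon comes from this thread; only the 30 USD per drive-month and the 4 drives do. The helper names are made up for illustration.

```python
import math


def failure_rate_per_year(afr):
    """Constant hazard rate that produces the given annualized failure rate."""
    return -math.log(1.0 - afr)


def mttf_years(afr):
    """Mean time to failure for an exponential lifetime with this AFR."""
    return 1.0 / failure_rate_per_year(afr)


def expected_months_in_service(afr, horizon_years):
    """Expected months a drive survives within a fixed planning horizon."""
    rate = failure_rate_per_year(afr)
    return 12.0 * (1.0 - math.exp(-rate * horizon_years)) / rate


USD_PER_DRIVE_MONTH = 30.0  # from the post: one full 20 TB drive
DRIVES = 4                  # from the post: 4 drives in the NAS
BASELINE_AFR = 0.03         # assumption, not from the thread
HORIZON_YEARS = 5           # assumption: planning horizon

for improved_afr in (0.02, 0.01):
    gained = (expected_months_in_service(improved_afr, HORIZON_YEARS)
              - expected_months_in_service(BASELINE_AFR, HORIZON_YEARS))
    print(f"AFR {BASELINE_AFR:.0%} -> {improved_afr:.0%}: "
          f"MTTF {mttf_years(BASELINE_AFR):.0f} -> {mttf_years(improved_afr):.0f} years, "
          f"+{gained:.2f} months per drive, "
          f"+{gained * DRIVES * USD_PER_DRIVE_MONTH:.0f} USD for the NAS")
```

The MTTF figure moves by years under this model, but real drives do not follow a constant failure rate over decades, so the horizon-bounded number is the more honest one to compare against the cost of a fan. It also assumes a failed drive earns nothing for the rest of the horizon.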

BTW, this is one of the reasons why large operations are more efficient. I probably wouldn’t bother about details like that for a single drive. But for ten of them it starts becoming enticing.

Fusion reactors are not dangerous. The difficulty is in keeping the reaction going at all. If anything goes wrong in even the slightest way, the reaction stops. And radiation is minimal to begin with. There’s no danger of meltdown like with fission reactors, and no pollutants as byproducts. The output is just helium, which there is a growing shortage of, so it would actually be a useful byproduct. They are, however, crazy expensive and so far have not been able to produce more energy than they consume. It’s one of those things that seems to be perpetually 10 years away from happening, but when it finally does it will be able to provide a safe, clean, and effectively endless energy source.

That said, modern fission reactors have also become incredibly safe if you compare them to the secondary effects of any other energy source, leading to far fewer deaths per unit of energy generated than any other source (including wind and solar, which have caused deaths due to chemical exposure and dangerous maintenance situations). Nuclear just has a major image issue due to the 2 well-known large-scale disasters, the biggest of which was a nuclear weapons factory converted to a mismanaged and badly protected power plant lacking good safety controls. The other caused a single death from cancer caused by radiation, with the only other deaths later attributed to the disaster being the ones caused by the physical and mental stress of evacuation and relocation efforts, which was exacerbated by the ongoing turmoil as a result of the tsunami.

It is very clean and one of the few clean sources that allows generation based on demand rather than weather conditions. But due to dam failures it’s also one of the more dangerous ones. Additionally, it requires an environment where dams can actually be built, and much of the world simply doesn’t have that luxury. I do really love using hydro for energy storage as part of a larger green energy network though, which can be done more locally and at smaller scale in many more places than large hydro dams alone.

For SSDs, not for magnetic drives as far as I know.

I don’t mind you linking Twitter, but I would prefer some sources with actual data to back up their conspiracy claims. I’m not going to address the details of a specific country’s incentive programs, but the claims made regarding energy use vs production are insane. Just check this link for example: https://www.quora.com/How-much-power-does-a-wind-turbine-need-to-consume-to-start-working-and-to-operate

Please see WD Red Pro 20TB Launched with Wickedly Weak Workload Rating. It’s not exactly TBW, my memory was wrong, but it’s still a workload “rating”. Given that it’s apparently counting both reads and writes, I suspect it’s about longevity of actuators… but it’s just my hypothesis.

This is the study I was talking about: https://static.googleusercontent.com/media/research.google.com/en//archive/disk_failures.pdf It is not even that recent; it was just recently picked up by the media again.

I know this one, it’s not exactly recent at this point… Besides that, even this paper states that there is an observable difference in AFR for young and old drives:

The only thing they point out is that there seems to be no difference in the middle of the bathtub curve.

I’m not sure I’d trust this study anymore for modern drives. Backblaze has observed that the bathtub curve looks pretty different for modern drives.

With low or medium utilization, you have the problems of spin up and spin down, maybe even warm up and cool down, which perhaps are way more damaging than workload.

I believe that workload rating is just there to separate the enterprise lineup from the consumer lineup. All companies use the same marks: 150 TB/year (I believe) for basic NAS drives, 300 TB/year for pro NAS drives and 600 TB/year for enterprise/data center drives. Maybe these are stated in some rulebook or international standard, like the motor oil specs.
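To put those ratings in perspective, here is a small conversion from TB/year to the average sustained transfer rate they allow. It uses the three tiers quoted above as-is (they were given from memory, so treat them as approximate), takes 1 TB as 10^12 bytes, and assumes the workload is spread evenly over a 365-day year.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def sustained_mb_s(tb_per_year):
    """Average MB/s (reads plus writes) that exactly uses up a yearly rating."""
    bytes_per_year = tb_per_year * 1e12  # decimal terabytes
    return bytes_per_year / SECONDS_PER_YEAR / 1e6


# Tiers as quoted in the post, not verified against datasheets.
for tier, rating in (("basic NAS", 150), ("pro NAS", 300), ("enterprise", 600)):
    print(f"{tier}: {rating} TB/year is about {sustained_mb_s(rating):.1f} MB/s around the clock")
```

Comparing a node’s average combined read and write rate against these figures shows how close it actually comes to the rating.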

I strongly suspect some degree of binning is involved. Passing drives → label enterprise, slap 5 year warranty.

Barely passing — consumer market.

Straight up rejects - white label that shit into usb enclosures.

Anecdotally, I never spin down disks, but my server is and always was on the patio. Outside, essentially. Outside temperature changes ± 25F daily. No issues.

I don’t pamper my hardware.