yes, i am creating a bottleneck, of that there is no doubt, and yes, the system will run a lot better without sync always… at least short term…

the immediate effect of switching sync from always to standard is things like higher file handling rates when moving around mostly empty files, which go up by a factor of like 50x if not more…

it would give me higher throughput because i wouldn’t have to go through the ssd, i also wouldn’t create extra internal bandwidth utilization from moving data around inside the system.

pushing all data to the SLOG ofc makes all writes essentially double, because one copy goes to the SLOG and the other goes to memory and then on to the disks… i’m going to count memory and then disk as one path… even tho it will essentially use twice the bandwidth of the SLOG, depending on the pool configuration and host hardware… in case of a mirrored SLOG that SLOG bandwidth usage would of course also be doubled… so yeah, running sync standard would be nice…
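to put rough numbers on that doubling, here is a minimal python sketch. it is a simplified model, not a measurement: the function name `write_traffic` and the `pool_overhead` factor are made up for illustration, and it treats every write under sync standard as async, ignoring compression and ZIL block overhead.

```python
def write_traffic(payload_gb, sync_always, slog_mirror_width=1, pool_overhead=1.0):
    """Rough estimate of device write traffic for a given payload.

    Assumptions (simplified model, not measured):
    - with sync=always every write is logged once per SLOG device in the mirror
    - the same data is later flushed from memory to the pool once
    - pool_overhead is a hypothetical factor for parity/padding (1.0 = none)
    """
    slog_gb = payload_gb * slog_mirror_width if sync_always else 0.0
    pool_gb = payload_gb * pool_overhead
    return {"slog_gb": slog_gb, "pool_gb": pool_gb, "total_gb": slog_gb + pool_gb}


if __name__ == "__main__":
    cases = [
        ("sync standard, async writes only", dict(sync_always=False)),
        ("sync always, single SLOG", dict(sync_always=True)),
        ("sync always, mirrored SLOG", dict(sync_always=True, slog_mirror_width=2)),
    ]
    for label, kwargs in cases:
        print(label, write_traffic(100, **kwargs))
```

so in this model 100 GB of incoming data turns into 200 GB of device writes with a single SLOG, and 300 GB with a mirrored one, before any raidz parity overhead on the pool side.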

but with sync always i also gain improved read speeds now, and in the future i gain even more from less fragmented data, because there are “no” random writes to my hdd’s… on top of that i ensure the data gets written onto the hdd’s instead of sloshing around in memory for up to something like 167 sec for non-sync writes in rare cases… which again risks data integrity, because there is limited redundancy on data sitting in memory… even with ECC RAM.

database writes are known to cause heavy fragmentation on storage, and thus for large scale, long term database loads a sync=always approach is recommended and taken by most.

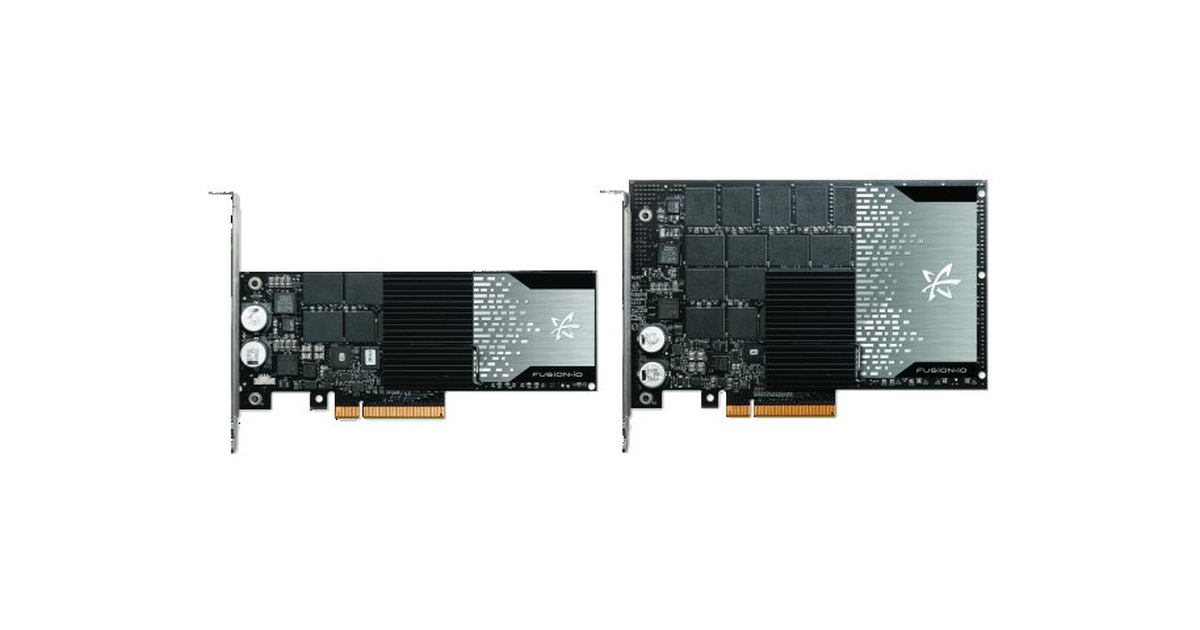

it’s not for fun that companies like oracle use and produce these cards, and the gains from having such cards can be immense… sync always just forces me to take that route…

and no, i will not be turning off sync always even tho i can… i have tested it both on and off by now… and tho there are some issues with it, they will be about fully mitigated in any practical sense by an io accelerator card… i’m by far no expert on all of this stuff, i just researched it a lot recently and follow what i consider to make sense, going by the recommendations of the oracle zfs manuals, ixsystems blog posts and other such sources i have been able to find that actually deal with this kind of stuff…

also i plan to make this pool massive… so upgrading to something two sizes too small is no advantage long term… i don’t expect to be replacing this card for a long time and might move it into the next server, because i doubt ssd tech will become 10-15x better before i upgrade that…

yeah like i said… i want to just throw everything into one pool… make local storage on my entire network obsolete… and an expensive luxury… why have a 500GB nvme SSD in a gaming pc when it’s used maybe 5-8 hours a week… i think that tomorrow’s storage, and most likely computers, will be much more local datacenter like…

and sure this is a crazy kinda setup… which might not make sense, but i think it does… i think there are plenty of good reasons to run the setup like this… especially long term… after all a storagenode takes 9 months just to start giving 100% return, so data reliability should be counted in the years…

i don’t expect any errors to be caused from this… i haven’t seen any yet and i run scrubs on a frequent basis… a zfs scrub reads and verifies checksums on everything…

so if even a bit was wrong, then i would know about it… and thus far i haven’t had one recorded on drive.

even with me being kinda mean to it for the first long while… ofc other factors come into play… like neglect and disk redundancy… as you wisely stated early on when talking about storage redundancy, raid with 1 redundant drive isn’t an optimal solution… and me running raidz1 x3 is imo the weakest point in my setup… and then maybe my ram, since i only run ECC without any mirroring or spare setup…

but again, ram data is redundant just like slog data is redundant… as long as one of them is working and/or powered…

so really, if i should improve my data integrity even more, i should find a way to do a raidz2 (two redundant drives), which gives me room for missing that a disk was bad, or for having a bad drive i was unaware of during a rebuild… ofc i mitigated some of that risk by having multiple raidz1’s with few drives each, thus i can rebuild each drive in little time and with greatly reduced data overhead, which limits the time my system is exposed to data corruption… while bigger arrays / raids will need to work much longer to fix the issue, which increases the chance of another disk failing in the meantime…

and i want to do that… it’s just not really that practical if one also wants nice raw iops on the hdd’s, while not losing too much data capacity, and keeping everything affordable and easy to manage…
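the tradeoff between several small raidz1’s and one wide raidz2 can be sketched with some quick arithmetic. this is a rough model under stated assumptions: the 9 drives of 6 TB are hypothetical numbers (not my actual pool), `raidz_layout` is a made-up helper, and the rebuild estimate simply assumes every surviving drive in the affected vdev has to be read in full.

```python
def raidz_layout(vdevs, width, parity, drive_tb, fill=1.0):
    """Compare raidz layouts with simple arithmetic.

    Assumptions (hypothetical, for illustration only):
    - all drives are the same size and all vdevs have the same width
    - rebuilding one drive reads the used part of every other drive
      in the same vdev; other vdevs are not touched
    - padding, metadata and compression are ignored
    """
    usable_tb = vdevs * (width - parity) * drive_tb
    rebuild_read_tb = (width - 1) * drive_tb * fill
    return {
        "drives": vdevs * width,
        "usable_tb": usable_tb,
        "failures_tolerated_per_vdev": parity,
        "rebuild_read_tb": rebuild_read_tb,
    }


if __name__ == "__main__":
    # hypothetical example: 9 drives of 6 TB each, pool completely full
    print("3 x raidz1 (3 wide):", raidz_layout(vdevs=3, width=3, parity=1, drive_tb=6))
    print("1 x raidz2 (9 wide):", raidz_layout(vdevs=1, width=9, parity=2, drive_tb=6))
```

with these example numbers the three small raidz1’s give 36 TB usable and need to read about 12 TB to rebuild a drive, while the single raidz2 gives 42 TB usable and survives two failures, but has to read about 48 TB for the same rebuild. the raidz1 layout also has three vdevs to spread iops over instead of one.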