In order to have 1 PB stored, he would need ~66 public IP addresses… I highly doubt that he has that…

i forget how much data he said he had… but it was over 500TB so just used 1PB as an over estimate, and i’m sure there are also more than 1000 nodes able to take data…

just wanted to see if it was possible that it could be him, if he was telling the truth… and the numbers don’t seem that far off…

but i dunno

even with 66 ip addresses and the ingress we have been seeing, getting to 15TB per node or whatever would take… well years… ofc when i joined a year or so ago my node went past 10 TB in just a few months because of the latter end of the storjlabs pre launch stress tests and “SNO Rewards”
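A rough sketch of the arithmetic behind those numbers, in case anyone wants to plug in their own. The 1 PB total and 15 TB per node come from the posts above; the ingress figure is purely a hypothetical placeholder, not a measured value. Rounding up gives 67 rather than ~66.

```python
import math

def nodes_needed(total_tb: float, tb_per_node: float) -> int:
    """Number of nodes (one per public IP) needed to hold total_tb."""
    return math.ceil(total_tb / tb_per_node)

def months_to_fill(tb_per_node: float, ingress_tb_per_month: float) -> float:
    """Months for one node to reach tb_per_node at a constant ingress rate."""
    return tb_per_node / ingress_tb_per_month

TOTAL_TB = 1000             # 1 PB, the over estimate used above
TB_PER_NODE = 15            # per-node size mentioned above
ASSUMED_INGRESS_TB = 0.5    # hypothetical ingress per node per month

print(nodes_needed(TOTAL_TB, TB_PER_NODE))
print(months_to_fill(TB_PER_NODE, ASSUMED_INGRESS_TB))
```

With that assumed ingress a single node would need 30 months to fill, which is where the "well years" comes from. Deletes are ignored here, so real numbers would be worse.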

Sadly i was just on the tail end of the juicy surge payouts… so basically got those around when my node was vetted… so got like 5$ + 50% lol

but there is also a certain advantage to knowing that the network is live and that what we are seeing is real world numbers, instead of just a temporary boost that one cannot expect to last.

hopefully the new pricing for users will have a long term beneficial impact on the network.

If he works for an ISP or a hosting/cloud company, or a major university etc., that would be easy. Even if he doesn’t, it is possible to collect a lot of single IP addresses from unique blocks at 1 USD/IP/month or less, which can be made profitable with some dedication.

6 month old node and not fully vetted yet, i guess… haven’t bothered checking… was fine but it seems like we switched to getting ingress from a different satellite which it apparently wasn’t vetted for.

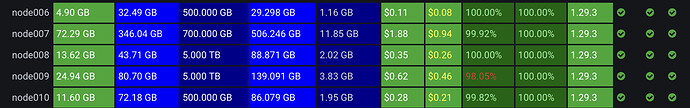

and for reference

15 month node and ofc fully vetted, and near optimal performance tho have been seeing a % or so decrease in upload success rates so at like 98% or better… something i’m looking into

Nope… Still not related

you saying you are also seeing similar amounts of deletes?

deletes have nothing to do with ingress. you said

(It’s logical that your trash goes up after 3 days of downtime…)

that it is…

had also been wondering why it didn’t show up until 3½ weeks later.

well it did basically equal what i had in ingress, which is why i said it set me back a month.

Oh yeah, the trash is just a direct result of repair while you were down. The bigger the node and the older the data on the node the harder the hit. Try to limit downtime to below 4 hours to prevent that.

As for why it took so long, not sure. But your node relies on garbage collection to get rid of the pieces it shouldn’t have anymore. Maybe the frequency of garbage collection has been lowered on the satellite end.

@andrew2.hart

thank you andrew, i did sort of expect it wasn’t related to my downtime

yeah i try to keep it at near zero… but i really didn’t want to reinstall and reconfigure the server.

lost my entire OS and took me about 60 hours until i finally accepted that i couldn’t save my data in proxmox, because it’s a crappy OS

turned out most of it did survive anyways… aside from the things i really needed short term to get operational fast lol

yeah last time i had longer down time the trash was almost immediate…

so did sort of think i had gotten past it… for whatever reason…

does look like andrew is actually seeing almost the same ratio…of trash to TB…

and i wasn’t really looking for help or an argument about this.

just sort of interested in if it was the downtime that caused the trash now, or not…

i guess the answer is maybe…

we really need a deleted graph on the bandwidth util

now you may say that it’s not possible that one wouldn’t get deleted data with downtime…

and i sort of agree, however it hadn’t been more than a couple of months since last time i had a bit of extended downtime, maybe 3.

which also gave me a lot of deletes like 550GB if memory serves.

so this was really why i wouldn’t expect to see a lot of deletes from my downtime.

because it was recently synced with the network last time i had extended downtime, and thus the repair triggered would be fairly limited… as i mainly have gotten like 1TB of data in those max 3 months.

the rest that was close to repair would already have been repaired, so only data that recently got near the repair limit should get triggered.

So you say that this is a viable way for a partial graceful shutdown? ^^

I’ve got a node that I wanted to downsize by ~200 GB for a long time now, but somehow the deletions just don’t come. Looks like that by a controlled downtime I can get it done without sacrificing all the node’s data.

have you tried setting the max storage lower than what is currently stored?

deletes should be happening all the time, but yes you could just use a bit of downtime i guess…

to rush the process, but you will have to have had good uptime for it to work reliably.

i think last time somebody was digging into deletion ratios it was like a few % to 5%

i forget the exact numbers, but it was something like that…

so even a 1TB node should see like 20-50 GB deletes each month.
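The estimate above is just stored size times a monthly deletion ratio. A minimal sketch, assuming "a few %" means roughly 2% and using decimal units (1 TB = 1000 GB); the function name is made up for illustration:

```python
def monthly_deletes_gb(stored_tb: float, deletion_ratio: float) -> float:
    """Expected deletes per month in GB for a given stored size and ratio."""
    return stored_tb * 1000 * deletion_ratio

# The range quoted above: roughly 2% to 5% per month on a 1 TB node.
low = monthly_deletes_gb(1, 0.02)
high = monthly_deletes_gb(1, 0.05)
print(f"{low:.0f}-{high:.0f} GB per month")
```

This treats the ratio as constant across nodes, which may not hold for every node.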

Yeah, in fact I did so almost half a year ago. So far the natural deletes trimmed maybe 30 GB. That node is about a year old though.

I think it may partially have been.

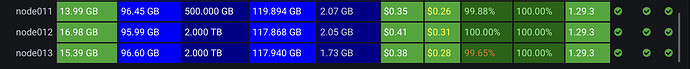

My largest node is just slightly smaller, but has quite a lot less trash.

This really doesn’t matter. Repair during long downtime will trigger for all pieces that are 1 above the repair threshold. 3 months is more than enough to have a fresh set of pieces needing repair.
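To illustrate the point about pieces sitting 1 above the repair threshold, here is a toy model. It is only a sketch under stated assumptions: the `Segment` type, the rule "repair triggers once healthy pieces are at or below the threshold", and the threshold value are all hypothetical and not taken from the satellite code.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    healthy_pieces: int   # pieces on online nodes, counting ours while we are up
    on_our_node: bool     # whether our node holds one of those pieces

def repaired_while_offline(segments, repair_threshold):
    """Segments that hit the threshold once our piece stops counting as healthy."""
    triggered = []
    for seg in segments:
        if not seg.on_our_node:
            continue  # our downtime does not change this segment
        remaining = seg.healthy_pieces - 1
        if remaining <= repair_threshold:
            triggered.append(seg)
    return triggered

ASSUMED_THRESHOLD = 52  # illustrative value only
segments = [
    Segment(healthy_pieces=53, on_our_node=True),   # 1 above threshold: repaired
    Segment(healthy_pieces=54, on_our_node=True),   # 2 above: survives our downtime
    Segment(healthy_pieces=53, on_our_node=False),  # not on our node: unaffected
]
print(len(repaired_while_offline(segments, ASSUMED_THRESHOLD)))
```

Every repaired segment leaves a piece on the offline node that later ends up in trash. Since other nodes keep churning, a few months is plenty of time for a fresh batch of segments to drift down to 1 above the threshold.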

I have no idea what you mean by this, but there is no syncing taking place.

I think it might be for now. But I would like to add the caveat that this is only the case because uptime requirements aren’t that strict atm. In the future this may be a very dangerous bet.

Not really. Nodes that have been full for a while will get fewer and fewer deletes over time since data that is likely to be deleted is replaced by new data until what remains is only data that is unlikely to be deleted.
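A small simulation can show this effect. It is a sketch with made-up numbers: data is split into a "short-lived" class and a "long-lived" class with hypothetical monthly deletion rates, and any freed space is refilled right away with the same mix of new data.

```python
def simulate_full_node(months, capacity_gb=1000.0, short_share=0.5,
                       short_rate=0.10, long_rate=0.001):
    """Return the monthly delete ratio of a node that stays full.

    All parameters are hypothetical: short_share is the fraction of incoming
    data that is short-lived, short_rate and long_rate are the monthly
    deletion probabilities of the two classes.
    """
    short = capacity_gb * short_share
    long_lived = capacity_gb - short
    ratios = []
    for _ in range(months):
        deleted_short = short * short_rate
        deleted_long = long_lived * long_rate
        deleted = deleted_short + deleted_long
        ratios.append(deleted / capacity_gb)
        # Freed space is refilled with new data in the original mix.
        short += deleted * short_share - deleted_short
        long_lived += deleted * (1 - short_share) - deleted_long
    return ratios

ratios = simulate_full_node(120)
print(f"month 1: {ratios[0]:.2%}, month 120: {ratios[-1]:.2%}")
```

In this toy model the delete ratio keeps dropping month after month, because the short-lived data gets deleted and part of its replacement is long-lived data that stays put.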

It’s in the earnings estimator, 1.5% in recent months. But, this will likely not be accurate for nodes that have been full for a bit. My full nodes only see about 0.1% deletes this month.

i mean the network syncs (repairs) because the node isn’t online.

if the node is always online, which it had been for like 6 months before the previous time i had issues… basically what i was trying to say is that the network is used to / not very out of sync with my node being offline.

i don’t think we can count the trash yet… mine seems to still be going up.

sure maybe it was due to downtime, but i don’t think so.

we will see where it sits in a few days…

also doesn’t trash only stick around for a week or so? i may have had some deletions from my downtime that i just didn’t really notice… only noticed it now because it was going up into the triple digits.

which is usually fairly rare, but i was also surprised to not have seen much trash from my downtime.

but figured random chance or maybe the network was used to my node being away since it had happened for a day or two not two months before.

can’t check exactly when, because my proxmox graphs are all gone with my OS

yeah this is a good point, it is also rather complex and difficult to predict as old datasets may suddenly become unviable and then be updated… so until the network is as old as the long term data that is being kept on it, we might see flux in the deletions.

i guess they may be affected by what you mention above, the data is essentially refined into being data that doesn’t get deleted often.

0.1% is really low… numbers like that cannot help but make me think of test data affecting it…

but i suppose it’s possible.

Yeah, I’m laughing about that. Partial graceful exit is exactly the only feature I’m still waiting for, and it turns out, it’s already there ^^ Except not paid the way a full graceful exit would be.