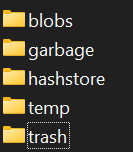

Yeah, the storage blobs were all empty. I took the risk and deleted each per-satellite folder, then the whole blobs folder as well.

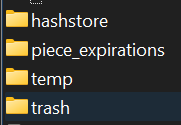

Only these four folders remain. Hopefully the screenshot is readable.

```
2025-08-28T21:08:33Z INFO piecestore uploaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Remote Address": "", "Size": }
2025-08-28T21:08:33Z INFO piecestore uploaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "Remote Address": "", "Size": }
2025-08-28T21:08:33Z INFO piecestore uploaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Remote Address": "", "Size": }
2025-08-28T21:08:33Z INFO piecestore downloaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": , "Remote Address": ""}
2025-08-28T21:08:33Z INFO piecestore uploaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Remote Address": "", "Size": }
2025-08-28T21:08:34Z INFO piecemigrate:chore enqueued for migration {"Process": "storagenode", "sat": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}
2025-08-28T21:08:34Z INFO piecemigrate:chore enqueued for migration {"Process": "storagenode", "sat": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs"}
2025-08-28T21:08:34Z INFO piecemigrate:chore enqueued for migration {"Process": "storagenode", "sat": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE"}
2025-08-28T21:08:34Z INFO piecemigrate:chore enqueued for migration {"Process": "storagenode", "sat": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}
2025-08-28T21:08:34Z INFO piecemigrate:chore all enqueued for migration; will sleep before next pooling {"Process": "storagenode", "active": {"12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S": true, "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs": true, "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE": true, "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6": true}, "interval": "10m0s"}
2025-08-28T21:08:35Z INFO piecestore uploaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "Remote Address": "", "Size": }
2025-08-28T21:08:35Z INFO piecestore uploaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Remote Address": "", "Size": }
2025-08-28T21:08:36Z INFO piecestore uploaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "Remote Address": "", "Size": }
2025-08-28T21:08:37Z INFO piecestore uploaded {"Process": "storagenode", "Piece ID": "", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "PUT", "Remote Address": "", "Size": }
```
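For anyone who wants to tally their own log the same way, here is a minimal Python sketch that counts lines per logger name, message and satellite. It assumes the line layout seen in the excerpt (timestamp, level, logger, message, then a `{...}` field map) and treats the fields as plain text, because redacted values like `"Size": }` are not valid JSON. The function name `summarize` is hypothetical. It only counts what the log prints and does not by itself show which storage backend handled a piece.

```python
import re
from collections import Counter

# Assumed layout, taken from the pasted excerpt (not a documented format):
# <timestamp> <LEVEL> <logger> <message> {<fields>}
LINE_RE = re.compile(
    r'^(?P<ts>\S+)\s+(?P<level>[A-Z]+)\s+(?P<logger>\S+)\s+'
    r'(?P<msg>[^{]*?)\s*\{(?P<fields>.*)\}\s*$'
)
# In the excerpt the satellite ID appears under "Satellite ID" or "sat".
SAT_RE = re.compile(r'"(?:Satellite ID|sat)":\s*"(?P<sat>[^"]*)"')


def summarize(lines):
    """Count (logger, message, satellite) triples in storagenode-style log lines.

    Lines without a satellite field (e.g. the "all enqueued" summary line)
    are counted with satellite None. Blank or non-matching lines are skipped.
    """
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue
        sat_match = SAT_RE.search(m.group("fields"))
        sat = sat_match.group("sat") if sat_match else None
        counts[(m.group("logger"), m.group("msg"), sat)] += 1
    return counts
```

Usage would be something like `summarize(open("node.log")).most_common()`, where `node.log` is a placeholder for wherever your node writes its log. Regex matching was chosen over `json.loads` so that redacted or truncated fields do not break parsing.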

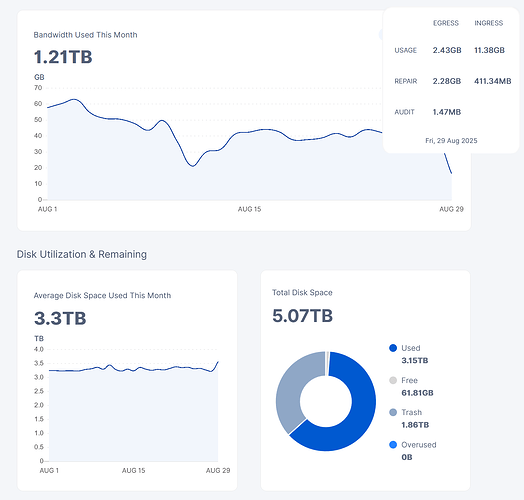

I’m a bit worried that the log still says piecestore after the blue INFO label. I’ve seen screenshots here on the forum where it switched to hashstore.