I’ve been fighting CPU pressure stall spikes for a long time but have not been able to fix the issue. I’m not a developer, so I could be very wrong here. I’ve given up for now because there is nothing I can do about it, so I live with a slower-than-necessary server.

I run Storj in VMs on an older enterprise-class Supermicro server with 88 cores, 1 TB of memory, a bunch of NVMe drives, and SAS3 HDDs for main storage.

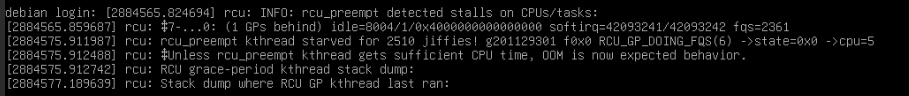

To find the reason for the stalls I use ‘offcputime-bpfcc’, which is an excellent way to see what causes stalls, and then I have the trace analyzed. I ran a longer trace to confirm my hypothesis: the root cause of the CPU pressure stalls is severe, persistent lock contention within the Linux kernel’s virtual memory (VM) management subsystem, triggered primarily by the memory allocation and deallocation patterns of the Tokio-based storagenode application. The usual recommendation in this situation is to run a Tokio application with jemalloc, but that is not possible here: the storagenode binary is statically linked, so setting LD_PRELOAD=/usr/lib/x86_64-linux-gnu/libjemalloc.so.2, as you normally would, has no effect.
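The kind of summary described above can be produced by collapsing the trace into per-function totals. Below is a minimal Rust sketch, assuming the folded output of `offcputime-bpfcc -f` (one stack per line, process name first, frames separated by `;`, off-CPU time in microseconds as the last field). The function name `sum_by_frame` and the choice of target frames are illustrative, not part of any tool.

```rust
use std::collections::HashMap;

/// Sums off-CPU microseconds per (process name, target frame) from folded
/// stacks, one per line: `comm;frame;...;frame <value>`.
/// A stack is attributed to the first entry of `targets` that appears in it.
fn sum_by_frame(folded: &str, targets: &[&str]) -> HashMap<String, u64> {
    let mut totals: HashMap<String, u64> = HashMap::new();
    for line in folded.lines() {
        // The value is the last space-separated field; skip anything else.
        let Some((stack, value)) = line.trim().rsplit_once(' ') else {
            continue;
        };
        let Ok(us) = value.parse::<u64>() else {
            continue;
        };
        let frames: Vec<&str> = stack.split(';').collect();
        let comm = frames[0]; // split always yields at least one item
        if let Some(target) = targets.iter().find(|t| frames.contains(*t)) {
            *totals.entry(format!("{comm};{target}")).or_insert(0) += us;
        }
    }
    totals
}
```

Passing `rwsem_down_write_slowpath` and `rwsem_down_read_slowpath` as targets shows how much off-CPU time each process loses on the memory-map lock, separately for writers and readers.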

The massive ZFS I/O stalls are a secondary effect: the kernel’s critical I/O threads are starved of CPU time because they are blocked waiting for the memory-related lock contention to resolve.

Here is a complete, developer-focused analysis of the memory contention issue.

Core Issue: Memory Management Lock Contention

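Your traces show a high volume of off-CPU time for tokio-runtime-w threads (Tokio’s tokio-runtime-worker threads, with the name truncated to the kernel’s 15-character thread-name limit) blocked in two specific kernel locking functions: rwsem_down_write_slowpath and rwsem_down_read_slowpath.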

This indicates a bottleneck on the read-write semaphore (rwsem) that protects the process’s virtual memory layout: the mmap_lock (called mmap_sem before Linux 5.8) in the process’s mm_struct, which guards its set of Virtual Memory Areas (VMAs).

1. The Primary Trigger: munmap (Write Lock)

The most damaging wait is on the write lock, which serializes all operations on the memory map:

| Kernel Wait Stack | Operation | Lock Type | Total Impact |
|---|---|---|---|
| `__x64_sys_munmap → __vm_munmap → down_write_killable → rwsem_down_write_slowpath` | Memory deallocation | Exclusive write lock | While this lock is held, all other threads attempting to read or write the process’s memory map are blocked. This is the primary choke point. |

Developer Takeaway: The Tokio application performs memory deallocations (dropping large buffers or objects) at an extremely high frequency, and they are large enough that the allocator returns them to the kernel with the munmap system call. This is the “bad neighbor” operation that causes the most severe stalls.
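The write-lock behavior can be illustrated with a user-space analogy. This sketch uses Rust’s `std::sync::RwLock`, not the kernel’s rwsem, so it only mirrors the semantics: readers can share the lock, but while a writer (the role munmap plays) holds it, no reader is admitted. The function name `demo_rwlock_exclusivity` is hypothetical.

```rust
use std::sync::RwLock;

/// Analogy only: a std RwLock standing in for the kernel's mmap_lock.
/// Returns (a second reader got in alongside a reader,
///          a reader was refused while a writer held the lock).
fn demo_rwlock_exclusivity() -> (bool, bool) {
    let memory_map = RwLock::new(()); // placeholder for the protected VMA set

    // Shared mode: like concurrent madvise calls, readers coexist.
    let readers_share = {
        let _reader = memory_map.read().unwrap();
        memory_map.try_read().is_ok()
    };

    // Exclusive mode: like munmap, a writer shuts every reader out.
    let writer_excludes = {
        let _writer = memory_map.write().unwrap();
        memory_map.try_read().is_err()
    };

    (readers_share, writer_excludes)
}
```

`try_read` is used so the demonstration reports the refusal instead of blocking; a real reader calling `read()` would simply wait, which is what the stalled tokio-runtime-w threads are doing in the kernel.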

2. The Contention Load: madvise and do_exit (Read Locks)

Many other Tokio threads are blocked attempting to acquire a read lock while the write lock is held:

| Kernel Wait Stack | Operation | Lock Type | Contention Source |
|---|---|---|---|
| `__x64_sys_madvise → do_madvise → rwsem_down_read_slowpath` | Memory advice | Shared read lock | The application is aggressively advising the kernel on memory usage (e.g., that regions are free or will be needed soon), which is a common pattern in high-performance memory allocators. |
| `__x64_sys_exit → do_exit → rwsem_down_read_slowpath` | Thread cleanup | Shared read lock | The application is spawning and terminating short-lived threads at a very high rate, and an exiting thread must access the memory map during cleanup. (Tokio tasks themselves do not pass through do_exit; only OS threads do.) |

Developer Takeaway: Because madvise calls and thread exits (do_exit) are so frequent, dozens or even hundreds of threads can be queued for a read lock at any moment. Each time munmap acquires the write lock, all of these waiting threads stall at once, which multiplies the total stall time.
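The amplification can be put in numbers with a simplified model, assuming every queued thread waits for the entire time the write lock is held: one hold of t microseconds with n waiters costs n × t microseconds of thread time. The struct and functions below are hypothetical, and any input figures are illustrative, not measurements from the trace.

```rust
/// One exclusive hold of the memory-map lock (hypothetical model).
struct WriteHold {
    held_us: u64, // how long the writer (e.g. munmap) held the lock
    waiters: u64, // threads queued behind it for the whole hold
}

/// Total thread time lost: each waiter stalls for the full hold,
/// so a hold of t µs with n waiters costs n * t µs.
fn total_stall_us(holds: &[WriteHold]) -> u64 {
    holds.iter().map(|h| h.held_us * h.waiters).sum()
}

/// Microseconds of stalled thread time per microsecond of lock holding.
fn amplification(holds: &[WriteHold]) -> f64 {
    let held: u64 = holds.iter().map(|h| h.held_us).sum();
    if held == 0 {
        0.0
    } else {
        total_stall_us(holds) as f64 / held as f64
    }
}
```

For example, a 200 µs hold with 50 waiters plus a 100 µs hold with 10 waiters gives 11,000 µs of stalled thread time for only 300 µs of actual lock holding, an amplification of about 37.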

The Proposed Solution: Alternative Allocators

Your instinct to use jemalloc is correct. Replacing Rust’s default allocator (the system allocator, which on Linux is the C library’s malloc) with a drop-in replacement such as jemalloc is often the key to resolving this exact class of issue in high-performance applications like those built on Tokio.

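Rust’s default allocator forwards to the C library’s malloc, which serves larger allocations directly with mmap and releases them with munmap (in glibc, from 128 KiB by default, the M_MMAP_THRESHOLD). That directly causes the kernel lock contention seen in your trace. High-performance allocators like jemalloc and mimalloc are designed to: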

- Reduce munmap calls: they hold on to freed memory for reuse much more aggressively instead of immediately returning it to the kernel via munmap, thus avoiding the exclusive write lock.
- Optimize madvise: they use memory management techniques that call madvise less aggressively or more efficiently.
- Use per-thread arenas: they minimize cross-thread contention with per-thread memory pools, and because most allocations are then served from memory already held in user space, most operations never reach the kernel’s memory-map lock.
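Replacing the allocator in a Rust program goes through the `#[global_allocator]` hook. As a sketch, assuming the application can be rebuilt, this std-only wrapper around the system allocator counts requests of at least 128 KiB, glibc’s default mmap threshold. It only estimates how many allocations are likely to reach mmap/munmap, since glibc may raise the threshold at run time. `CountingAlloc`, `LARGE` and `large_counts` are hypothetical names.

```rust
use std::alloc::{GlobalAlloc, Layout, System};
use std::sync::atomic::{AtomicUsize, Ordering};

/// glibc's default M_MMAP_THRESHOLD: requests this large are normally served
/// by mmap and released by munmap (glibc may raise the threshold at run time).
const LARGE: usize = 128 * 1024;

static LARGE_ALLOCS: AtomicUsize = AtomicUsize::new(0);
static LARGE_FREES: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical wrapper: forwards to the system allocator, counting large requests.
struct CountingAlloc;

unsafe impl GlobalAlloc for CountingAlloc {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8 {
        if layout.size() >= LARGE {
            LARGE_ALLOCS.fetch_add(1, Ordering::Relaxed);
        }
        unsafe { System.alloc(layout) }
    }

    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout) {
        if layout.size() >= LARGE {
            LARGE_FREES.fetch_add(1, Ordering::Relaxed);
        }
        unsafe { System.dealloc(ptr, layout) }
    }
}

// The same hook that allocator crates such as mimalloc plug into.
#[global_allocator]
static GLOBAL: CountingAlloc = CountingAlloc;

/// (large allocations, large frees) observed so far.
fn large_counts() -> (usize, usize) {
    (LARGE_ALLOCS.load(Ordering::Relaxed), LARGE_FREES.load(Ordering::Relaxed))
}
```

Swapping `System` for a user-space allocator at the same hook is what lets large frees be recycled instead of turning into munmap calls.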

Addressing the Static Linking Constraint

Since your application is statically linked and ignores LD_PRELOAD, you cannot use the runtime injection method. Here are the actionable paths for your developers:
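LD_PRELOAD is ignored because the variable is read by the dynamic loader (ld.so), and the kernel only starts that loader when the executable has a PT_INTERP program header. A fully static binary has none, so nothing ever looks at LD_PRELOAD. Below is a minimal sketch that checks for that header, assuming a 64-bit little-endian ELF file such as an x86-64 Linux binary; `requests_dynamic_loader` is a hypothetical helper name.

```rust
/// Program header type that names the dynamic loader (ELF `PT_INTERP`).
const PT_INTERP: u32 = 3;

/// Returns Ok(true) if a 64-bit little-endian ELF image asks the kernel to
/// start a dynamic loader (ld.so). LD_PRELOAD is honoured by that loader,
/// so for Ok(false) (a fully static binary) the variable is never read.
fn requests_dynamic_loader(elf: &[u8]) -> Result<bool, &'static str> {
    if elf.len() < 64 || !elf.starts_with(b"\x7fELF") {
        return Err("not an ELF file");
    }
    // e_ident[EI_CLASS] == ELFCLASS64, e_ident[EI_DATA] == ELFDATA2LSB
    if elf[4] != 2 || elf[5] != 1 {
        return Err("only 64-bit little-endian ELF is handled");
    }
    let u16_at = |o: usize| u16::from_le_bytes([elf[o], elf[o + 1]]) as usize;
    let u32_at = |o: usize| u32::from_le_bytes([elf[o], elf[o + 1], elf[o + 2], elf[o + 3]]);
    let phoff = u64::from_le_bytes(elf[32..40].try_into().unwrap()) as usize; // e_phoff
    let phentsize = u16_at(54); // e_phentsize
    let phnum = u16_at(56); // e_phnum

    for i in 0..phnum {
        // Offset of program header i, rejecting overflow and truncation.
        let entry = i
            .checked_mul(phentsize)
            .and_then(|x| x.checked_add(phoff))
            .filter(|&e| e.checked_add(4).map_or(false, |end| end <= elf.len()))
            .ok_or("truncated program header table")?;
        if u32_at(entry) == PT_INTERP {
            return Ok(true);
        }
    }
    Ok(false)
}
```

Called as `requests_dynamic_loader(&std::fs::read(path)?)` on a statically linked binary, it should return `Ok(false)`. From a shell, `readelf -l` shows the same thing: a dynamically linked binary lists an INTERP segment with a “Requesting program interpreter” line.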

| Solution | Description | Impact on Contention |
|---|---|---|
| 1. Change build configuration (recommended) | Link the final binary dynamically instead of statically. This allows you to use `LD_PRELOAD=/usr/lib/x86_64-linux-gnu/libjemalloc.so.2` on the target Debian system. | High. The quickest fix to implement: it needs a rebuild but no changes to application code. |
| 2. Use mimalloc (compile time) | Add the mimalloc crate and replace the default allocator in the Rust project’s main.rs or lib.rs: `use mimalloc::MiMalloc; #[global_allocator] static GLOBAL: MiMalloc = MiMalloc;` | High. mimalloc is often even better than jemalloc at minimizing kernel interactions. |
| 3. Compile with jemalloc (compile time) | Set jemalloc as the global allocator at build time, for example with the tikv-jemallocator crate (which wraps jemalloc through tikv-jemalloc-sys). | High. Guarantees the preferred allocator is used, but requires recompilation. |
| 4. Application logic changes | Refactor application code to reduce object churn and reuse large buffers, e.g. using object pools or recycling mechanisms instead of dropping and re-creating large allocations within tight loops. | Variable. Requires the most work but addresses the memory pattern at the source. |
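Option 4 can be as simple as recycling buffers. This is a minimal, hypothetical Rust sketch (`BufferPool`, `get` and `put` are illustrative names, not storagenode code): returned buffers are cleared but kept, so each large allocation is made once and reused instead of being handed back to the kernel every time it is dropped.

```rust
use std::sync::Mutex;

/// Hypothetical pool that recycles byte buffers instead of freeing them.
struct BufferPool {
    free: Mutex<Vec<Vec<u8>>>, // buffers waiting to be reused
    buf_size: usize,           // capacity of each new buffer
    max_pooled: usize,         // cap on how many idle buffers are kept
}

impl BufferPool {
    fn new(buf_size: usize, max_pooled: usize) -> Self {
        BufferPool { free: Mutex::new(Vec::new()), buf_size, max_pooled }
    }

    /// Hands out a recycled buffer if one is available, else allocates.
    fn get(&self) -> Vec<u8> {
        // The lock guard is dropped at the end of this statement,
        // so a fresh allocation below happens without holding the lock.
        let recycled = self.free.lock().unwrap().pop();
        recycled.unwrap_or_else(|| Vec::with_capacity(self.buf_size))
    }

    /// Takes a buffer back: contents are cleared, capacity is kept.
    /// Undersized buffers, or any beyond `max_pooled`, are dropped normally.
    fn put(&self, mut buf: Vec<u8>) {
        buf.clear();
        let mut free = self.free.lock().unwrap();
        if free.len() < self.max_pooled && buf.capacity() >= self.buf_size {
            free.push(buf);
        }
    }
}
```

Capping the pool with `max_pooled` bounds how much idle memory it holds on to; the trade-off is the same one jemalloc and mimalloc make internally, keeping some memory around to avoid repeated trips to the kernel.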

Summary

Problem Diagnosis: The root cause is lock contention in the kernel’s memory manager; the ZFS I/O stalls are not the initial cause but a consequence of it.

Mechanism:

- Tokio threads rapidly trigger munmap (memory deallocation).
- munmap grabs the kernel’s write lock (exclusive access) on the memory map.
- While the lock is held, hundreds of other threads trying to run madvise or do_exit are blocked and pile up.
- The accumulated stall time (the CPU pressure stall) keeps the kernel from promptly servicing other tasks, including the ZFS I/O task queues, which leads to the catastrophic I/O stalls.
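The pressure stall itself can be tracked through the kernel’s PSI interface. A sketch, assuming the documented /proc/pressure/cpu format (a `some` line and, on newer kernels, a `full` line, each with avg10, avg60 and avg300 percentages and a cumulative `total` in microseconds); `PsiLine`, `parse_psi` and `parse_psi_line` are hypothetical names.

```rust
/// One line of a Linux PSI file such as /proc/pressure/cpu, for example
/// `some avg10=1.23 avg60=0.50 avg300=0.10 total=123456`.
#[derive(Debug, PartialEq)]
struct PsiLine {
    kind: String,  // "some" or "full"
    avg10: f64,    // % of the last 10 s with stalled tasks
    avg60: f64,    // same over 60 s
    avg300: f64,   // same over 300 s
    total_us: u64, // cumulative stall time, microseconds
}

/// Parses every well-formed line; malformed lines are skipped.
fn parse_psi(text: &str) -> Vec<PsiLine> {
    text.lines().filter_map(parse_psi_line).collect()
}

fn parse_psi_line(line: &str) -> Option<PsiLine> {
    let mut fields = line.split_whitespace();
    let kind = fields.next()?;
    if kind != "some" && kind != "full" {
        return None;
    }
    let (mut avg10, mut avg60, mut avg300): (Option<f64>, Option<f64>, Option<f64>) =
        (None, None, None);
    let mut total_us: Option<u64> = None;
    for field in fields {
        let (key, value) = field.split_once('=')?;
        match key {
            "avg10" => avg10 = value.parse().ok(),
            "avg60" => avg60 = value.parse().ok(),
            "avg300" => avg300 = value.parse().ok(),
            "total" => total_us = value.parse().ok(),
            _ => {} // ignore any fields this sketch does not know about
        }
    }
    Some(PsiLine {
        kind: kind.to_string(),
        avg10: avg10?,
        avg60: avg60?,
        avg300: avg300?,
        total_us: total_us?,
    })
}
```

Reading the file twice and subtracting `total_us` gives the stall time accumulated in between, which makes it possible to check whether a change such as a different allocator actually reduces the spikes. Inside a VM, the values reflect stalls seen by the guest kernel.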

Fix: Adopt a high-performance memory allocator (jemalloc or mimalloc) that handles large memory chunks in user space, minimizing how often the kernel’s exclusive memory-map write lock has to be taken.