Please explain the “slowly (!) cleanup” in more detail. What is the process, and how long can it take to clean up a node?

And if “storage2.piece-scan-on-startup: false” is configured, does that mean the cleanup will not occur?

It will probably take several bloom filters to get the node to the desired state. I believe they are distributed weekly, so it might take multiple weeks to move files that are already deleted on the satellite to the trash on your node, and then 7 more days to purge the trash.
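As a rough illustration of that timeline, here is a minimal sketch. The function name and its parameters are made up for this example, and both the filter interval and the 7-day trash retention are simply the figures mentioned in this thread, not values read from the node's configuration.

```python
from datetime import timedelta

def cleanup_estimate(filters_needed: int,
                     filter_interval_days: float = 7.0,
                     trash_retention_days: float = 7.0) -> timedelta:
    """Rough worst-case time until deleted pieces are actually gone from disk.

    Assumes a full interval passes before each bloom filter arrives and that
    the trash is purged once the retention period has elapsed.
    """
    days = filters_needed * filter_interval_days + trash_retention_days
    return timedelta(days=days)

for n in (1, 2, 3):
    print(f"{n} bloom filter(s): about {cleanup_estimate(n).days} days")
```

Passing a different `filter_interval_days` shows how much the estimate depends on how often filters are really sent.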

The startup piece scan only calculates the used space on your node so that the dashboard displays it correctly. You can disable it, and GC will still run whenever the node receives a bloom filter.

Bloom filters arrive about 5 days apart, as far as I remember.

It also reports this information as used space to the satellites, which may affect node selection (if your node is reported as full, it will be excluded from uploads).

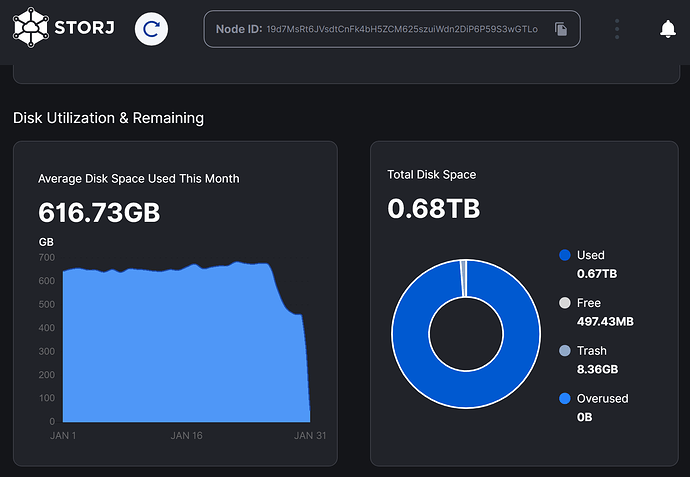

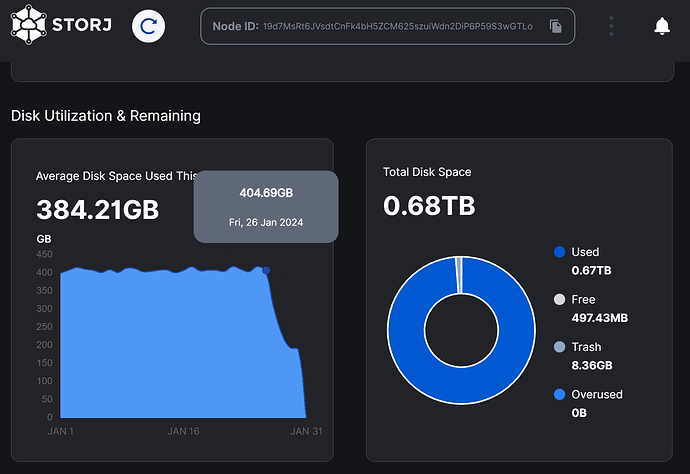

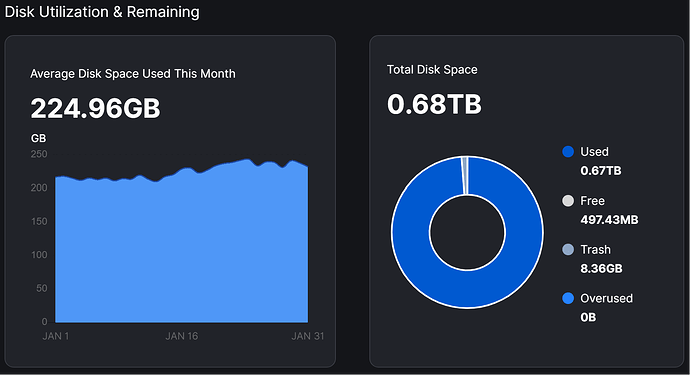

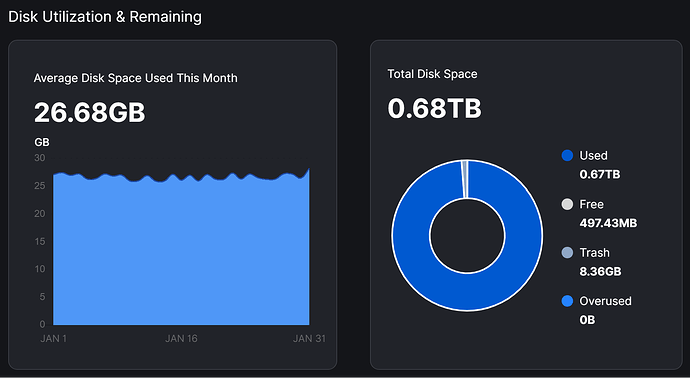

I thought, let’s debug the space usage discrepancy, because I have seen some suspicious drops in the reported disk space (but no free space on the disks) since 27 January.

It was all attributable to the EU1-satellite: 12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs

So it concerns this node, for which the EU1 satellite reports about 190 GB, while the most recent filewalker run on 29 January reported 405 GB.

root@STORJ6:~# df -H /storj/disk

Filesystem Size Used Avail Use% Mounted on

/dev/disk/by-partlabel/STORJ6-DATA 731G 684G 47G 94% /storj/disk

root@STORJ6:~# sudo du --max-depth=1 --apparent-size

8161788 ./trash

4 ./garbage

655597964 ./blobs

412342 ./temp

664191629
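To compare these `du` figures with the `df -H` output, the units have to line up. The sketch below assumes that `du` printed 1 KiB (1024-byte) blocks, which is the usual GNU default when no block size is given, and that `df -H` uses decimal (SI) units. The numbers are copied from the output above.

```python
KIB = 1024  # assumption: du reported sizes in 1 KiB blocks

# Values copied from the du output above (in KiB).
du_kib = {
    "trash": 8161788,
    "garbage": 4,
    "blobs": 655597964,
    "temp": 412342,
    "total": 664191629,
}

def to_gb(num_bytes: int) -> float:
    """Convert bytes to decimal gigabytes, the unit df -H prints."""
    return num_bytes / 1e9

du_bytes = {name: blocks * KIB for name, blocks in du_kib.items()}

for name, size in du_bytes.items():
    print(f"{name:8s} {to_gb(size):8.1f} GB")
```

Under those assumptions the total comes out a few GB below the 684G that `df` shows as used, which is plausible because `--apparent-size` ignores block rounding and filesystem overhead.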

root@STORJ6:~# docker logs storagenode 2> /dev/null | grep filewalker.*completed

2024-01-29T13:10:33Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker completed{"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode", "piecesTotal": 239077465098, "piecesContentSize": 238389109258}

2024-01-29T13:10:39Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker completed{"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "process": "storagenode", "piecesTotal": 405637906688, "piecesContentSize": 405476254976}

2024-01-29T13:10:39Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker completed{"process": "storagenode", "satelliteID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "process": "storagenode", "piecesTotal": 90624000, "piecesContentSize": 90600960}

2024-01-29T13:10:41Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker completed{"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "piecesContentSize": 26331783936, "process": "storagenode", "piecesTotal": 26367001856}
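For anyone who wants to total these figures without reading the lines by eye, here is a minimal sketch that parses the four log lines above. It assumes the payload after `completed` is valid JSON, as it appears to be here; the helper name `pieces_total_by_satellite` is made up for this example.

```python
import json

MARKER = "used-space-filewalker completed"

# The four "completed" lines from the log output above.
log_lines = [
    '2024-01-29T13:10:33Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker completed{"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode", "piecesTotal": 239077465098, "piecesContentSize": 238389109258}',
    '2024-01-29T13:10:39Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker completed{"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "process": "storagenode", "piecesTotal": 405637906688, "piecesContentSize": 405476254976}',
    '2024-01-29T13:10:39Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker completed{"process": "storagenode", "satelliteID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "process": "storagenode", "piecesTotal": 90624000, "piecesContentSize": 90600960}',
    '2024-01-29T13:10:41Z INFO lazyfilewalker.used-space-filewalker.subprocess used-space-filewalker completed{"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "piecesContentSize": 26331783936, "process": "storagenode", "piecesTotal": 26367001856}',
]

def pieces_total_by_satellite(lines):
    """Map satelliteID -> piecesTotal for every 'completed' line."""
    totals = {}
    for line in lines:
        _, found, payload = line.partition(MARKER)
        if not found:
            continue  # not a used-space-filewalker completion line
        fields = json.loads(payload)  # duplicate "process" keys are tolerated
        totals[fields["satelliteID"]] = fields["piecesTotal"]
    return totals

totals = pieces_total_by_satellite(log_lines)
for satellite, size in totals.items():
    print(f"{satellite}: {size / 1e9:.1f} GB")
print(f"all satellites: {sum(totals.values()) / 1e9:.1f} GB")
```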

Other satellites match the reported space in the dashboard.

Adapted usage query on the sqlite-database:

root@STORJ6:/storj/DBs# sqlite3 storage_usage.db "SELECT * FROM (SELECT timestamp, hex(satellite_id) AS satellite, at_rest_total, interval_end_time, julianday(interval_end_time), ROW_NUMBER() OVER (PARTITION BY satellite_id ORDER BY timestamp DESC) AS rown FROM storage_usage) WHERE rown <= 6 ORDER BY satellite,rown"

2024-01-31 00:00:00+00:00|7B2DE9D72C2E935F1918C058CAAF8ED00F0581639008707317FF1BD000000000|1144871492.78699|2024-01-31 12:08:11.655857+00:00|2460341.00569046|1

2024-01-30 00:00:00+00:00|7B2DE9D72C2E935F1918C058CAAF8ED00F0581639008707317FF1BD000000000|1905812703.83945|2024-01-30 22:07:58.305931+00:00|2460340.42220262|2

2024-01-29 00:00:00+00:00|7B2DE9D72C2E935F1918C058CAAF8ED00F0581639008707317FF1BD000000000|1963121158.57989|2024-01-29 22:49:17.618464+00:00|2460339.45089836|3

2024-01-28 00:00:00+00:00|7B2DE9D72C2E935F1918C058CAAF8ED00F0581639008707317FF1BD000000000|1962384981.24134|2024-01-28 22:48:33.406566+00:00|2460338.45038666|4

2024-01-27 00:00:00+00:00|7B2DE9D72C2E935F1918C058CAAF8ED00F0581639008707317FF1BD000000000|1960121297.41671|2024-01-27 22:48:21.61155+00:00|2460337.45025014|5

2024-01-26 00:00:00+00:00|7B2DE9D72C2E935F1918C058CAAF8ED00F0581639008707317FF1BD000000000|1964648129.49649|2024-01-26 22:49:49.495744+00:00|2460336.45126731|6

2024-01-31 00:00:00+00:00|84A74C2CD43C5BA76535E1F42F5DF7C287ED68D33522782F4AFABFDB40000000|366591057285.886|2024-01-31 12:20:56.281846+00:00|2460341.0145403|1

2024-01-30 00:00:00+00:00|84A74C2CD43C5BA76535E1F42F5DF7C287ED68D33522782F4AFABFDB40000000|607945919264.781|2024-01-30 22:21:20.621328+00:00|2460340.43148867|2

2024-01-29 00:00:00+00:00|84A74C2CD43C5BA76535E1F42F5DF7C287ED68D33522782F4AFABFDB40000000|625035496727.823|2024-01-29 23:09:06.727148+00:00|2460339.46466119|3

2024-01-28 00:00:00+00:00|84A74C2CD43C5BA76535E1F42F5DF7C287ED68D33522782F4AFABFDB40000000|625963109207.811|2024-01-28 23:18:00.108378+00:00|2460338.47083458|4

2024-01-27 00:00:00+00:00|84A74C2CD43C5BA76535E1F42F5DF7C287ED68D33522782F4AFABFDB40000000|629843985583.987|2024-01-27 23:24:09.029323+00:00|2460337.4751045|5

2024-01-26 00:00:00+00:00|84A74C2CD43C5BA76535E1F42F5DF7C287ED68D33522782F4AFABFDB40000000|629327864851.166|2024-01-26 23:21:56.485264+00:00|2460336.47357043|6

2024-01-31 00:00:00+00:00|A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2550794586300.69|2024-01-31 10:34:11.078242+00:00|2460340.940406|1

2024-01-30 00:00:00+00:00|A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|6415699699302.59|2024-01-30 23:30:44.978691+00:00|2460340.47968726|2

2024-01-29 00:00:00+00:00|A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|5058033869119.73|2024-01-29 19:44:39.873507+00:00|2460339.32268373|3

2024-01-28 00:00:00+00:00|A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|6234512324652.41|2024-01-28 21:51:48.240841+00:00|2460338.41097501|4

2024-01-27 00:00:00+00:00|A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|5010395282047.32|2024-01-27 18:46:03.322426+00:00|2460337.28198289|5

2024-01-26 00:00:00+00:00|A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|5018996834657.59|2024-01-26 20:56:45.586654+00:00|2460336.37274985|6

2024-01-31 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2177062238235.85|2024-01-31 10:35:42.833848+00:00|2460340.94146799|1

2024-01-30 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|4518726578542.36|2024-01-30 22:41:42.942761+00:00|2460340.44563591|2

2024-01-29 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|4658415876735.9|2024-01-29 22:02:22.726171+00:00|2460339.41831859|3

2024-01-28 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|5340204565777.73|2024-01-28 21:51:46.287986+00:00|2460338.41095241|4

2024-01-27 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|7335583691130.42|2024-01-27 22:17:33.555502+00:00|2460337.4288606|5

2024-01-26 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|9712548046971.15|2024-01-26 22:12:46.172614+00:00|2460336.42553441|6
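One thing worth checking before reading the newest row as a drop: if `at_rest_total` is accumulated byte-hours since the previous `interval_end_time` (an assumption on my side, not something confirmed in this thread), then a day that has only been reported up to noon will naturally show about half the value. The sketch below normalises the newest `AF2C…` rows above by the hours between consecutive `interval_end_time` values, using the `julianday` column from the query.

```python
# (at_rest_total, julianday(interval_end_time)), newest first,
# copied from the AF2C... rows in the query output above.
rows = [
    (2177062238235.85, 2460340.94146799),  # 2024-01-31
    (4518726578542.36, 2460340.44563591),  # 2024-01-30
    (4658415876735.9, 2460339.41831859),   # 2024-01-29
    (5340204565777.73, 2460338.41095241),  # 2024-01-28
]

def average_bytes(rows):
    """Average stored bytes per interval, assuming at_rest_total is byte-hours
    accumulated since the previous row's interval_end_time."""
    averages = []
    for (total, end), (_, previous_end) in zip(rows, rows[1:]):
        hours = (end - previous_end) * 24  # julianday differences are in days
        averages.append(total / hours)
    return averages

averages = average_bytes(rows)
for (total, _), avg in zip(rows, averages):
    print(f"at_rest_total={total:.0f} -> about {avg / 1e9:.0f} GB on average")
```

The oldest row has no predecessor in the list, so it gets no average. If the assumption holds, the 31 January and 30 January rows come out nearly identical once normalised, so the apparent halving on the last day would only be an incomplete interval.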

Interestingly, this shows a drop for several satellites, which however isn’t visible in the dashboard:

- US1 satellite:

- AP1 satellite:

API-output (interestingly correct!):

{

"nodeID": "19d7MsRt6JVsdtCnFk4bH5ZCM625szuiWdn2DiP6P59S3wGTLo",

"wallet": "0xac0db58ac9423712050b00f1c3be976e1fdb4977",

"walletFeatures": null,

"satellites": [

{

"id": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE",

"url": "saltlake.tardigrade.io:7777",

"disqualified": null,

"suspended": null,

"currentStorageUsed": 90600960

},

{

"id": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6",

"url": "ap1.storj.io:7777",

"disqualified": null,

"suspended": null,

"currentStorageUsed": 26331641600

},

{

"id": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S",

"url": "us1.storj.io:7777",

"disqualified": null,

"suspended": null,

"currentStorageUsed": 238352769034

},

{

"id": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs",

"url": "eu1.storj.io:7777",

"disqualified": null,

"suspended": null,

"currentStorageUsed": 405484548608

}

],

"diskSpace": {

"used": 671144902410,

"available": 680000000000,

"trash": 8357670144,

"overused": 0

},

"bandwidth": {

"used": 79362358538,

"available": 0

},

"lastPinged": "2024-01-31T13:52:41.022448969Z",

"version": "1.95.1",

"allowedVersion": "1.93.2",

"upToDate": true,

"startedAt": "2024-01-29T13:10:10.917757238Z",

"configuredPort": "28967",

"quicStatus": "OK",

"lastQuicPingedAt": "2024-01-31T13:11:17.170788406Z"

}
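A quick cross-check of this response is to add up the per-satellite `currentStorageUsed` values and compare them with `diskSpace.used`. The sketch below does that on a trimmed copy of the JSON above (only the fields needed for the sum are kept).

```python
import json

# Trimmed copy of the API response above.
response = json.loads("""
{
  "satellites": [
    {"url": "saltlake.tardigrade.io:7777", "currentStorageUsed": 90600960},
    {"url": "ap1.storj.io:7777", "currentStorageUsed": 26331641600},
    {"url": "us1.storj.io:7777", "currentStorageUsed": 238352769034},
    {"url": "eu1.storj.io:7777", "currentStorageUsed": 405484548608}
  ],
  "diskSpace": {"used": 671144902410, "available": 680000000000, "trash": 8357670144}
}
""")

per_satellite = sum(s["currentStorageUsed"] for s in response["satellites"])
used = response["diskSpace"]["used"]
difference = used - per_satellite

print(f"sum of satellites: {per_satellite / 1e9:.1f} GB")
print(f"diskSpace.used:    {used / 1e9:.1f} GB")
print(f"difference:        {difference / 1e9:.2f} GB")
```

The two figures differ by less than 1 GB, so the API is at least internally consistent and agrees with the filewalker numbers rather than with the roughly 190 GB reported by the EU1 satellite.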

Checking the logs:

root@STORJ6:/storj/disk/DATA/storage# docker logs storagenode -n 50 2> /dev/null

2024-01-31T13:31:16Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "AKU5TVMY4BEKZWYE6D4J7BWGK3D7RKZZ5VYZVUSOZLQ2BUVSCMOQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 512, "Remote Address": "79.127.226.100:46988"}

2024-01-31T13:31:21Z INFO piecestore download started {"process": "storagenode", "Piece ID": "HITJZOFGCWVA3BHXNAUTVXXLNNDKZHE4E4AWVQBEXO5URBS4DUYA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 768, "Remote Address": "79.127.226.100:46988"}

2024-01-31T13:31:21Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "HITJZOFGCWVA3BHXNAUTVXXLNNDKZHE4E4AWVQBEXO5URBS4DUYA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 768, "Remote Address": "79.127.226.100:46988"}

2024-01-31T13:31:43Z INFO piecestore download started {"process": "storagenode", "Piece ID": "G54KYWALNUGXWNTU2E7F2PCCSMJ2P5OUEKXT7ETIPCKQDNUMDEJQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET_AUDIT", "Offset": 663040, "Size": 256, "Remote Address": "35.237.31.32:56302"}

2024-01-31T13:31:44Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "G54KYWALNUGXWNTU2E7F2PCCSMJ2P5OUEKXT7ETIPCKQDNUMDEJQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET_AUDIT", "Offset": 663040, "Size": 256, "Remote Address": "35.237.31.32:56302"}

2024-01-31T13:33:27Z INFO piecestore download started {"process": "storagenode", "Piece ID": "YQZIIHBUGM5Q3YQW7H2WUZFGONSSXV3PXUZVLUGLIYBMY3TNMAQA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 30976, "Remote Address": "79.127.226.99:39436"}

2024-01-31T13:33:27Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "YQZIIHBUGM5Q3YQW7H2WUZFGONSSXV3PXUZVLUGLIYBMY3TNMAQA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 30976, "Remote Address": "79.127.226.99:39436"}

2024-01-31T13:34:36Z INFO piecestore download started {"process": "storagenode", "Piece ID": "EH7KA7AKVIZSMYN6N2XURFEQBJ5WHB5VP26KBAESIQ6FRAQ6PTSQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 59392, "Remote Address": "79.127.220.98:38604"}

2024-01-31T13:34:37Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "EH7KA7AKVIZSMYN6N2XURFEQBJ5WHB5VP26KBAESIQ6FRAQ6PTSQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 59392, "Remote Address": "79.127.220.98:38604"}

2024-01-31T13:35:06Z INFO piecestore download started {"process": "storagenode", "Piece ID": "RO33YGMNS4QGO3WFXH4TDQ32XG4TMTLVMEN4GBCU6JXJPL2547CA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 8704, "Remote Address": "79.127.201.209:58414"}

2024-01-31T13:35:06Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "RO33YGMNS4QGO3WFXH4TDQ32XG4TMTLVMEN4GBCU6JXJPL2547CA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 8704, "Remote Address": "79.127.201.209:58414"}

2024-01-31T13:35:57Z INFO piecestore download started {"process": "storagenode", "Piece ID": "PD6NJUXXLC7K34SHDQBN5WVQAJXW3HODATCIP4B3YDMF43Q4374A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:35:57Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "PD6NJUXXLC7K34SHDQBN5WVQAJXW3HODATCIP4B3YDMF43Q4374A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:36:07Z INFO piecestore download started {"process": "storagenode", "Piece ID": "DJXYPYTUF6UKV7B6AT4KGQIODKVFO7X6DDWDGAJMO77OUQIP5Z2A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:36:07Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "DJXYPYTUF6UKV7B6AT4KGQIODKVFO7X6DDWDGAJMO77OUQIP5Z2A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:36:18Z INFO piecestore download started {"process": "storagenode", "Piece ID": "PCO2GLHCJDCAOSBKPRBP4JC6KLDKHU2WZ4RF63DIYZCZPL52KEOA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:36:18Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "PCO2GLHCJDCAOSBKPRBP4JC6KLDKHU2WZ4RF63DIYZCZPL52KEOA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:36:19Z INFO piecestore download started {"process": "storagenode", "Piece ID": "XPW6MZ72E6IMOZTM4AP22TRLGFSIABQL66B7E42ZLJRXA34SSOOQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 2319104, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:36:25Z INFO piecestore download started {"process": "storagenode", "Piece ID": "PXL4NKZYCQFGJ2JXNMWELWHSCAZEWPHGYDBNK2VD3ZJ6NM4UG33A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:46476"}

2024-01-31T13:36:25Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "PXL4NKZYCQFGJ2JXNMWELWHSCAZEWPHGYDBNK2VD3ZJ6NM4UG33A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:46476"}

2024-01-31T13:36:31Z INFO piecestore download started {"process": "storagenode", "Piece ID": "G227RCPLH6RJIJQNFDN4G4VIL7JXFWPUQR27YQ4USLPXFBIHBFLQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:46476"}

2024-01-31T13:36:31Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "G227RCPLH6RJIJQNFDN4G4VIL7JXFWPUQR27YQ4USLPXFBIHBFLQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:46476"}

2024-01-31T13:36:59Z INFO piecestore download started {"process": "storagenode", "Piece ID": "PS3CVUUHWQTM4M7RJEVLBQLVRDDTS46R2EEZUC3K4AUV5YSKZGOA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 14848, "Remote Address": "79.127.220.99:39348"}

2024-01-31T13:37:00Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "PS3CVUUHWQTM4M7RJEVLBQLVRDDTS46R2EEZUC3K4AUV5YSKZGOA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 14848, "Remote Address": "79.127.220.99:39348"}

2024-01-31T13:37:14Z INFO piecestore download started {"process": "storagenode", "Piece ID": "JYTYIIJEC763BKTO5ZYQG7LFAZOUQ2PRWWJIXIIKBRQ3RB3QYHBA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 142336, "Remote Address": "79.127.226.100:46988"}

2024-01-31T13:37:14Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "JYTYIIJEC763BKTO5ZYQG7LFAZOUQ2PRWWJIXIIKBRQ3RB3QYHBA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 142336, "Remote Address": "79.127.226.100:46988"}

2024-01-31T13:37:47Z INFO piecestore download started {"process": "storagenode", "Piece ID": "22YTAJR22ZKIFFVVBQ7PSOE3XLHEOYI5AUD2TE2Q7LW6UBO4A6DA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 13568, "Remote Address": "79.127.205.225:38000"}

2024-01-31T13:37:48Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "22YTAJR22ZKIFFVVBQ7PSOE3XLHEOYI5AUD2TE2Q7LW6UBO4A6DA", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 13568, "Remote Address": "79.127.205.225:38000"}

2024-01-31T13:38:12Z INFO piecestore download started {"process": "storagenode", "Piece ID": "AKU5TVMY4BEKZWYE6D4J7BWGK3D7RKZZ5VYZVUSOZLQ2BUVSCMOQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 512, "Remote Address": "79.127.226.98:46476"}

2024-01-31T13:38:12Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "AKU5TVMY4BEKZWYE6D4J7BWGK3D7RKZZ5VYZVUSOZLQ2BUVSCMOQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 512, "Remote Address": "79.127.226.98:46476"}

2024-01-31T13:38:27Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "XPW6MZ72E6IMOZTM4AP22TRLGFSIABQL66B7E42ZLJRXA34SSOOQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 2319104, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:38:57Z INFO piecestore download started {"process": "storagenode", "Piece ID": "5HYFUWT2LVRRH2O3BXF6BNKDWDDIS5KSH5TABYVYQBPZFEPZZU4A", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 14336, "Remote Address": "79.127.201.209:42228"}

2024-01-31T13:38:58Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "5HYFUWT2LVRRH2O3BXF6BNKDWDDIS5KSH5TABYVYQBPZFEPZZU4A", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 14336, "Remote Address": "79.127.201.209:42228"}

2024-01-31T13:39:17Z INFO piecestore download started {"process": "storagenode", "Piece ID": "EH7KA7AKVIZSMYN6N2XURFEQBJ5WHB5VP26KBAESIQ6FRAQ6PTSQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 59392, "Remote Address": "79.127.220.97:37254"}

2024-01-31T13:39:17Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "EH7KA7AKVIZSMYN6N2XURFEQBJ5WHB5VP26KBAESIQ6FRAQ6PTSQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 0, "Size": 59392, "Remote Address": "79.127.220.97:37254"}

2024-01-31T13:40:10Z INFO Downloading versions. {"Process": "storagenode-updater", "Server Address": "https://version.storj.io"}

2024-01-31T13:40:11Z INFO Current binary version {"Process": "storagenode-updater", "Service": "storagenode", "Version": "v1.95.1"}

2024-01-31T13:40:11Z INFO Version is up to date {"Process": "storagenode-updater", "Service": "storagenode"}

2024-01-31T13:40:11Z INFO Current binary version {"Process": "storagenode-updater", "Service": "storagenode-updater", "Version": "v1.95.1"}

2024-01-31T13:40:11Z INFO Version is up to date {"Process": "storagenode-updater", "Service": "storagenode-updater"}

2024-01-31T13:41:15Z INFO piecestore download started {"process": "storagenode", "Piece ID": "GCH6J5OK3ETDS4H2KVGD67P47VCI62LAPRXA2XDOOO4AKGOMQNJA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:41:15Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "GCH6J5OK3ETDS4H2KVGD67P47VCI62LAPRXA2XDOOO4AKGOMQNJA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:42:57Z INFO piecestore download started {"process": "storagenode", "Piece ID": "S5DGEWUYQA3MMGK2H5MI4WIPD2AWAK4KAT5DIJNH5WPAA3ZABRBA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:42:57Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "S5DGEWUYQA3MMGK2H5MI4WIPD2AWAK4KAT5DIJNH5WPAA3ZABRBA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:44:32Z INFO piecestore download started {"process": "storagenode", "Piece ID": "AXRA6TIMMCAO7IC4AEFFQDGCCU22XWQWW4Z7F4ZKQRBTTXZBHTKA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:44:32Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "AXRA6TIMMCAO7IC4AEFFQDGCCU22XWQWW4Z7F4ZKQRBTTXZBHTKA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 256, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:45:18Z INFO piecestore download started {"process": "storagenode", "Piece ID": "BRIMCAD5WW6KH3IP5PIA7JGOHUPX57APMTTWADQBJ3RWT2WMGEIA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 14080, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:45:19Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "BRIMCAD5WW6KH3IP5PIA7JGOHUPX57APMTTWADQBJ3RWT2WMGEIA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 14080, "Remote Address": "79.127.226.98:49290"}

2024-01-31T13:47:25Z INFO piecestore download started {"process": "storagenode", "Piece ID": "XC3YCGIXKU7UDTV6JQEAZZJM4RNZ3WENRRRXK37AQ3TBOA2H6AIQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 955648, "Size": 2560, "Remote Address": "79.127.220.98:36682"}

2024-01-31T13:47:25Z INFO piecestore downloaded {"process": "storagenode", "Piece ID": "XC3YCGIXKU7UDTV6JQEAZZJM4RNZ3WENRRRXK37AQ3TBOA2H6AIQ", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Action": "GET", "Offset": 955648, "Size": 2560, "Remote Address": "79.127.220.98:36682"}

Because of the post size limit, I split the post.

Checking just errors:

root@STORJ6:/storj/disk/DATA/storage# docker logs storagenode 2> /dev/null | grep ERROR

2024-01-31T00:12:22Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T00:12:23Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T01:12:21Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T01:12:22Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T01:12:24Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T01:14:34Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "attempts": 2, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T01:14:35Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "attempts": 2, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T01:16:48Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "attempts": 3, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T02:06:50Z ERROR piecestore download failed {"process": "storagenode", "Piece ID": "6ANWONZSDNWSIRCWZOQXZG7WPQIUSFIL5UZVTFMTC72GWLIFWMTA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 638976, "Remote Address": "103.214.68.73:34588", "error": "write tcp 192.168.1.7:28967->103.214.68.73:34588: use of closed network connection", "errorVerbose": "write tcp 192.168.1.7:28967->103.214.68.73:34588: use of closed network connection\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).sendData.func1:861\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2024-01-31T02:12:23Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T02:14:34Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "attempts": 2, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T02:14:46Z ERROR piecestore download failed {"process": "storagenode", "Piece ID": "UDMJB5HHUGQOONZFUCNCLZJSYKMFQR6V3OX667JUNQIQXWSNTCSA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 344064, "Remote Address": "103.214.68.73:37926", "error": "write tcp 192.168.1.7:28967->103.214.68.73:37926: use of closed network connection", "errorVerbose": "write tcp 192.168.1.7:28967->103.214.68.73:37926: use of closed network connection\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).sendData.func1:861\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2024-01-31T02:18:46Z ERROR piecestore download failed {"process": "storagenode", "Piece ID": "D4SKCSHSZPSXWDDZCRZMVSOY3IPV4MNHJLLLCICUVNWCPL2O6AYQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 262144, "Remote Address": "103.214.68.73:49104", "error": "write tcp 192.168.1.7:28967->103.214.68.73:49104: use of closed network connection", "errorVerbose": "write tcp 192.168.1.7:28967->103.214.68.73:49104: use of closed network connection\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).sendData.func1:861\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2024-01-31T02:42:59Z ERROR piecestore download failed {"process": "storagenode", "Piece ID": "IBXVBMDZWBUK7F767DSUI7BO2TDFOLCS2UUYHMRLWZ7LSQ7QSWXQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 196608, "Remote Address": "103.214.68.73:45032", "error": "write tcp 192.168.1.7:28967->103.214.68.73:45032: use of closed network connection", "errorVerbose": "write tcp 192.168.1.7:28967->103.214.68.73:45032: use of closed network connection\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).sendData.func1:861\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2024-01-31T02:44:39Z ERROR piecestore download failed {"process": "storagenode", "Piece ID": "AUZMWGQG5LWBECFYBW7JJSOHJUM27W5DNNVB57JBWYER3YBG7VFA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 262144, "Remote Address": "103.214.68.73:40996", "error": "write tcp 192.168.1.7:28967->103.214.68.73:40996: use of closed network connection", "errorVerbose": "write tcp 192.168.1.7:28967->103.214.68.73:40996: use of closed network connection\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).sendData.func1:861\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2024-01-31T03:01:15Z ERROR piecestore download failed {"process": "storagenode", "Piece ID": "74B6TZBUZYY7ZRXD4FVZGPTIO2OV3NJWRBZYPBFG4FI6RLJ3LKTA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 360448, "Remote Address": "103.214.68.73:52118", "error": "write tcp 192.168.1.7:28967->103.214.68.73:52118: write: connection reset by peer", "errorVerbose": "write tcp 192.168.1.7:28967->103.214.68.73:52118: write: connection reset by peer\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).sendData.func1:861\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2024-01-31T03:12:23Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T03:21:53Z ERROR piecestore download failed {"process": "storagenode", "Piece ID": "ERA427L3X4YXRTHQRSTIFXHF2CMXNNQH5UPJ7F2JFIMTM6GOGA3A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 0, "Size": 262144, "Remote Address": "103.214.68.73:53940", "error": "write tcp 192.168.1.7:28967->103.214.68.73:53940: use of closed network connection", "errorVerbose": "write tcp 192.168.1.7:28967->103.214.68.73:53940: use of closed network connection\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).sendData.func1:861\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2024-01-31T04:12:22Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T05:12:21Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T11:12:22Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T12:12:22Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

2024-01-31T12:12:23Z ERROR contact:service ping satellite failed {"process": "storagenode", "Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "attempts": 1, "error": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out", "errorVerbose": "ping satellite: failed to ping storage node, your node indicated error code: 0, rpc: tcp connector failed: rpc: dial tcp 84.86.172.102:28973: connect: connection timed out\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatelliteOnce:209\n\tstorj.io/storj/storagenode/contact.(*Service).pingSatellite:157\n\tstorj.io/storj/storagenode/contact.(*Chore).updateCycles.func1:87\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/common/sync2.(*Cycle).Start.func1:77\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

I’m actually stuck now; the only thing I can find are the ping errors. They have been showing up in the logs now and then for months, without any consequences.

The satellite-specific API reporting seems to be off again:

{

"id": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs",

"storageDaily": [

{

"atRestTotal": 9193400603121.168,

"atRestTotalBytes": 399713069700.92035,

"intervalInHours": 23,

"intervalStart": "2024-01-01T00:00:00Z"

},

{

"atRestTotal": 9381456699230.488,

"atRestTotalBytes": 407889421705.6734,

"intervalInHours": 23,

"intervalStart": "2024-01-02T00:00:00Z"

},

{

"atRestTotal": 10378781703222.395,

"atRestTotalBytes": 415151268128.89575,

"intervalInHours": 25,

"intervalStart": "2024-01-03T00:00:00Z"

},

{

"atRestTotal": 9437838844170.36,

"atRestTotalBytes": 410340819311.75476,

"intervalInHours": 23,

"intervalStart": "2024-01-04T00:00:00Z"

},

{

"atRestTotal": 9776414751147.787,

"atRestTotalBytes": 407350614631.1578,

"intervalInHours": 24,

"intervalStart": "2024-01-05T00:00:00Z"

},

{

"atRestTotal": 9223775156216.303,

"atRestTotalBytes": 401033702444.1871,

"intervalInHours": 23,

"intervalStart": "2024-01-06T00:00:00Z"

},

{

"atRestTotal": 9826518759797.723,

"atRestTotalBytes": 409438281658.23846,

"intervalInHours": 24,

"intervalStart": "2024-01-07T00:00:00Z"

},

{

"atRestTotal": 9634175771216.453,

"atRestTotalBytes": 401423990467.35223,

"intervalInHours": 24,

"intervalStart": "2024-01-08T00:00:00Z"

},

{

"atRestTotal": 9083517064448.975,

"atRestTotalBytes": 412887139293.1352,

"intervalInHours": 22,

"intervalStart": "2024-01-09T00:00:00Z"

},

{

"atRestTotal": 9907958781927.766,

"atRestTotalBytes": 412831615913.6569,

"intervalInHours": 24,

"intervalStart": "2024-01-10T00:00:00Z"

},

{

"atRestTotal": 9288934146482.166,

"atRestTotalBytes": 403866702020.96375,

"intervalInHours": 23,

"intervalStart": "2024-01-11T00:00:00Z"

},

{

"atRestTotal": 9702906399900.945,

"atRestTotalBytes": 404287766662.53937,

"intervalInHours": 24,

"intervalStart": "2024-01-12T00:00:00Z"

},

{

"atRestTotal": 9377618418060.197,

"atRestTotalBytes": 407722539915.66077,

"intervalInHours": 23,

"intervalStart": "2024-01-13T00:00:00Z"

},

{

"atRestTotal": 9795777536356.832,

"atRestTotalBytes": 408157397348.20135,

"intervalInHours": 24,

"intervalStart": "2024-01-14T00:00:00Z"

},

{

"atRestTotal": 8812769052732.104,

"atRestTotalBytes": 400580411487.8229,

"intervalInHours": 22,

"intervalStart": "2024-01-15T00:00:00Z"

},

{

"atRestTotal": 9770242954815.967,

"atRestTotalBytes": 407093456450.6653,

"intervalInHours": 24,

"intervalStart": "2024-01-16T00:00:00Z"

},

{

"atRestTotal": 9592263663598.543,

"atRestTotalBytes": 417054941895.5888,

"intervalInHours": 23,

"intervalStart": "2024-01-17T00:00:00Z"

},

{

"atRestTotal": 9745965930081.83,

"atRestTotalBytes": 406081913753.4096,

"intervalInHours": 24,

"intervalStart": "2024-01-18T00:00:00Z"

},

{

"atRestTotal": 9779239878485.203,

"atRestTotalBytes": 407468328270.2168,

"intervalInHours": 24,

"intervalStart": "2024-01-19T00:00:00Z"

},

{

"atRestTotal": 8960326220873.436,

"atRestTotalBytes": 407287555494.2471,

"intervalInHours": 22,

"intervalStart": "2024-01-20T00:00:00Z"

},

{

"atRestTotal": 9702977589155.314,

"atRestTotalBytes": 404290732881.47144,

"intervalInHours": 24,

"intervalStart": "2024-01-21T00:00:00Z"

},

{

"atRestTotal": 9614755269102.266,

"atRestTotalBytes": 418032837787.05505,

"intervalInHours": 23,

"intervalStart": "2024-01-22T00:00:00Z"

},

{

"atRestTotal": 9840061450166.984,

"atRestTotalBytes": 410002560423.6243,

"intervalInHours": 24,

"intervalStart": "2024-01-23T00:00:00Z"

},

{

"atRestTotal": 10494978692149.824,

"atRestTotalBytes": 403653026621.1471,

"intervalInHours": 26,

"intervalStart": "2024-01-24T00:00:00Z"

},

{

"atRestTotal": 9187690563649.268,

"atRestTotalBytes": 417622298347.694,

"intervalInHours": 22,

"intervalStart": "2024-01-25T00:00:00Z"

},

{

"atRestTotal": 9712548046971.154,

"atRestTotalBytes": 404689501957.1314,

"intervalInHours": 24,

"intervalStart": "2024-01-26T00:00:00Z"

},

{

"atRestTotal": 7335583691130.421,

"atRestTotalBytes": 305649320463.7675,

"intervalInHours": 24,

"intervalStart": "2024-01-27T00:00:00Z"

},

{

"atRestTotal": 5340204565777.734,

"atRestTotalBytes": 232182807207.72757,

"intervalInHours": 23,

"intervalStart": "2024-01-28T00:00:00Z"

},

{

"atRestTotal": 4658415876735.904,

"atRestTotalBytes": 194100661530.6627,

"intervalInHours": 24,

"intervalStart": "2024-01-29T00:00:00Z"

},

{

"atRestTotal": 4518726578542.362,

"atRestTotalBytes": 188280274105.93176,

"intervalInHours": 24,

"intervalStart": "2024-01-30T00:00:00Z"

},

{

"atRestTotal": 2177062238235.8496,

"atRestTotalBytes": 197914748930.53177,

"intervalInHours": 11,

"intervalStart": "2024-01-31T00:00:00Z"

}

],

"bandwidthDaily": [

{

"egress": {

"repair": 0,

"audit": 18944,

"usage": 53388800

},

"ingress": {

"repair": 113636864,

"usage": 14453424640

},

"delete": 0,

"intervalStart": "2024-01-01T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 24832,

"usage": 43607808

},

"ingress": {

"repair": 0,

"usage": 1161216

},

"delete": 0,

"intervalStart": "2024-01-02T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 18176,

"usage": 42324480

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-03T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 22784,

"usage": 31815680

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-04T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 24832,

"usage": 69389312

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-05T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 18176,

"usage": 67374080

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-06T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 24064,

"usage": 65996800

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-07T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 25600,

"usage": 91877888

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-08T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 24064,

"usage": 47329024

},

"ingress": {

"repair": 0,

"usage": 23827200

},

"delete": 0,

"intervalStart": "2024-01-09T00:00:00Z"

},

{

"egress": {

"repair": 275200,

"audit": 16128,

"usage": 40317184

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-10T00:00:00Z"

},

{

"egress": {

"repair": 761344,

"audit": 21248,

"usage": 131164160

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-11T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 21760,

"usage": 232110592

},

"ingress": {

"repair": 2319360,

"usage": 184957440

},

"delete": 0,

"intervalStart": "2024-01-12T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 18944,

"usage": 323465984

},

"ingress": {

"repair": 8010240,

"usage": 318719488

},

"delete": 0,

"intervalStart": "2024-01-13T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 16640,

"usage": 111704832

},

"ingress": {

"repair": 43901952,

"usage": 1480283648

},

"delete": 0,

"intervalStart": "2024-01-14T00:00:00Z"

},

{

"egress": {

"repair": 2319360,

"audit": 18944,

"usage": 82023936

},

"ingress": {

"repair": 164340224,

"usage": 2965606912

},

"delete": 0,

"intervalStart": "2024-01-15T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 18176,

"usage": 136841984

},

"ingress": {

"repair": 0,

"usage": 51183616

},

"delete": 0,

"intervalStart": "2024-01-16T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 23552,

"usage": 113637888

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-17T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 17152,

"usage": 65073920

},

"ingress": {

"repair": 103680,

"usage": 64832768

},

"delete": 0,

"intervalStart": "2024-01-18T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 17408,

"usage": 79386880

},

"ingress": {

"repair": 241889280,

"usage": 5459587840

},

"delete": 0,

"intervalStart": "2024-01-19T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 15616,

"usage": 112828160

},

"ingress": {

"repair": 1942272,

"usage": 117588224

},

"delete": 0,

"intervalStart": "2024-01-20T00:00:00Z"

},

{

"egress": {

"repair": 362752,

"audit": 17664,

"usage": 90639616

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-21T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 23552,

"usage": 11288320

},

"ingress": {

"repair": 57330432,

"usage": 1335191040

},

"delete": 0,

"intervalStart": "2024-01-22T00:00:00Z"

},

{

"egress": {

"repair": 413184,

"audit": 8960,

"usage": 31472640

},

"ingress": {

"repair": 3479552,

"usage": 93901312

},

"delete": 0,

"intervalStart": "2024-01-23T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 17152,

"usage": 65370880

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-24T00:00:00Z"

},

{

"egress": {

"repair": 4638720,

"audit": 20736,

"usage": 32773376

},

"ingress": {

"repair": 58341632,

"usage": 801422848

},

"delete": 0,

"intervalStart": "2024-01-25T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 19968,

"usage": 30694144

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-26T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 10752,

"usage": 37234432

},

"ingress": {

"repair": 35513344,

"usage": 487649536

},

"delete": 0,

"intervalStart": "2024-01-27T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 7424,

"usage": 37719296

},

"ingress": {

"repair": 8879616,

"usage": 132224512

},

"delete": 0,

"intervalStart": "2024-01-28T00:00:00Z"

},

{

"egress": {

"repair": 70144,

"audit": 6656,

"usage": 45390592

},

"ingress": {

"repair": 27620608,

"usage": 565843712

},

"delete": 0,

"intervalStart": "2024-01-29T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 7936,

"usage": 5908736

},

"ingress": {

"repair": 0,

"usage": 8439040

},

"delete": 0,

"intervalStart": "2024-01-30T00:00:00Z"

},

{

"egress": {

"repair": 0,

"audit": 2304,

"usage": 24230912

},

"ingress": {

"repair": 0,

"usage": 0

},

"delete": 0,

"intervalStart": "2024-01-31T00:00:00Z"

}

],

"storageSummary": 273252886897503.7,

"averageUsageBytes": 378196119574.5493,

"bandwidthSummary": 31676927232,

"egressSummary": 2363773184,

"ingressSummary": 29313154048,

"currentStorageUsed": 405484548608,

"audits": {

"auditScore": 1,

"suspensionScore": 1,

"onlineScore": 0.8210537030605998,

"satelliteName": "eu1.storj.io:7777"

},

"auditHistory": {

"score": 0.8210537030605998,

"windows": [

{

"windowStart": "2024-01-01T00:00:00Z",

"totalCount": 52,

"onlineCount": 32

},

{

"windowStart": "2024-01-01T12:00:00Z",

"totalCount": 62,

"onlineCount": 42

},

{

"windowStart": "2024-01-02T00:00:00Z",

"totalCount": 52,

"onlineCount": 37

},

{

"windowStart": "2024-01-02T12:00:00Z",

"totalCount": 71,

"onlineCount": 59

},

{

"windowStart": "2024-01-03T00:00:00Z",

"totalCount": 50,

"onlineCount": 38

},

{

"windowStart": "2024-01-03T12:00:00Z",

"totalCount": 52,

"onlineCount": 34

},

{

"windowStart": "2024-01-04T00:00:00Z",

"totalCount": 52,

"onlineCount": 46

},

{

"windowStart": "2024-01-04T12:00:00Z",

"totalCount": 50,

"onlineCount": 43

},

{

"windowStart": "2024-01-05T00:00:00Z",

"totalCount": 54,

"onlineCount": 41

},

{

"windowStart": "2024-01-05T12:00:00Z",

"totalCount": 68,

"onlineCount": 56

},

{

"windowStart": "2024-01-06T00:00:00Z",

"totalCount": 54,

"onlineCount": 37

},

{

"windowStart": "2024-01-06T12:00:00Z",

"totalCount": 51,

"onlineCount": 34

},

{

"windowStart": "2024-01-07T00:00:00Z",

"totalCount": 45,

"onlineCount": 37

},

{

"windowStart": "2024-01-07T12:00:00Z",

"totalCount": 65,

"onlineCount": 56

},

{

"windowStart": "2024-01-08T00:00:00Z",

"totalCount": 58,

"onlineCount": 52

},

{

"windowStart": "2024-01-08T12:00:00Z",

"totalCount": 61,

"onlineCount": 49

},

{

"windowStart": "2024-01-09T00:00:00Z",

"totalCount": 55,

"onlineCount": 48

},

{

"windowStart": "2024-01-09T12:00:00Z",

"totalCount": 56,

"onlineCount": 45

},

{

"windowStart": "2024-01-10T00:00:00Z",

"totalCount": 42,

"onlineCount": 34

},

{

"windowStart": "2024-01-10T12:00:00Z",

"totalCount": 39,

"onlineCount": 30

},

{

"windowStart": "2024-01-11T00:00:00Z",

"totalCount": 45,

"onlineCount": 41

},

{

"windowStart": "2024-01-11T12:00:00Z",

"totalCount": 43,

"onlineCount": 42

},

{

"windowStart": "2024-01-12T00:00:00Z",

"totalCount": 40,

"onlineCount": 40

},

{

"windowStart": "2024-01-12T12:00:00Z",

"totalCount": 46,

"onlineCount": 45

},

{

"windowStart": "2024-01-13T00:00:00Z",

"totalCount": 39,

"onlineCount": 30

},

{

"windowStart": "2024-01-13T12:00:00Z",

"totalCount": 48,

"onlineCount": 44

},

{

"windowStart": "2024-01-14T00:00:00Z",

"totalCount": 30,

"onlineCount": 25

},

{

"windowStart": "2024-01-14T12:00:00Z",

"totalCount": 58,

"onlineCount": 40

},

{

"windowStart": "2024-01-15T00:00:00Z",

"totalCount": 43,

"onlineCount": 32

},

{

"windowStart": "2024-01-15T12:00:00Z",

"totalCount": 55,

"onlineCount": 42

},

{

"windowStart": "2024-01-16T00:00:00Z",

"totalCount": 26,

"onlineCount": 25

},

{

"windowStart": "2024-01-16T12:00:00Z",

"totalCount": 47,

"onlineCount": 46

},

{

"windowStart": "2024-01-17T00:00:00Z",

"totalCount": 49,

"onlineCount": 47

},

{

"windowStart": "2024-01-17T12:00:00Z",

"totalCount": 45,

"onlineCount": 45

},

{

"windowStart": "2024-01-18T00:00:00Z",

"totalCount": 41,

"onlineCount": 36

},

{

"windowStart": "2024-01-18T12:00:00Z",

"totalCount": 41,

"onlineCount": 31

},

{

"windowStart": "2024-01-19T00:00:00Z",

"totalCount": 48,

"onlineCount": 34

},

{

"windowStart": "2024-01-19T12:00:00Z",

"totalCount": 41,

"onlineCount": 34

},

{

"windowStart": "2024-01-20T00:00:00Z",

"totalCount": 37,

"onlineCount": 35

},

{

"windowStart": "2024-01-20T12:00:00Z",

"totalCount": 32,

"onlineCount": 26

},

{

"windowStart": "2024-01-21T00:00:00Z",

"totalCount": 41,

"onlineCount": 32

},

{

"windowStart": "2024-01-21T12:00:00Z",

"totalCount": 47,

"onlineCount": 37

},

{

"windowStart": "2024-01-22T00:00:00Z",

"totalCount": 63,

"onlineCount": 46

},

{

"windowStart": "2024-01-22T12:00:00Z",

"totalCount": 60,

"onlineCount": 46

},

{

"windowStart": "2024-01-23T00:00:00Z",

"totalCount": 27,

"onlineCount": 17

},

{

"windowStart": "2024-01-23T12:00:00Z",

"totalCount": 39,

"onlineCount": 18

},

{

"windowStart": "2024-01-24T00:00:00Z",

"totalCount": 43,

"onlineCount": 32

},

{

"windowStart": "2024-01-24T12:00:00Z",

"totalCount": 46,

"onlineCount": 34

},

{

"windowStart": "2024-01-25T00:00:00Z",

"totalCount": 42,

"onlineCount": 37

},

{

"windowStart": "2024-01-25T12:00:00Z",

"totalCount": 64,

"onlineCount": 45

},

{

"windowStart": "2024-01-26T00:00:00Z",

"totalCount": 40,

"onlineCount": 34

},

{

"windowStart": "2024-01-26T12:00:00Z",

"totalCount": 51,

"onlineCount": 44

},

{

"windowStart": "2024-01-27T00:00:00Z",

"totalCount": 28,

"onlineCount": 26

},

{

"windowStart": "2024-01-27T12:00:00Z",

"totalCount": 20,

"onlineCount": 16

},

{

"windowStart": "2024-01-28T00:00:00Z",

"totalCount": 17,

"onlineCount": 15

},

{

"windowStart": "2024-01-28T12:00:00Z",

"totalCount": 16,

"onlineCount": 14

},

{

"windowStart": "2024-01-29T00:00:00Z",

"totalCount": 13,

"onlineCount": 13

},

{

"windowStart": "2024-01-29T12:00:00Z",

"totalCount": 14,

"onlineCount": 13

},

{

"windowStart": "2024-01-30T00:00:00Z",

"totalCount": 15,

"onlineCount": 14

},

{

"windowStart": "2024-01-30T12:00:00Z",

"totalCount": 17,

"onlineCount": 17

},

{

"windowStart": "2024-01-31T00:00:00Z",

"totalCount": 10,

"onlineCount": 9

}

]

},

"priceModel": {

"EgressBandwidth": 200,

"RepairBandwidth": 200,

"AuditBandwidth": 200,

"DiskSpace": 149

},

"nodeJoinedAt": "2023-11-05T14:59:37.426708Z"

}
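As a rough cross-check of the output above: dividing `atRestTotal` (byte-hours) by `intervalInHours` reproduces `atRestTotalBytes`, so the drop can be read directly from the daily averages. A minimal sketch, using three entries copied from the JSON; the helper name `average_bytes` is made up for illustration and is not part of any Storj tooling.

```python
# Three storageDaily entries copied from the API output above (EU1 satellite).
storage_daily = [
    {"intervalStart": "2024-01-26T00:00:00Z", "atRestTotal": 9712548046971.154, "intervalInHours": 24},
    {"intervalStart": "2024-01-27T00:00:00Z", "atRestTotal": 7335583691130.421, "intervalInHours": 24},
    {"intervalStart": "2024-01-30T00:00:00Z", "atRestTotal": 4518726578542.362, "intervalInHours": 24},
]


def average_bytes(entry):
    """Byte-hours divided by the interval length gives the average bytes stored."""
    return entry["atRestTotal"] / entry["intervalInHours"]


# Key each average by its date (first 10 characters of intervalStart).
averages = {e["intervalStart"][:10]: average_bytes(e) for e in storage_daily}

# Relative drop between the last normal day and the 30th.
drop = 1 - averages["2024-01-30"] / averages["2024-01-26"]

for day, avg in averages.items():
    print(f"{day}: {avg / 1e9:.1f} GB")
print(f"drop from the 26th to the 30th: {drop:.1%}")
```

The same division works for every entry in `storageDaily`, including the partial day on the 31st, because the interval length is part of each record.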

Interestingly all deletes are zero…

That’s normal. We don’t use direct DELETE requests any more; GC with the bloom filter takes care of the deletion instead.

Everything is async and deletions are delayed. They should be closer to each other after the next GC processing + trash expiry time.

So there is no way to see whether a bloom filter has been applied, i.e. the delete counter is a relic from the old days when deletes were sent to the nodes? Because in the time span presented here, at least 4 bloom filters should have been applied.

I would say it’s quite curious that over half of the files from one satellite were deleted in three days. In my opinion that implies a non-random distribution of uploaded files, or some other unexpected process.

This isn’t a storagenode thing, since it pertains to the disk usage reported by the satellite, which matched up until five days ago. And none of the files have been trashed at this point, implying that no bloom filter has been applied since then.

You can see the application of the bloom filter in your logs: search for “gc-filewalker” and “started|completed”.

Or just “gc-filewalker” and “completed”.

Yes, that’s true. A huge drop shouldn’t be normal (it happens sometimes, but not 50%), and it should be visible on https://storjstats.info/

You can find the affected satellite by selecting them one by one. Is it some decommissioned satellite?

Some seem to have been applied successfully since the last restart:

root@STORJ6:/# docker logs storagenode 2> /dev/null | grep gc-filewalker

2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker starting subprocess {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker subprocess started {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker.subprocess Database started {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode"}

2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker started {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode", "createdBefore": "2024-01-24T17:59:59Z", "bloomFilterSize": 752311}

2024-02-01T16:24:44Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker completed {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode", "piecesCount": 1344635, "piecesSkippedCount": 0}

2024-02-01T16:24:44Z INFO lazyfilewalker.gc-filewalker subprocess finished successfully {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}

2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker starting subprocess {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}

2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker subprocess started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}

2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker.subprocess Database started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode"}

2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode", "createdBefore": "2024-01-29T17:59:59Z", "bloomFilterSize": 40810}

2024-02-02T15:43:39Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker completed {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode", "piecesCount": 68969, "piecesSkippedCount": 0}

2024-02-02T15:43:39Z INFO lazyfilewalker.gc-filewalker subprocess finished successfully {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}
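For anyone who wants to summarise these lines rather than read them by eye, here is a minimal sketch. It assumes the log format shown above (timestamp, level, logger, message, then a JSON object) and uses three lines copied from that output; the function name `parse_gc_events` is made up for illustration.

```python
import json

# Three lines copied from the log output above; in practice they would come
# from `docker logs storagenode`.
LOG_LINES = [
    '2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker subprocess started {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}',
    '2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker started {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode", "createdBefore": "2024-01-24T17:59:59Z", "bloomFilterSize": 752311}',
    '2024-02-01T16:24:44Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker completed {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode", "piecesCount": 1344635, "piecesSkippedCount": 0}',
]


def parse_gc_events(lines):
    """Return (timestamp, satellite_id, event, fields) for each
    'gc-filewalker started' / 'gc-filewalker completed' line."""
    events = []
    for line in lines:
        for event in ("started", "completed"):
            # The trailing space keeps 'subprocess started' lines out.
            if f"gc-filewalker {event} " in line and "{" in line:
                # Everything from the first '{' onwards is the JSON payload.
                fields = json.loads(line[line.index("{"):])
                events.append((line.split()[0], fields["satelliteID"], event, fields))
    return events


events = parse_gc_events(LOG_LINES)
for timestamp, satellite, event, fields in events:
    print(timestamp, satellite, event, fields)
```

A "started" entry carries `createdBefore` and `bloomFilterSize`, and the matching "completed" entry carries `piecesCount`, so pairing them per satellite shows when each bloom filter was processed.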

But in the end, I still don’t understand the drop in the data reported by the satellite for this node in relation to the real disk usage. I’ve got three of those nodes, BTW. The 12 others don’t seem to have this particular problem.

Where do you see the downfall? It looks good to me. But at 2024-01-31 you got the data only from the first half of the day. Your node was either offline, or you executed this query between 2024-01-31 12:08 and the evening.

The second column is byte-hours since the end of the previous period (not from the beginning of the day!!!)

Let’s say, you have this:

2024-01-31 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2177062238235.85|2024-01-31 10:35:42.833848+00:00|2460340.94146799|1

2024-01-30 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|4518726578542.36|2024-01-30 22:41:42.942761+00:00|2460340.44563591|2

2024-01-29 00:00:00+00:00|AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|4658415876735.9|2024-01-29 22:02:22.726171+00:00|2460339.41831859|3

Between the first and second lines there are only 0.4958 Julian days (2460340.94146799 - 2460340.44563591); between the second and third there are 1.02.

But if you divide the byte-hours by the length of the period in hours, you get almost the same number:

2177062238235.85 / (2460340.94146799 - 2460340.44563591) / 24 = ~182946869014

4518726578542.36 / (2460340.44563591 - 2460339.41831859) / 24 = ~183273727056

The difference is about 327 MB, which can be normal, IMHO.

I know this calculation is weird, but this is how it works. See my diagram in the original post…
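The arithmetic above can be reproduced with a few lines of Python. This is only a sketch: the numbers are copied from the three rows quoted above, and the helper name `average_bytes` is made up for illustration.

```python
# (byte_hours, julian_day_of_interval_end), newest first, copied from the rows above
rows = [
    (2177062238235.85, 2460340.94146799),
    (4518726578542.36, 2460340.44563591),
    (4658415876735.9, 2460339.41831859),
]


def average_bytes(byte_hours, end_jd, prev_end_jd):
    """Average stored bytes over one reporting interval.

    The interval runs from the end of the previous report to the end of
    this one, so its length in hours is the Julian-day difference * 24.
    """
    hours = (end_jd - prev_end_jd) * 24
    return byte_hours / hours


# Pair every row with the row before it in time (the next one in the list).
averages = [
    average_bytes(byte_hours, end_jd, prev_end_jd)
    for (byte_hours, end_jd), (_, prev_end_jd) in zip(rows, rows[1:])
]

for value in averages:
    print(f"{value / 1e9:.1f} GB")
```

The oldest row has no predecessor in this sample, so only two averages come out, both close to 183 GB.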

I ran the query on the 31st of January. The problem first showed up on the 27th. So I’m not complaining about the numbers on the 31st.

The most worrisome satellite is AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000 (EU1) which went from 9712548046971.15 on the 27th to 4518726578542.36 on the 30th.

I rewrote the query for you, reuse it if you want:

root@STORJ6:/storj/DBs# sqlite3 storage_usage.db "SELECT satellite, timestamp, avgByteOverTime FROM (SELECT timestamp, hex(satellite_id) AS satellite, at_rest_total / (julianday(interval_end_time) - julianday(LAG(interval_end_time, -1) OVER (PARTITION BY satellite_id ORDER BY timestamp DESC))) / 24 AS avgByteOverTime, ROW_NUMBER() OVER (PARTITION BY satellite_id ORDER BY timestamp DESC) AS rown FROM storage_usage) WHERE rown <= 10 ORDER BY satellite,timestamp"

(... cut some rows ...)

# US1-satellite (own comment, not resulting from the query).

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-19 00:00:00+00:00|220021529315.547

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-20 00:00:00+00:00|230290357199.521

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-21 00:00:00+00:00|229754703705.441

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-22 00:00:00+00:00|230158800033.14

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-23 00:00:00+00:00|230519401809.691

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-24 00:00:00+00:00|229972825289.589

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-25 00:00:00+00:00|230103913632.444

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-26 00:00:00+00:00|230904050784.82

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-27 00:00:00+00:00|229607218397.458

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-28 00:00:00+00:00|230091373298.68

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-29 00:00:00+00:00|231160904420.42

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-30 00:00:00+00:00|231045812662.545

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-01-31 00:00:00+00:00|230573851503.36

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-02-01 00:00:00+00:00|230230908341.314

A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000|2024-02-02 00:00:00+00:00|230802765973.653

# EU1-satellite (own comment, not resulting from the query).

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-19 00:00:00+00:00|400679988310.598

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-20 00:00:00+00:00|404154207190.649

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-21 00:00:00+00:00|403388305515.166

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-22 00:00:00+00:00|403598018232.216

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-23 00:00:00+00:00|403505748973.127

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-24 00:00:00+00:00|403342715554.236

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-25 00:00:00+00:00|403756110453.291

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-26 00:00:00+00:00|403933211716.945

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-27 00:00:00+00:00|304636042486.423

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-28 00:00:00+00:00|226565909972.335

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-29 00:00:00+00:00|192681336045.798

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-30 00:00:00+00:00|183273725893.9

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-01-31 00:00:00+00:00|182861016747.46

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-02-01 00:00:00+00:00|182606036054.375

AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000|2024-02-02 00:00:00+00:00|182618822769.336

As you can see, satellite A28B4F04E10BAE85D67F4C6CB82BF8D4C0F0F47A8EA72627524DEB6EC0000000 remains stable at about 230 GB, as do the other satellites whose rows have been cut. But satellite AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000 falls from 404 GB to 182 GB.

That corresponds very well with the graphs I posted on Jan 30th, I think.
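For anyone who wants to try the per-interval average without touching a live database, here is a rough Python sketch against an in-memory SQLite table. Two assumptions: the table contains only the columns the query above uses (the real `storage_usage` table may have more), and the window is written as a plain `LAG` over ascending timestamps, which should pick the same previous row as `LAG(..., -1)` over descending order. The three rows are the EU1 sample rows quoted earlier in the thread.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Assumption: only the columns the query touches are modelled here.
conn.execute(
    "CREATE TABLE storage_usage ("
    "timestamp TEXT, satellite_id BLOB, at_rest_total REAL, interval_end_time TEXT)"
)

eu1 = bytes.fromhex("AF2C42003EFC826AB4361F73F9D890942146FE0EBE806786F8E7190800000000")
conn.executemany(
    "INSERT INTO storage_usage VALUES (?, ?, ?, ?)",
    [
        ("2024-01-29 00:00:00+00:00", eu1, 4658415876735.9, "2024-01-29 22:02:22.726171+00:00"),
        ("2024-01-30 00:00:00+00:00", eu1, 4518726578542.36, "2024-01-30 22:41:42.942761+00:00"),
        ("2024-01-31 00:00:00+00:00", eu1, 2177062238235.85, "2024-01-31 10:35:42.833848+00:00"),
    ],
)

# Byte-hours divided by the hours elapsed since the previous report's end time.
QUERY = """
SELECT hex(satellite_id) AS satellite,
       timestamp,
       at_rest_total
         / (julianday(interval_end_time)
            - julianday(LAG(interval_end_time)
                        OVER (PARTITION BY satellite_id ORDER BY timestamp)))
         / 24 AS avgByteOverTime
FROM storage_usage
ORDER BY satellite_id, timestamp
"""

results = conn.execute(QUERY).fetchall()
for satellite, timestamp, avg in results:
    print(satellite[:8], timestamp, avg)
```

The oldest row has no previous report, so its average comes out as NULL (`None` in Python). Window functions need SQLite 3.25 or newer.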

Problem recognized?

Seems only 2 satellites so far.

Sure, on all my nodes I’ve only seen these satellites sending bloom filters. Except for two or three nodes, which also got a bloom filter from satellite 1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE.

But it’s unlikely to be a bloom filter problem. The bloom filter only carries out deletions the satellite already knows about. And the satellite reports back (so it already knows) that over half of the node’s data on that satellite has been trashed.

Yeah… But your node should actually do this work and report that it’s finished. Did it?
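One way to check this from the logs, as a rough sketch: pull out every `gc-filewalker completed` line and read the satellite ID and piece count from the JSON part. The two sample lines are copied from the log excerpt above; the function name `gc_completions` is made up, and the parsing assumes the log format shown in this thread (timestamp first, JSON payload last).

```python
import json

LOG_LINES = [
    '2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode", "createdBefore": "2024-01-29T17:59:59Z", "bloomFilterSize": 40810}',
    '2024-02-02T15:43:39Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker completed {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode", "piecesCount": 68969, "piecesSkippedCount": 0}',
]


def gc_completions(lines):
    """Yield (timestamp, satellite_id, pieces_count) for each finished GC run."""
    for line in lines:
        # Everything before the first "{" is the plain-text header,
        # everything from it onwards is the JSON payload.
        head, brace, payload = line.partition("{")
        if not brace or "gc-filewalker completed" not in head:
            continue
        fields = json.loads(brace + payload)
        yield head.split()[0], fields["satelliteID"], fields["piecesCount"]


completed = list(gc_completions(LOG_LINES))
for timestamp, satellite_id, pieces_count in completed:
    print(timestamp, satellite_id, pieces_count)
```

To run it over real logs, feed it the lines from `docker logs storagenode` instead of `LOG_LINES`. A satellite that sent a bloom filter but never shows up in the output did not finish its garbage collection run.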