No, but I restrict logs to 30MB and remove them on each startup.

But this happened:

```
root@STORJ6:/# docker logs storagenode 2> /dev/null | grep gc-filewalker
2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker starting subprocess {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}
2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker subprocess started {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}
2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker.subprocess Database started {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode"}
2024-02-01T16:24:26Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker started {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode", "createdBefore": "2024-01-24T17:59:59Z", "bloomFilterSize": 752311}
2024-02-01T16:24:44Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker completed {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "process": "storagenode", "piecesCount": 1344635, "piecesSkippedCount": 0}
2024-02-01T16:24:44Z INFO lazyfilewalker.gc-filewalker subprocess finished successfully {"process": "storagenode", "satelliteID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S"}
2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker starting subprocess {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}
2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker subprocess started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}
2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker.subprocess Database started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode"}
2024-02-02T15:43:38Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker started {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode", "createdBefore": "2024-01-29T17:59:59Z", "bloomFilterSize": 40810}
2024-02-02T15:43:39Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker completed {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "process": "storagenode", "piecesCount": 68969, "piecesSkippedCount": 0}
2024-02-02T15:43:39Z INFO lazyfilewalker.gc-filewalker subprocess finished successfully {"process": "storagenode", "satelliteID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6"}
2024-02-04T00:29:48Z INFO lazyfilewalker.gc-filewalker starting subprocess {"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs"}
2024-02-04T00:29:48Z INFO lazyfilewalker.gc-filewalker subprocess started {"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs"}
2024-02-04T00:29:48Z INFO lazyfilewalker.gc-filewalker.subprocess Database started {"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "process": "storagenode"}
2024-02-04T00:29:48Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker started {"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "process": "storagenode", "createdBefore": "2024-01-30T17:59:59Z", "bloomFilterSize": 130561}
2024-02-04T00:29:56Z INFO lazyfilewalker.gc-filewalker.subprocess gc-filewalker completed {"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "piecesCount": 316686, "piecesSkippedCount": 0, "process": "storagenode"}
2024-02-04T00:29:56Z INFO lazyfilewalker.gc-filewalker subprocess finished successfully {"process": "storagenode", "satelliteID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs"}
```
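To compare runs across satellites without eyeballing the raw lines, the `gc-filewalker started`/`gc-filewalker completed` pairs can be summarized with a small script. This is a minimal Python sketch, assuming every line follows the layout in the excerpt above (timestamp, level, logger, message, then a JSON object). The file name `gc_summary.py` and the function `summarize_gc` are hypothetical and not part of storagenode. The script only reports the logged fields (`piecesCount`, `bloomFilterSize`, `createdBefore`) plus the elapsed time, without interpreting what `piecesCount` counts.

```python
import json
import re
import sys
from datetime import datetime

# Assumed layout: "<timestamp> INFO <logger> <message> {json fields}" (spaces or tabs).
LINE_RE = re.compile(r"^(\S+)\s+INFO\s+(\S+)\s+(.*?)\s+(\{.*\})\s*$")
SUBPROCESS_LOGGER = "lazyfilewalker.gc-filewalker.subprocess"


def parse_ts(ts):
    # "2024-02-01T16:24:26Z" -> aware datetime; swap "Z" so older Pythons accept it.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def summarize_gc(lines):
    """Pair gc-filewalker 'started'/'completed' lines per satellite ID.

    Returns {satelliteID: {"seconds", "piecesCount", "bloomFilterSize", "createdBefore"}}.
    """
    started = {}
    summary = {}
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue
        ts, logger, message, payload = m.groups()
        if logger != SUBPROCESS_LOGGER:
            continue
        # json.loads keeps the last value for duplicated keys such as "process".
        fields = json.loads(payload)
        sat = fields.get("satelliteID")
        if message == "gc-filewalker started":
            started[sat] = (parse_ts(ts), fields)
        elif message == "gc-filewalker completed" and sat in started:
            t0, start_fields = started.pop(sat)
            summary[sat] = {
                "seconds": (parse_ts(ts) - t0).total_seconds(),
                "piecesCount": fields.get("piecesCount"),
                "bloomFilterSize": start_fields.get("bloomFilterSize"),
                "createdBefore": start_fields.get("createdBefore"),
            }
    return summary


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 gc_summary.py node.log
    with open(sys.argv[1]) as f:
        for sat, info in summarize_gc(f).items():
            print(sat, info)
```

For example, `docker logs storagenode > node.log 2>&1` followed by `python3 gc_summary.py node.log` would, for the first satellite in the excerpt, report 18 seconds with `piecesCount` 1344635 and `bloomFilterSize` 752311.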

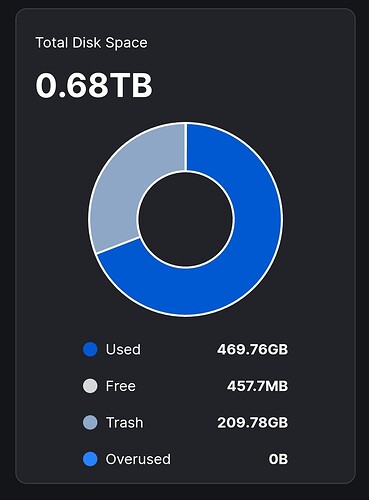

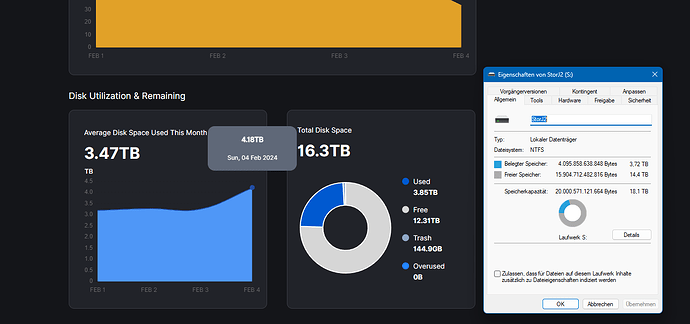

So indeed, there is no disk usage discrepancy anymore. But about 30% of the node has been trashed in roughly three days.

So the problem has shifted from a disk usage discrepancy to an idiosyncratic process that affects only some nodes. Does that suggest a non-random distribution of files, or something else?