do you have errors before this line?

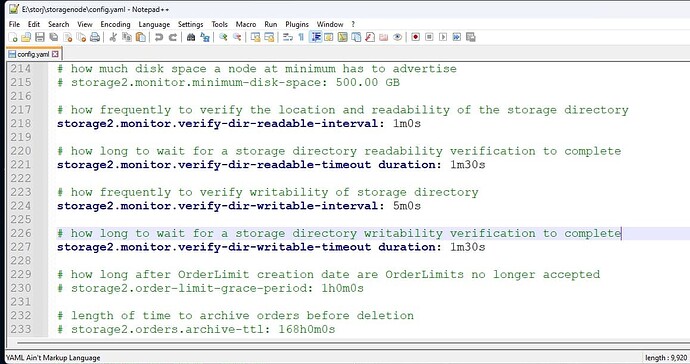

There are two timeouts: one for read and one for write. You may change the read timeout if you got an error related to read, and the write timeout if you got an error related to write.

After the change you need to explicitly save the file (Notepad++ will not offer to save changes on close) and restart the node.

You may try increasing the timeout in small steps, like 30s.

The developers have not been able to confirm the bug (we need to reproduce this error). The same code works in docker and in the Linux, macOS and FreeBSD binaries; it seems to affect only Windows, and only a small part of those nodes, which have had performance problems before (100% disk load for a long time). It could be a Windows-specific problem, but we need to be able to reproduce it.

For example, my Windows node doesn’t have this issue; however, it’s full, so it’s not representative.

At the moment the fix is to either reduce the disk load or increase the timeout.

In Russian (translated):

There are two timeouts there: one for reading and one for writing. If you get a read error, you can try increasing the read timeout; if you get a write error, increase the write timeout.

After changing the parameters you need to explicitly save the file (Notepad++ does not offer to save changes when the window is closed) and restart the node.

It is better to increase the timeout little by little.

The developers have not yet received confirmation of the bug. The same code works in docker and in the Linux binary; so far it looks like only a very small share of nodes is affected, ones that already had performance problems before (100% disk load for a long time). It may be something specific to Windows, which would be strange.

For example, my Windows node works fine; however, it is full, so it is not representative.

For now the fix is to either reduce the disk load or increase the timeout.

No

And

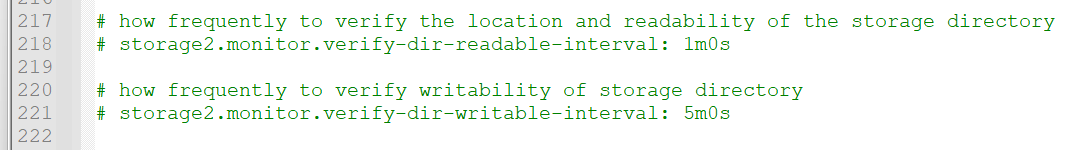

It depends on your error. For the "failed a readability check" error you may increase the readable timeout; for the "failed a writeability check" error you may increase the writable timeout. But it’s better to increase it by only 30s first, so it would be

storage2.monitor.verify-dir-readable-timeout: 1m30s

storage2.monitor.verify-dir-readable-interval: 1m30s

Or

storage2.monitor.verify-dir-writable-timeout: 1m30s

You need to save the config after the change and restart the node.
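If you prefer not to edit the value by hand, here is a minimal sketch in Python of the "increase by 30s" step. It assumes the option is written as a plain `key: value` line with a Go-style duration such as `1m0s`; the helper names (`parse_duration`, `format_duration`, `bump_timeout`) are made up for this example and are not part of the storagenode. If the key is missing, it assumes a default of `1m0s`, which matches the "timed out after 1m0s" message but is an assumption for the readable timeout.

```python
import re

# Seconds per unit for the small subset of Go duration syntax handled here.
_UNITS = {"h": 3600, "m": 60, "s": 1}


def parse_duration(text: str) -> int:
    """Parse a duration such as '1m30s' into whole seconds."""
    parts = re.findall(r"(\d+)([hms])", text)
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unsupported duration: {text!r}")
    return sum(int(n) * _UNITS[u] for n, u in parts)


def format_duration(seconds: int) -> str:
    """Format whole seconds the way the log prints them, e.g. 60 -> '1m0s'."""
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    out = f"{hours}h" if hours else ""
    if hours or minutes:
        out += f"{minutes}m"
    return out + f"{secs}s"


def bump_timeout(config_text: str, key: str, step: int = 30,
                 default: str = "1m0s") -> str:
    """Return config_text with `key` raised by `step` seconds.

    If the key is absent (or commented out), a new line is appended,
    starting from the assumed default.
    """
    pattern = re.compile(rf"^{re.escape(key)}:[ \t]*(\S+)[ \t]*$", re.MULTILINE)
    match = pattern.search(config_text)
    if match:
        new_value = format_duration(parse_duration(match.group(1)) + step)
        return pattern.sub(f"{key}: {new_value}", config_text, count=1)
    new_value = format_duration(parse_duration(default) + step)
    return config_text.rstrip("\n") + f"\n{key}: {new_value}\n"
```

For example, `bump_timeout(text, "storage2.monitor.verify-dir-writable-timeout")` turns `1m0s` into `1m30s`. The sketch only returns the new text; you still need to write it to the config file and restart the node.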

Nope, I didn’t have any issues with 100% HDD load for a prolonged time, but nevertheless I’m experiencing the above-mentioned error regularly. And it began at the same time as the others started to have it. That’s too suspicious to be just a coincidence.

I am just seeing this error too:

2023-04-01T04:16:09.678Z ERROR services unexpected shutdown of a runner {"Process": "storagenode", "name": "piecestore:monitor", "error": "piecestore monitor: timed out after 1m0s while verifying writability of storage directory", "errorVerbose": "piecestore monitor: timed out after 1m0s while verifying writability of storage directory\n\tstorj.io/storj/storagenode/monitor.(*Service).Run.func2.1:150\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/storj/storagenode/monitor.(*Service).Run.func2:146\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}
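To decide which timeout a log line like this points to, a rough Python sketch could look as follows. It assumes the line ends with a JSON object using straight quotes, as in the line above, and that the readability message is worded like the writability one (an assumption, I only see the writability variant in this thread). The function name `classify` is made up for the example; the config keys are the ones quoted earlier in the thread.

```python
import json
import re

# Matches the monitor timeout message and captures the duration and check type.
_TIMEOUT_RE = re.compile(
    r"timed out after (\S+) while verifying "
    r"(readability|writability) of storage directory"
)


def classify(line: str):
    """Return (config key, current timeout) for a monitor timeout line, else None."""
    start = line.find("{")
    if start < 0:
        return None
    try:
        fields = json.loads(line[start:])
    except json.JSONDecodeError:
        # For example a truncated line, or one pasted with curly quotes.
        return None
    match = _TIMEOUT_RE.search(str(fields.get("error", "")))
    if not match:
        return None
    kind = "readable" if match.group(2) == "readability" else "writable"
    return f"storage2.monitor.verify-dir-{kind}-timeout", match.group(1)
```

Fed the line above, it should point at the writable timeout with a current value of `1m0s`.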

It’s likely not a hardware defect but slow storage with current high ingress.

This can turn into a huge problem, just imagine more independent satellites and more huge customers up- and downloading maxing out the underlying nodes.

Instead of making the nodes go down, they should be throttled.

Yes, but changing only the needed parameter would have been enough.

This parameter was introduced to reduce the chance to be disqualified because of hardware issues.

Increasing the timeout should help to avoid the node stopping under high load.

However, many here say that their node is not overloaded. On the other hand, I do not see how a disk that is not overloaded can fail to answer even after a minute.
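One way to get a number instead of a feeling is to time a small write on the storage disk yourself. This is only a sketch of that kind of probe in Python, not the check the storagenode actually runs; the function name `time_write_probe` and the file prefix are made up for the example.

```python
import os
import tempfile
import time


def time_write_probe(directory: str, payload: bytes = b"probe") -> float:
    """Write, flush and delete a small temp file; return elapsed seconds."""
    start = time.monotonic()
    fd, path = tempfile.mkstemp(dir=directory, prefix="write-probe-")
    try:
        with os.fdopen(fd, "wb") as handle:
            handle.write(payload)
            handle.flush()
            os.fsync(handle.fileno())  # force the data out to the disk
    finally:
        os.remove(path)
    return time.monotonic() - start
```

If this regularly takes many seconds while the node is running, the disk is struggling even if the load graphs look calm. If it always returns in milliseconds, that supports the reports that the disk is not the bottleneck.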

Surely disks can be overloaded. And it is very good to try to avoid disqualifications.

But all this requires more and more monitoring of the nodes.

The initial idea of run and forget seems to be forgotten.

Personally I believe a node must adapt much more dynamically to different scenarios because as a SNO I have no control over what satellite operators and customers are doing.

I have a problem with the node: after a couple of minutes it stops working with an error

The Storj V3 Storage Node service terminated unexpectedly. It has done this 1 time(s).

In the log file I see an error like this

2023-03-31T22:56:50.072+0300 ERROR piecestore download failed {“Piece ID”:

This started 2 days ago, can someone help me please?

I have this error in the log:

2023-03-31T23:04:20.433+0300 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S", "Piece ID": "AYW4ETKBPNANXE4ZA7U5QGU6TNGS2WQFCZIXTXYGEMYWZW7JQMOQ", "error": "pieces error: pieceexpirationdb: context canceled", "errorVerbose": "pieces error: pieceexpirationdb: context canceled\n\tstorj.io/storj/storagenode/storagenodedb.(*pieceExpirationDB).Trash:112\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:387\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

Shortly after that I’m getting

2023-03-31T23:04:22.329+0300 FATAL Unrecoverable error {"error": "piecestore monitor: timed out after 1m0s while verifying writability of storage directory", "errorVerbose": "piecestore monitor: timed out after 1m0s while verifying writability of storage directory\n\tstorj.io/storj/storagenode/monitor.(*Service).Run.func2.1:150\n\tstorj.io/common/sync2.(*Cycle).Run:160\n\tstorj.io/storj/storagenode/monitor.(*Service).Run.func2:146\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:75"}

Windows node? You may have the same issue as in this thread - Fatal Error on my Node

Yes, it looks like the same issue. Windows node.

So I understood correctly that not only my node got this problem? And there is no real fix for it?

The mitigation (not the fix) is to increase the write timeout by 30s.

The fix would be to reduce the load to allow the disk to answer in time.

Can all of you check the size of db files in the storagenode data location? Some reported a while ago that one of dbs got huge for no apparent reason. Maybe you have the same problem here?
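A quick way to do that check is to list the `*.db` files by size. Here is a small Python sketch; the function name `db_sizes` is made up for the example, and the directory you pass in is whatever location holds the db files on your setup.

```python
from pathlib import Path


def db_sizes(storage_dir: str):
    """Return (file name, size in bytes) for every *.db file, largest first."""
    sizes = {p.name: p.stat().st_size for p in Path(storage_dir).glob("*.db")}
    return sorted(sizes.items(), key=lambda item: item[1], reverse=True)
```

For example, `for name, size in db_sizes(r"D:\path\to\storage"): print(name, size // 1024, "KiB")`, where the path is a placeholder for your own data location. Anything that stands out far above the other files is worth mentioning here.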

oops he did it again…

here is the full log at error level from the time the error appeared again.

aside from that, the lines are like the first and the last one. it ran fine for a day and a half

thinking about increasing the timeouts too

all specs are way above the minimum requirements. just teamspeak is running on this same machine.

i put some load on the storj drive and the cpu. write speed was way above 50MB/s, all fine, node not affected. rw goes back to typically under 7MB/s from storj.

cpu only around 85 while deindexing the ssd main drive at the same time.

did that yesterday, on the storj drive all db files are a few MB.

I know a timeout increase is only a mitigation. But my CPU load never goes above 10% and my HDD load seldom goes up to 25%.

SMART and CHKDSK report no issues with drive.

Is this the kind of load that will cause that timeout error?

2023-03-31T13:02:47.467+0200 ERROR piecestore download failed {"Piece ID": "F4EW7WLUSON6YZ4ZMQA6TTG5WUKSZ6HWSEYLNYFJOIAYJCYL4RCA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 160768, "Size": 65536, "Remote Address": "*******censored ip**********", "error": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.", "errorVerbose": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.\n\tgithub.com/jtolio/noiseconn.(*Conn).readMsg:183\n\tgithub.com/jtolio/noiseconn.(*Conn).Read:143\n\tstorj.io/drpc/drpcwire.(*Reader).ReadPacketUsing:96\n\tstorj.io/drpc/drpcmanager.(*Manager).manageReader:223"}

2023-03-31T13:02:53.722+0200 ERROR piecestore download failed {"Piece ID": "5UDQP3UR2PXVGCUVPKE36UGRBMXNBV2ED7J3KMVASJDQ35S2CWOA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 581632, "Size": 311296, "Remote Address": "*******censored ip**********", "error": "write tcp 10.0.0.22:28967->*******censored ip**********: wsasend: An existing connection was forcibly closed by the remote host.", "errorVerbose": "write tcp 10.0.0.22:28967->*******censored ip**********: wsasend: An existing connection was forcibly closed by the remote host.\n\tstorj.io/drpc/drpcstream.(*Stream).rawWriteLocked:367\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:458\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download.func6.2:729\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2023-03-31T13:03:13.124+0200 ERROR piecestore download failed {"Piece ID": "GCGEEJ4IX3IUC7Z53CHVL34CJYAZYL5OOTG4TJHCCDLBNDMI2FLA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 285184, "Size": 65536, "Remote Address": "*******censored ip**********", "error": "write tcp 10.0.0.22:28967->*******censored ip**********: wsasend: An existing connection was forcibly closed by the remote host.", "errorVerbose": "write tcp 10.0.0.22:28967->*******censored ip**********: wsasend: An existing connection was forcibly closed by the remote host.\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download.func6.2:729\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2023-03-31T13:03:32.119+0200 ERROR piecestore download failed {"Piece ID": "47ZHWQCB3JEGYNUI533FUGRYCZ2DE53P3BFI75MI2AHLLEVTJ7XA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 918272, "Size": 65536, "Remote Address": "*******censored ip**********", "error": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.", "errorVerbose": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.\n\tgithub.com/jtolio/noiseconn.(*Conn).readMsg:183\n\tgithub.com/jtolio/noiseconn.(*Conn).Read:143\n\tstorj.io/drpc/drpcwire.(*Reader).ReadPacketUsing:96\n\tstorj.io/drpc/drpcmanager.(*Manager).manageReader:223"}

2023-03-31T13:03:33.826+0200 ERROR piecestore upload failed {"Piece ID": "K7VCDHPEVWQNK5OPPV7BSBMHEWN36L44WFA74LG4W6VOMVGDG2TQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT", "error": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.", "errorVerbose": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.\n\tgithub.com/jtolio/noiseconn.(*Conn).readMsg:197\n\tgithub.com/jtolio/noiseconn.(*Conn).Read:143\n\tstorj.io/drpc/drpcwire.(*Reader).ReadPacketUsing:96\n\tstorj.io/drpc/drpcmanager.(*Manager).manageReader:223", "Size": 2097152, "Remote Address": "*******censored ip**********"}

2023-03-31T13:03:39.230+0200 ERROR piecestore download failed {"Piece ID": "Z3CR7IZSRXCGCBU3YWAU6QMFD7FUQI6WXFV6V4TCHEIHHN2WHYHQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 523776, "Size": 56064, "Remote Address": "*******censored ip**********", "error": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.", "errorVerbose": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.\n\tgithub.com/jtolio/noiseconn.(*Conn).readMsg:183\n\tgithub.com/jtolio/noiseconn.(*Conn).Read:143\n\tstorj.io/drpc/drpcwire.(*Reader).ReadPacketUsing:96\n\tstorj.io/drpc/drpcmanager.(*Manager).manageReader:223"}

2023-03-31T13:03:58.551+0200 ERROR piecestore download failed {"Piece ID": "NI4EP2A64YXWNN5Z3367G2SILI7W7L5XWLKIDHV2RPKC3WX25QLQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 326400, "Size": 65536, "Remote Address": "*******censored ip**********", "error": "write tcp 10.0.0.22:28967->*******censored ip**********: wsasend: An existing connection was forcibly closed by the remote host.", "errorVerbose": "write tcp 10.0.0.22:28967->*******censored ip**********: wsasend: An existing connection was forcibly closed by the remote host.\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download.func6.2:729\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2023-03-31T13:04:23.259+0200 ERROR piecestore download failed {"Piece ID": "2DOJMF3J5EXDTMSQGVOFTUZQUAD6STGUOZH4VZFEWYNCIBE42NQQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 131584, "Size": 65536, "Remote Address": "*******censored ip**********", "error": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.", "errorVerbose": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.\n\tgithub.com/jtolio/noiseconn.(*Conn).readMsg:183\n\tgithub.com/jtolio/noiseconn.(*Conn).Read:143\n\tstorj.io/drpc/drpcwire.(*Reader).ReadPacketUsing:96\n\tstorj.io/drpc/drpcmanager.(*Manager).manageReader:223"}

2023-03-31T13:04:33.620+0200 ERROR piecestore download failed {"Piece ID": "L5VHRIPHXRJT5RQWAJL2Y52GFTARBEDXR4D4N5MMWCOGZYAFNRZA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 73984, "Size": 65536, "Remote Address": "*******censored ip**********", "error": "write tcp 10.0.0.22:28967->*******censored ip**********: wsasend: An existing connection was forcibly closed by the remote host.", "errorVerbose": "write tcp 10.0.0.22:28967->*******censored ip**********: wsasend: An existing connection was forcibly closed by the remote host.\n\tstorj.io/drpc/drpcstream.(*Stream).rawFlushLocked:401\n\tstorj.io/drpc/drpcstream.(*Stream).MsgSend:462\n\tstorj.io/common/pb.(*drpcPiecestore_DownloadStream).Send:349\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download.func6.2:729\n\tstorj.io/common/rpc/rpctimeout.Run.func1:22"}

2023-03-31T13:05:03.910+0200 ERROR piecestore download failed {"Piece ID": "J7DMEWI7ZNPNXPRUFPQ3KBAE7W6I7V3NC272UOWPKJAPOYW25Q4A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 49152, "Size": 65536, "Remote Address": "*******censored ip**********", "error": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.", "errorVerbose": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.\n\tgithub.com/jtolio/noiseconn.(*Conn).readMsg:183\n\tgithub.com/jtolio/noiseconn.(*Conn).Read:143\n\tstorj.io/drpc/drpcwire.(*Reader).ReadPacketUsing:96\n\tstorj.io/drpc/drpcmanager.(*Manager).manageReader:223"}

2023-03-31T13:05:18.192+0200 ERROR piecestore download failed {"Piece ID": "RV7GIXIPO3GLO6FLP7TXNANQHRR76E2H3XJVVNQNCJU6JI5TNX4Q", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET", "Offset": 452352, "Size": 127488, "Remote Address": "*******censored ip**********", "error": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.", "errorVerbose": "manager closed: read tcp 10.0.0.22:28967->*******censored ip**********: wsarecv: An existing connection was forcibly closed by the remote host.\n\tgithub.com/jtolio/noiseconn.(*Conn).readMsg:183\n\tgithub.com/jtolio/noiseconn.(*Conn).Read:143\n\tstorj.io/drpc/drpcwire.(*Reader).ReadPacketUsing:96\n\tstorj.io/drpc/drpcmanager.(*Manager).manageReader:223"}

i have a bunch of "download failed" errors and only a few "upload failed" ones (1 out of 30)
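To get exact numbers rather than eyeballing the log, a short Python sketch like this can count both kinds of lines. It assumes the lines look like the ones pasted above, with `ERROR piecestore download failed` or `ERROR piecestore upload failed` near the start; the function name `count_failures` is made up for the example.

```python
import re
from collections import Counter

# Matches "ERROR piecestore download failed" / "... upload failed" lines.
_FAILED_RE = re.compile(r"\bERROR\s+piecestore\s+(download|upload) failed\b")


def count_failures(lines) -> Counter:
    """Count failed downloads and uploads in an iterable of log lines."""
    counts = Counter()
    for line in lines:
        match = _FAILED_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts
```

You can pass an open log file directly, since iterating over a file yields its lines. Note that these "forcibly closed by the remote host" errors are a different kind of message from the monitor timeout that stops the node.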