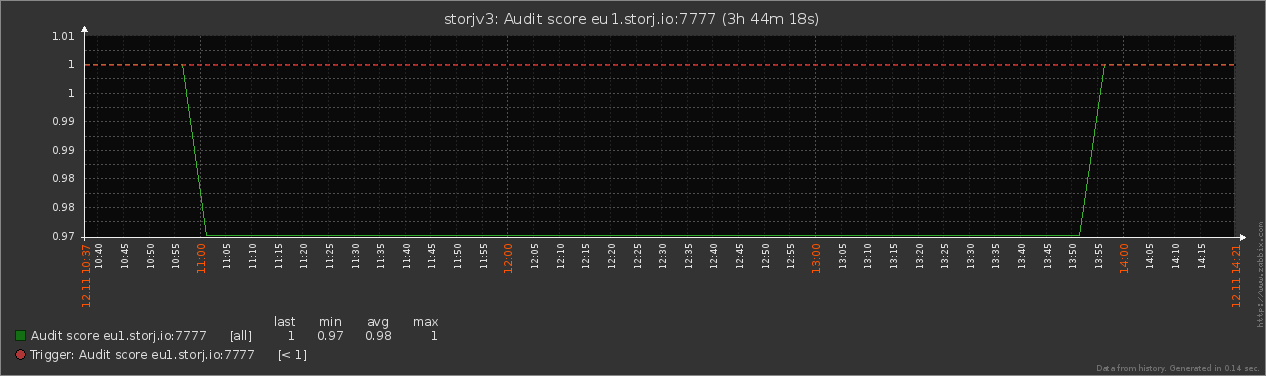

Hi, I just got a notification that the audit score for my node is less than 1. Looking through my logs, I found this:

cat docker.00065.log | grep AUDIT | grep fail | wc -l

0

cat docker.00065.log | grep AUDIT | grep started | wc -l

5187

cat docker.00065.log | grep AUDIT | grep downloaded | wc -l

5187

cat docker.00065.log | grep REPAIR | grep fail | wc -l

4

So, audits seem to be OK, but there are failed repair downloads, which I know also affect audit score.

2021-11-12T08:23:11.814Z ERROR piecestore download failed {"Piece ID": "3AUGAI7MMFTKWGQOWMBO5TMPGC6ZYPHR7F466JXJOITAZ5CBI5JA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET_REPAIR", "error": "signing: piece key: invalid signature", "errorVerbose": "signing: piece key: invalid signature\n\tstorj.io/common/storj.PiecePublicKey.Verify:67\n\tstorj.io/common/signing.VerifyUplinkOrderSignature:60\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyOrder:102\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download.func6:663\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:674\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:228\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52"}

2021-11-12T08:23:11.897Z ERROR piecestore download failed {"Piece ID": "ZSYW6HKR2WZ7GZI7I3MXU42HH45LNJU6L7COGCSBH2WOJGLI5XHQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET_REPAIR", "error": "untrusted: invalid order limit signature: signature verification: signature is not valid", "errorVerbose": "untrusted: invalid order limit signature: signature verification: signature is not valid\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyOrderLimitSignature:145\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).verifyOrderLimit:62\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:490\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:228\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52"}

2021-11-12T08:50:00.313Z ERROR piecestore download failed {"Piece ID": "4SGNT6DEUVKQTDAQ5D7KRM5TZS4HAHHXV3OSAM3C4WHZTRY25VIQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET_REPAIR", "error": "untrusted: invalid order limit signature: signature verification: signature is not valid", "errorVerbose": "untrusted: invalid order limit signature: signature verification: signature is not valid\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyOrderLimitSignature:145\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).verifyOrderLimit:62\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:490\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:228\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52"}

2021-11-12T09:06:15.098Z ERROR piecestore download failed {"Piece ID": "RCXFOOKE2QDD5TF4HDOGZ2JI4HIEYNHP7ETWBSKIHNHDFROVMUWA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET_REPAIR", "error": "untrusted: invalid order limit signature: signature verification: signature is not valid", "errorVerbose": "untrusted: invalid order limit signature: signature verification: signature is not valid\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyOrderLimitSignature:145\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).verifyOrderLimit:62\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:490\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:228\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52"}
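To see at a glance which errors dominate, the failures above can be grouped by action and error message. This is only a rough sketch: `summarize_failures` is a made-up helper name, and it assumes every failure line carries `"Action": "..."` and `"error": "..."` fields the way the excerpts in this thread do.

```shell
# Group failed transfers by action and error message, reading log lines from stdin.
# summarize_failures is a hypothetical helper name, not part of storagenode.
# Assumes each failure line contains "Action": "..." and "error": "..." fields;
# lines that do not match (for example truncated ones) are skipped.
summarize_failures() {
  grep -E 'download failed|upload failed' |
    sed -nE 's/.*"Action": "([^"]*)".*"error": "([^"]*)".*/\1 \2/p' |
    awk '{ n[$0]++ } END { for (k in n) print n[k], k }' |
    sort -rn
}

# Example (file name taken from the commands above):
# summarize_failures < docker.00065.log
```

Each output line is a count followed by the action and the error text, with the most frequent combination first.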

What is the cause of this, and how can I fix it? I checked, and the server time seems to be synced with NTP servers.

I will monitor this closely and will shut my node down if this happens again to prevent DQ.

SGC

November 12, 2021, 10:12am

2

Searched the forum for “signature is not valid”.

It’s a latest version.

Please stop and remove the container:

docker stop -t 300 storagenode

docker rm storagenode

docker image rm storjlabs/storagenode

Then start it again with all your parameters and check your logs.

I have removed and pulled the image again, we’ll see.

I do not think it’s the same problem, because in that post the node refuses to start, whereas my node starts and runs but fails some repair downloads.

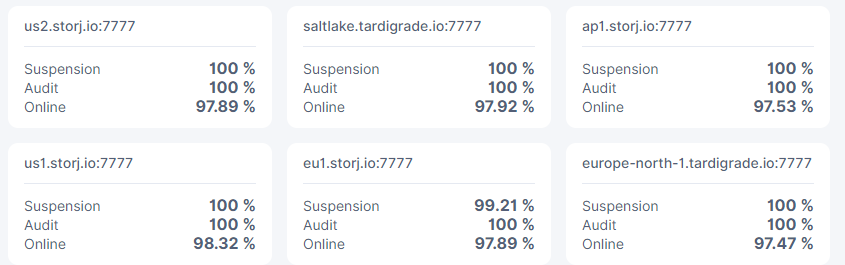

It’s only on the eu1 satellite (at least for now). Interestingly, I also got two PUT_REPAIR failures on the same satellite:

2021-11-12T09:15:43.800Z ERROR piecestore upload failed {"Piece ID": "7URJKIBTD6DFRJ22QCU3T4G7ADDGISZWA3EFPEABZYYGSKZWPYPA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT_REPAIR", "error": "hashes don't match", "errorVerbose": "hashes don't match\n\tstorj.io/common/rpc/rpcstatus.Error:82\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyPieceHash:120\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Upload:385\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func1:220\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52", "Size": 2319360}

2021-11-12T09:16:45.025Z ERROR piecestore upload failed {"Piece ID": "GIUYP4BYK3HZBZOLLGMYYQJDUH5YFCRCEXTLBAIGPKOJ2V2PEKZQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT_REPAIR", "error": "hashes don't match", "errorVerbose": "hashes don't match\n\tstorj.io/common/rpc/rpcstatus.Error:82\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyPieceHash:120\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Upload:385\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func1:220\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52", "Size": 2317056}

SGC

November 12, 2021, 12:33pm

4

I actually have 2 nodes with a slight drop in suspension score… I guess it’s a satellite…

I guess I should try and check what that is about…

2021-11-12T08:49:28.341Z ERROR piecestore download failed {"Piece ID": "EHMDRZJVMGVW2SWD7D3EC3AKADUBCERDZHZATIIREPDD37CYXNDQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET_REPAIR", "error": "untrusted: invalid order limit signature: signature verification: signature is not valid", "errorVerbose": "untrusted: invalid order limit signature: signature verification: signature is not valid\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyOrderLimitSignature:145\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).verifyOrderLimit:62\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:490\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:228\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52"}

2021-11-12T09:05:05.153Z ERROR piecestore upload failed {"Piece ID": "BBJL2SSQMPHJI7X5J6HPCE6UJS6BW7EB27M6PXIE4XBZYRFA7NPA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT_REPAIR", "error": "hashes don't match", "errorVerbose": "hashes don't match\n\tstorj.io/common/rpc/rpcstatus.Error:82\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyPieceHash:120\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Upload:385\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func1:220\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52", "Size": 2317056}

2021-11-12T10:22:46.666Z ERROR piecestore upload failed {"Piece ID": "3VOTPWQSK47H4SDOTXHSZSJONRRZOKUX5JC5AX63YRD6JKUGXUDQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT_REPAIR", "error": "signing: piece key: invalid signature", "errorVerbose": "signing: piece key: invalid signature\n\tstorj.io/common/storj.PiecePublicKey.Verify:67\n\tstorj.io/common/signing.VerifyUplinkOrderSignature:60\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyOrder:102\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Upload:355\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func1:220\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52", "Size": 1843200}

2021-11-12T11:13:37.504Z ERROR piecestore upload failed {"Piece ID": "FP4KXZH3HMUDPF6GVUTWUDPNRVY4IS4DEWTJ5HCWS4RARK32HG5A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT_REPAIR", "error": "hashes don't match", "errorVerbose": "hashes don't match\n\tstorj.io/common/rpc/rpcstatus.Error:82\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyPieceHash:120\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Upload:385\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func1:220\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52", "Size": 2317056}

2021-11-12T11:23:41.453Z ERROR piecestore upload failed {"Piece ID": "RBGPGTLOWM5TJPDUEYKFGRM5IWF2JPWRLPUPSREUCJSMN4IJDV6A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT_REPAIR", "error": "hashes don't match", "errorVerbose": "hashes don't match\n\tstorj.io/common/rpc/rpcstatus.Error:82\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyPieceHash:120\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Upload:385\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func1:220\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52", "Size": 2317056}

Seems like we are seeing the same thing. @Alexey this seems weird; it kind of makes me wonder if this was what caused my other drops in suspension score, even though I didn’t manage to find the cause.

Same on my node same sat

Another node

This appears to be a widespread issue, not limited to a single node: all 5 of my nodes have the same issue.

2021-11-12T09:05:04.950Z ERROR piecestore upload failed {"Piece ID": "Y7GWIJUYPUTUX4G7DZILQNHNJEG6P2C53BPABBAYRU4V2IZ62FVQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT_REPAIR", "error": "hashes don't match

2021-11-12T08:23:14.085Z ERROR piecestore download failed {"Piece ID": "3CDJYXWGSZ6SVEY5QHKZVJEDGCAOF6IMVFFDR4AF3HUROUF72T2A", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET_REPAIR", "error": "untrusted: invalid order limit signature: signature verification: signature is not valid", "errorVerbose": "untrusted: invalid order limit signature: signature verification: signature is not valid

SGC

November 12, 2021, 12:58pm

6

I’ve checked my logs: this issue seems to have started today, 2021-11-12. Older logs going a week back have no such entries.
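To pin down when the errors started, failed repair transfers can be counted per day. This is a minimal sketch, assuming every log line begins with an ISO 8601 timestamp like the excerpts in this thread; `repair_failures_per_day` is a made-up helper name, and the file glob in the example is a guess at how rotated logs are named.

```shell
# Count failed repair transfers per calendar day (as logged), reading from stdin.
# repair_failures_per_day is a hypothetical helper name.
# Assumes every log line starts with a timestamp such as 2021-11-12T08:23:11.814Z.
repair_failures_per_day() {
  grep -E '"Action": "(GET|PUT)_REPAIR"' |
    grep -E 'download failed|upload failed' |
    awk '{ n[substr($1, 1, 10)]++ } END { for (d in n) print d, n[d] }' |
    sort
}

# Example over several rotated log files (the glob is an assumption about naming):
# cat docker.*.log | repair_failures_per_day
```

Days with no failed repair transfers simply do not appear in the output.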

This seems like it could be a serious issue long term. My best guess would be something like a security certificate has expired on the EU1 sat. @bre @Knowledge @littleskunk

bre

November 12, 2021, 2:25pm

8

Thank you for alerting us @SGC

Yep, seeing the same thing now as well. No need to repeat screenshots and errors as they are the same. But I would like to note that the repair workers seem to be sending bad requests to nodes, and nodes are penalized for rightfully rejecting them.

At the moment I’m not too worried about serious consequences as this only hits the suspension score, so at the worst nodes will get suspended, not disqualified. However, we’ve seen similar issues escalate in the past and increase in frequency. If that happens here and a significant number of nodes get suspended, that could still impact data availability.

Sucks that this is happening on a Friday.

It seems that this has hit both scores on my node, at least for a short time.

There are no “real” audit failures and the count of “download started” and “downloaded” lines for audits is equal, so no timeouts either.

The scores have recovered, at least for now, and the last error in the log is this:

2021-11-12T13:03:29.021Z ERROR piecestore upload failed {"Piece ID": "NWCY6Q6536MQN2UNL2EYLI7CN4PNCOKNKVCS4NCGFENNHY7DD3KA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "PUT_REPAIR", "error": "hashes don't match", "errorVerbose": "hashes don't match\n\tstorj.io/common/rpc/rpcstatus.Error:82\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyPieceHash:120\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Upload:385\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func1:220\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52", "Size": 658176}

Maybe this was a transient problem. I don’t like that it affected the audit scores though.

Alexey

November 12, 2021, 3:22pm

11

You also need to measure the time between them…

$ cat /mnt/x/storagenode3/storagenode.log | grep 1wFTAgs9DP5RSnCqKV1eLf6N9wtk4EAtmN5DpSxcs8EjT69tGE | grep -E "GET_AUDIT" | jq -R '. | split("\t") | (.[4] | fromjson) as $body | {SatelliteID: $body."Satellite ID", ($body."Piece ID"): {(.[0]): .[3]}}' | jq -s 'reduce .[] as $item ({}; . * $item)'

...

"EY6AAGG33LEZK3O7NIM2S3R5VEPXRBIYCKPWYSV5LDWNMHFYF7EA": {

"2021-08-22T22:42:40.601Z": "download started",

"2021-08-22T22:50:28.975Z": "download started",

"2021-08-22T23:04:59.672Z": "d…

As far as I know (or rather, what my script says), the longest interval between “download started” and “downloaded” for GET_AUDIT is 1.57 seconds for that satellite (a bit over 2 seconds overall). For GET_REPAIR on that satellite it’s 4.44 seconds.
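For reference, an interval like that can be measured roughly as follows. This is not the script mentioned above, just a sketch under a few assumptions: `max_download_interval` is a made-up name, each line starts with a timestamp like `2021-08-22T22:42:40.601Z`, start and finish lines are paired by `"Piece ID"`, and a single download never spans more than one midnight.

```shell
# Print the longest time (in seconds) between "download started" and "downloaded"
# for one action, reading log lines from stdin.
# max_download_interval is a hypothetical helper name.
# If a piece has several "download started" lines, the most recent one is used.
max_download_interval() {
  awk -v action="$1" '
    index($0, "\"Action\": \"" action "\"") == 0 { next }
    !match($0, /"Piece ID": "[^"]*"/) { next }
    {
      piece = substr($0, RSTART + 13, RLENGTH - 14)
      clock = substr($1, 12)            # time of day, e.g. 22:42:40.601Z
      sub(/Z$/, "", clock)
      split(clock, t, ":")
      secs = t[1] * 3600 + t[2] * 60 + t[3]
    }
    /download started/ { start[piece] = secs; next }
    /downloaded/ && (piece in start) {
      d = secs - start[piece]
      if (d < 0) d += 86400             # the download crossed midnight
      if (d > max) max = d
      delete start[piece]
    }
    END { printf "%.3f\n", max }
  '
}

# Example (log path taken from the command above):
# grep 12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs /mnt/x/storagenode3/storagenode.log | max_download_interval GET_AUDIT
```

Filtering by satellite ID first, as in the example, keeps the result per satellite; passing `GET_REPAIR` instead of `GET_AUDIT` measures repair downloads.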

There are two GET_REPAIR error types:

2021-11-12T08:23:11.814Z ERROR piecestore download failed {"Piece ID": "3AUGAI7MMFTKWGQOWMBO5TMPGC6ZYPHR7F466JXJOITAZ5CBI5JA", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET_REPAIR", "error": "signing: piece key: invalid signature", "errorVerbose": "signing: piece key: invalid signature\n\tstorj.io/common/storj.PiecePublicKey.Verify:67\n\tstorj.io/common/signing.VerifyUplinkOrderSignature:60\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyOrder:102\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download.func6:663\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:674\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:228\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52"}

and

2021-11-12T08:23:11.897Z ERROR piecestore download failed {"Piece ID": "ZSYW6HKR2WZ7GZI7I3MXU42HH45LNJU6L7COGCSBH2WOJGLI5XHQ", "Satellite ID": "12L9ZFwhzVpuEKMUNUqkaTLGzwY9G24tbiigLiXpmZWKwmcNDDs", "Action": "GET_REPAIR", "error": "untrusted: invalid order limit signature: signature verification: signature is not valid", "errorVerbose": "untrusted: invalid order limit signature: signature verification: signature is not valid\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).VerifyOrderLimitSignature:145\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).verifyOrderLimit:62\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:490\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:228\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:104\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:97\n\tstorj.io/drpc/drpcctx.(*Tracker).track:52"}

Maybe one of them affects unknown audits and the other normal audits?

SGC

November 12, 2021, 3:53pm

13

BrightSilence:

At the moment I’m not too worried about serious consequences as this only hits the suspension score, so at the worst nodes will get suspended, not disqualified. However, we’ve seen similar issues escalate in the past and increase in frequency. If that happens here and a significant number of nodes get suspended, that could still impact data availability.

I was told that if a node gets suspended and then goes back above 60%, the sat will DQ it for going below a 60% suspension score again within the next 30 days or so…

But it might be over pretty quickly if a node did get suspended, because it would bounce right back and then possibly drop below again…

You’re confusing it with the uptime suspension. There, if you are suspended for uptime below 60%, you get 7 days to fix any issues; then a 30-day monitoring period starts, and if uptime is not above 60% at the end of that period, you get disqualified.

As far as I’m aware there is no permanent disqualification as a result of a dropping suspension score.

Me neither, that is a bit more serious… At least they are still infrequent enough that we’re not getting anywhere close to any DQ/Suspension consequences.

Getting that SMS was not fun, but at least it seems to have stopped. Getting my node disqualified because of a bug in the code (if this turns out to be a bug), now that would be awful.

Based on history I’m certain it would be reinstated if it was really due to a bug or satellite misconfiguration.

CutieePie:

May be of relevance but I’m still on version v1.41.2 on docker ?

Could be, my node is v1.42.4

SGC

November 12, 2021, 4:43pm

20

Also on 1.42.4 here… and it updated 49 hours ago.

Our devs are currently troubleshooting this issue. Thanks for making us aware of it.

Hopefully it isn’t going to eat into people’s weekends. Let us know if we can do more from our end to aid the bug hunting.
