The Windows node stopped due to an unexpected error, and when I turned it back on a few minutes later, about 200 GB of stored data was gone. I thought it had gone to trash, but it hadn’t. It seems the stored data just disappeared when the node was cut off. Is this really the case?

I don’t know what these stats are based on, but there are several possible discrepancies. The size reported by the satellites for today is an estimate up to the end of the time window (which in most cases is somewhat of an overestimate, although a 200 GB difference is quite a lot).

Besides, there is a discrepancy between real usage and reported usage, because the bloom filters are overfitted, meaning that some deleted blobs also match the filter and aren’t deleted on your node.
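To illustrate why a bloom filter leaves some deleted blobs behind, here is a minimal sketch. It is not the storagenode's actual implementation: the `BloomFilter` class, the `pieces_kept` helper, the piece names and the deliberately tiny filter size are all made up for the example. The filter is built from the pieces that should be kept, and anything on disk that matches it survives, including false positives.

```python
import hashlib
from typing import Iterable, Iterator, List


class BloomFilter:
    """Toy Bloom filter: no false negatives, some false positives."""

    def __init__(self, size_bits: int, hash_count: int) -> None:
        self.size_bits = size_bits
        self.hash_count = hash_count
        self.bits = bytearray(size_bits)  # one byte per bit, for readability

    def _positions(self, item: str) -> Iterator[int]:
        # Derive hash_count positions by salting SHA-256 with a seed.
        for seed in range(self.hash_count):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos] = 1

    def __contains__(self, item: str) -> bool:
        return all(self.bits[pos] for pos in self._positions(item))


def pieces_kept(stored: Iterable[str], live: Iterable[str],
                size_bits: int = 64, hash_count: int = 2) -> List[str]:
    """Return the stored pieces that survive a cleanup pass.

    The filter is built from the live pieces only; every stored piece
    that matches it is kept, whether it is really live or not.
    """
    bloom = BloomFilter(size_bits, hash_count)
    for piece in live:
        bloom.add(piece)
    return [piece for piece in stored if piece in bloom]


if __name__ == "__main__":
    live = [f"piece-{i}" for i in range(20)]
    deleted = [f"deleted-{i}" for i in range(200)]
    kept = pieces_kept(live + deleted, live)
    print(f"live: {len(live)}, kept on disk: {len(kept)}")
```

Every live piece is always kept; the extra kept pieces are deleted blobs that happened to match the filter, which is the gap between real and reported usage described above.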

If it’s based on the real data, one of the filewalkers may have finished, and the disk usage was adjusted as a result.

I may not fully understand because your reply is in English, but even in my native language I don’t think I would understand it, because it’s a technical topic… I’ll have to move on. Thanks for your reply.

If the storagenode stopped, you need to figure out why.

The answer is usually in the last few lines of your logs, and you need to fix that issue.

Customers upload at random times. If your node dies in the middle of a transfer, that transfer likely will not be settled as usage and will not be paid.

It’s been a while since I started, so I don’t think I’ll find anything even if I look through the last 20 log lines. Can I search for a specific word (e.g. error)? The log is large and cannot be opened in Notepad.

(It seems there are especially many errors on Windows… (Docker, hallelujah))

- Do not use Notepad

- Yes, you can (PowerShell):

sls FATAL "$env:ProgramFiles\Storj\Storage Node\storagenode.log" | select -last 10

For Docker Desktop for Windows (PowerShell):

docker logs storagenode 2>&1 | sls FATAL | select -last 10
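If you prefer a script, for example on the Linux Docker node, the same idea can be sketched in Python. This is only an illustration, not an official tool: the function names are made up, and unlike `sls` it does a plain case-sensitive substring match. It reads the file line by line, so a log that is too large for Notepad is not a problem.

```python
from collections import deque
from typing import Iterable, List


def last_matching_lines(lines: Iterable[str], needle: str = "FATAL",
                        keep: int = 10) -> List[str]:
    """Return the last `keep` lines that contain `needle`.

    Lines are consumed one at a time, so memory use stays small
    no matter how large the log is.
    """
    matches = deque(maxlen=keep)  # older matches fall off automatically
    for line in lines:
        if needle in line:
            matches.append(line.rstrip("\n"))
    return list(matches)


def last_matching_in_file(path: str, needle: str = "FATAL",
                          keep: int = 10) -> List[str]:
    """Same as above, but streaming from a log file on disk."""
    # errors="replace" avoids crashing on stray non-UTF-8 bytes in the log.
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        return last_matching_lines(handle, needle, keep)
```

You would call `last_matching_in_file` with the path to your own `storagenode.log` and print each returned line; pass `needle="ERROR"` to look for errors instead.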

The FATAL entry is the one that matters; it is the root cause. Since your disk was unable to complete the readability check of a single file, you likely have a problem.

See

in short:

- Stop the node

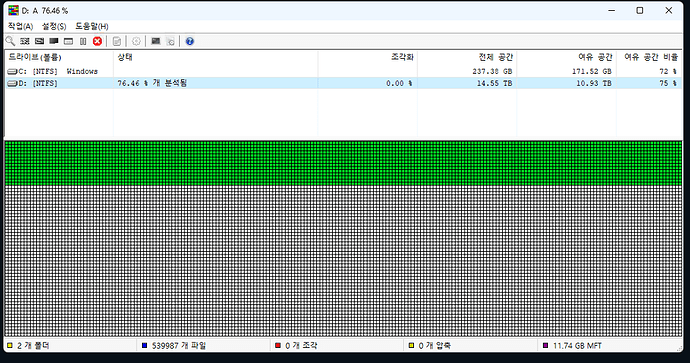

- Perform a defragmentation if you use NTFS

- Run the node

- Check your logs

If you still have FATAL errors, then you need to address them.

On Windows, make sure that the defragmentation job is not disabled for this disk (it’s enabled by default). Run a defragmentation right away.

If you use Linux, make sure that your storage location is LOCAL, or at least connected via iSCSI. Network filesystems are not supported and may (will) not work.

Make sure that you do not use NTFS under Linux. If you do, you need to reformat this drive to ext4 ASAP (back up the data first and restore it afterwards, of course).

It would have been nice to start with ext4. I regret starting with NTFS, but I didn’t know at first…

So… Are you using NTFS under Linux?!

No, I used NTFS on Windows. Later, while browsing the forums, I found out that ext4 can also be used on Windows.

(It seemed too much time had passed to start over, so I was planning to use ext4 on Windows for the next node. But Docker on Linux is convenient, so I don’t think I will have to use Windows anymore…)

Yes, it’s possible… but you will likely have issues. In short, they do not… like each other…

Ok, do you have FATAL errors in your logs?

I’m currently defragmenting it, but I don’t know when it will finish… After it’s finished, can I use the log command you wrote?

I also see a locked database FATAL.

Is it bad? I don’t know what “locked database” means.

This means that you likely lose some bandwidth records (or records of whichever database is locked).

So the historical and stats data could be wrong.

Did the node crash?

In any case, this is a sign that the disk is slow to respond and cannot keep up with the load. If that happens even after the defragmentation, then perhaps the only solution would be to move the databases to an SSD.
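To show what “database is locked” means in general, here is a small SQLite sketch in Python. It is not storagenode code: the table name and the `try_write_while_locked` helper are invented for the example. One connection holds the write lock (standing in for a write that is stuck on a slow disk), and a second writer gives up when its timeout runs out.

```python
import os
import sqlite3
import tempfile


def try_write_while_locked(db_path: str, timeout_s: float = 0.1) -> str:
    """Attempt a write while another connection holds an exclusive lock.

    Returns "ok" if the write succeeded, otherwise the SQLite error text.
    """
    # isolation_level=None lets us issue BEGIN/ROLLBACK ourselves.
    holder = sqlite3.connect(db_path, isolation_level=None)
    holder.execute("CREATE TABLE IF NOT EXISTS bandwidth (amount INTEGER)")
    holder.execute("BEGIN EXCLUSIVE")  # take the lock and keep it
    holder.execute("INSERT INTO bandwidth VALUES (1)")

    # The second writer waits at most timeout_s seconds for the lock.
    writer = sqlite3.connect(db_path, timeout=timeout_s, isolation_level=None)
    try:
        writer.execute("INSERT INTO bandwidth VALUES (2)")
        return "ok"
    except sqlite3.OperationalError as exc:
        return str(exc)
    finally:
        writer.close()
        holder.execute("ROLLBACK")
        holder.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(try_write_while_locked(os.path.join(tmp, "demo.db")))
```

The record the second writer wanted to save is simply not written, which is why stats can end up incomplete when the disk cannot keep up.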

By the way - is it a VM?

We want databases in RAM:

It’s possible even now, but requires scripting to sync DBs to the RAM disk and back.
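A rough sketch of what such a sync script could look like, under loud assumptions: the databases are plain `*.db` files in a single directory, the node is fully stopped whenever the copy runs, and the directory paths and the `sync_databases` name are placeholders of mine, not anything the node provides. Pointing the node at the RAM disk location is not covered here.

```python
import shutil
from pathlib import Path
from typing import List


def sync_databases(source_dir: str, target_dir: str,
                   pattern: str = "*.db") -> List[str]:
    """Copy every file matching `pattern` from source_dir to target_dir.

    Only run this while the node is stopped: copying a database that is
    being written to can produce a corrupted copy.
    """
    source = Path(source_dir)
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)

    copied = []
    for db_file in sorted(source.glob(pattern)):
        # copy2 also preserves modification times.
        shutil.copy2(db_file, target / db_file.name)
        copied.append(db_file.name)
    return copied


# Hypothetical usage (both paths are placeholders):
#   before starting the node:  sync_databases(DISK_DB_DIR, RAM_DB_DIR)
#   after stopping the node:   sync_databases(RAM_DB_DIR, DISK_DB_DIR)
```

Keep in mind that anything written since the last sync back to disk is lost if the machine loses power or crashes, so the second call matters as much as the first.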

Your idea is much more complicated to implement, so I do not believe it would be implemented this way.

But perhaps it could be implemented differently. We already keep some information in memory, which is synced periodically to the database file (like pieces with expiration, etc.).

I would like to try that if you have such a script.

As already said, any approach that stops the databases from wasting disk IOPS is fine.

The Windows node is not a VM. It runs as a native Windows installation. The VM runs Prometheus (I did not know how to set the retention period for Prometheus on Windows, so I set up the VM to use Docker).