That sounds very interesting! I’ll take a look tomorrow when I have more time.

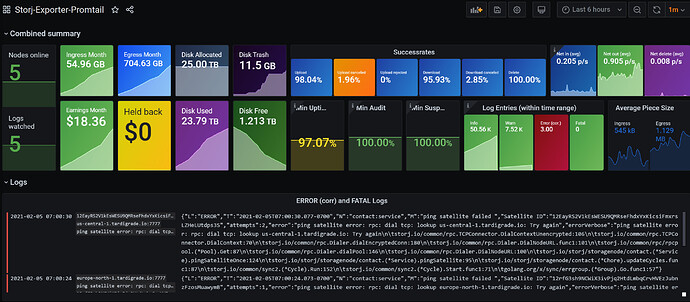

I checked out your work on GitHub. Looks impressive. You tried to make it easy with a combined deployment, but I have a different setup, so I’ll have to separate that again.

It’ll take some work to get such a setup, but I think you have the right approach: it looks better to have the logs available this way and to be able to check the error message in the Grafana dashboard directly instead of having to crawl through logfiles.

I still like my logfiles, though, so I’ll have to figure out how to do that with logfiles instead of redirecting Docker logging.

But about the Loki plugin: why does the latest version not work? Using an old plugin is not my favourite (I’m aware we’re doing that too with the dashboards due to bugs in newer versions of the Boom Table plugin… sometimes you can’t avoid it).

This is definitely possible. I do this with one of my non-Docker native binary nodes. Promtail picks up the file and sends it through the same pipeline stages as the method using the Docker Loki driver. There’s a TODO section in the GitHub repo for me to put instructions for using that method. It’s the best of both worlds, since you’d be able to keep your logfiles and have them shipped to Promtail/Loki/Grafana.

I think latest could work for the Loki and Promtail containers, but I had issues with the latest Loki Docker log driver. There could honestly have been a bunch of confounding factors in the early stages while I was trying to figure it out, so now that it’s working, I’ll set up a quick test docker-compose stack with latest to see if it works. Having said that, for Grafana products, the latest tag seems to track master rather than the latest stable release: v2.1.0 for Loki/Promtail is a month old, while latest is a couple of hours old as of this writing. I’m happy to follow point releases for these tools.
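For anyone who wants to follow point releases too, here is a minimal docker-compose sketch that pins the Loki and Promtail images to the 2.1.0 tag instead of `latest`. The Promtail config filename and the host log directory are assumptions (the log path just mirrors the `__path__` from the Promtail config further down); adjust them to your own setup. With both services in one compose file, the Promtail config’s client URL would point at `http://loki:3100/loki/api/v1/push`.

```yaml
version: "3"

services:
  loki:
    # Pin a point release rather than the floating `latest` tag, which tracks master
    image: grafana/loki:2.1.0
    ports:
      - "3100:3100"
    command: -config.file=/etc/loki/local-config.yaml

  promtail:
    image: grafana/promtail:2.1.0
    volumes:
      # Assumed host paths: node logfiles (read-only) and a local Promtail config
      - /srv/storj/log:/srv/storj/log:ro
      - ./promtail-config.yaml:/etc/promtail/config.yml:ro
    command: -config.file=/etc/promtail/config.yml
```

Upgrading then becomes a deliberate edit of the image tags followed by `docker-compose pull && docker-compose up -d`.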

On a related note, the ARM and ARM64 docker drivers were just one-off images so they’re admittedly much older.

So, long story short: if you want to dive in using text-based log files without dealing with the Loki Docker driver, you could include the following in your Promtail config:

```yaml
...
scrape_configs:
- job_name: storj
  static_configs:
  - targets:
      - localhost
    labels:
      job: storj
      nodename: storj03
      __path__: /srv/storj/log/storj03.log
  # TODO figure out dynamic labelling from log filename for multiple nodes
  # - targets:
  #     - localhost
  #   labels:
  #     job: storj
  #     nodename: storjXX
  #     __path__: /srv/storj/log/storjXX.log
  # same pipeline_stages as the loki_push_api method
  pipeline_stages:
  - json:
      expressions:
        ...
```

Thanks for the information. You’re right about the Loki version if latest tracks the master branch directly.

I’ll hopefully give it a try in the next couple days.

You could open a new thread for this project, since it’s quite different: even though both use the logs and aim for similar output, the approach is quite different and will need different support and questions. Or we let @Alexey split the topic.

For sure! I’ll wait for Alexey to decide what to do and will likely take a few days to play with dashboard layouts that include key log line items like in the above screenshot, as well as a link to another dashboard which allows for more detailed log searching.

If you are storing all log lines, that will make the Prometheus database grow as much as the logfiles, which will be immense. Any thoughts about that?

Ultimately we only need a few metrics from the info and warn messages; after that, they could be deleted. Only error and fatal messages are actually interesting, imo.

Good point. The Loki log storage database is separate from Prometheus. Prometheus only keeps the scraped metrics, no different than when using the exporter method.

The default Loki local-config.yaml that I included indeed does not have a retention limit set at this time. I’ll put a sane limit of 2 weeks in there for now and provide instructions for how to increase it if people wish.
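A two-week limit could look roughly like the sketch below, which uses the Table Manager retention settings documented for Loki 2.x and assumes the stock local-config.yaml, where both values default to `0s` (keep forever). 336h is 14 days; the retention period has to be a multiple of the index table `period` in `schema_config`, which 336h satisfies for both 24h and 168h periods. Whether old chunks are actually removed from disk depends on the index and chunk store in use, so it’s worth checking the Loki retention docs for your storage backend.

```yaml
# Excerpt of a Loki config: only the retention-related blocks are shown
chunk_store_config:
  # Limit how far back queries can reach; keep in line with retention_period
  max_look_back_period: 336h

table_manager:
  # Allow the Table Manager to delete tables older than retention_period
  retention_deletes_enabled: true
  retention_period: 336h
```

To keep logs longer, raise both values together (for example `672h` for four weeks).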

This is interesting. I wonder how best to archive error/fatal and discard the rest. I’ll play around with that in Loki a bit this weekend.
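One option for discarding the rest at ingestion time could be a Promtail `drop` stage appended to the existing `pipeline_stages`. This is only a sketch: it assumes the line the stage sees starts with a timestamp followed by the log level as a separate uppercase token (as in the storagenode’s console output); if an earlier stage rewrites the line, the expression would need to match that form instead. Stages run in order, so anything that derives metrics from info/warn lines would have to sit before the drop.

```yaml
pipeline_stages:
  # ... existing stages (json etc.) stay first ...
  # Drop DEBUG, INFO and WARN lines so only ERROR/FATAL reach Loki.
  # Assumption: the line starts with a timestamp token, then whitespace, then the level.
  - drop:
      expression: '^\S+\s+(DEBUG|INFO|WARN)\s'
```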

@kevink - solid addition to the logging/Grafana tracking for SNOs, honestly quality work. I took maybe 10 minutes per node to get each set up with an accompanying bash script to handle up/down+rm/rmi/pull/build, and it all went without a hitch.

One suggestion, and I hope I’m not the only one this bugs: wouldn’t it make more sense to display the storage IO in bytes and the Net IO in bits, as that is how they are represented in their respective systems? E.g., you measure disk bandwidth in MBps, and you measure your network in Mbps. Not demanding, as I’ve already adjusted the dashboards myself in my install, but I thought it would make sense to others as well.

Thanks, that is actually a good suggestion! Didn’t think about that. I’ll implement it in the next release, which will also show the space used per satellite per node (metrics for that are only part of the dev release of the storj-exporter at the moment).

I finally found time to check it out, nice work! I’ll soon publish a new release of the Ansible Role with it.

Not sure if this is known, but the combined dashboard shows this error while viewing 3x 1.21.1 nodes with the latest exporter, log-exporter and combined dashboard:

And I’m not sure if these are related, but they appeared at about the same time:

Don’t know about the first one, seems strange. Is Grafana up to date?

The one in debug is normal. Select only one node and it’ll show its join date per satellite.

I let it keep going, and now all three have gone dead on me:

They seem to have gone south after updating the storj-exporter containers after the dev branch was merged in.

Edit: I completely rebuilt Grafana and ended up with what looks to be working now, but I’m unsure about the egress at the end:

Perfect. You might not have enough repair egress to get that metric.

I updated the dashboard, it is now also published on grafana directly (but you can also still find it in my github repository): Storj-Exporter-Boom-Table-combined dashboard for Grafana | Grafana Labs

Changes include:

- The changed metrics for OnlineScore, SuspensionScore and Audits have all been updated.

- Node details now show the Space Used per satellite! This is a new metric added in the storj-exporter with the last update!

- Node details StorageIO now also shows the StorageSum so you can see how the usedSpace behaved

- Storage/HDD activity overview is now displayed in Bytes/sec instead of bits/sec as this is more common for HDDs. Also I added the StorageSum again so you can quickly see how much your SpaceUsed grew/shrunk due to ingress/deletes

- Storage/HDD activity now also per Satellite so you can find out which satellite is pushing/deleting how much data

- A storage usage category so you have all storage stats at once (if you still need that after the last 2 additions)

Hope you enjoy the update.

Looks snazzy! Also loving the fact that we can see which SAT is taking up the most space on our nodes (no surprise it’s Euro N).

As an aside, I see you’re getting slammed by the last 24hrs of massive deletes for US Central as well.

As a side note: I noticed that the following pane doesn’t respect the node selection for both queries (the rate query does, but the sum query is missing ,instance=~"$node.*"):

Oops, well spotted! I fixed the missing node selection and updated the dashboard on both sources. Thanks for the report.

PS: Yeah, those massive deletes on us-central are hitting me too… but it’s a long way until my biggest node is actually below its allocated space.

For the Node Overview Boom Table, there are a couple of fixes that would clean up the look when selecting specific nodes. Currently it looks like this for me when I select storj01:

Adding the label instance=~"$node.*" to the Repair and Suspension columns would align with the way the other columns are treated and would end up looking like this: