Right, but for cases when it writes bits and pieces here and there that may not add up to the full record size — having smaller record size may help. The downside is more space is needed on the special device. No idea if this is worth it.

Again SSD comes into my mind here. Why wasn’t hashstore designed in a way to direct uploads to a SSD and move it from there into hashstore? Wouldn’t that be even faster for the customer and relatively cheap for the SNO?

It is not needed for Storj Select and not needed in most cases for Storj Global.

But if someone wants - they can try to implement your idea and submit a PR, we are happy to accept it.

For me it sounds like you want that our devs are implemented a special device (or an ARC cache?). It maybe easier to you to switch to ZFS or enable it in LVM. The analogue for Windows is long exist too with a tiered storage. I do not think that we should implement a function, which is already exist on any modern storage system.

The question was asked from the SNO perspective as it sounded like we are getting to a point where every ms counts. For SNOs and for customers.

For me it sounds like everything is already there:

with

I am just assuming that SSDs will outperform HDDs and will continue to do so. SSD technology will be further developed and improved while HDD technology is performance-wise already stagnant because it is at the end of its lifespan the gap will become even wider.

So for me it sounds natural to think of improvements on storage not only about HDDs but also of ways to make use of the state of the art SSD technology that offers incredible speeds already today and will be provide even more performance in the future.

No, you just need to migrate to a hashstore backend automatically (slower) or manually. However, with an automatic you may also share issues which our team is willing to fix.

When all nodes are migrated to hashstore we maybe would be able to spent more time to implement a support of a corner use cases too, or new features. I still not convinced that our devs should reinvent a wheel (ARC cache, tiered storage, whatever is it called in a modern storage systems).

Yes, but even after migration what I am saying is that the options to have different places for uploads (potentially piecestore backed by SSD) and hashstore (most likely on HDD for economical storage) and a background job that moves the pieces from upload location to hashstore are already in place and eventually could be used or developed further to gain more upload performance out of it.

This feels like another big waste of time. We are talking about 0.0x percent differences while real success rate is only like 70 percent. ![]()

Am curious what the actual rate is.

Since the nodes are apparently not accurate in measuring their own success rate, how can we calculate it?

Would it be better to receive this Metric periodically from satellites like the nodes currently do for uptime?

Definitely I would say if the nodes calculation is not reliable.

It’s actually even better than that. The uploads are directed to RAM, which is so much faster than even SSD. And only in some spare time, they get dumped to disk.

Current success rate for 12nRLLozTqKdD5KRjLu23C8Xz6ZKxkzngpRhoKUZtfruDcCMpar:

Same for 1zWMZAUsyxpi1v9J5Me9ukcdKiaCDEpeftiTSdcrXJh7RvHEyf

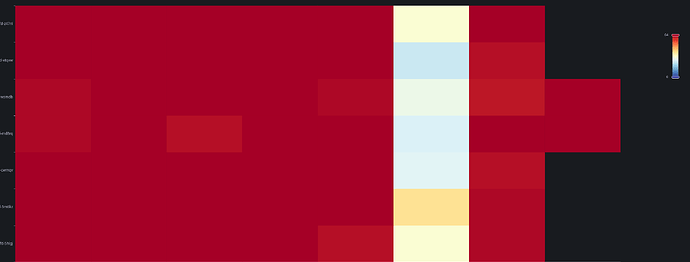

Each row is one single Kubernetes pod of our Satellite.

Each column is a different edge location.

Red is the max value (63-64). Both of these nodes are quite good.

Except from the European edge location (the yellow/blue column). (didn’t check the IP, but let me guess: it’s US)

Likely the first node received only a few request from the last edge region, that’s why we don’t have scores there from some of the pods.

In this specific case, I see no difference between piecestore/hashstore success rate.

Awesome, thank you very much for checking! So the piecestore nodes are indeed showing misleading cancel rates.

yes, both nodes are in US, one in Washington, another in California.

I’ve started migration on all remaining nodes ![]()

So calculating success rates based on client logs has always been useless. ![]()

Is there a chance to make real numbers from satellites available to node api?

I tend to agree.

I’ve found the better method has always been to calculate what sticks (space delta) vs. Ingress I/O delta of your node(s). I believe the web stat dashboard has made that quite obvious all long. Provided one checked the deltas of ingress bandwidth vs. the amount stored, refreshed over any X period of time.

2 cents,

Julio

But how do you know what stuck? It only known after garbage collection run, so not realtime.

Because comparatively, it’s always far less than the success rate for the same period, and by extension after a garbage collection run, it can only get worse. I would suggest it would be best to check at, or near, the start of a month, when totals are merely in the GBs and do so in-between actual garbage runs. Nevertheless, at any time it should be prevalent (loss of decimal places to TBs), that’s always been my observation, even prior to the current ruthless +/-48 hour TTL data deletes.

2 cents,

Julio

Actually, I think all we’ve learned from this thread is that just piecestore success rates have been useless, but the hashstore success rates are much more accurate! This is good, because this also means the storage node has a better understanding right up front if a piece can immediately be removed instead of having to get garbage collected.

So, I guess the summary is, existing success rate dashboards should become more accurate during this rollout.

With hashstore my “success rate” is more or less unchanged and way above 95 percent. My nodes are in europe behind vpn and slow dsl connection while most traffic coming from north america. Those numbers are obviously nonsense.

How?

Bdhdhdhdjsjdyfkdksh

Liks so: Poor Success rate on hashstore - #37 by jtolio

Instead of this, you can write <div></div><div></div><div></div> – it will not appear but consume 20 characters.