Two top cases: 20TB and 17TB nodes (raidz2). Active since 2018, in an open-style ‘cabinet’ with wheels (easy to rotate/move).

It would be good to add a UPS here, because in a voltage drop the HDDs will be the first thing to die.

Also, one core of a powerful CPU can be better than all four cores in a Raspberry Pi.

All my nodes are currently running on a Supermicro SuperServer 2048U-RTR4 equipped with 4 x Intel Xeon E5-4669 v4 CPUs (88 non-HT cores in total).

You can find out more about the server hardware here: http://www.th3van.dk/SM01-hardware.txt

Thanks - I’ll have a look at duf.

I’m not running any sort of preclear on any of the HDDs, since we have never had many issues with Seagate’s enterprise HDDs.

I just plug them into the SuperChassis JBOD and put a single node on each HDD to honor the one-node-per-HDD policy, as recommended by Storj themselves. If an HDD eventually decides to go haywire, I’ll just replace it, start a new node, and let the Storj network take care of the rest.

Th3Van.dk

But that JBOD cannot fit 100 HDDs. Do you run multiple nodes on one drive? What is the maximum number of nodes on one HDD? I am wondering whether the Seagate Exos drives have I/O issues with that many nodes running on them.

Can you also say which internet connection you are using? Is 1G/1G sufficient, or do you need 10G/10G?

There are 2 x SuperChassis 847E1C-R1K28JBOD hooked up via the AVAGO/Broadcom MegaRAID SAS 9480-8i8e controller in the main Supermicro SuperServer 2048U-RTR4.

Each JBOD chassis has 44 drive bays, so 88 HDDs in total.

We have ordered a 3rd chassis, a SuperChassis 847E1C-R1K23JBOD, to hold the last 17 HDDs.

We have also ordered two extra MegaRAID SAS 9480-8i8e controllers, so each chassis will have its own controller in the main server.

Currently only server010->025 are sharing HDD space with server091->107, which will be migrated to the new set of HDDs when the chassis and controllers arrive early next year.

The maximum number of nodes I had on a single HDD in this setup was 4. I quickly migrated them to other HDDs, because as the nodes grew bigger over time, read/write performance on the HDD went from fine to bad, even with the SAS controller’s built-in cache enabled.

Well, I did not have any other issues with the Exos HDDs, other than the read/write speed dropping as the nodes grew bigger.

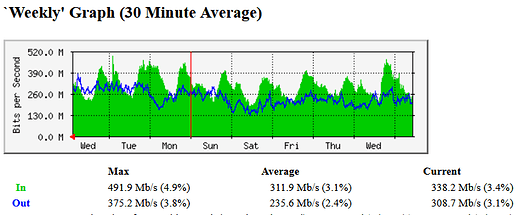

The main server is equipped with a single 10Gbit/s NIC which is more than enough :

The graphs are based on the Cisco switch port that the main server is hooked up to.

In = egress / Out = ingress (the graph is measured at the switch port, so the port’s “In” is traffic leaving the server)

Th3Van.dk

I see that each node has a separate IP in a separate /24. How do you manage that?

You also bought SAS drives instead of cheaper SATA drives. Can you explain the benefits of SAS drives? That SAS connection between the machines is interesting. I would like to have more information about it.

Some of the IPs are from our DC, and others are from VPSes.
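For anyone who wants to check their own setup against the /24 rule discussed here, below is a minimal sketch that groups node addresses by their enclosing /24. The node names and IP addresses are hypothetical (taken from the RFC 5737 documentation ranges), not the ones used in this setup, and the helper name `group_by_slash24` is made up for illustration.

```python
import ipaddress
from collections import defaultdict


def group_by_slash24(node_ips):
    """Group node names by the /24 network their IPv4 address falls in."""
    groups = defaultdict(list)
    for name, ip in node_ips.items():
        # strict=False accepts a host address and returns its enclosing /24.
        net = ipaddress.ip_network(f"{ip}/24", strict=False)
        groups[str(net)].append(name)
    return dict(groups)


# Hypothetical node names and addresses (RFC 5737 documentation ranges).
nodes = {
    "server001": "192.0.2.10",
    "server002": "192.0.2.77",
    "server003": "198.51.100.5",
    "server004": "203.0.113.20",
}

groups = group_by_slash24(nodes)
# Any /24 holding more than one node is a candidate for shared ingress.
shared = {net: names for net, names in groups.items() if len(names) > 1}
print(shared)
```

With these sample addresses, only `192.0.2.0/24` holds more than one node, so that is the only subnet reported.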

Th3van

- SATA is less expensive, and it’s better suited for desktop file storage.

- SAS is generally more expensive, but it’s better suited for use in servers or in processing-heavy computer workstations.

Th3Van.dk

Wow! So many nodes and so little traffic. It is good to know that one ordinary home broadband connection can handle anything Storj needs for a normal setup, and even for little monsters. You could use 500/500 Mbps and you would be OK.
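To put a link speed into perspective, here is a rough sketch that converts a sustained average rate into data moved per month. The rates passed in are hypothetical examples, not values read from the graph above, and the function name `monthly_transfer_tb` is made up for illustration.

```python
def monthly_transfer_tb(avg_mbps, days=30):
    """Data moved in `days` days at a sustained average rate, in decimal TB."""
    bytes_per_second = avg_mbps * 1_000_000 / 8  # megabits/s -> bytes/s
    total_bytes = bytes_per_second * days * 24 * 3600
    return total_bytes / 1e12


# Hypothetical sustained averages in Mbit/s.
for rate in (100, 500):
    print(f"{rate} Mbit/s sustained for 30 days is about "
          f"{monthly_transfer_tb(rate):.1f} TB")
```

A fully saturated 500 Mbit/s link works out to roughly 162 TB per month in one direction, so the average rate matters far more than the headline speed of the connection.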


Interesting network traffic graph. I wonder why those waves are there instead of a nice flat line. Is that egress or ingress? In a previous post, In was marked as egress, which is confusing.

I find that egress has a daily variation with my setup too, as well as a weekly variation (less on weekends). Ingress varies too, but I can’t determine a pattern like that.

It reminds me of the problem I had with DDoS protection in my router. It gave me errors at 1-hour intervals, blocking the satellites. Maybe he has something like this on his network too: some firewall, malware protection, DDoS prevention, etc. It does not look like normal Storj network activity.

Top Left: Dedicated Chia node with two Yotamaster enclosures

Top Right: Two-node XCP-NG cluster (with a Ubiquiti switch on top)

Bottom Left: Synology expansion

Bottom Right: Synology 720+ (and a small UPS for some network stuff)

My Storj node lives in the XCP-NG cluster (2 vCPU 8 GB RAM), with 6 TB of storage living on the Synology via an iSCSI LUN.

6 posts were merged into an existing topic: Nodes uses VPN to bypass /24 rule

I wanted to post an update since it has been 2 years since I first uploaded photos.

We said goodbye to the HP ML150 G5 yesterday. We ended up selling it since it had only 8 cores, which was becoming a limiting factor. My HP N54L MicroServer is still going and has just had a 4TB drive added to it that I no longer needed in an old NAS system I’ve replaced. So, no new hardware bought, just shuffled around for better efficiency.

Literally all the other systems in the first photo have been replaced. I now primarily run on 3 x Dell T320s with E5-2470 v2 CPUs with 20 threads. Each system has 64GB RAM, and I hope to upgrade that further. I was running ESXi 6.7 and Proxmox, but ESXi 6.7 has now gone EOL and my hardware won’t support 7 without replacing the SAS card, so now I am fully on Proxmox. My employer will also move to Proxmox within the next 30 days.

The HP ML150 G6 on the far right of the pic is now my new NAS running TrueNAS Scale, also with 64GB RAM and 16 threads, with 2 sets of 4 SAS drive bays. I was running TrueNAS Core previously on another box, but I find Scale suits my needs better. My employer is now also using Backblaze B2 storage, so I plan to start experimenting with Storj as well.

Nice tank collection!

Yeah, been doing plastic models since I was 8 years old. I have some diecast aircraft/tanks as well.

3x DL380 G9, each with 12x 18TB WD datacenter drives in RAID6. Only two servers are in the picture; the other one is in another location.
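As a back-of-the-envelope check on what a 12 x 18TB RAID6 array offers, here is a small sketch. It only assumes that RAID6 spends two drives' worth of space on parity; filesystem overhead, hot spares and controller reservations are ignored, and the function names are made up for illustration.

```python
def raid6_usable_tb(drives, drive_tb):
    """Usable capacity of one RAID6 array in decimal TB (two drives of parity)."""
    if drives < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (drives - 2) * drive_tb


def tb_to_tib(tb):
    """Convert decimal terabytes (10**12 bytes) to tebibytes (2**40 bytes)."""
    return tb * 1e12 / 2**40


per_server = raid6_usable_tb(drives=12, drive_tb=18)
print(f"Per server: {per_server} TB, roughly {tb_to_tib(per_server):.1f} TiB")
print(f"Three servers: {3 * per_server} TB")
```

That gives 180 TB usable per server before formatting, or 540 TB across the three servers. It is only the array arithmetic for the servers described in this post and is not meant to account for the overall total across all sites.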

Running ESXi on all hosts, managed by vCenter, with Check Point firewalls. I have four different sites and 29 nodes totaling 600TB of storage.

A Synology takes daily backups of the ESXi hosts.

Monitoring with Zabbix & Grafana.

Fully meshed site-to-site (s2s) VPN between all sites.