Once FileZilla update their macaroon version (whatever that is!), it’s actually really easy to use Tardigrade with it.

I’ll just wait until they do and then let it rip.

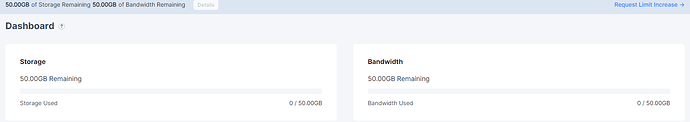

This banner should be site-wide and not just on the dashboard and billing pages.

Currently it makes no sense to show it on the dashboard, since the stats are already shown separately there.


I also did a test on the new US2 with Duplicati and my Tardigrade backend. It worked flawlessly until the 50 GB were used up completely.

Any chance I could have more than the 50GB storage and bandwidth, please?

Happy to provide much higher limits as long as you can validate your test cases. Reach out to me directly with the email you registered with and we can start the process. Appreciate you testing the new service.


Hello!

I could not upload through gateway. I got this error:

Error: WARNING: Expected number of all hosts (0) to be remote +1 (0) (*errors.errorString)

Thank you for the feedback. Can you provide more detail so I can replicate it? For example, which utility were you using?

I guess I have a wrong setup. I have changed the Access Grant and now I get another error:

Error: gateway setup error: uplink: unable to unmarshal access grant: proto: pb.Scope: illegal tag 0 (wire type 2)

I was on Mac OS X 10.11.6, gateway version 1.1.9, uplink version 1.16.1

To test our hosted gateway you will need something other than uplink. Generally I advise rclone.

Example

> # setup rclone

> rclone config

> # select n (New Remote)

> # name

> s3rctest

> # select 4 (4 / Amazon S3 Compliant Storage Provider)

> 4

> # select 13 (13 / Any other S3 compatible provider)

> 13

> # select 1 (1 / Enter AWS credentials in the next step \ “false”)

> 1

> # enter access key

> <access_key>

> # enter secret key

> <secret_key>

> # select 1 ( 1 / Use this if unsure. Will use v4 signatures and an empty region.\ “”)

> 1

> # enter endpoint

> gateway.tardigradeshare.io

> # use default location_constraint

> # use default ACL

> # edit advanced config

> n

> # review config and select default

> # quit config

> q

> # make bucket and path

> rclone mkdir s3rctest:testpathforvideo

> # list bucket:path

> rclone lsf s3rctest:

> # copy video over

> rclone copy --progress /Users/dominickmarino/Desktop/Screen\ Recording\ 2021-03-12\ at\ 10.48.10\ AM.mov s3rctest:testpathforvideo/videos

> # list file uploaded

> rclone ls s3rctest:testpathforvideo

> # output (40998657 videos/Screen Recording 2021-03-12 at 10.48.10 AM.mov)
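The same remote can also be set up without the interactive prompts by writing the config section directly. This is only a minimal sketch, assuming rclone's usual INI-style config layout. The function name `write_s3rctest_remote`, the `ACCESS_KEY` and `SECRET_KEY` variables, and the scratch file path are hypothetical placeholders, not part of the steps above.

```shell
#!/bin/sh
# Hypothetical helper: writes an rclone remote section that mirrors the
# interactive answers above (S3, "any other provider", explicit credentials,
# empty region, hosted gateway endpoint).
# ACCESS_KEY and SECRET_KEY are assumed environment variable names.
write_s3rctest_remote() {
    conf_file="$1"
    access_key="${ACCESS_KEY:-<access_key>}"
    secret_key="${SECRET_KEY:-<secret_key>}"
    cat > "$conf_file" <<EOF
[s3rctest]
type = s3
provider = Other
env_auth = false
access_key_id = $access_key
secret_access_key = $secret_key
endpoint = gateway.tardigradeshare.io
EOF
}

# Write to a scratch file, then point rclone at it explicitly:
#   write_s3rctest_remote ./rclone-us2.conf
#   rclone --config ./rclone-us2.conf lsf s3rctest:
```

Using a separate file with `--config` keeps the test remote away from your main rclone configuration.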

My results so far: I was able to upload 2 files without issues, first one almost 1 GB, second one 6 GB.

The third file, around 33 GB, I was not able to upload. The upload kept restarting and at one point finally died. That is odd: if I can successfully upload a 6 GB file, I should be able to upload a 33 GB file as well.

Which program did you use?

I used FileZilla.

I have just tried to access a bucket/file via share link (http://link.tardigradeshare.io/).

For that I created a new Access Grant with full access.

However, when I try to access it, I get an “Object not found” error or even “Malformed request”.

So I am wondering, if this is not (yet) implemented or works differently so that I am doing something wrong.

So my experience with FileZilla Pro using the S3 connector was OK but needs improvement.

Uploading both a set of very large files and lots of small files was fine. The very large files saturated my upstream connection (160 Mbps); the small files didn’t, but that’s to be expected.

Downloading was less impressive.

Small files obviously didn’t download very quickly, but I was surprised that downloading the very large files (5 concurrent connections) didn’t really go beyond 200 Mbps on my 500 Mbps line. It doesn’t seem to be line contention, as I can download at full speed from other sites.

Single download of a very large file was around 30-40 Mbps.

Deletions are also a problem. When I try to delete a folder with thousands of small files in subfolders, eventually I get an error “Error: Connection timed out after 20 seconds of inactivity” and I don’t know why. The whole process then stops.

I am using a Ubiquiti Dream Machine Pro, which has IPS/SPS enabled. I will turn that off to see if that makes a difference.

UPDATE: IPS/SPS didn’t make a difference, still very limited download bandwidth

I think that works on US1 satellite and not US2 (the one we’re testing)?

I finally got it to work, but it behaves a bit unexpectedly, at least to me.

The share requires at least one uploaded file to be shown. It’s probably the same on the other satellites but I never tried with an empty share on them.

So right after a share is created, the bucket is not visible and a “not found” error is reported, which I think should be changed. Once the first file has been uploaded, the share shows up as expected.

Additional note: While searching for the cause of the reported problem, I created and deleted many Access Grants, and I have to say that the GUI needs a major overhaul. To me there is no good usage concept behind it.

I give 3 examples:

- For the first Access Grant, I get a screen that either generates a mnemonic sentence or asks for a passphrase. For subsequent Access Grants there is no such choice, only the option to enter the passphrase. As I don’t see a hierarchy of Access Grants, I don’t see a reason why one gets created differently from the others.

- Another thing is if you cancel the creation of an Access Grant right after clicking next in step 1, it gets added to the overview of Access Grants without any further information. So a user has absolutely no clue if at this point a working Access Grant has been created or not.

- Access grants cannot be retrieved after creation. While I understand this behavior from a tech standpoint, from a user’s perspective it would be much more desirable if they could be retrieved or looked up at least for ‘some’ period of time, for example until the browser gets closed.

There are many more examples like this. To me as a non-technician, this GUI looks like some tech guys with a lot of knowledge about the platform have built it without ever thinking of the users who might not have the same level of knowledge.

This is why I believe this GUI needs major rework to suit the users and not the creators of it. But also to reduce risks of unintended leaks, a potential threat that I have tried to bring up here: Are there ways to mitigate (unintended) leaks?

So a non-intuitive GUI like this could turn into a serious issue for the users of the platform. One example of the risks it poses: when creating an Access Grant, all permissions are by default allowed for all buckets with no expiry date. This is a recipe for disaster.

Hello, do you encrypt my data, or should I encrypt it myself? I also have two more questions:

Is it not possible to transfer data between different projects?

Why have access grants for separate projects?

Hello @muaddib,

Welcome to the forum!

We encrypt your data if you use Gateway-MT, and you encrypt your data yourself when you use native connectors (FileZilla, rclone, Duplicati, etc.) or tools like uplink. The difference is where the encryption happens.

With native connectors and tools, the encryption happens on your device before the data leaves your network. This method is called client-side encryption.

With Gateway-MT and S3-compatible tools, the encryption happens on Gateway-MT (on the server) using your encryption phrase. The access grant is stored on the server and encrypted with your access key. It’s still safe, but not as good as client-side encryption. So I would recommend additionally encrypting your data yourself, if possible, when you use S3-compatible tools with Gateway-MT.
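If you want that extra layer before the data reaches Gateway-MT, one option is to encrypt files locally and upload only the ciphertext. This is a minimal sketch, assuming OpenSSL 1.1.1 or newer is installed. The function names, the `ENC_PASSPHRASE` variable and the file names are hypothetical, and this is just one possible approach, not an official recommendation for a specific tool.

```shell
#!/bin/sh
# Hypothetical helpers: encrypt/decrypt a file locally with a passphrase read
# from the exported ENC_PASSPHRASE environment variable (assumed name).
# Note: AES-CBC here gives confidentiality only; it does not authenticate
# the ciphertext.
encrypt_file() {
    # $1 = plaintext input, $2 = encrypted output
    openssl enc -aes-256-cbc -pbkdf2 -salt -in "$1" -out "$2" -pass env:ENC_PASSPHRASE
}

decrypt_file() {
    # $1 = encrypted input, $2 = plaintext output
    openssl enc -d -aes-256-cbc -pbkdf2 -in "$1" -out "$2" -pass env:ENC_PASSPHRASE
}

# Example flow (remote name taken from the rclone example earlier in the thread):
#   export ENC_PASSPHRASE='choose-a-strong-passphrase'
#   encrypt_file video.mov video.mov.enc
#   rclone copy video.mov.enc s3rctest:testpathforvideo/videos
```

With this approach the passphrase never leaves your machine, so losing it means the uploaded files cannot be decrypted.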

We are working on implementing client-side encryption for Gateway-MT. The challenge is to keep it compatible with the S3 protocol (which doesn’t support client-side encryption out of the box).

You can use separate projects to have separate billing for example, or make teams (you can invite your colleagues to the project for coworking).

You can create different access grants with restricted access to share your objects with others without letting them see all your other objects. This is suitable for storing sensitive data in shared buckets, among other use cases.