It’s somewhat concerning that 17 hours after the first post there still hasn’t been a single response from Storj.

If there is a mass shutdown of nodes to avoid getting DQ’ed it’s going to make it interesting to see if any customers lose access to their data. This could get out of hand quite quickly.

Well if data is still being deleted, the problem does get worse over time and we’ve already seen nodes with scores at 80%. I’m keeping an eye on https://stats.storjshare.io/nodes.json , but so far there hasn’t been an increase in disqualifications. So I think we’re good for now.

I think (and hope) that if DQ happens due to this problem on that particular server, Storj can, via a software update, roll back the disqualified ID and restore its status.

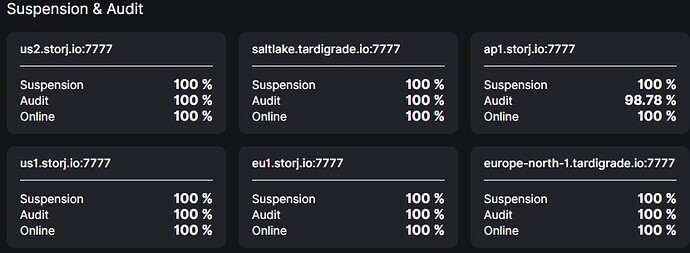

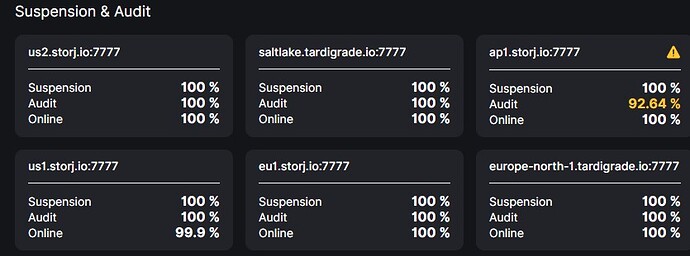

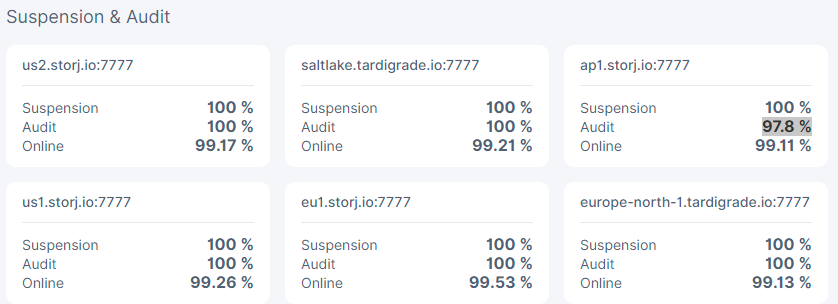

FYI, my audit score has increased since my last message! =D

Hello @luddinho ,

Welcome to the forum!

@luddinho @gnegnus @cynicaldrink @CutieePie @waistcoat @Luis

Please search your logs for lines that contain both GET_AUDIT and failed. If you find any, please then search for all records of the failed piece.

Our team is aware of the issue and is working on restoring the reputation of affected nodes.
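For anyone who would rather script this search than grep by hand, here is a minimal sketch. It assumes the log is available as plain text lines in the format pasted in this thread; the function names are made up for illustration and are not part of any Storj tooling.

```python
import re

# Matches the "Piece ID" field as it appears in the log lines in this thread.
PIECE_ID = re.compile(r'"Piece ID":\s*"([^"]+)"')


def normalize(line):
    # Logs pasted through a forum may have curly quotes; map them back.
    return line.replace("\u201c", '"').replace("\u201d", '"')


def failed_audit_pieces(lines):
    """Return piece IDs from lines containing both GET_AUDIT and failed."""
    ids = []
    for raw in lines:
        line = normalize(raw)
        if "GET_AUDIT" in line and "failed" in line:
            match = PIECE_ID.search(line)
            if match and match.group(1) not in ids:
                ids.append(match.group(1))
    return ids


def records_for_piece(lines, piece_id):
    """Return every log line that mentions the given piece ID."""
    return [line for line in lines if piece_id in line]
```

To use it, read your log into a list of lines, call `failed_audit_pieces` on it, and then call `records_for_piece` for each ID it returns to get the full history of that piece.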

I’ve only 1 line.

2021-07-22T18:53:59.929+0200 ERROR piecestore download failed {"Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT", "error": "file does not exist", "errorVerbose": "file does not exist\n\tstorj.io/common/rpc/rpcstatus.Wrap:73\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:534\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:217\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:102\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:95\n\tstorj.io/drpc/drpcctx.(*Tracker).track:51"}

Please look in your logs for all lines containing this piece ID: GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA

Hello @Alexey,

I already did a grep for GET_AUDIT, and since I restarted with a newly created container I’m not able to see any failures. All of the audits seem to have the info “download start” and then “downloaded”.

The ap1 satellite seems to have run 3 audits since I restarted the node.

2021-07-23T10:37:39.860+0200 INFO piecestore download start {"Piece ID": "TTWGUGDZRA3WASVHVOJMNURIY6G7GASGFHRECQG5GX4HQTEKSIZA", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT"}

2021-07-23T10:37:40.227+0200 INFO piecestore downloaded {"Piece ID": "TTWGUGDZRA3WASVHVOJMNURIY6G7GASGFHRECQG5GX4HQTEKSIZA", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT"}

2021-07-23T10:37:44.518+0200 INFO piecestore download start {"Piece ID": "FZUWZHWZVMRDLXH3O4AR5Z4VH2BLNP7I4AKAZ3TEXNNBC2ONLVVQ", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT"}

2021-07-23T10:37:44.893+0200 INFO piecestore downloaded {"Piece ID": "FZUWZHWZVMRDLXH3O4AR5Z4VH2BLNP7I4AKAZ3TEXNNBC2ONLVVQ", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT"}

2021-07-23T11:40:35.537+0200 INFO piecestore download start {"Piece ID": "CHL37WACHGEKST555YEWW3HMVRJUGOAG5CQLC4VR546DOICZMVCQ", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT"}

2021-07-23T11:40:35.928+0200 INFO piecestore downloaded {"Piece ID": "CHL37WACHGEKST555YEWW3HMVRJUGOAG5CQLC4VR546DOICZMVCQ", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT"}
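Counting audit outcomes like this by eye gets tedious on a larger log, so here is a rough sketch of the same tally in Python. It assumes the message texts "downloaded" and "download failed" exactly as they appear in the excerpts in this thread; other storagenode versions may word them differently, and `audit_tally` is just an illustrative name.

```python
import re
from collections import Counter

SATELLITE_ID = re.compile(r'"Satellite ID":\s*"([^"]+)"')
# "downloaded" as a whole word, so "download start" does not match.
DOWNLOADED = re.compile(r"\bdownloaded\b")


def audit_tally(lines):
    """Count finished GET_AUDIT requests per (satellite, outcome)."""
    tally = Counter()
    for raw in lines:
        # Undo curly quotes introduced by forum formatting.
        line = raw.replace("\u201c", '"').replace("\u201d", '"')
        if "GET_AUDIT" not in line:
            continue
        match = SATELLITE_ID.search(line)
        satellite = match.group(1) if match else "unknown"
        if "download failed" in line:
            tally[(satellite, "failed")] += 1
        elif DOWNLOADED.search(line):
            tally[(satellite, "downloaded")] += 1
    return tally
```

Lines that only report the start of a download are ignored, so each audit is counted once, by its final outcome.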

And it seems that the audit score is increasing in the dashboard view.

This morning it showed 87.64%, and now it is back up to 89.4%.

Nevertheless, the errors in the log remain…

If the errors are not related to the audit drop, what is the root cause of those log errors?

Is there something the node operator can do, or is there something wrong with the ap1 satellite?

Kind regards

Unfortunately I do not see any errors in the provided excerpt.

However, we have enough error samples at the moment, thanks to @BrightSilence, @CutieePie, @waistcoat and others!

It seems to be a bug in the storagenode that shows up when a lot of pieces are moved to the trash.

In other words, we as node operators just have to ignore these kinds of error messages in the log until a storagenode update is available?

I think so. But the analysis is not finished yet, so it’s too early to jump to conclusions.

I’ll update the thread when I have more information.

I’ve selected all the lines for that specific piece id.

2021-07-22T17:38:45.153+0200 INFO piecedeleter delete piece sent to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA"}

2021-07-22T18:10:48.315+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-22T18:53:59.927+0200 INFO piecestore download started {"Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT"}

2021-07-22T18:53:59.929+0200 ERROR piecestore download failed {"Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Action": "GET_AUDIT", "error": "file does not exist", "errorVerbose": "file does not exist\n\tstorj.io/common/rpc/rpcstatus.Wrap:73\n\tstorj.io/storj/storagenode/piecestore.(*Endpoint).Download:534\n\tstorj.io/common/pb.DRPCPiecestoreDescription.Method.func2:217\n\tstorj.io/drpc/drpcmux.(*Mux).HandleRPC:33\n\tstorj.io/common/rpc/rpctracing.(*Handler).HandleRPC:58\n\tstorj.io/drpc/drpcserver.(*Server).handleRPC:102\n\tstorj.io/drpc/drpcserver.(*Server).ServeOne:60\n\tstorj.io/drpc/drpcserver.(*Server).Serve.func2:95\n\tstorj.io/drpc/drpcctx.(*Tracker).track:51"}

2021-07-22T19:05:31.466+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-22T20:08:27.207+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-22T21:10:02.766+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-22T22:17:23.649+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-22T23:23:05.029+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T00:30:00.349+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T01:48:52.698+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T02:38:16.799+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T03:29:40.686+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T04:48:07.415+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T05:47:56.483+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T06:40:41.038+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T07:35:35.730+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T08:57:28.736+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T10:08:23.268+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T11:23:33.218+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T12:35:59.077+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-23T13:32:30.412+0200 ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

Hey, since this update this error is showing in my logs: "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"

2021-07-22T17:24:38.423Z ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "YIEGOPJLQEZZZWV4XICWZ45AB4CKPFFWPEVVE53I7R3OPFRIJMTA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-22T17:24:38.427Z ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "WZIZXGGDLMJDLSHTZHHDZPVNC7STZ64L3CNSYCJMH5ZLIQ5EW4YA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

2021-07-22T17:24:38.429Z ERROR piecedeleter could not send delete piece to trash {"Satellite ID": "121RTSDpyNZVcEU84Ticf2L1ntiuUimbWgfATz21tuvgk3vzoA6", "Piece ID": "KBFW7RTHZBJVT4LEMEQR6ZZN33TBQ2MEDW5UCJMBD4BLKN2HG2JA", "error": "pieces error: v0pieceinfodb: sql: no rows in result set", "errorVerbose": "pieces error: v0pieceinfodb: sql: no rows in result set\n\tstorj.io/storj/storagenode/storagenodedb.(*v0PieceInfoDB).Get:131\n\tstorj.io/storj/storagenode/pieces.(*Store).MigrateV0ToV1:404\n\tstorj.io/storj/storagenode/pieces.(*Store).Trash:348\n\tstorj.io/storj/storagenode/pieces.(*Deleter).deleteOrTrash:185\n\tstorj.io/storj/storagenode/pieces.(*Deleter).work:135\n\tstorj.io/storj/storagenode/pieces.(*Deleter).Run.func1:72\n\tgolang.org/x/sync/errgroup.(*Group).Go.func1:57"}

And this is also happening.

Already reported here

Yes, this confirms you’re seeing the same issue. I guess we’ll have to wait and see what they’ll find.

I have my suspicion that this is more likely an issue on the satellite end. Since it seems the initial delete is processed just fine on the storage node end. It should never have received any subsequent delete requests or audits from the satellite. It seems the satellite thinks the deletes haven’t been processed correctly, even though they have. So I’d say it’s either on the satellite end or in the communication between the two. Based on the logs it doesn’t seem like the storagenode is doing anything wrong.
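To check whether a node shows the same pattern (a successful move to trash, followed by further delete requests or audits for the same piece), something like the following sketch could help. It assumes the log lines are in chronological order and that the success message reads "delete piece sent to trash", as in the excerpt above; `events_after_trash` is a hypothetical helper, not an existing tool.

```python
import re
from collections import defaultdict

PIECE_ID = re.compile(r'"Piece ID":\s*"([^"]+)"')


def events_after_trash(lines):
    """Map piece ID -> log lines seen after that piece was first sent to trash.

    Assumes `lines` is in chronological order.
    """
    trashed = set()
    later = defaultdict(list)
    for raw in lines:
        # Undo curly quotes introduced by forum formatting.
        line = raw.replace("\u201c", '"').replace("\u201d", '"')
        match = PIECE_ID.search(line)
        if not match:
            continue
        piece = match.group(1)
        if piece in trashed:
            # Any request after the successful trash is unexpected.
            later[piece].append(raw)
        elif "delete piece sent to trash" in line:
            trashed.add(piece)
    return dict(later)
```

A piece that was trashed and never mentioned again does not appear in the result, so a non-empty result points at pieces the satellite kept asking about after the delete had gone through.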

What does this mean in detail? Do you have only one piece id “GNEAJQTG4SM4YSEHFLDGIHTQO45YECPE53VLG3MJ6AC7DYKUVWOA” that causes the error?

In my case there are tons of pieces (in total 45072) causing the same error.

We all have tons of those delete errors. But luckily very few of them are audited. That log shows the history of a specific piece that was audited.
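If you want numbers for your own node, here is a small sketch that counts how many distinct pieces hit the delete error and how many of those also failed an audit. The message strings are taken from the log excerpts in this thread and are assumptions about your log format; the function name is made up.

```python
import re

PIECE_ID = re.compile(r'"Piece ID":\s*"([^"]+)"')


def delete_errors_vs_audits(lines):
    """Return (pieces with delete errors, pieces with failed audits, overlap)."""
    delete_errors = set()
    failed_audits = set()
    for raw in lines:
        # Undo curly quotes introduced by forum formatting.
        line = raw.replace("\u201c", '"').replace("\u201d", '"')
        match = PIECE_ID.search(line)
        if not match:
            continue
        piece = match.group(1)
        if "could not send delete piece to trash" in line:
            delete_errors.add(piece)
        elif "GET_AUDIT" in line and "download failed" in line:
            failed_audits.add(piece)
    return delete_errors, failed_audits, delete_errors & failed_audits
```

The sizes of the three returned sets give the total number of affected pieces, the number of failed audits, and how many affected pieces were actually audited.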