I do have an update (Note I tend to use all the deadlines).

For context, I’ve posted a couple of updates about how we use test data on the network, and I also mentioned it in the Town Hall. This post should give you some more visibility into how we use test data and what you can expect over the next few months.

What are we doing with test data?

Test data serves a number of different purposes, some of which may be more obvious than others. The main uses for test data are:

- Storage Node Incentive - probably the reason you care about most. All test data is paid as if it were real customer data. We don’t distinguish between real and test data since we’re using storage space and bandwidth either way. Until we have PBs of customer data on the network, we want to make sure the early storage nodes earn money as we grow demand.

- Use Case Validation - as we pursue different opportunities with partners and customers, we use test data to simulate actual customer data and use cases. We do this so we can be confident that the Tardigrade platform will meet the customer’s needs, and also to simplify onboarding.

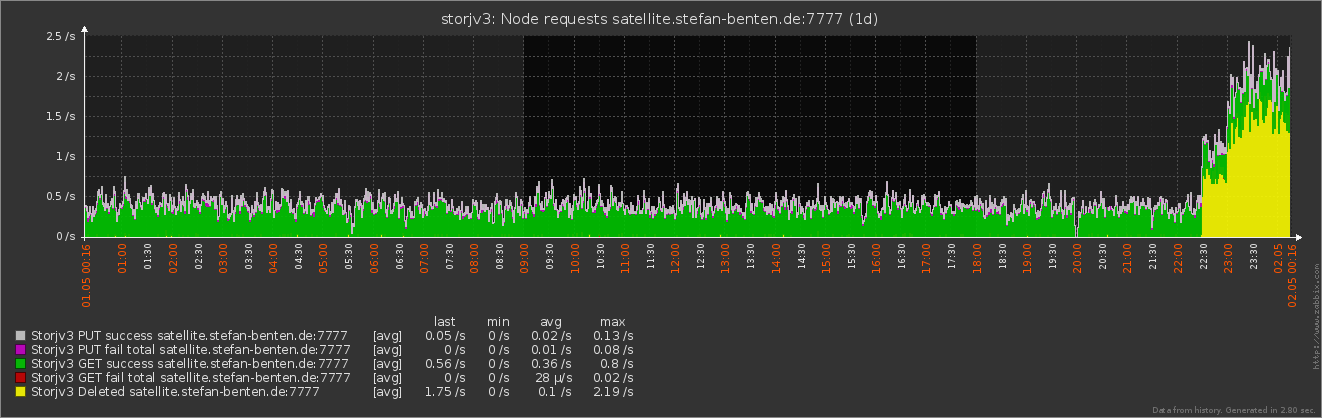

- Load Testing - as we test the scalability of the network and make improvements, it’s useful to push high volumes of data through the network to determine the limits of the different network components.

- Performance Testing - we’re continuously testing the network to evaluate its performance, particularly relative to customer expectations for different use cases.

- Functional Testing - test data helps us assess functions beyond client upload and download, including file repair, audit, and other durability-related components.

- Competitive Benchmarking - we test performance against other cloud providers to compare performance on some standard functions. The most common benchmark test is put and get on a 10 MB file.

- App Testing - as we, or our partners and customers, develop different connectors or app integrations, we do significant testing to determine how those apps will behave in the wild.

- Incentivizing demand - We’ll be using test data to continue to support supply in advance of forecasted customer demand.

How much test data do we plan to push to the network?

The amount of test data we push to the network varies, but there are a couple of different factors that affect the amount of data and bandwidth we’re using for testing. Those factors include:

- Test priority - Depending on where we are in a release cycle, we may want to test different aspects of the platform. Testing concurrency has different needs than testing streaming performance for uploads and downloads, deletes, repairs, etc., so the test patterns change accordingly.

- Customer and partner POCs - testing larger use cases or proving value to specific customers/partners

- Coordinating initiatives - spinning up new satellites or testing new features, code or use cases

Over the past few months, we’ve been testing a wide range of different factors on the platform, including significant amounts of egress bandwidth. Over the next few months, we’ll be shifting our test patterns to more closely simulate real customer use cases. While priorities change from time to time, in general we expect our test profile to change in terms of how much storage and bandwidth we use for testing.

We have a few PB of test data on the network now, but we expect that to grow to around 8 PB (pre expansion factor) over the next few months. In terms of download traffic, we’ve used a higher rate of downloads as a percentage of data stored over the last few months, but we expect that to average out to somewhere around 10% of data stored over the next few months.
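To make those figures concrete, here is a rough back-of-the-envelope sketch. It assumes the 10% download figure applies to the pre-expansion amount of data stored, over whatever period that ratio is measured. The function name and the expansion factor value are placeholders for illustration only; this post doesn't state the actual expansion factor.

```python
def estimate_test_footprint(logical_pb, download_ratio, expansion_factor):
    """Rough estimate of test data footprint on the network.

    logical_pb       -- test data before the expansion factor (PB)
    download_ratio   -- downloads as a fraction of data stored
    expansion_factor -- placeholder multiplier for erasure-coded pieces;
                        the real value is not given in this post
    """
    raw_pb = logical_pb * expansion_factor      # space used across storage nodes
    download_pb = logical_pb * download_ratio   # assumed: ratio applies pre-expansion
    return {"raw_pb": raw_pb, "download_pb": download_pb}


# 8 PB and 10% come from the post; 2.5 is an illustrative assumption only.
estimate = estimate_test_footprint(logical_pb=8.0, download_ratio=0.10, expansion_factor=2.5)
print(estimate)
```

Swapping in the real expansion factor gives the raw storage nodes would actually hold. The download estimate doesn't depend on it under the assumption above.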

While the Salt Lake satellite will be focused on load and performance testing, the other satellites (including our new European satellite) will be focused on customer growth and testing customer-oriented use cases, including projects like GitBackup and some POCs with prospective customers and partners.

The test data and bandwidth planned over the next few months may increase or decrease depending on what we need at any particular time. One thing we plan on continuing to do is use surge payouts to maintain a relatively stable level of network payout. We know that significant swings in the incentive program for storage nodes have a big impact on the community.

It will take some time to get 8 PB loaded on the network, but we’ve been ramping up the load process across all satellites. More importantly, we’re working even harder on getting real (paid) customer data on the network. A number of longtime supporters have posted that we need time to build a successful cloud storage business on top of this network, and given the reception we got at launch from the media, partners, and customers, it’s going to be a wild ride… We will continue to be more transparent and clear when it comes to expectations, especially for new SNOs.