1. Setting up Prometheus

We use Prometheus to store all statistics of the nodes, so choose one PC or server to run it on. It doesn’t need to be powerful and it doesn’t need much space: you decide how much disk space Prometheus may use and how long your statistics are kept. However, make sure the data directory is not on an SD card, because the constant writes would shorten the card’s lifespan significantly.

On that chosen PC we first create a prometheus.yml file in a location you prefer:

    # Sample config for Prometheus.

    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).

      # Attach these labels to any time series or alerts when communicating with
      # external systems (federation, remote storage, Alertmanager).
      external_labels:
        monitor: 'example'

    # Alertmanager configuration
    alerting:
      alertmanagers:
        - static_configs:
            - targets: ['localhost:9093']

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      # - "first_rules.yml"
      # - "second_rules.yml"

    # A scrape configuration containing exactly one endpoint to scrape:
    # Here it's Prometheus itself.
    scrape_configs:
      # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
      - job_name: 'prometheus'

        # Override the global default and scrape targets from this job every 5 seconds.
        scrape_interval: 5s
        scrape_timeout: 5s

        # metrics_path defaults to '/metrics'
        # scheme defaults to 'http'.

        static_configs:
          - targets: ['localhost:9090']

Then we create the Prometheus container:

    sudo docker run -d -p 9090:9090 --restart unless-stopped --user 1000:1000 --name prometheus \
        -v /sharedfolders/config/prometheus.yml:/etc/prometheus/prometheus.yml \
        -v /sharedfolders/prometheus:/prometheus \
        prom/prometheus --storage.tsdb.retention.time=360d --storage.tsdb.retention.size=30GB \
        --config.file=/etc/prometheus/prometheus.yml --storage.tsdb.path=/prometheus

What you need to change in this command:

- The /sharedfolders/… paths after the -v arguments are my local paths. Change them to where you just created the configuration file prometheus.yml and to where you want the directory for the Prometheus data. You could use a docker volume for storing that data, but personally I prefer a directory. Make sure the directory exists and is owned by your first user.

- --storage.tsdb.retention.time is set to 360 days. Decide for yourself how long you want to keep stats.

- --storage.tsdb.retention.size=30GB limits how big the Prometheus database is allowed to get. Once it grows beyond that, Prometheus starts deleting the oldest entries.

The argument --user 1000:1000 makes the container run as the first user of the PC (e.g. pi on an RPi) instead of root. You can remove it if you prefer to run your containers as root, but I’d recommend running all containers as a user unless they only work as root. All containers in this How-To, including the storagenode, can run as a user. Warning: Don’t switch an existing storagenode container from root to a user! It will mess up your storagenode, because the files it has already written keep their old filesystem permissions.

Don’t change the other arguments in the command.

With -v, missing directories are created on the first start of the container. Docker creates them as root, though, which is why the data directory should already exist and belong to your user as described above. If you chose a location other than the OS drive, you might want to use --mount for the Prometheus storage directory, like you do with the storagenodes. Then a disconnected HDD makes the container fail to start instead of the database being written to your OS drive. With --mount, the directories have to be present before you start the container.
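The directory preparation and the --mount variant could look roughly like the sketch below. The two helper names are made up for this example, the paths in the comments are the example paths from this How-To, and the rest of the docker command is copied unchanged from above.

```shell
# Sketch only: helper names are hypothetical, paths are this How-To's examples.

# One-time setup, run while the target drive is mounted: create the data
# directory and hand it to the user the container runs as.
prepare_prometheus_dir() {
    data_dir="$1"              # e.g. /sharedfolders/prometheus
    owner="${2:-1000:1000}"    # matches --user 1000:1000
    sudo mkdir -p "$data_dir"
    sudo chown "$owner" "$data_dir"
}

# Start Prometheus with --mount instead of -v. Unlike -v, a bind mount given
# with --mount makes docker fail when the source path does not exist.
start_prometheus_with_mount() {
    config_file="$1"           # e.g. /sharedfolders/config/prometheus.yml
    data_dir="$2"              # e.g. /sharedfolders/prometheus
    if [ ! -d "$data_dir" ]; then
        echo "data directory $data_dir is missing (drive not mounted?)" >&2
        return 1
    fi
    sudo docker run -d -p 9090:9090 --restart unless-stopped --user 1000:1000 --name prometheus \
        --mount type=bind,source="$config_file",destination=/etc/prometheus/prometheus.yml \
        --mount type=bind,source="$data_dir",destination=/prometheus \
        prom/prometheus --storage.tsdb.retention.time=360d --storage.tsdb.retention.size=30GB \
        --config.file=/etc/prometheus/prometheus.yml --storage.tsdb.path=/prometheus
}
```

Run prepare_prometheus_dir once with your data path, then start_prometheus_with_mount with your config file and data path. The extra directory check is redundant with --mount, it only gives a clearer error message.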

Now you can check if Prometheus is running by visiting: http://<ip of your PC>:9090
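If you’d rather check from a terminal than a browser, something like this should do. check_prometheus is a made-up helper name; /-/healthy is the health endpoint that Prometheus itself provides, and the port is the one published with -p above.

```shell
# Sketch: ask Prometheus for its health instead of opening the browser.
# check_prometheus is a hypothetical helper name.
check_prometheus() {
    host="$1"            # IP or hostname of the PC running Prometheus
    port="${2:-9090}"    # port published with -p in the docker run command
    if curl -fsS --max-time 5 "http://${host}:${port}/-/healthy" >/dev/null; then
        echo "Prometheus on ${host}:${port} is up"
    else
        echo "Prometheus on ${host}:${port} is not reachable" >&2
        return 1
    fi
}
```

Call it with the IP of your Prometheus PC, for example check_prometheus 192.168.178.10.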

2. Setting up storagenode Prometheus exporters

The Prometheus exporter for storagenodes is made by @greener, and the repository has all the information needed: https://github.com/anclrii/Storj-Exporter

There is also a thread on this forum about the exporter:

In this How-To we run the exporter on the device that runs the storagenode. It’s also possible to run it on the device running Prometheus instead, but I think this is more convenient. We’ll also be using docker to run the exporter, as that is easier.

So in short, do this on every PC that runs a node and run it for every node on that PC (with the required changes described below):

For x86/64 systems run this:

    sudo docker run -d --restart=unless-stopped --link=storagenode --name=storj-exporter \
        -p 9651:9651 -e STORJ_HOST_ADDRESS="storagenode" anclrii/storj-exporter:latest

For arm-devices you need to build the image yourself:

    git clone https://github.com/anclrii/Storj-Exporter
    cd Storj-Exporter
    sudo docker build -t storj-exporter .

    sudo docker run -d --restart=unless-stopped --link=storagenode --name=storj-exporter \
        -p 9651:9651 -e STORJ_HOST_ADDRESS="storagenode" storj-exporter

What do you need to change in this command? (for all architectures)

- --link=storagenode: “storagenode” needs to be the name of the storagenode container you want to link to.

- --name: choose an appropriate name for the container. It doesn’t really matter what, it’s just for you (and needed in the next step).

- -e STORJ_HOST_ADDRESS: “storagenode” needs to be the same container name you used for --link.

- -p 9651:9651: if you run multiple nodes on one device, you need to change the host port for the additional nodes, like -p 9652:9651.
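For several nodes on one device, the changes listed above can be put into a small loop. This is only a sketch: start_exporters and the storj-exporter-<node> naming scheme are my own invention for the example, and EXPORTER_IMAGE is a hypothetical variable for switching to the self-built image on ARM.

```shell
# Sketch: start one exporter per storagenode container on this machine.
# start_exporters is a hypothetical helper; pass the names of your
# storagenode containers as arguments.
start_exporters() {
    # x86-64 image from above; on ARM set EXPORTER_IMAGE=storj-exporter
    image="${EXPORTER_IMAGE:-anclrii/storj-exporter:latest}"
    host_port=9651
    for node in "$@"; do
        sudo docker run -d --restart=unless-stopped --link="$node" \
            --name="storj-exporter-$node" \
            -p "$host_port:9651" -e STORJ_HOST_ADDRESS="$node" "$image"
        # the next node gets the next host port: 9652, 9653, ...
        host_port=$((host_port + 1))
    done
}
```

For example, start_exporters storagenode storagenode2 would publish the first exporter on port 9651 and the second on 9652.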

After starting the container you can check if it works by visiting http://<ip of node>:9651, which will print a lot of information.
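The same check works from a terminal. The sketch below fetches the page and counts the samples in it, which is usually enough to see that the exporter talks to the node. Both helper names are made up, and the only assumption about the output is that it is in the usual Prometheus text format.

```shell
# Sketch: fetch the exporter page and count the metric samples it returns.
# count_metrics and check_exporter are hypothetical helper names.
count_metrics() {
    # Prometheus text format: lines starting with '#' are HELP/TYPE comments,
    # every other non-empty line is one sample.
    grep -v '^#' | grep -c . || true
}

check_exporter() {
    host="$1"            # IP of the device running the exporter
    port="${2:-9651}"    # host port chosen with -p
    samples=$(curl -fsS --max-time 20 "http://${host}:${port}/" | count_metrics)
    echo "exporter on ${host}:${port} returned ${samples} samples"
}
```

A count of 0 means the exporter answered with nothing useful or could not be reached at all.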

If you run netdata on your PC, make sure to follow the netdata section in the storj-exporter repository to disable netdata’s polling of the exporter port. Otherwise the polling will result in a heavy CPU load on your PC.

3. Configuring Prometheus to scrape the storagenode information

Edit your prometheus.yml with your favourite editor and add the following job to the scrape_configs section:

      - job_name: storagenode1
        scrape_interval: 30s
        scrape_timeout: 20s
        metrics_path: /
        static_configs:
          - targets: ["storj-exporter1:9651"]
            labels:
              instance: "node1"

What do you need to change in this section?

- job_name: whatever you like

- scrape_interval: how often data is pulled from your node. 30s is a good value to see bandwidth data.

- targets: change this to match your storj-exporter from the last step. For a local storj-exporter use the container name and port, like ["container_name:port"]. For a storj-exporter running on a different machine, use the IP address of that machine, like ["ip-address:port"]. Note that a container name only works if the Prometheus container can resolve it (for example because both containers share a docker network); if it can’t, use the IP address here as well.

- labels: instance: "node1": choose any label you like. This will be the name of your node everywhere, in the Prometheus data and on the Grafana dashboard, so make sure you can recognize the node from this name.

If you run multiple nodes on multiple devices, you have to add one such job for each node on each device, with the appropriate IP address and the port you defined in the last step.
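With many nodes it is easy to make a typo in one of the jobs, so you could let a small function print them. scrape_job is a hypothetical helper, and the IP addresses, ports and names in the two calls are made-up examples that you need to replace with your own.

```shell
# Sketch: print one scrape job per node so the entries stay consistent.
# scrape_job is a hypothetical helper; arguments are job name, target
# (host:port) and the instance label.
scrape_job() {
    cat <<EOF
  - job_name: $1
    scrape_interval: 30s
    scrape_timeout: 20s
    metrics_path: /
    static_configs:
      - targets: ["$2"]
        labels:
          instance: "$3"
EOF
}

# Example values only, replace them with your own nodes.
scrape_job storagenode1 "192.168.178.20:9651" node1
scrape_job storagenode2 "192.168.178.20:9652" node2
```

The output is already indented to sit directly under scrape_configs in prometheus.yml, so you can paste it there as it is.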

Now restart the Prometheus container so that it uses the changed configuration.
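A broken prometheus.yml keeps the container from starting, so it can be worth checking the file before the restart. A possible sketch: promtool ships inside the prom/prometheus image, so a throwaway container can run the check. The helper name is made up, and the container name prometheus is the one from step 1.

```shell
# Sketch: validate prometheus.yml with promtool, restart only if it is valid.
# check_and_restart_prometheus is a hypothetical helper name.
check_and_restart_prometheus() {
    config_file="$1"     # e.g. /sharedfolders/config/prometheus.yml
    # Throwaway container that mounts the config read-only and only runs promtool.
    if sudo docker run --rm \
        -v "$config_file":/etc/prometheus/prometheus.yml:ro \
        --entrypoint promtool prom/prometheus \
        check config /etc/prometheus/prometheus.yml; then
        sudo docker restart prometheus
    else
        echo "prometheus.yml has errors, not restarting" >&2
        return 1
    fi
}
```

Call it with the path to your own prometheus.yml.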

Then visit http://<ip>:9090/targets, where you can see all your job configurations and whether each exporter endpoint could be reached. Wait a while until all endpoints have been scraped to see if all your configurations work correctly.
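The information on the targets page is also available from the Prometheus HTTP API under /api/v1/targets. The following sketch counts healthy and unhealthy targets. target_health is a made-up name, and picking the health field out of the JSON with grep is a crude shortcut that assumes the compact JSON Prometheus normally returns; use a real JSON tool if you have one installed.

```shell
# Sketch: summarise target health from the Prometheus HTTP API.
# target_health is a hypothetical helper name.
target_health() {
    host="$1"            # IP of the PC running Prometheus
    port="${2:-9090}"
    json=$(curl -fsS --max-time 10 "http://${host}:${port}/api/v1/targets") || return 1
    # Count the occurrences of each health state in the response.
    up=$(printf '%s' "$json" | grep -o '"health":"up"' | wc -l | tr -d ' ')
    down=$(printf '%s' "$json" | grep -o '"health":"down"' | wc -l | tr -d ' ')
    echo "up=${up} down=${down}"
}
```

Anything other than down=0 after a few scrape intervals means one of your jobs needs another look.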

4. Grafana

Install Grafana on the PC you’re running Prometheus on (any other PC works too, but using the same one is advantageous):

    sudo docker run -d \
        -p 3000:3000 \
        --name=grafana \
        --restart=unless-stopped \
        --user=1000 \
        -v /sharedfolders/grafana:/var/lib/grafana \
        -e "GF_INSTALL_PLUGINS=grafana-clock-panel,grafana-simple-json-datasource,yesoreyeram-boomtable-panel 1.3.0" \
        -e GF_PLUGINS_ALLOW_LOADING_UNSIGNED_PLUGINS="yesoreyeram-boomtable-panel" \
        grafana/grafana

What do you need to change in this command?

- --name: can be whatever you like

- --user: this is the same as explained for Prometheus

- -v /sharedfolders/…: change this path to wherever you want Grafana to store your settings. You could use a docker volume, but I prefer a path.

After starting Grafana, visit http://<ip address>:3000

You will then need to log in for the first time. The default user and password are both “admin”. You’ll have to change the password afterwards.

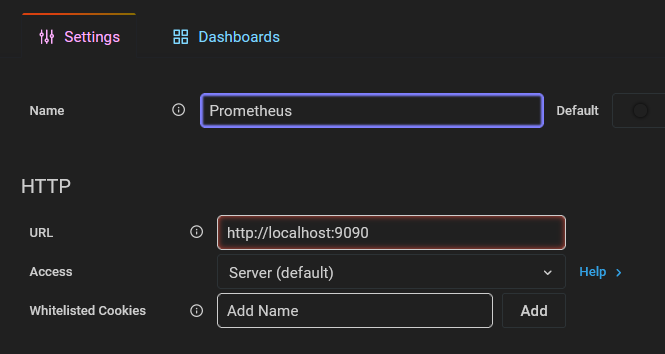

Configure the datasource:

Should be automatically filled like this:

Replace “localhost” with the IP address of the PC that is running Prometheus.

Then click Save & Test.

The overview of the added datasource should then look like mine, where you can see the IP address of my host: http://192.168.178.10:9090
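As an alternative to clicking through the UI, Grafana can also read datasources from a provisioning file at startup. This goes beyond the setup in this How-To, so treat it as an optional sketch: write_datasource_file and the target directory are made up, and you would have to add a -v mapping of that directory to /etc/grafana/provisioning/datasources in the Grafana docker command yourself.

```shell
# Sketch: write a Grafana datasource provisioning file for Prometheus.
# write_datasource_file is a hypothetical helper name.
write_datasource_file() {
    prometheus_url="$1"   # e.g. http://192.168.178.10:9090
    target_dir="$2"       # hypothetical host directory, mounted into Grafana
    mkdir -p "$target_dir"
    cat > "$target_dir/prometheus.yml" <<EOF
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    url: $prometheus_url
    isDefault: true
EOF
}
```

If you are happy with the manual configuration above, you don’t need this at all.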

Now you need a dashboard for your nodes:

Installation instructions:

Copy the contents of Storj-Exporter-Boom-Table.json from https://raw.githubusercontent.com/anclrii/Storj-Exporter-dashboard/master/Storj-Exporter-Boom-Table.json

Then open the import dialog in your Grafana UI ("+" -> Import) and paste it into the JSON field.

Then load and import it, and select your Prometheus datasource at the top-left of the dashboard. No further configuration of the datasource should be needed and it will show all your nodes. It might take a while until there is enough data for some of the graphs.
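If copying the JSON out of the browser is fiddly, you can also download the file and upload it in the import dialog instead of pasting. A small sketch, with fetch_dashboard being a made-up helper name and the URL being the one given above:

```shell
# Sketch: download the dashboard JSON so it can be uploaded in the import dialog.
# fetch_dashboard is a hypothetical helper name.
fetch_dashboard() {
    url="https://raw.githubusercontent.com/anclrii/Storj-Exporter-dashboard/master/Storj-Exporter-Boom-Table.json"
    out_file="${1:-Storj-Exporter-Boom-Table.json}"   # where to save the file
    curl -fsSL -o "$out_file" "$url" || return 1
    # An empty file means the download went wrong somewhere.
    if [ ! -s "$out_file" ]; then
        echo "download produced an empty file" >&2
        return 1
    fi
    echo "saved dashboard to $out_file"
}
```

Run fetch_dashboard without arguments to save the file into the current directory.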

5. Enjoy

Hope this How-To helped you set up monitoring for all your nodes.

Let me know if a step was unclear or where you have problems and we’ll figure it out.